AI

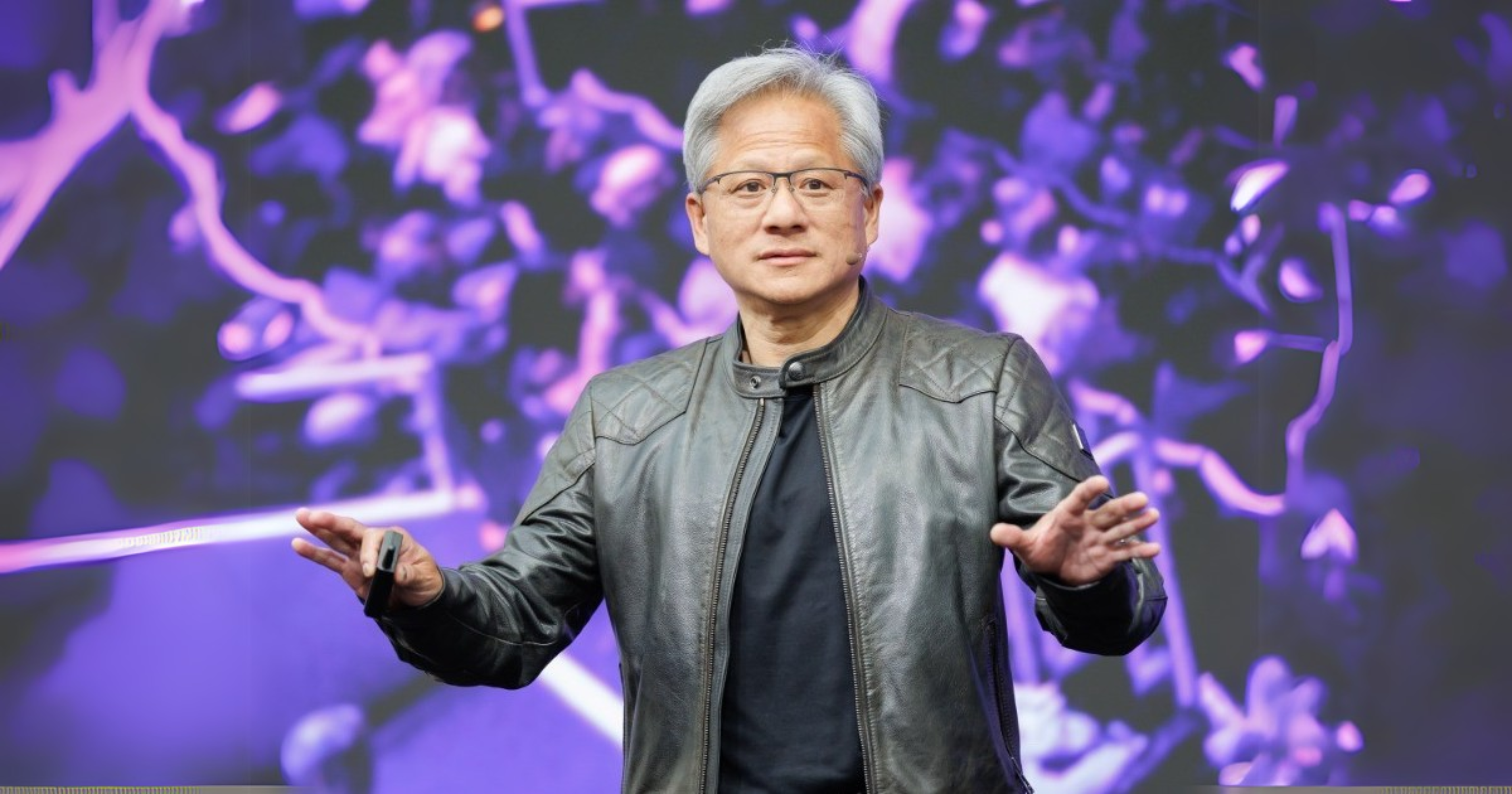

In the years since the pandemic, tech’s top players have held multiple in-person conferences in locations around the world. It seems as though Nvidia Corp. Chief Executive Jensen Huang, clad in his signature black leather jacket, has made a personal appearance on the keynote stage at every one of them.

The “leather-jacket effect” has been one of the tech world’s most important stories over the past three years. There is no question that Nvidia’s influence on the tech world’s artificial intelligence direction has grown significantly, and Huang’s many appearances speak to both his company’s long-term strategic vision and its role in building an ecosystem of partnerships. Nvidia has positioned itself at the forefront of AI innovation and of the conversation around the future direction of technology itself, driving a wave of advancement not seen since the rise of the personal computer and the birth of the internet.

“Nvidia’s momentum is simply remarkable and has caught the attention of everyone in the industry,” said theCUBE Research Chief Analyst Dave Vellante. “The pace of innovation coming out of the AI ecosystem, generally, and Nvidia, specifically, is astounding.”

It would be tempting to characterize Nvidia’s rapid rise to the top of the technology food chain as a case of being in the right place at the right time when AI suddenly became hot. Yet there is more to the story behind the 31-year-old company’s growth. Nvidia has followed a deliberate strategy to build a sophisticated software stack around its processor portfolio, which enabled it to deliver tools and microservices that were tailor-made for the kind of platform that AI applications required.

“Nvidia bet that what they now call accelerated computing would go mainstream and by having the key pieces — very large and fast GPUs, proprietary networking and purpose built software — it would get a jump on the market, which it did,” Vellante said.

A key decision for the company came in 2006, when it introduced Compute Unified Device Architecture, or CUDA. This parallel computing platform gives developers application programming interfaces for running general-purpose workloads on GPUs and supports a wide range of functions, including deep learning.

In 2012, AlexNet, a convolutional neural network, or CNN, designed by a team that included OpenAI co-founder Ilya Sutskever, won the ImageNet challenge using Nvidia’s CUDA architecture. This showed that Nvidia’s technology could move beyond the gaming world where the company had earlier made its name.

“The AlexNet research paper was downloaded more than 100,000 times, putting CUDA on the map,” Tony Baer, principal at dbInsight LLC, explained in an analysis for SiliconANGLE.

Thomas Kurian, CEO of Google Cloud, talks with Jensen Huang, CEO of Nvidia, on the keynote stage at Google Cloud Next in August 2023.

Though AI and deep learning were still viewed as smaller, niche projects within the technology world in 2012, Nvidia’s Huang seized the opportunity. His annual presentations at the firm’s GTC event began to include a vision for how Nvidia would make new GPUs for machine learning and deep neural networks that could supplant the costly and lengthy process driven by central processing units, or CPUs.

“What took 1,000 servers and about $5 million is now possible to do on just three GPU accelerated servers,” Huang said during his GTC keynote in 2014. “The computational density is on a completely different scale. It’s 100 times less energy, 100 times less cost.”

Two years later, Huang would donate his company’s first DGX supercomputer to Elon Musk, who was co-chair at the time of a newly launched venture called OpenAI. Musk had expressed his interest in the device to Nvidia’s CEO while it was under development. By the end of 2022, OpenAI had unveiled ChatGPT to the world.

As Nvidia has grown into an AI-driven colossus, it has also worked actively to cultivate partnerships with a broad range of industry players, including the largest cloud providers in the world.

Amazon Web Services Inc. customers can reserve access to hundreds of Nvidia’s H100 Tensor Core GPUs co-located with Amazon EC2 UltraClusters for machine learning workloads. In March, Google Cloud announced that it had adopted the Nvidia Grace Blackwell computing platform, and that the chipmaker’s H100-powered DGX cloud would now become generally available to Google’s customers.

Huang speaks at AWS re:Invent in Las Vegas in November 2023.

In August of last year, Microsoft announced that it would offer Nvidia’s most powerful GPUs as part of its v5 Virtual Machine series through the Azure cloud, a move viewed by analysts as an extension of the cloud provider’s major investment in OpenAI.

Interest among major cloud players in Nvidia’s GPU platform is being driven by a simple reality: cloud customers want it. At one point in 2023, lead times for delivery of Nvidia’s H100 AI chips were reported to be in the neighborhood of 40 weeks.

For any company in the highly competitive tech world, this is a dream scenario. Nvidia managed its way through the roller-coaster demand of cryptocurrency mining and online gaming over the past 15 years to capitalize on a wave of technology adoption that began in 2023 and has not let up.

“Luck meets vision, skill and courage,” Vellante said. “They were lucky in a way that the crypto boom gave a boost beyond gaming to bridge the gap between the pre- and post-ChatGPT worlds. But they had the skill, expertise and vision to take on a massive challenge and create the market.”

In building a clear lead in the processor market, where it has captured an estimated 80% share of AI chips used in data centers, Nvidia has made itself a prime target for competition.

Advanced Micro Devices Inc. is locked in a battle with Nvidia in the GPU market. In April, AMD launched its Ryzen PRO 8040 series processors for AI PCs that leverage a combination of CPUs, GPUs and on-chip neural processing units for dedicated AI processing power. Nvidia countered with the release of its RTX A400 GPU to accelerate ray tracing and AI workloads. In June, AMD took aim at Nvidia’s data center GPU dominance with its release of the Instinct MI325X processor for generative AI workloads.

Huang speaks at HPE Discover in Las Vegas in June 2024.

Nvidia’s arms race with AMD has put pressure on Intel to keep pace. Intel released its third-generation Gaudi AI chips in April, with the claim they will provide four times faster performance than its previous generation in data processing for AI applications and outperform Nvidia’s H100 graphics card.

Broadcom Inc. is increasingly being viewed as a prime challenger to Nvidia’s dominance. Recent reports have indicated that major AI chip purchasers, such as Microsoft, Meta and Google, are looking to diversify their supply chains. Broadcom, which is developing its own AI chips in collaboration with large tech industry players, could begin to challenge Nvidia’s market strength in 2025.

There is also the potential for a smaller startup to challenge Nvidia’s hold on the data center GPU market. Cerebras Systems Inc., which has reportedly filed for an initial public offering, recently announced advances in material research that enable faster training of large language models at significantly lower cost.

Observers will learn more about how Nvidia intends to respond to the shifting competitive landscape at the company’s annual GTC event, scheduled for mid-March in San Jose, California. Although the company has traditionally followed a one-year cadence plan for releasing its next generation of AI chips, there is growing speculation that it will accelerate this cycle at GTC next spring.

This may involve an unveiling of its GB300 AI server powered by Blackwell GPUs and an earlier-than-expected announcement around its Rubin architecture. Rubin is the Nvidia GPU microarchitecture that Huang first teased during Computex Taipei in June. It uses a new form of high-bandwidth memory and an NVLink 6 Switch, and there are reports Nvidia will accelerate the timetable for Rubin from 2026 into next year.

What challenges Nvidia’s current supremacy may have nothing to do with competition and everything to do with speed and scalability. Bandwidth and latency are hugely important in the processing of AI workloads, and the current debate over InfiniBand versus Ethernet for data center switching technology could have an impact on the GPU giant.

The reason is that Nvidia has maintained a strong focus on InfiniBand, favoring it as a high-throughput, low-latency fabric. Yet some analysts predict that Ethernet adoption for AI training will accelerate over the next several years as the technology improves to allow greater scalability of compute clusters beyond 100 terabits per second. Nvidia’s latest InfiniBand switches deliver an aggregate 51.2 terabits per second, and the firm’s proprietary switching protocols could ultimately place it at a disadvantage as a more open, AI-driven computing world changes around it.

“One area Nvidia is exposed on is that they might be viewed as proprietary and centralized around their technology,” said theCUBE Research’s John Furrier. “The impact of generative AI is changing computing, storage, and networking where a new ‘computing paradigm’ around clustered systems is emerging fast. I expect to see Nvidia get challenged, not on the GPU and software stack side, but on the industry trend toward open, distributed and heterogeneous computing.”

The competitive challenges facing Nvidia have not deterred stock market investors from pushing the company’s valuation to an all-time high. At one point in June, it briefly surpassed Microsoft as the world’s most valuable company, and the Goldman Sachs Group’s trading desk referred to Nvidia this year as “the most important stock on planet Earth.”

In the firm’s most recent earnings report, Nvidia posted a quarterly net income of $19.3 billion, which exceeded the income generated over the first nine months of the previous fiscal year.

“That is unheard-of growth and acceleration for such an established company,” Holger Mueller of Constellation Research Inc. noted in comments for SiliconANGLE.

Although demand for Nvidia’s AI processors has remained strong, its leather-jacketed CEO appears to have no illusions about the competitive world he is in. This has been a critical year for Nvidia to scale and demonstrate its ability to collaborate within the broad technology ecosystem.

“I don’t think people are trying to put me out of business — I probably know they’re trying to, so that’s different,” said Huang, during an interview late last year. “I live in this condition where we’re partly desperate, partly aspirational.”

That combination has allowed Nvidia to build what industry observers refer to as a “moat” in the chip market, where the firm’s strategy has insulated it from invaders seeking to pick off pieces of its business.

In today’s computing era, that moat is characterized by large language models and AI breakthroughs. Nvidia has built itself as a full platform provider, supplying end-to-end solutions for the AI data center market with massive advances in teraflop performance and a promise to deliver even more innovation in the processor arena. It has become the AI Factory, and if anyone wants to cross the moat and lay siege to the castle, they had better start rowing now.

“One of the key aspects of Nvidia’s moat is it builds entire AI systems and then disaggregates and sells them in pieces,” said Vellante. “As such, when it sells AI offerings, be they chips, networking, software and the like, it knows where the bottlenecks are and can assist customers in fine-tuning their architectures. Nvidia’s moat is deep and wide in our view. It has an ecosystem and it is driving innovation hard.”

