AI

Nvidia Corp. today announced new tools to advance the development of physical artificial intelligence models, such as those that power self-driving cars, warehouse robots and humanoid robots.

World foundation models, or WFMs, assist engineers and developers by generating and simulating virtual worlds as well as their physical interactions so that robots can be trained in various scenarios.

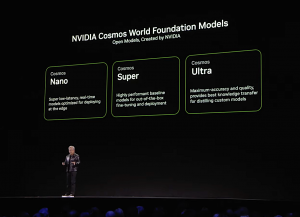

Nvidia announced today at CES 2025 that it's making available the first family of Cosmos WFMs for physics-based simulation and synthetic data generation. Alongside these AI foundation models, the company also provided tokenizers, guardrails and customization tools so that developers can fine-tune the models to suit their needs.

“Physical AI will revolutionize the $50 trillion manufacturing and logistics industries,” said Jensen Huang (pictured, below), co-founder and chief executive of Nvidia. “Everything that moves — from cars and trucks to factories and warehouses — will be robotic and embodied by AI.”

Cosmos is a family of world foundation models trained on more than 9 quadrillion tokens drawn from 20 million hours of real-world data covering human interactions, environments, industrial settings, robotics and driving. This training lets the model family produce a wide variety of simulation data, and the models are optimized for real-time, low-latency inference and can be distilled into custom models.

Developers can use Cosmos to generate entire virtual worlds from text or video prompts. By rapidly generating virtual environments tailored to their own needs, robotics developers and engineers can create and augment synthetic data to test and debug their AI models before deploying them in the real world.

“Today’s AV developers need to drive millions of miles. Even more resource intensive is processing, filtering and labeling the thousands of petabytes of data capture,” said Rev Lebaredian, vice president of Omniverse and simulation at Nvidia. “And physical testing is dangerous. Humanoid developers have a lot to lose when one robot prototype can cost hundreds of thousands of dollars.”

Ultimately, engineers and developers discover that no matter how much real-world data they collect, they still need to augment it with synthetic data to train and fine-tune their AI models for the "last mile," covering edge cases and rare eventualities for rigor and safety.

Cosmos can be readily paired with Nvidia Omniverse, the company's real-time 3D graphics collaboration and simulation platform that allows artists, developers and enterprises to build realistic 3D models and scenes of factories, cities and other spaces with fully realized physics. With this tool, companies can develop digital twins that simulate real-world environments, making it easier to train robots across scenarios than putting their physical counterparts through an actual robot boot camp.

Starting today, developers can preview the first family of Cosmos WFMs in the NGC catalog and on Hugging Face.

Omniverse, Nvidia’s digital twin simulation and collaboration platform, has been expanded with four new blueprints to accelerate industrial and robotics workflows including developing and training physical AI models.

Mega, one of the new blueprints and powered by Omniverse Sensor RTX application programming interfaces, will help robotics and AI engineers develop and test physical AI robot fleets at large scale before deploying them in real-world facilities. Mega gives enterprises a digital twin capability by simulating robot behavior at scale in virtual worlds, using sensor data across complex scenarios.

In warehouses, distribution centers and factories, autonomous mobile robots, robotic arms and humanoids can work alongside people, move through aisles and interact with each other. Mega provides a framework for testing and training software-defined sensor and robot autonomy capabilities across such a virtual environment.

Supply chain solutions company KION Group and consulting firm Accenture Plc partnered with Nvidia to become the first to adopt Mega for optimizing operations in retail, consumer packaged goods and more.

The autonomous vehicle simulation blueprint, also powered by Omniverse Sensor RTX, will allow AV developers to replay driving data, generate new ground-truth data and perform testing to develop better AI models. Nvidia also released a reference workflow blueprint for real-time digital twins for computer-aided engineering, or CAE, built on Nvidia CUDA-X acceleration, physics AI and Omniverse libraries that enable real-time physics visualization.

Isaac GR00T, Nvidia's AI model for humanoid robot learning, gets a blueprint that allows users to put on an Apple Vision Pro and demonstrate tasks. Humanoid robots acquire new skills by observing and mimicking human demonstrations, but building high-quality datasets from those demonstrations requires numerous captures.

The GR00T blueprint alleviates this laborious task by generating a large synthetic dataset from a small number of human demonstrations, simulating the capture process in a digital twin.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.