INFRA

Celestial AI Inc., a startup that develops optical technology for linking chips, has raised $250 million in funding at a $2.5 billion valuation.

The Series-C1 investment comes a year after the company’s previous raise. Celestial disclosed in its announcement of the new funding round today that Fidelity Management & Research was the lead investor. It was joined by more than a half-dozen other backers, including prominent chip industry executive Lip-Bu Tan and AMD Ventures.

Many modern processors comprise not one but multiple chiplets, or compute modules, that are linked together using a technology called an interconnect. This interconnect is a miniature network that moves data between the chiplets to coordinate their work. Data travels between chiplets in the form of electrical signals.

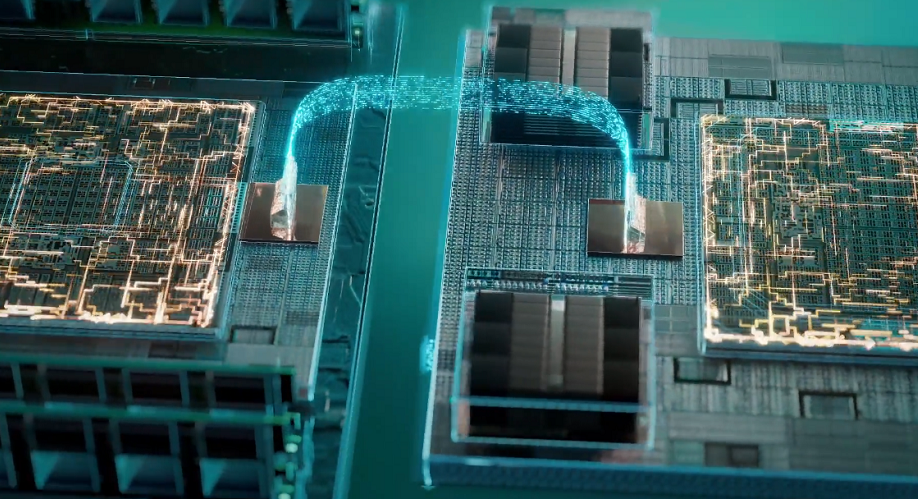

Santa Clara, California-based Celestial AI develops an interconnect that transmits information in the form of light rather than electricity. Photons travel over fiber-optic links faster than electrons over copper, which allows data to move more quickly between a processor’s chiplets. The result is an increase in processing speeds.

Celestial AI’s optical interconnect is known as the Photonic Fabric. According to the company, chipmakers can incorporate the technology into their processors in the form of an interposer. An interposer is a base layer on which a processor’s chiplets can be placed.

The company says Photonic Fabric lends itself particularly well to powering artificial intelligence chips. Such chips often generate more heat than other types of processors, which can cause technical issues in the interposer that functions as their base layer. According to Celestial AI, Photonic Fabric mitigates the challenge because it can operate at “much higher” temperatures than other optical interconnects.

The company is also promising a second benefit: reduced hardware costs.

Large language models store much of their data in a type of high-speed RAM called high-bandwidth memory, or HBM. This memory is usually integrated into graphics cards. As a result, a company that wishes to add more HBM to its AI cluster must buy more graphics cards even if it doesn’t need the extra processing capacity.

According to Celestial AI, Photonic Fabric can be used to add HBM to an AI cluster without adding graphics cards. The interconnect makes it possible to expand an AI processor’s memory pool by connecting it to a remote appliance loaded with HBM. In theory, this approach is more cost-efficient than buying additional graphics cards.

Currently, connecting AI chips to remote HBM appliances is impractical because of range limitations. HBM works effectively only if it’s placed immediately next to an AI processor’s logic circuits. Celestial AI says its optical interconnect removes this range limitation: it can transfer data between chips located more than 160 feet apart. The company is promising die-to-die bandwidth of up to 14.4 terabits per second.
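
To put those two figures in more familiar units, here is a rough back-of-the-envelope sketch in Python. It converts the quoted 14.4 terabits per second into terabytes per second and estimates how long light takes to cross 160 feet. The refractive index of 1.47 is an assumed, typical value for glass fiber, not a figure from Celestial AI, and the function names are purely illustrative.

```python
FEET_TO_METERS = 0.3048
SPEED_OF_LIGHT_M_PER_S = 299_792_458

# Assumption: typical refractive index of silica fiber, not a Celestial AI spec.
ASSUMED_FIBER_INDEX = 1.47


def terabits_to_terabytes_per_second(tbps: float) -> float:
    """Convert a link rate from terabits/s to terabytes/s (8 bits per byte)."""
    return tbps / 8


def one_way_delay_ns(distance_feet: float,
                     refractive_index: float = ASSUMED_FIBER_INDEX) -> float:
    """Estimate one-way propagation delay of light over a link, in nanoseconds."""
    distance_m = distance_feet * FEET_TO_METERS
    speed_in_medium = SPEED_OF_LIGHT_M_PER_S / refractive_index
    return distance_m / speed_in_medium * 1e9


if __name__ == "__main__":
    print(f"{terabits_to_terabytes_per_second(14.4):.1f} TB/s")
    print(f"{one_way_delay_ns(160):.0f} ns one way over 160 feet")
```

Under those assumptions, the quoted bandwidth works out to 1.8 terabytes per second, and the propagation delay over 160 feet is on the order of a few hundred nanoseconds. The estimate covers only the time light spends in transit and ignores any conversion or switching overhead.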

Celestial AI also offers its technology in another form factor: a network switch with a system-in-a-package, or SiP, design. A SiP is a processor that combines multiple integrated circuits in a single package. The switch allows multiple processors to exchange data with one another and function as one large chip.

“With the emergence of complex reasoning models and agentic AI, the requirements on AI infrastructure are compounding,” said Chief Executive David Lazovsky. “Cluster sizes must scale from a few AI processors in a server to tens of processors in a single rack and thousands of processors across multiple racks, all while relying on high-bandwidth, low-latency network connectivity to handle massive data transfers between processors.”

Bloomberg reported that the company will use its latest funding round to commercialize its interconnect technology. It plans to start mass-producing Photonic Fabric in 2027.
