Google LLC is pulling out all the stops to give developers all the tools they need to bring the next era of generative artificial intelligence agents into reality, including access to powerful AI models, development kits and platforms to build and deploy AI agent-driven applications.

Today during the Google Cloud Next 2025 event in Las Vegas, the search giant said the next phase of the agentic AI trend will involve multi-agent systems, where multiple AI agents will work together – even if they are built on different frameworks or operate from different providers.

AI agents are intelligent systems that can act on a user’s behalf using an AI model to reason, plan and execute multistep tasks with minimal or no human interaction. Combined, multiple agents can complete even more complex tasks that cut across business intentions and relationships.
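As a rough illustration of the plan-and-delegate idea, the Python sketch below routes each step of a plan to the agent that has the matching skill. Every name in it (`ToyAgent`, `plan`, `execute`) is hypothetical and invented for this example. The plan is hard-coded where a real agent would use an AI model to reason, and none of this reflects Google's actual tooling.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class ToyAgent:
    """Hypothetical stand-in for an agent: a name, one skill, one handler."""
    name: str
    skill: str
    handler: Callable[[str], str]

    def run(self, task: str) -> str:
        return self.handler(task)


def plan(goal: str) -> List[Tuple[str, str]]:
    # Assumption: a fixed two-step plan stands in for model-driven planning.
    return [
        ("research", f"collect facts about {goal}"),
        ("summarize", f"summarize findings on {goal}"),
    ]


def execute(goal: str, agents: List[ToyAgent]) -> List[str]:
    # Index agents by skill, then hand each planned step to the matching agent.
    by_skill = {agent.skill: agent for agent in agents}
    results = []
    for skill, task in plan(goal):
        agent = by_skill[skill]
        results.append(f"{agent.name}: {agent.run(task)}")
    return results


agents = [
    ToyAgent("researcher", "research", lambda task: f"done ({task})"),
    ToyAgent("writer", "summarize", lambda task: f"done ({task})"),
]
results = execute("pricing", agents)
```

In this sketch, each agent handles only the step that matches its skill, which is the basic division of labor a multi-agent system relies on.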

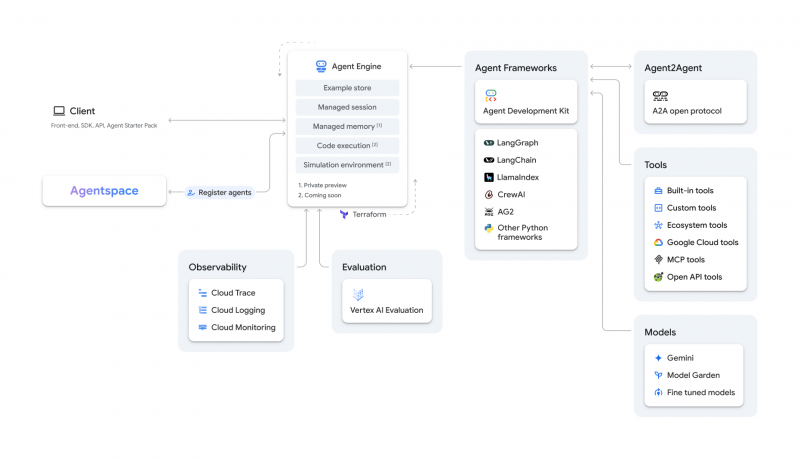

To enable that, Google announced that Vertex AI will receive several tools and enhancements to help developers and AI engineers build agents for the multi-agent future. They include an open-source Agent Development Kit for building agents, an Agent Garden filled with example agents, and an Agent Engine for deploying custom agents into production.

Vertex AI is Google’s fully managed platform for developers to train and deploy AI models and applications. It also contains numerous tools for customization and training of large language models, which are fundamental for powering AI agents. With the addition of these agentic AI tools, developers will be able to build and deploy multi-agent ecosystems.

The Agent Development Kit, or ADK, is an open-source framework designed for multi-agent systems. It aims to simplify AI agent development while giving developers precise control over agent behavior. It’s currently available in the Python programming language, with more languages coming later in the year. According to Google, an AI agent can be built and deployed with fewer than 100 lines of code.

Developers can choose to develop their own agent from scratch or jumpstart development with an example agent chosen from the Agent Garden. The Garden is a collection of ready-to-use sample agent patterns and components, accessible from the ADK and chosen to help developers build their applications quickly.

AI engineers can also choose what model they want to run under the hood, whether Google’s flagship Gemini or any model within the Model Garden. Google said Vertex AI provides access to over 200 models from third-party providers, including Anthropic PBC, Meta Platforms Inc., Mistral AI, AI21 Labs and Qodo.

“Using Agent Development Kit, Revionics is building a multi-agent system to help retailers set prices based on their business logic — such as staying competitive while maintaining margins — and accurately forecasting the impact of price changes,” said Aakriti Bhargava, vice president of product engineering and AI at Revionics.

Revionics used the ADK to streamline the process of building multi-agent transfer and planning for data retrieval and tooling to automate entire pricing workflows, Bhargava added.

Once developers have built agents, they need to deploy them. Agent Engine provides a fully managed runtime for deploying agents in production.

Agent Engine integrates with ADK and with other frameworks such as LangGraph and CrewAI, to make it easy to go from development to deployment. It includes evaluation tools from Vertex AI to improve agent performance or fine-tune underlying models to refine agent behavior based on real-world usage.

Google said in the coming months it intends to expand Agent Engine with advanced tools and testing capabilities. Agents will be able to execute code and use applications like a human user. The company said it also intends to release a simulation environment to allow developers to rigorously test agents to ensure reliability in real-world situations.

To network agents across the enterprise ecosystem, Google introduced the Agent2Agent protocol, which enables agents to communicate with each other irrespective of the framework or vendor they are built on. Using A2A, agents can publish their capabilities and negotiate how they will interact before establishing secure communication to complete tasks.
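The publish-then-negotiate flow described above can be sketched in a few lines of Python. This is a toy model, not the A2A wire format: `CapabilityCard`, `negotiate_format` and `find_partner` are hypothetical names invented for illustration, and the sketch leaves out the secure communication step entirely.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional, Tuple


@dataclass(frozen=True)
class CapabilityCard:
    """Hypothetical record of what an agent advertises about itself."""
    agent_name: str
    skills: FrozenSet[str]
    formats: FrozenSet[str]


def negotiate_format(client_formats: List[str], card: CapabilityCard) -> Optional[str]:
    # Pick the first format the client prefers that the remote agent also supports.
    for fmt in client_formats:
        if fmt in card.formats:
            return fmt
    return None


def find_partner(
    skill: str, client_formats: List[str], registry: List[CapabilityCard]
) -> Optional[Tuple[str, str]]:
    # Scan published cards for the needed skill, then agree on an exchange format.
    for card in registry:
        if skill in card.skills:
            fmt = negotiate_format(client_formats, card)
            if fmt is not None:
                return card.agent_name, fmt
    return None


registry = [
    CapabilityCard("invoice-agent", frozenset({"invoicing"}), frozenset({"text"})),
    CapabilityCard("pricing-agent", frozenset({"pricing"}), frozenset({"text", "json"})),
]
match = find_partner("pricing", ["json", "text"], registry)
```

Here the client needs a pricing skill and prefers JSON, so it settles on the one agent that advertises both. Neither side needs to know which framework the other was built with, only what it publishes.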

To build the A2A open communication standard, Google partnered with over 50 industry leaders including Box, Deloitte, Elastic, Salesforce, ServiceNow, UiPath, UKG and Weights & Biases.

Google Agentspace is a centralized tool for providing enterprise employees with knowledge and expertise powered by generative AI agents that can take actions on their behalf. Launched in December, the product uses Google foundation models, powerful agents and enterprise data to allow employees to find information, uncover actionable insights and execute tasks.

Today, the company said it is expanding Agentspace so that enterprise employees can access it directly from the search box in the Chrome Enterprise browser. Workers can now also discover new agents from the Agent Gallery and create new ones using the no-code Agent Designer.

“Imagine being able to find any piece of information within the organization – whether that’s text, images, websites, audio, and video – with the ease and power of Google-quality search,” said Raj Pai, vice president of product management for cloud AI at Google. “That’s what we’re bringing to enterprises with Google’s AI-powered multimodal search capabilities in Agentspace.”

Agent Gallery is generally available to allowlisted users, who can now choose from agents available across the enterprise, including those from Google and those built by internal teams and partners.

At launch, the Gallery includes two new Google-built expert agents joining the previously available NotebookLM for Enterprise. A Deep Research agent will explore complex topics on behalf of users, similar to the Deep Research capability of the Gemini LLM, and synthesize information from internal and external sources into an easy-to-read report. An Idea Generation agent will brainstorm and develop novel ideas in any area, then help evaluate them to find the best solution “via a competitive system inspired by the scientific method,” the company said.

Agent Designer, now in preview, provides a no-code interface for connecting to enterprise data sources to automate everyday knowledge work tasks. Even users with limited technical expertise will be able to build AI agents personalized for their day-to-day practices and needs.

Beyond expert agents and agent-building tools, Agentspace also supports the Agent2Agent protocol, which will allow its agentic platform to communicate with external agents to complete tasks on the user’s behalf.
