INFRA

EnCharge AI Inc. said today its highly efficient artificial intelligence accelerators for client computing devices are almost ready for prime time after more than eight years in development.

The startup, which has raised more than $144 million in funding from a host of backers that include Tiger Global, Maverick Silicon and Samsung Ventures, has just lifted the lid on its powerful new EnCharge EN100 chip. It features a novel “charge-based memory” that uses about 20 times less energy than traditional graphics processing units.

EnCharge said the EN100 can deliver more than 200 trillion operations per second of computing power within the constraints of client platforms such as laptops and workstations, eliminating the need for AI inference to be run in the cloud.

Until today, the most powerful large language models that drive sophisticated multimodal and AI reasoning workloads have required so much computing power that it was practical to run them only in the cloud. But cloud platforms come at a cost, not just in dollars but also in latency and security, which makes them unsuitable for many types of applications.

EnCharge believes it can change that with the EN100, as it enables on-device inference that’s vastly more powerful than before. The company, which was founded by a team of engineering Ph.D.s and incubated at Princeton University, has created powerful analog in-memory-computing AI chips that dramatically reduce the energy requirements for many AI workloads. Its technology is based on a highly programmable application-specific integrated circuit that features a novel approach to memory management.

The primary innovation is that the EN100 utilizes charge-based memory, which differs from traditional memory design in the way it reads data from the electrical current on a memory plane, as opposed to reading it from individual bit cells. It’s an approach that enables the use of more precise capacitors, as opposed to less precise semiconductors. As a result, it can deliver enormous efficiency gains during data reduction operations that involve matrix multiplication.

In essence, instead of communicating individual bits and bytes, the EN100 chip just communicates the result of its calculations.

“You can do that by adding up the currents of all the bit cells, but that’s noisy and messy,” EnCharge co-founder and Chief Executive Naveen Verma told SiliconANGLE in a 2022 interview. “Or you can do that accumulation using the charge. That lets you move away from semiconductors to very robust and scalable capacitors. That operation can now be done very precisely.”
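To make the distinction Verma describes more concrete, here is a toy Python sketch of an in-memory dot product, where many bit-cell products are accumulated into one analog value that is read out once. It is only an illustration and not EnCharge's design: the function names, the Gaussian error model and both noise levels (`CURRENT_NOISE`, `CHARGE_NOISE`) are assumptions chosen for the example.

```python
import random

# Assumed per-cell error levels, picked only to illustrate the idea.
CURRENT_NOISE = 0.20  # hypothetical: "noisy and messy" current summation
CHARGE_NOISE = 0.01   # hypothetical: more precise capacitor-based accumulation


def exact_dot(weights, activations):
    """Reference digital result: multiply element-wise and sum."""
    return sum(w * a for w, a in zip(weights, activations))


def analog_dot(weights, activations, noise_std, rng):
    """Accumulate every cell's product into one analog value.

    Each cell adds its product plus a small random error (assumed Gaussian),
    and only the accumulated total is read out.
    """
    total = 0.0
    for w, a in zip(weights, activations):
        total += w * a + rng.gauss(0.0, noise_std)
    return total


def mean_abs_error(noise_std, trials=200, n=256, seed=0):
    """Average readout error over random +/-1 weights and 0/1 activations."""
    rng = random.Random(seed)
    err = 0.0
    for _ in range(trials):
        weights = [rng.choice((-1, 1)) for _ in range(n)]
        activations = [rng.choice((0, 1)) for _ in range(n)]
        noisy = analog_dot(weights, activations, noise_std, rng)
        err += abs(noisy - exact_dot(weights, activations))
    return err / trials


current_err = mean_abs_error(CURRENT_NOISE)
charge_err = mean_abs_error(CHARGE_NOISE)
print(f"assumed current-based error: {current_err:.3f}")
print(f"assumed charge-based error:  {charge_err:.3f}")
```

In this model, only one accumulated value per dot product leaves the array, and the lower the per-cell error, the closer that single readout is to the exact result.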

Not only does this mean the EN100 uses vastly less energy than traditional GPUs, but it also makes the chip extremely versatile. It can be highly optimized for efficiency, performance or fidelity.

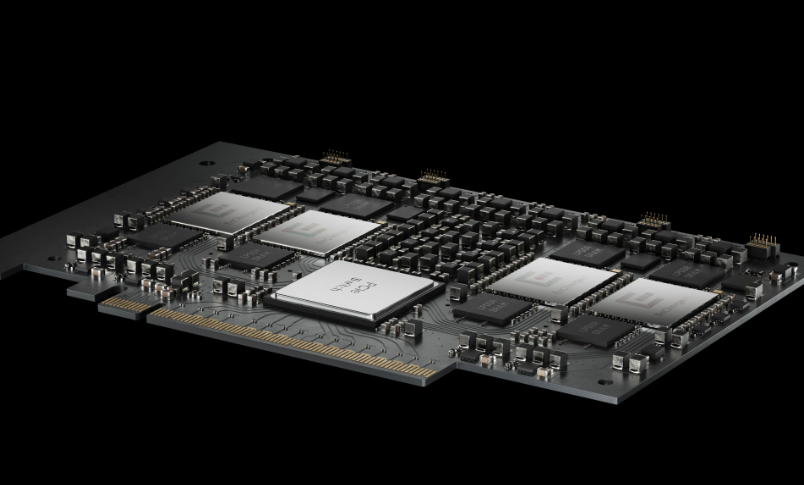

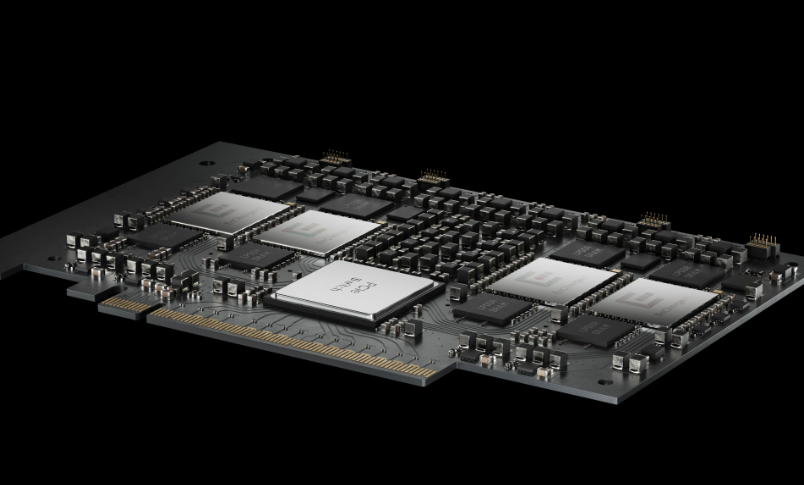

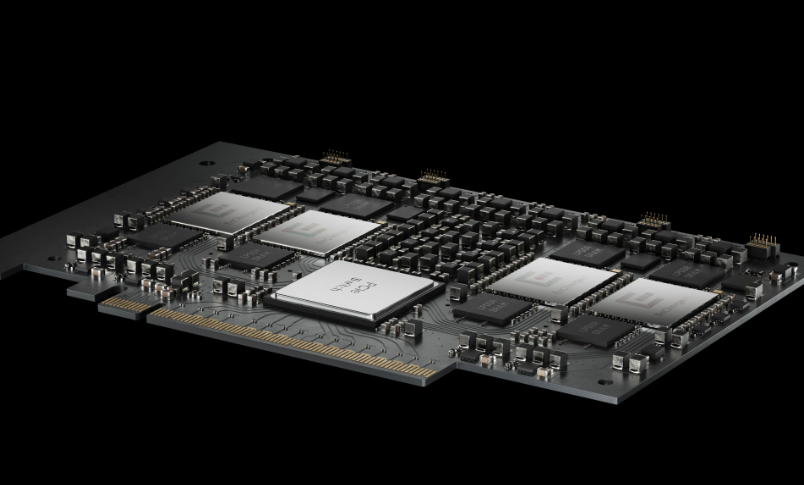

Moreover, the EN100 comes in a tiny form factor, mounted onto cards that can plug into a PCIe interface, so they can easily be hooked up to any laptop or personal computer. They can also be used in tandem with cloud-based GPUs.

EnCharge said the EN100 is available in two flavors. The M.2 version is designed for laptops and offers up to 200 TOPS in an 8.25-watt power envelope, paving the way for high-performance AI applications on battery-powered devices.
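As a rough back-of-the-envelope check, the two figures quoted for the M.2 card can be turned into an efficiency number. The short Python sketch below assumes the 200 TOPS peak and the 8.25-watt envelope apply at the same time, which the announcement does not state; the function names are illustrative.

```python
def tops_per_watt(tops, watts):
    """Peak throughput divided by the power envelope."""
    return tops / watts


def femtojoules_per_op(tops, watts):
    """Energy per operation: watts / (operations per second), in femtojoules."""
    ops_per_second = tops * 1e12
    return watts / ops_per_second * 1e15


# Figures quoted for the EN100 M.2 card ("up to" values).
m2_efficiency = tops_per_watt(200, 8.25)
m2_energy = femtojoules_per_op(200, 8.25)
print(f"{m2_efficiency:.1f} TOPS per watt")
print(f"{m2_energy:.2f} femtojoules per operation")
```

Under that assumption, the card works out to roughly 24 TOPS per watt, or about 41 femtojoules per operation.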

There’s also an EN100 PCIe for workstations, which packs in four neural processing units to deliver up to 1 petaOPS of processing power, matching the capacity of Nvidia Corp.’s most advanced GPUs. According to EnCharge, it’s aimed at “professional AI applications” that leverage more advanced LLMs and large datasets.

The company said both form factors are capable of handling generative AI chatbots, real-time computer vision and image generation tasks that would previously have been viable only in the cloud.

Verma said the launch of EN100 marks a fundamental shift in AI computing architecture. “It means advanced, secure and personalized AI can run locally, without relying on cloud infrastructure,” he said. “We hope this will radically expand what you can do with AI.”

Alongside the chip, EnCharge offers a comprehensive software suite for developers to carry out whatever optimizations they deem necessary, so they can increase its processing power, or focus more on efficiency gains, depending on the application they have in mind.

EnCharge said the first round of EN100’s early-access program is already full, but it’s inviting more developers to sign up for a second round that’s slated to begin soon.
