AI

AI

AI

AI

AI

AI

Artificial intelligence and agentic AI are reshaping what “storage” means in the enterprise, moving it from a static repository to a distributed, high-speed fabric that spans clouds, sites and legacy boundaries — always ready for the next decision.

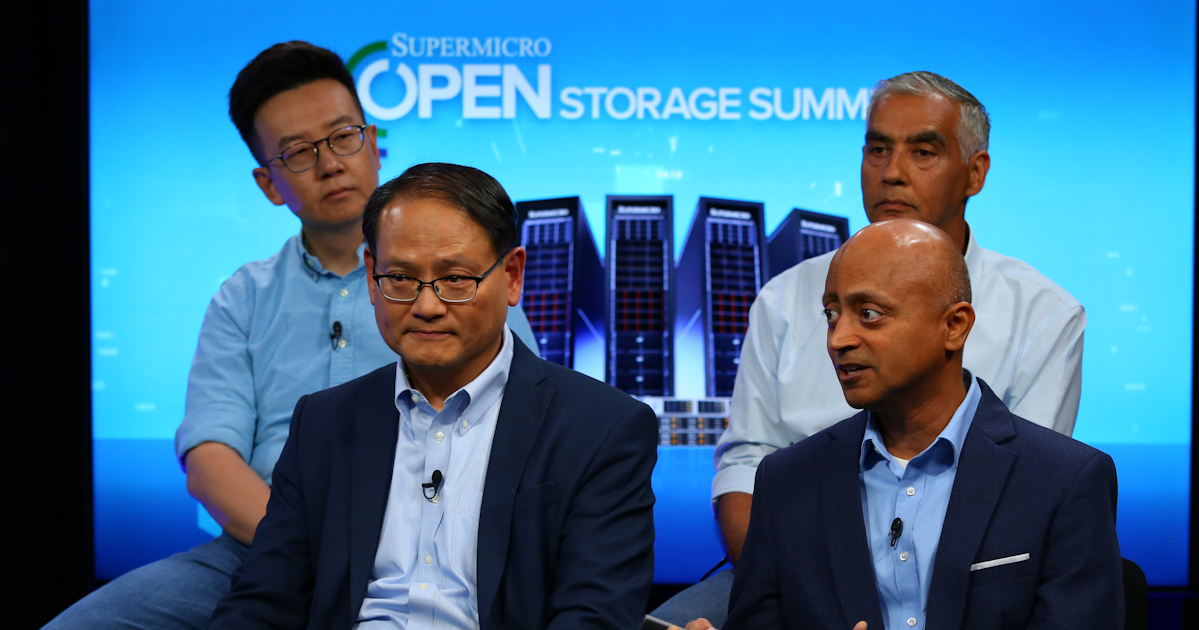

This evolution is driving architectures beyond traditional silos, according to Vince Chen (pictured, bottom row, left), senior director of solutions architecture at Super Micro Computer Inc. Multi-site designs, photonic interconnects and streaming data pipelines are enabling real-time orchestration at massive scale. The result is storage that doesn’t just hold data — it moves, connects and powers the instant responsiveness that next-generation AI systems require.

Experts from Supermicro, DDN, AMD and Western Digital talk with theCUBE about building storage infrastructure capable of powering agentic AI at scale.

“It’s not just a dedicated storage,” Chen said. “You probably need to broaden your view on potentially looking at multi-parts and then how you manage your data across multiple sites.”

Chen spoke with Rob Strechay at the Supermicro Open Storage Summit, during an exclusive broadcast on theCUBE, SiliconANGLE Media’s livestreaming studio. They were joined by Balaji Venkateshwaran (bottom row, right), vice president of AI product management at DataDirect Networks Inc.; Kevin Kang (top row, left), senior manager of product marketing and product management at Advanced Micro Devices, Inc.; Praveen Midha (top row, right), director of segment and technical marketing – data center flash – at Western Digital Corp. The group unpacked the technical and strategic considerations of building storage infrastructure capable of powering agentic AI systems at scale. (* Disclosure below.)

The rise of agentic AI is pushing storage architectures to their limits. These systems must handle massive volumes of high-speed, low-latency data while integrating seamlessly with compute and networking layers. Inference workloads in particular are growing more complex, with evolving architectures requiring both scale and flexibility to keep up, according to Midha. This complexity often comes from the multiple phases required to process and generate responses in real time.

“2025 is really the year of inferencing and agentic AI because we were actually severely under-calling the amount of compute and storage that inference and agentic AI was going to translate into,” Midha said. “If you double click under the hood, what we see is that inference is really a two-stage process. There is a pre-fill and there’s a decode. Pre-fill is really where you take the input tokens, you start building up the context in this intermediate buffer called the [key value] cache, and then on the decode side, you actually start outputting tokens, and then you also take these tokens and build it back into the KV cache to really hold all the context.”

Meeting those requirements means treating more of the dataset as “hot” and ready for retrieval at any time, according to Venkateshwaran. This requires a shift from an iceberg model, where only a small portion was hot and the rest cold, to a world where the entire iceberg is active.

“Now the whole thing is the data where you could extract at any point you do [retrieval-augmented generation] inferencing, you extract data at any point,” Venkateshwaran said. “That’s where agentic AI is, and that means you need to be able to actively manage the entire dataset.”

Each stage of the AI data path — from ingesting and preparing large sequential datasets to enabling real-time, low-latency inference — comes with unique storage and compute demands, according to Kang. AMD offers a full-stack portfolio of CPUs, GPUs, SmartNICs, DPUs and software designed to manage data flows efficiently, move data directly to GPU memory, and minimize latency across AI pipelines.

“Since we’re moving to the agentic AI — so not just the AI giving answers — AI today learns by itself, adapts and takes the actions after that,” Kang said. “So, the system needs to access data fast, use it well and update data in real time.”

No single company can meet all the demands of agentic AI alone. Scalable, end-to-end solutions require close collaboration between hardware providers, software vendors and system integrators, according to Chen. These partnerships ensure that infrastructure components are not only compatible but optimized for the workloads they serve.

“With the architecture, it’s almost like having no limit to scale and support the growth of customer demand,” Chen said. “For customers with different infrastructures and different requirements, we are here to work with partners so we can design, architect and offer the best solutions.”

Minimizing data movement is another key to scalable AI infrastructure. Excess data egress creates latency and bottlenecks that can undermine even the most powerful compute environments, according to Venkateshwaran.

“The other thing is to be able to minimize data movement so that you don’t have to do egress and data because that introduces latency, it introduces delay, performance bottlenecks,” he said. “Infinia has a special architecture that minimizes data movement. And not only that, due to extensive ability to tag, almost unlimited tagging, which is the first time in the industry, to be able to tag all the data so that you can fast find when you need to find it.”

GPUs are expensive, and keeping them fully utilized is essential for delivering a return on investment on AI investments. Purpose-built storage can eliminate bottlenecks and ensure that GPUs receive data quickly enough to work at peak efficiency, according to Venkateshwaran. He pointed to a recent deployment where a targeted storage change delivered dramatic performance gains.

“When we replaced the AWS object store with Infinia Object Storage, no other changes, we saw a tremendous speed up, meaning the improvement in latency, which results in the application speed up of 22,” Venkateshwaran said. “That shows you the power of designing and developing the right storage management and the data intelligence software required to meet the demanding applications such as agentic AI.”

GPU performance is also tied to power efficiency. With GPUs consuming roughly 10 times more power than CPUs, they can dominate both infrastructure budgets and operational expenses, according to Midha.

“I think power is top of mind for everybody today, especially the data center architects,” he said. “GPUs are power hungry; they’re almost 10 times more power hungry than CPUs. If you’re working with a certain budget, a large portion of that is being allocated to GPUs, which means you have less share of wallet on the storage side. Point number two is [that] power rates are actually different across different geographies, and it’s actually becoming the biggest constraint when you’re designing new data centers or maintaining existing ones.”

Here’s the complete video interview, part of SiliconANGLE’s and theCUBE’s coverage of the Supermicro Open Storage Summit:

(* Disclosure: TheCUBE is a paid media partner for the Supermicro Open Storage Summit. Neither Super Micro Computer Inc., the sponsor of theCUBE’s event coverage, nor other sponsors have editorial control over content on theCUBE or SiliconANGLE.)

Support our mission to keep content open and free by engaging with theCUBE community. Join theCUBE’s Alumni Trust Network, where technology leaders connect, share intelligence and create opportunities.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.