SECURITY

Artificial intelligence security lab startup Irregular announced today that it has raised $80 million in new funding to build its defensive systems, testing infrastructure and security tools to help vet and harden next-generation AI models for safe deployment.

Founded in 2023 under its former name, Pattern Labs Inc., Irregular pitches itself as the world’s first frontier AI security lab and is devoted to securing advanced AI systems before they can be misused. Its mission is to test, harden and defend next-generation AI models by running them through adversarial and red-teaming environments, in partnership with the world’s leading AI developers.

Irregular runs controlled simulations of cutting-edge AI models to probe how those models might be exploited. The simulations explore threat scenarios such as antivirus evasion, autonomous offensive behavior, system infiltration, or other misuse vectors, measuring both how the AI could carry out an attack and how resilient it is when under counterattack.

Along with testing, Irregular also offers defensive tools, frameworks and scoring systems that guide how AI systems should be secured in practice.

Irregular partners with leading AI labs and government institutions, embedding its testing work into the lifecycles of major frontier models. The collaboration allows it to “see around corners” — anticipating threats before they materialize in deployed systems and advising on security roadmaps, compliance and deployment policy.

The company is already helping to shape industry standards. Its evaluations are cited in OpenAI’s system cards for GPT-4, o3, o4-mini and GPT-5, and the U.K. government and Anthropic PBC use Irregular’s SOLVE framework, the latter to vet cyber risks in Claude 4. In addition, Google DeepMind researchers recently cited the company in a paper on evaluating the emerging cyberattack capabilities of AI.

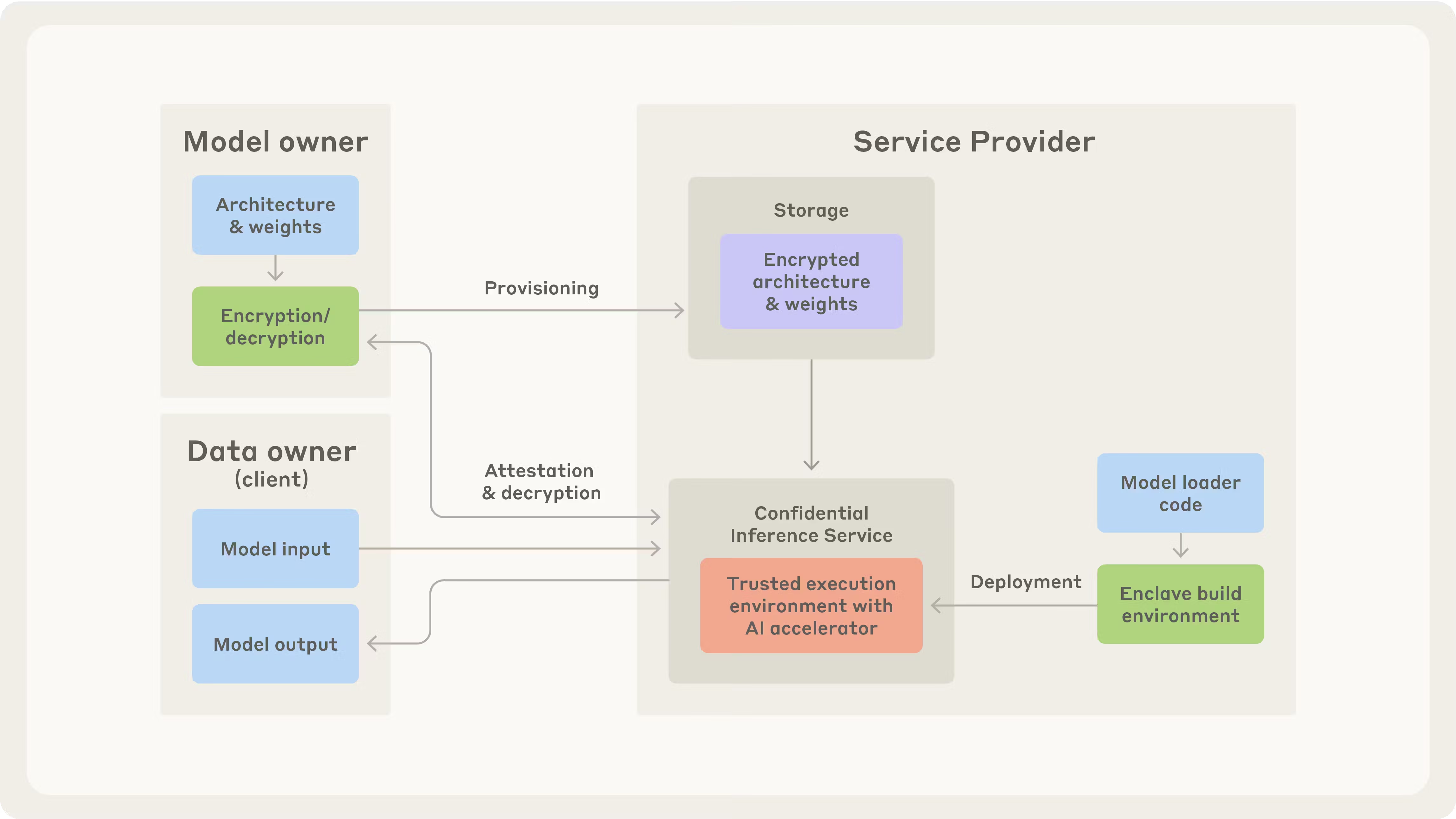

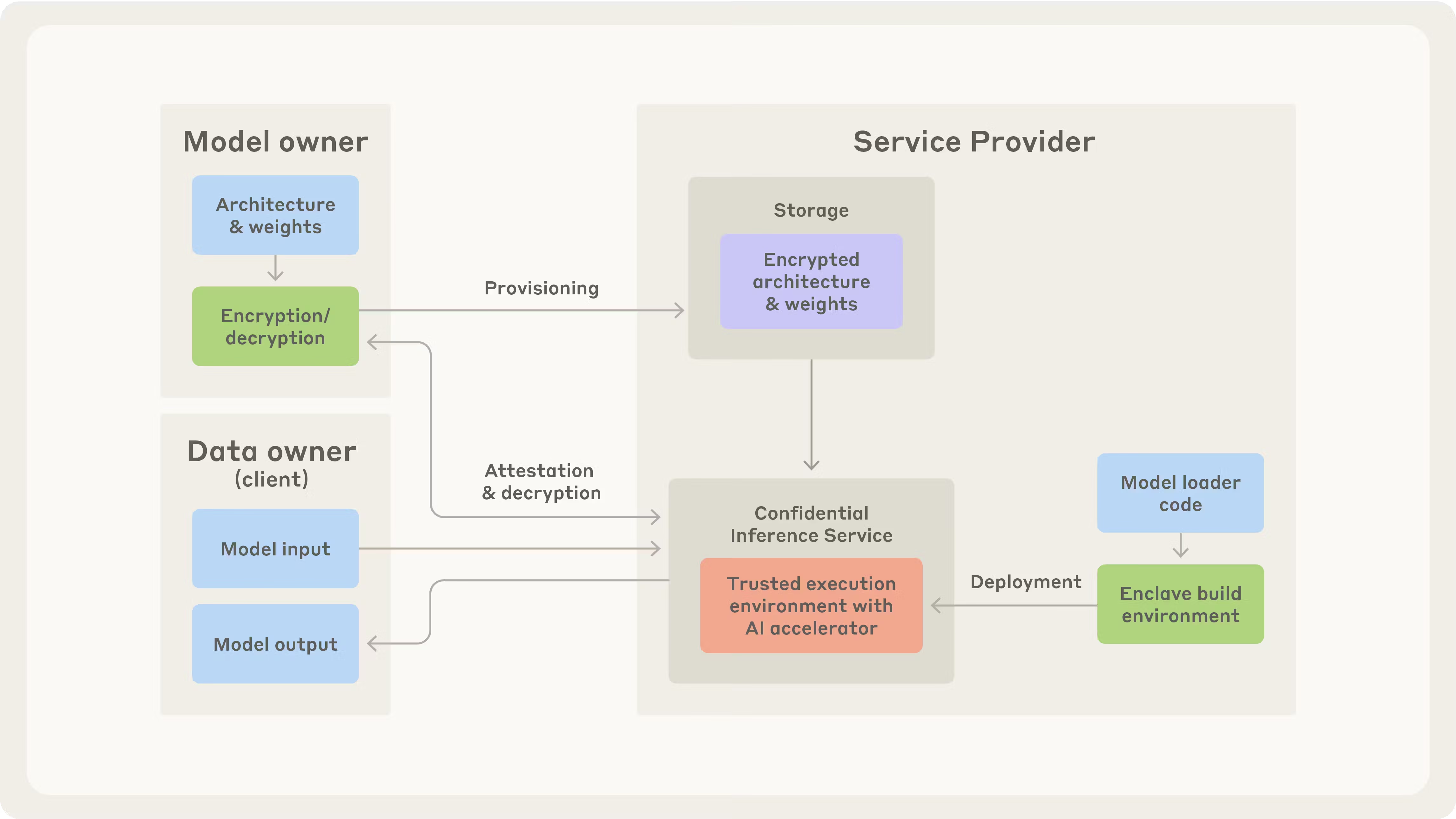

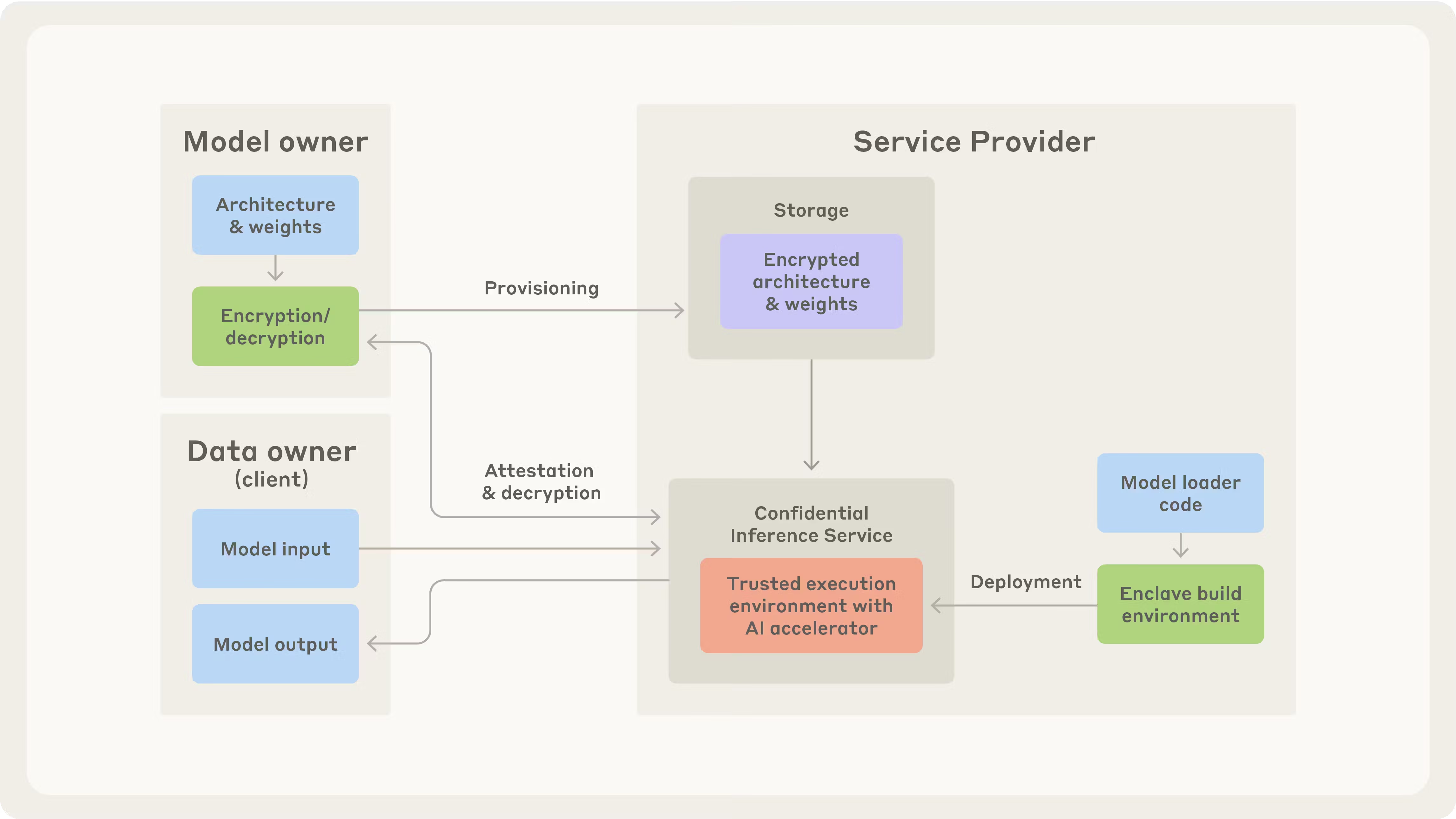

The company co-authored a whitepaper with Anthropic presenting a novel approach to using confidential computing technologies to enhance the security of AI model weights and user data privacy. It also co-authored a seminal paper with RAND Corp. on AI model theft and misuse, helping shape Europe’s policy discussions on AI security and setting a benchmark for the field.

“Irregular has taken on an ambitious mission to make sure the future of AI is secure as it is powerful,” said Dan Lahav, co-founder and chief executive officer of Irregular. “AI capabilities are advancing at breakneck speed; we’re building the tools to test the most advanced systems way before public release and to create the mitigations that will shape how AI is deployed responsibly at scale.”

The funding round was led by Sequoia Capital Operations and Redpoint Ventures LP, with participation from Swish Ventures and notable angel investors including Wiz Inc. CEO Assaf Rappaport and Eon CEO Ofir Ehrlich.

“The real AI security threats haven’t emerged yet,” said Shaun Maguire, a partner at Sequoia Capital. “What stood out about the Irregular team is how far ahead they’re thinking. They’re working with the most advanced models being built today and laying the groundwork for how we’ll need to make AI reliable in the years ahead.”
