INFRA

At this year’s Open Compute Project Summit, Nvidia Corp. took another major step toward redefining the data center as a mega artificial intelligence factory.

The company announced that Meta Platforms Inc. and Oracle Corp. will adopt its Spectrum-X Ethernet networking platform, a purpose-built system designed for AI workloads that connects millions of graphics processing units into one unified fabric.

For Meta and Oracle, this isn’t just a networking upgrade — it’s a bet on a new architecture for AI-scale computing, or AI factories. And for the industry, it marks a clear shift: Ethernet is no longer “good enough” for AI — it’s being reinvented for it.

Unlike traditional Ethernet solutions retrofitted for AI, Nvidia Spectrum-X was engineered from the ground up to handle the communication patterns of large-scale AI workloads — massive all-to-all GPU synchronization, low-latency messaging and congestion-prone flows.

As I’ve discussed on theCUBE in our AI Factory Series, Spectrum-X represents a networking stack purpose-built to accelerate generative AI by removing bottlenecks, maximizing GPU utilization and enabling both intra-data center and giga-scale, cross-data center deployments.

This purpose-built approach is Nvidia’s path to unlocking performance gains at scale. The company claims 1.6 times higher networking performance for AI communication versus conventional Ethernet — a leap that directly translates to less GPU idle time and higher throughput during training and inference.
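Why would a faster network mean less GPU idle time? A deliberately simplified model makes the link concrete. Assume each training step spends some time computing and some time waiting on communication, with no overlap between the two. This is an illustrative assumption, not Nvidia's benchmarking methodology. The 1.6x factor is Nvidia's claim; the 70 ms / 30 ms split below is hypothetical.

```python
def step_time(compute_ms: float, comm_ms: float, comm_speedup: float = 1.0) -> float:
    """Step time when communication is NOT overlapped with compute (simplifying assumption)."""
    return compute_ms + comm_ms / comm_speedup


def gpu_idle_fraction(compute_ms: float, comm_ms: float, comm_speedup: float = 1.0) -> float:
    """Fraction of each step the GPU spends waiting on the network."""
    total = step_time(compute_ms, comm_ms, comm_speedup)
    return (comm_ms / comm_speedup) / total


# Hypothetical step: 70 ms of compute, 30 ms of communication on conventional Ethernet.
compute_ms, comm_ms = 70.0, 30.0
baseline = step_time(compute_ms, comm_ms)
faster = step_time(compute_ms, comm_ms, comm_speedup=1.6)  # Nvidia's claimed 1.6x

print(f"baseline step: {baseline:.2f} ms, idle {gpu_idle_fraction(compute_ms, comm_ms):.1%}")
print(f"1.6x network:  {faster:.2f} ms, idle {gpu_idle_fraction(compute_ms, comm_ms, 1.6):.1%}")
print(f"end-to-end speedup: {baseline / faster:.3f}x")
```

Under these assumptions the step shrinks from 100 ms to 88.75 ms and idle time falls from 30% to about 21%, an end-to-end gain of roughly 1.13x rather than 1.6x. This is Amdahl's law at work: only the communication share gets faster, so the more communication-bound a workload is, the closer the overall gain gets to the network speedup.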

Meta’s integration of Spectrum-X into its Facebook Open Switching System, or FBOSS, and its Minipack3N switch marks a key moment for open networking. It extends Meta’s open hardware and software philosophy into the AI infrastructure layer, where the network now serves as an acceleration backbone tuned for the era of trillion-parameter models.

As Gaya Nagarajan, vice president of networking engineering at Meta, said, “Meta’s next-generation AI infrastructure requires open and efficient networking at a scale the industry has never seen before.”

By merging Spectrum-X Ethernet with FBOSS, Meta is pairing open, programmable control planes with AI-optimized physical infrastructure. The goal is predictable, congestion-free performance that preserves the flexibility of Meta’s disaggregated network model.

Oracle Cloud Infrastructure is taking a complementary approach — scale. Oracle is using Spectrum-X to build giga-scale AI factories powered by the upcoming Nvidia Vera Rubin architecture.

“By adopting Spectrum-X Ethernet, we can interconnect millions of GPUs with breakthrough efficiency,” said Mahesh Thiagarajan, executive vice president of Oracle Cloud Infrastructure.

This signals Oracle’s intent to compete directly with the largest hyperscalers in AI compute, building globally distributed AI factories capable of massive training jobs. Spectrum-X’s scale-across capabilities (Spectrum-XGS) allow Oracle to connect clusters across multiple data centers, even across countries, into one logical AI system. It’s a vision of AI without physical boundaries.

From my perspective, the details of Spectrum-X’s architecture explain why both Meta and Oracle are standardizing on it:

Integrated hardware stack:

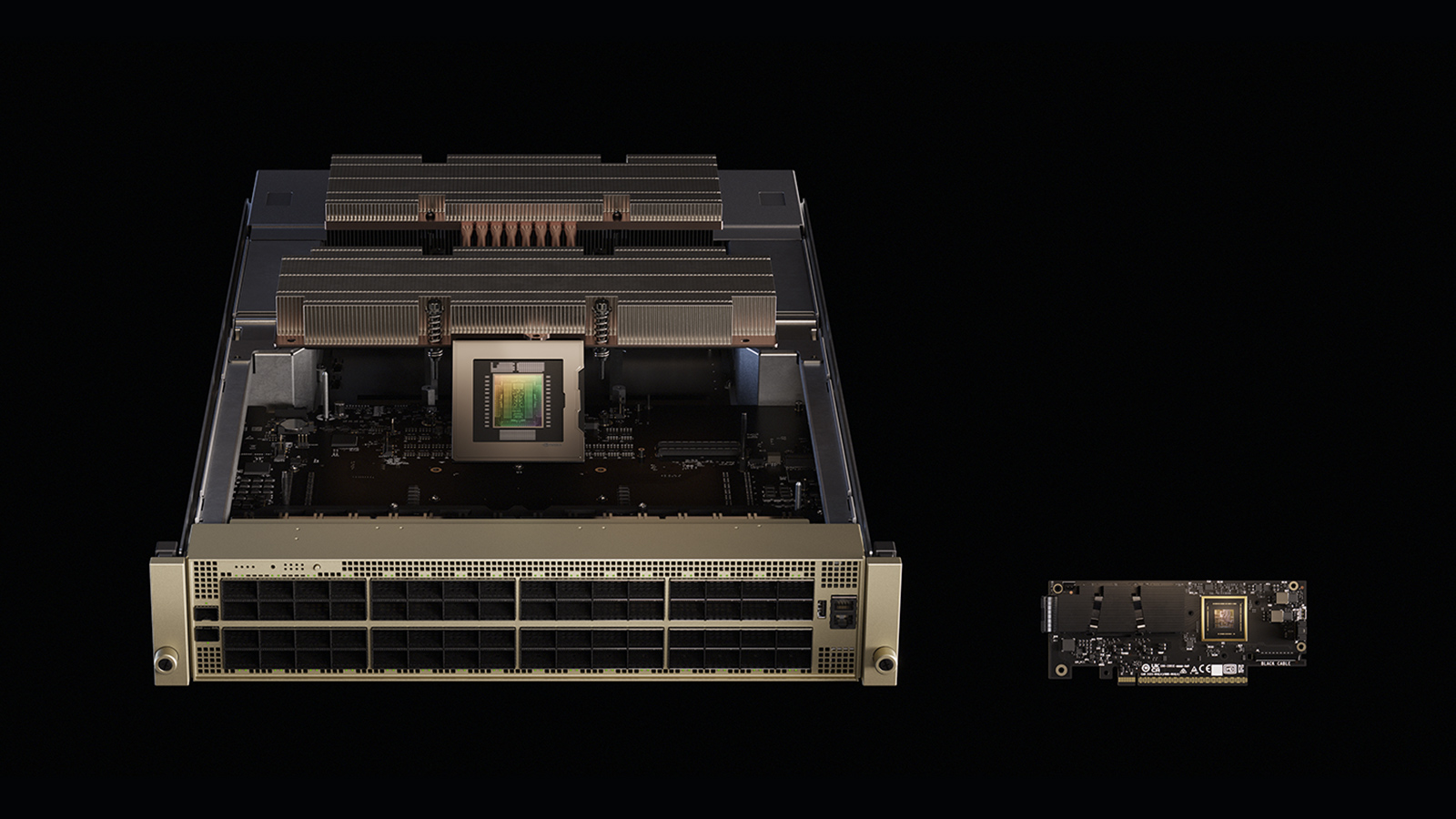

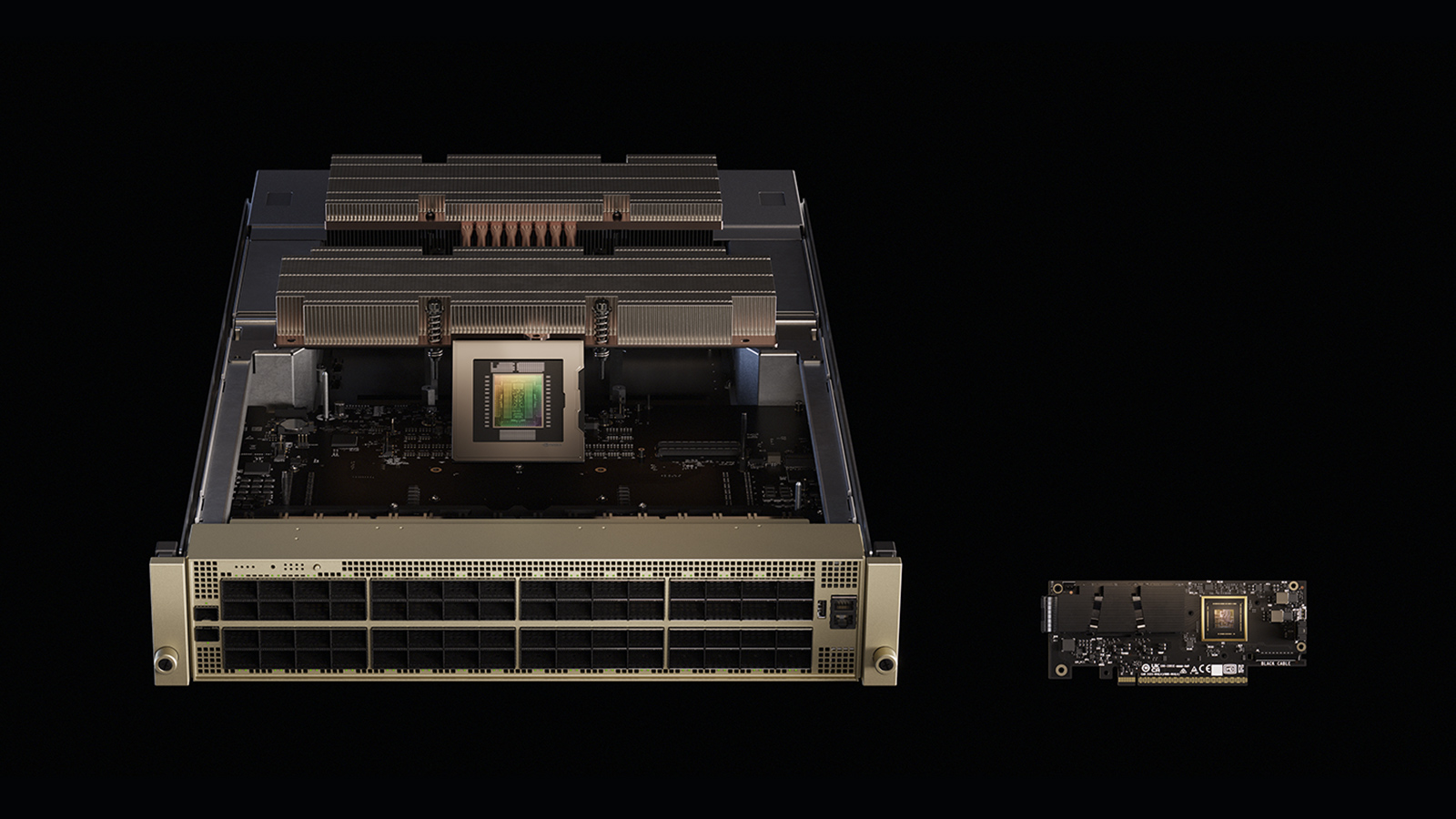

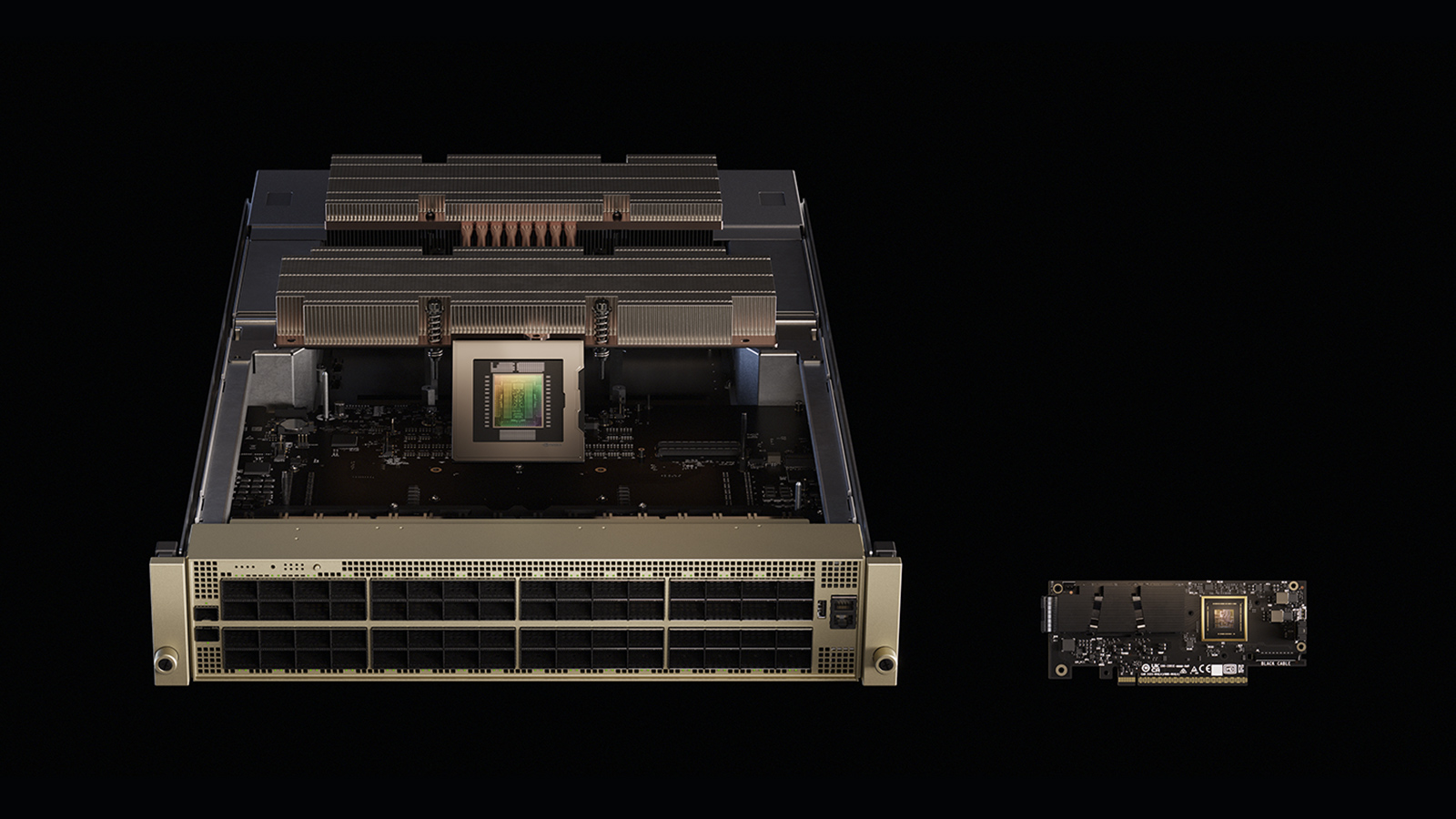

Spectrum-X combines the Spectrum-4 Ethernet switch (offering 51.2 terabits per second of throughput) with BlueField-3 SuperNICs and DPUs, which offload and secure network services so GPUs can focus purely on compute.
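The 51.2 Tb/s figure is aggregate switch capacity; how many GPUs a fabric can reach depends on how that capacity is divided into ports (the switch's radix). The sketch below is plain arithmetic under two assumptions: all ports run at one uniform speed (800 Gb/s or 400 Gb/s, chosen for illustration), and the fabric is a textbook nonblocking two-tier leaf-spine design, not an Nvidia reference architecture.

```python
SWITCH_CAPACITY_GBPS = 51_200  # 51.2 Tb/s aggregate throughput, per the figure above


def port_count(port_speed_gbps: int, capacity_gbps: int = SWITCH_CAPACITY_GBPS) -> int:
    """Number of uniform-speed ports the aggregate capacity can feed."""
    return capacity_gbps // port_speed_gbps


def two_tier_endpoints(radix: int) -> int:
    """Endpoints in a nonblocking two-tier leaf-spine built from identical radix-k switches.

    Each leaf splits its ports evenly: k/2 down to endpoints, k/2 up to spines.
    Each spine has k ports, so it can reach k leaves. Total endpoints = k * (k/2).
    """
    return radix * (radix // 2)


for speed in (800, 400):
    k = port_count(speed)
    print(f"{speed} Gb/s ports: radix {k}, up to {two_tier_endpoints(k)} endpoints in two tiers")
```

In this model, 800 Gb/s ports give a radix of 64 and 2,048 endpoints, while 400 Gb/s ports give a radix of 128 and 8,192 endpoints. Higher radix means more endpoints per tier and fewer switch hops between GPUs. Reaching millions of GPUs still requires more tiers or multiple interconnected fabrics.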

Higher networking performance:

By delivering roughly 1.6 times better effective performance, Spectrum-X reduces GPU idle time — crucial for cost efficiency when training large models.

Advanced telemetry and routing:

End-to-end visibility, adaptive routing and congestion control dynamically adjust packet flows to prevent the dreaded “elephant flow” bottlenecks common in AI workloads.
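Why do elephant flows hurt so much? Conventional Ethernet fabrics commonly spread traffic with static equal-cost multipath (ECMP) hashing, which pins each flow to one uplink based on its header fields. Several large flows can land on the same link while other links sit idle. The toy model below contrasts the collision odds of static hashing with a simple load-aware placement. The flows are hypothetical, and the least-loaded policy is a stand-in chosen for illustration; it does not describe how Spectrum-X implements adaptive routing in hardware.

```python
from itertools import product


def collision_probability(num_elephants: int, num_links: int) -> float:
    """Chance that uniform static hashing puts two or more elephant flows on one link.

    Enumerates every possible hash outcome, which is fine for small inputs.
    """
    outcomes = list(product(range(num_links), repeat=num_elephants))
    distinct = sum(1 for o in outcomes if len(set(o)) == num_elephants)
    return 1 - distinct / len(outcomes)


def least_loaded(rates: list[int], num_links: int) -> list[int]:
    """Load-aware placement: each flow, largest first, goes to the currently least-loaded link."""
    load = [0] * num_links
    for rate in sorted(rates, reverse=True):
        load[load.index(min(load))] += rate
    return load


# Hypothetical traffic: four 100 Gb/s elephant flows and four 1 Gb/s mice over four uplinks.
rates = [100, 100, 100, 100, 1, 1, 1, 1]
print(f"P(elephant collision) with static hashing: {collision_probability(4, 4):.1%}")
print("Load-aware placement per link (Gb/s):", least_loaded(rates, 4))
```

With four elephants hashed onto four links, only 24 of the 256 equally likely outcomes keep them apart, so a collision happens about 90.6% of the time, leaving one link carrying 200 Gb/s or more. Placing flows with knowledge of current load spreads the same traffic to 101 Gb/s per link. Real adaptive routing reacts to live congestion telemetry rather than known flow sizes, which is why the visibility described above matters.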

High-speed RDMA and multitenancy:

BlueField-3 delivers 400 gigabits per second of RDMA over Converged Ethernet (RoCE), speeding GPU-to-GPU communication while supporting secure, multitenant environments.
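To put 400 Gb/s in training terms, one can use the textbook cost of a ring all-reduce, a common way to synchronize gradients. In a ring all-reduce, each of N GPUs sends about 2(N-1)/N times the buffer size. The sketch below is a bandwidth-only lower bound: it ignores latency, protocol overhead and overlap with compute. The 10 GB gradient buffer is a hypothetical figure.

```python
def ring_allreduce_seconds(buffer_bytes: float, num_gpus: int, link_gbps: float) -> float:
    """Bandwidth-only lower bound for a ring all-reduce.

    Each GPU transmits 2 * (N - 1) / N * buffer_bytes; latency and overheads are ignored.
    """
    bytes_sent = 2 * (num_gpus - 1) / num_gpus * buffer_bytes
    link_bytes_per_s = link_gbps * 1e9 / 8  # gigabits per second -> bytes per second
    return bytes_sent / link_bytes_per_s


GRADIENT_BYTES = 10e9  # hypothetical: 10 GB of gradients synchronized per step
for gpus in (8, 1024):
    t = ring_allreduce_seconds(GRADIENT_BYTES, gpus, link_gbps=400)
    print(f"{gpus} GPUs at 400 Gb/s: ~{t:.3f} s per all-reduce")
```

The estimate is 0.35 s for 8 GPUs and about 0.40 s for 1,024. The cost approaches twice the buffer size divided by per-link bandwidth regardless of cluster size. Per-link bandwidth, and keeping every link in the ring uncongested, therefore sets the pace.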

Giga-scale reach (Spectrum-XGS):

Spectrum-XGS extends networking beyond a single data center, enabling multi-site AI super-factories with consistent performance: a true foundation for distributed AI systems.
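Stretching a fabric across sites changes the physics. Light in optical fiber travels at roughly two-thirds of its vacuum speed, about 5 microseconds per kilometer. Round-trip times therefore grow from microseconds inside a building to milliseconds between cities. The bandwidth-delay product (the data in flight that senders and buffers must account for) grows with them. The sketch below is generic networking arithmetic with hypothetical distances and an assumed 800 Gb/s link; it does not describe how Spectrum-XGS handles distance.

```python
FIBER_US_PER_KM = 5.0  # approximate one-way propagation delay in optical fiber


def rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay only; ignores switching and queuing delay."""
    return 2 * distance_km * FIBER_US_PER_KM / 1000


def bdp_megabytes(link_gbps: float, distance_km: float) -> float:
    """Bandwidth-delay product: data that must be in flight to keep the link full."""
    bits_in_flight = link_gbps * 1e9 * rtt_ms(distance_km) / 1000
    return bits_in_flight / 8 / 1e6


for label, km in (("same building", 0.5), ("metro", 80), ("cross-country", 1000)):
    print(f"{label:>14}: RTT {rtt_ms(km):.3f} ms, "
          f"in flight at 800 Gb/s: {bdp_megabytes(800, km):,.1f} MB")
```

At 0.5 km, roughly 0.5 MB is in flight. At 1,000 km, the figure is about a gigabyte. Congestion control tuned for microsecond round trips inside one building cannot simply be stretched across sites, which is one reason multi-site AI fabrics need distance-aware designs.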

Software ecosystem integration:

Spectrum-X is part of Nvidia’s full-stack strategy — integrated with DOCA, Cumulus Linux, Pure SONiC, NetQ, AI Enterprise and AI Workbench for development, deployment and operational visibility.

The result is an end-to-end networking solution for AI that delivers both high performance and operational simplicity. If deployed effectively, it can reduce total cost of ownership while increasing return on investment for expensive GPU assets.

As I’ve argued in theCUBE’s AI factory coverage, networking has quietly become the de facto operating system of the AI era. Today’s AI applications are more data-hungry than ever, and GPUs are vastly more capable, but their potential is realized only when data moves efficiently. Networking is now both the enabler and the bottleneck.

The industry consensus is forming around a new reality: AI’s performance frontier has shifted from compute to connectivity. Networking plays the same integrative role that operating systems once did — orchestrating, scheduling and synchronizing distributed resources across clouds, edges and data centers.

In this new paradigm, the network is the control plane of AI factories — the connective tissue that binds compute, storage and data into one intelligent system. This also explains why inference, not just training, is driving architectural evolution. As Shekar Ayyar and others have pointed out, inference happens across distributed environments that require AI-aware networks capable of understanding latency, congestion and workload locality.

This shift demands adaptive, programmable and observable network fabrics that extend from core data centers to the edge. Networking now underpins observability, security and orchestration for hybrid AI deployments, ensuring that models and agents can operate predictably across environments.

In short, networking is becoming the nervous system of the AI factory — orchestrating computation, enabling data mobility and transforming the data center into a living, distributed organism of intelligence.

What Nvidia, Meta and Oracle are collectively signaling is that we’ve entered the industrial phase of AI infrastructure.

Nvidia Chief Executive Jensen Huang captured it best when he said: “Trillion-parameter models are transforming data centers into giga-scale AI factories.… Spectrum-X is the nervous system of the AI factory.”

Meta’s integration shows how open networking meets AI acceleration, while Oracle’s adoption underscores the rise of mega AI factories as the new hyperscale. Both point to a world where network design becomes the strategic lever for the future of AI — the road to superintelligence determined by performance, cost and energy efficiency.

The adoption of Spectrum-X by Meta and Oracle validates a major architectural inflection point: AI is no longer constrained by compute and energy alone; increasingly, it is constrained by the network.

With Spectrum-X, Nvidia is redefining Ethernet as AI Ethernet — a fully instrumented, GPU-aware, congestion-free data fabric capable of scaling across geographies. It’s the missing piece that turns clusters into connected AI supercomputers and AI factories at mega scale.

This move places Nvidia at the center of a new large-scale computing stack — from silicon to systems to the global AI network. For hyperscalers like Meta and Oracle, the message is clear: AI performance now starts with the network.

As I’ve been saying for over a year: The network is the computer — and networking is the operating system of AI factories.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.