AI

Artificial intelligence tooling startup Lemurian Labs Inc. today announced that it has closed a $28 million funding round.

Pebblebed Ventures and Hexagon jointly led the Series A investment. They were joined by Oval Park Capital, which led a seed round for Lemurian three years ago, and more than a half dozen other backers.

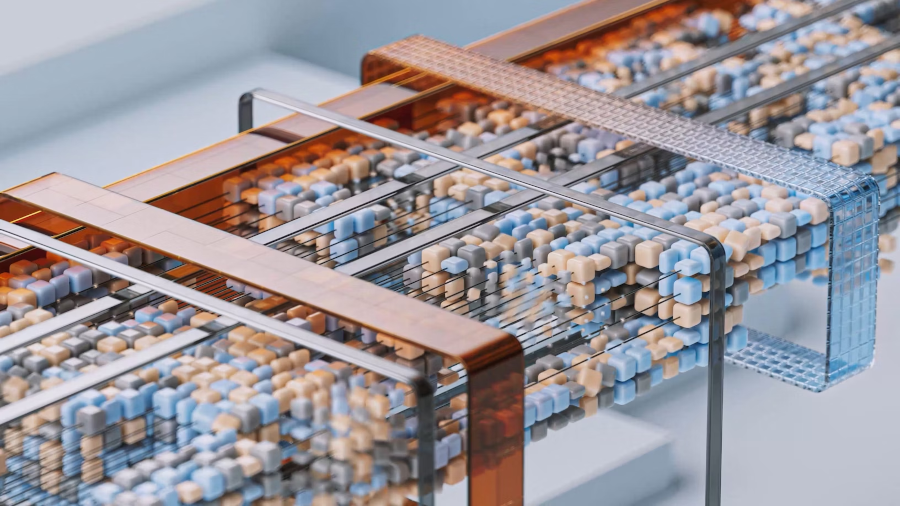

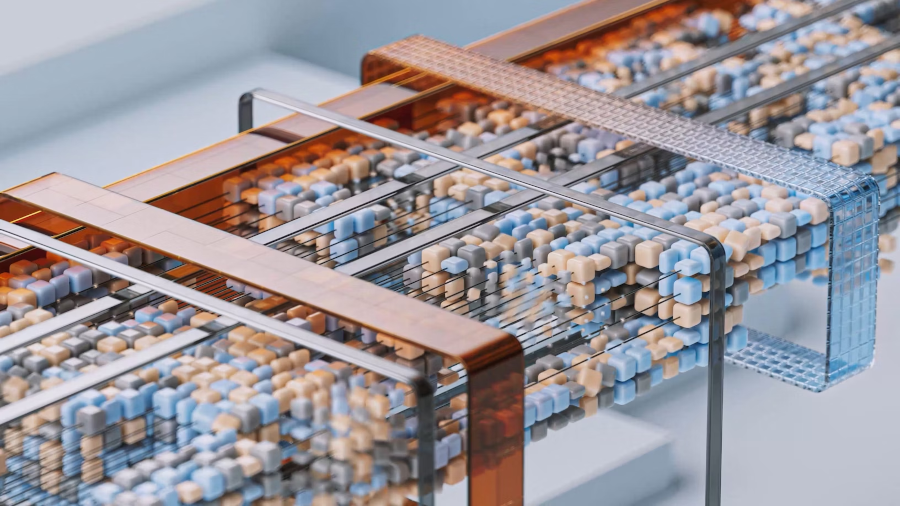

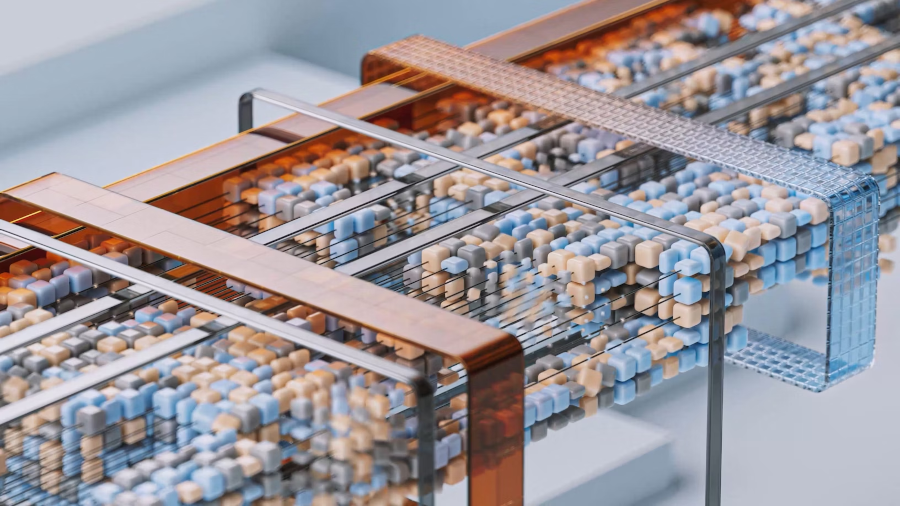

When an AI model runs on a GPU, its calculations are carried out by kernels: code snippets optimized to run in parallel across a large number of graphics processing unit cores. Carrying out calculations in parallel is faster than running them one after another. GPUs have significantly more cores than a central processing unit, which allows them to run more calculations at the same time.
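A kernel is typically written from the point of view of a single thread: it receives an index and computes one piece of the output, and the GPU runs many such threads at once. The minimal Python sketch below only mirrors that programming model. The names `launch` and `vector_add` are hypothetical, and the sequential loop stands in for what real hardware would execute concurrently across its cores.

```python
from typing import Callable, List


def launch(kernel: Callable[..., None], num_threads: int, *args) -> None:
    """Emulate a kernel launch by calling `kernel` once per thread index.

    Hypothetical helper: on a GPU these invocations would run in parallel
    across many cores; this loop runs them one after another.
    """
    for thread_id in range(num_threads):
        kernel(thread_id, *args)


def vector_add(i: int, a: List[float], b: List[float], out: List[float]) -> None:
    # Each "thread" computes exactly one output element, independently of
    # every other thread, which is what makes the work parallelizable.
    out[i] = a[i] + b[i]


a = [1.0, 2.0, 3.0]
b = [10.0, 20.0, 30.0]
out = [0.0] * len(a)
launch(vector_add, len(a), a, b, out)
print(out)  # [11.0, 22.0, 33.0]
```

Because no thread depends on another thread's result, the iterations can run in any order or all at once, which is the property GPU cores exploit.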

Each chipmaker's GPUs implement AI model kernels differently. As a result, an AI model optimized for one chipmaker's graphics cards often can't run on competitors' silicon. Porting kernels across chips takes a significant amount of time and requires highly specialized skills.

Santa Clara, California-based Lemurian is developing a compiler called Tachyon that it says automates the task. The software enables developers to write an AI model once and run it across multiple chipmakers' GPUs without major code changes. The compiler supports chips from Nvidia Corp., Intel Corp. and Advanced Micro Devices Inc.

“Lemurian is reframing the grim choice that AI’s hardware-software interface has forced on users: choosing between vendor-locked vertical stacks or brittle, rewrite-prone portability,” said Pebblebed Ventures founding partner Keith Adams.

Lemurian claims that Tachyon can not only make AI models portable but also boost their performance in the process. Faster models make better use of the underlying hardware, which in turn lowers the associated expenses. The company estimates that its technology can cut inference costs by 60% to 80% in some cases.

One of the ways Tachyon makes AI models more hardware-efficient is by reducing data movement. Graphics cards perform inference by loading data from memory into their cores, processing it and then sending the results back to memory. Shortening that RAM round trip reduces the amount of time GPU cores must wait for data to arrive, which speeds up calculations.
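Whether a kernel's speed is limited by its cores or by memory can be estimated with a simplified roofline-style calculation: compare the time needed to move the data with the time needed to do the arithmetic. The Python sketch below is illustrative only. The function name and the hardware figures (1 TB/s of memory bandwidth, 100 trillion floating-point operations per second) are hypothetical, and the model ignores caches, latency hiding and overlap between transfers and computation.

```python
from typing import Tuple


def estimate_kernel_time(bytes_moved: float, flops: float,
                         bandwidth_bytes_per_s: float,
                         peak_flops_per_s: float) -> Tuple[float, str]:
    """Roofline-style lower bound on kernel run time (hypothetical helper)."""
    memory_time = bytes_moved / bandwidth_bytes_per_s  # time spent moving data
    compute_time = flops / peak_flops_per_s            # time spent calculating
    bound = "memory-bound" if memory_time > compute_time else "compute-bound"
    # The slower of the two resources sets the minimum run time.
    return max(memory_time, compute_time), bound


# Element-wise addition of two 1M-element float32 vectors:
# read 2 inputs and write 1 output, 4 bytes each, with 1 addition per element.
n = 1_000_000
seconds, bound = estimate_kernel_time(
    bytes_moved=3 * 4 * n,
    flops=n,
    bandwidth_bytes_per_s=1e12,   # hypothetical memory bandwidth
    peak_flops_per_s=1e14,        # hypothetical compute throughput
)
print(bound, seconds)
```

In this example the memory transfers take about a thousand times longer than the arithmetic, so the cores spend most of their time waiting for data. Cutting the bytes moved is the only way to speed such a kernel up.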

Tachyon reduces data movement partly by applying a method called operator fusion to customers' AI models. Each computing operation that a GPU performs normally requires it to move data to and from memory. Operator fusion consolidates multiple operations into a single calculation, so the RAM round trip only has to be completed once instead of several times.
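The effect of operator fusion on memory traffic can be shown with a small Python sketch. The `Memory` class is a hypothetical counter standing in for GPU RAM. The unfused version reads and writes the whole buffer once per operation, while the fused version reads the input once, applies every operation while each value is held locally, and writes the result once. This illustrates the general technique and is not Tachyon's implementation.

```python
from dataclasses import dataclass
from typing import Callable, List

Op = Callable[[float], float]


@dataclass
class Memory:
    """Hypothetical model of GPU memory that counts element reads and writes."""
    reads: int = 0
    writes: int = 0

    def load(self, buf: List[float]) -> List[float]:
        self.reads += len(buf)
        return list(buf)

    def store(self, values: List[float]) -> List[float]:
        self.writes += len(values)
        return list(values)


def run_unfused(mem: Memory, x: List[float], ops: List[Op]) -> List[float]:
    buf = x
    for op in ops:
        data = mem.load(buf)                     # each op reads its input from memory...
        buf = mem.store([op(v) for v in data])   # ...and writes its output back
    return buf


def run_fused(mem: Memory, x: List[float], ops: List[Op]) -> List[float]:
    data = mem.load(x)                           # read the input once
    out = []
    for v in data:
        for op in ops:                           # apply every op without touching memory
            v = op(v)
        out.append(v)
    return mem.store(out)                        # write the final result once


ops: List[Op] = [lambda v: v * 2, lambda v: v + 1, lambda v: max(v, 0.0)]
x = [1.0, 2.0, 3.0, 4.0]
m1, m2 = Memory(), Memory()
unfused = run_unfused(m1, x, ops)
fused = run_fused(m2, x, ops)
print(m1.reads, m1.writes)  # 12 12
print(m2.reads, m2.writes)  # 4 4
```

Both versions compute the same result, but with three chained operations the fused version moves one third as much data.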

Tachyon provides a programming language called Tachyon DSL that enables developers to customize how it compiles AI models. The language is based on Python, which has a relatively simple syntax. Once a Tachyon-powered model is deployed in production, a module known as a dynamic runtime makes regular adjustments to optimize performance.

Lemurian plans to launch Tachyon next summer through a beta testing program. The company will use its new funding to hire more engineers and expand its partner network.
