CLOUD

Google LLC today bolstered its public cloud platform with the addition of Tensor Processing Units, an internally designed chip series specifically built to power artificial intelligence workloads.

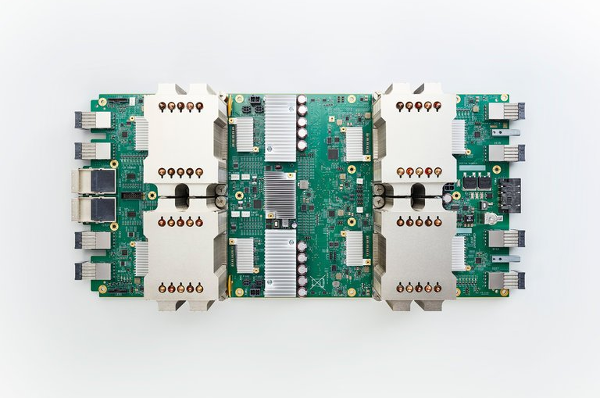

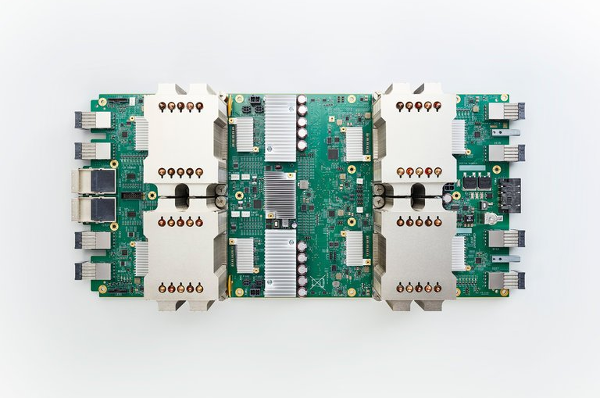

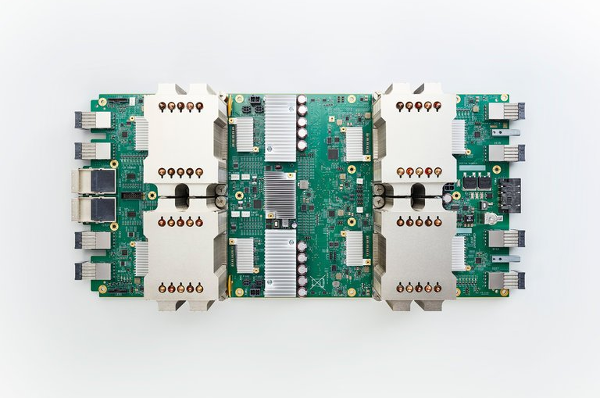

A single TPU (pictured) consists of four application-specific integrated circuits paired with 64 gigabytes of “ultrahigh-bandwidth” memory. The combined unit can provide up to 180 teraflops, or 180 trillion floating-point operations per second, a standard unit of computer performance. Later this year, Google plans to add a clustering option that will let cloud customers combine multiple TPUs into a “pod” with speed in the petaflop range. One petaflop equals 1,000 teraflops.

The company didn’t share any more performance details in today’s announcement. However, a blog post penned by two of Google’s top engineers last year revealed that the pods used internally at the time included 64 TPUs with a combined output of 11.5 petaflops. For comparison, the world’s most powerful supercomputer comes in at 93 petaflops, although it’s worth noting the search giant most likely didn’t use the same benchmarking methods to measure the TPUs’ speed.
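The pod figure is consistent with the per-unit number quoted above. The short Python sketch below redoes the arithmetic, assuming peak throughput scales linearly with the number of TPUs, which real workloads rarely achieve. The function and constant names are illustrative and do not come from Google.

```python
# Figures quoted in the article.
TFLOPS_PER_TPU = 180            # peak teraflops per Cloud TPU
TPUS_PER_POD = 64               # TPUs in the internal pods described last year
TOP_SUPERCOMPUTER_PFLOPS = 93   # supercomputer figure cited for comparison


def pod_petaflops(tpus: int, tflops_per_tpu: float) -> float:
    """Peak pod throughput in petaflops, assuming perfectly linear scaling."""
    return tpus * tflops_per_tpu / 1000  # 1 petaflop = 1,000 teraflops


pod = pod_petaflops(TPUS_PER_POD, TFLOPS_PER_TPU)
share = pod / TOP_SUPERCOMPUTER_PFLOPS

print(f"Pod peak: {pod:.2f} petaflops")
print(f"Share of the supercomputer figure: {share:.1%}")
```

The result, 11.52 petaflops, rounds to the 11.5 petaflops Google reported. The comparison with the supercomputer is only indicative, since the two numbers were most likely measured with different benchmarks.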

Either way, the chips represent a major addition to the Google Cloud Platform. When Google first gave the world a glimpse of the TPU’s specifications last April, it divulged that the chip can run at least certain machine learning workloads 15 to 30 times faster than off-the-shelf silicon. This includes graphics processing units, which lend themselves particularly well to machine learning models. Made mainly by Nvidia Corp. as well as Advanced Micro Devices Inc., they remain the go-to choice for most projects today.

As a result, Google’s cloud customers should gain the ability to train and run their AI software considerably faster. Jeff Dean, leader of the Google Brain team behind machine learning research and development at Google, said in a Twitter thread that a single cloud TPU can be used to bring an implementation of the popular ResNet-50 image classification model up to 75 percent accuracy in 24 hours at a total cost of less than $200.

Google has created several pre-optimized neural network packages that customers may run on TPUs. The lineup includes a version of ResNet-50, as well as models for machine translation, language modeling and identifying objects within images. Companies can also create their own AI workloads using Google’s open-source TensorFlow machine learning engine.

Customers that prefer using conventional graphics cards for their AI projects also received a new feature today. Google has added GPU support to its Kubernetes Engine service to allow for machine learning models to be packaged into software containers. The latter technology provides a lightweight abstraction layer that enables developers to roll out updates and move an application across environments more easily.

The new TPUs are available from $6.50 per unit per hour, while GPUs rented through Kubernetes Engine will be billed at Google’s existing rates for each supported chip model.
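The hourly rate lines up with Jeff Dean’s estimate that a 24-hour ResNet-50 training run costs less than $200. The Python sketch below checks that arithmetic. It assumes a single TPU billed at the $6.50 starting price for the whole run and ignores any other charges, such as storage or the host virtual machine, which the announcement does not break down. The names are illustrative.

```python
# Figures quoted in the article.
TPU_PRICE_PER_HOUR = 6.50   # US dollars per TPU per hour (starting price)
TRAINING_HOURS = 24         # ResNet-50 run cited by Jeff Dean
QUOTED_BUDGET = 200.00      # "less than $200"


def tpu_cost(hours: float, tpus: int = 1,
             price_per_hour: float = TPU_PRICE_PER_HOUR) -> float:
    """TPU rental cost in dollars, assuming flat hourly billing."""
    return hours * tpus * price_per_hour


cost = tpu_cost(TRAINING_HOURS)
max_hours = QUOTED_BUDGET / TPU_PRICE_PER_HOUR

print(f"24-hour run on one TPU: ${cost:.2f}")
print(f"Hours of one TPU that fit within $200: {max_hours:.1f}")
```

At the starting price, the TPU rental comes to $156, which leaves some headroom under the $200 figure for whatever other resources the training job consumes.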

Google isn’t alone in pursuing its own AI chips. Chip giant Intel Corp. has been touting its latest central processing units for AI workloads, as well as custom chips known as field-programmable gate arrays. And today, The Information reported that Amazon.com Inc. is developing its own AI chip. The chip would help Amazon’s Echo smart speakers and other hardware that uses its Alexa digital assistant do more processing on the device, so they can respond more quickly than they could by calling out to the cloud.

With reporting from Robert Hof
