CLOUD

Graphics chips have become the standard for enabling artificial intelligence, as the kind of parallel processing that sped up video games turned out to be ideal for AI too. Now they’re increasingly finding their way into cloud computing, where their processing power can be rented by companies looking to offer image and speech recognition, self-driving cars and more.

In particular, Nvidia Corp., which created and leads the market for graphics processing units, is focusing its chips on carrying out AI tasks at the network edge to enable faster services. That process, called inferencing, refers to the ability of neural networks to infer things from new data they’re presented with in real time, as distinct from the process of training the models in the first place.

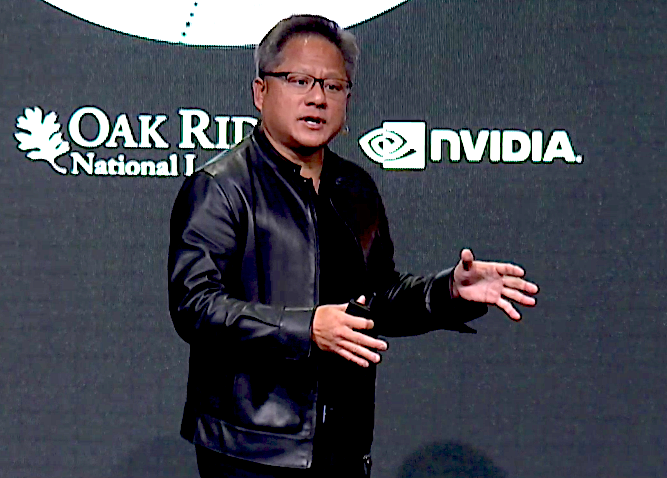

Today, Nvidia Chief Executive Jensen Huang (pictured) announced that the company’s newest cloud GPU, called the T4 (below) and introduced in September, will be available on Google LLC’s cloud. He made the announcement during a keynote at the SC18 supercomputing conference, which runs this week in Dallas.

Google is the first cloud provider to offer access to the T4, but it likely won’t be the last. “It’s quite impressive how quickly it appeared in the cloud,” Ian Buck, vice president and general manager of Nvidia’s Accelerated Computing, said in a briefing. “We’re in the era of the rise of GPU computing.”

Google’s cloud isn’t the only place it’s available. Some 57 server designs from the major computer makers such as Dell EMC, IBM Corp., Lenovo Group Ltd. and Super Micro Computer Inc. also incorporate the chip, which is about the size of a cell phone.

Buck said that because of its small size and relatively low power requirements, the T4 is intended for AI applications that run at the edge of networks. It can also be used for distributed training of AI models and for computer graphics.

Google appears to be focusing especially on inferencing, which will be available on the Google Compute Engine with deep learning virtual machine images. It’s also coming “shortly” via the Google Kubernetes Engine for managing containers, the software that enables applications to be run in multiple computing environments without changes, and via Google’s managed Cloud Machine Learning Engine.

Nvidia has led the way in accelerating AI computing work, using GPUs to make up for the slowing performance gains of the central processing unit chips that are still the foundation of most computers. But thanks to the rapid rise of AI-driven services, it’s increasingly facing competition, both from other chipmakers, such as Advanced Micro Devices Inc., Intel Corp. and Xilinx Inc., and from other kinds of chips, such as field-programmable gate arrays and custom application-specific integrated circuits.

“The slowdown in Moore’s Law is driving the acceleration of heterogeneous data center compute with GPUs, FPGAs and ASICs,” said Patrick Moorhead, president and principal analyst at Moor Insights & Strategy. “The entire space is heating up with companies like AMD, Intel, Nvidia and Xilinx all driving for a big piece of the action.”

Nvidia also talked up its presence in supercomputing today. In the latest semiannual TOP500 list of the world’s fastest supercomputers, also released today, the company said the number of systems using its GPUs rose 48 percent from a year ago, to 127. Moreover, the chips are used in the two top-rated machines, the U.S. Department of Energy’s Summit supercomputer at Oak Ridge National Laboratory and its Sierra machine at Lawrence Livermore National Laboratory.

“This is a breakout year for NVIDIA in the world of supercomputing,” said Huang. “With the end of Moore’s Law, a new HPC market has emerged, fueled by new AI and machine learning workloads.”
