AI

Nvidia Corp. says its solid performance in new benchmark tests released today shows that its supercomputer hardware is one of the most suitable platforms for training artificial intelligence algorithms.

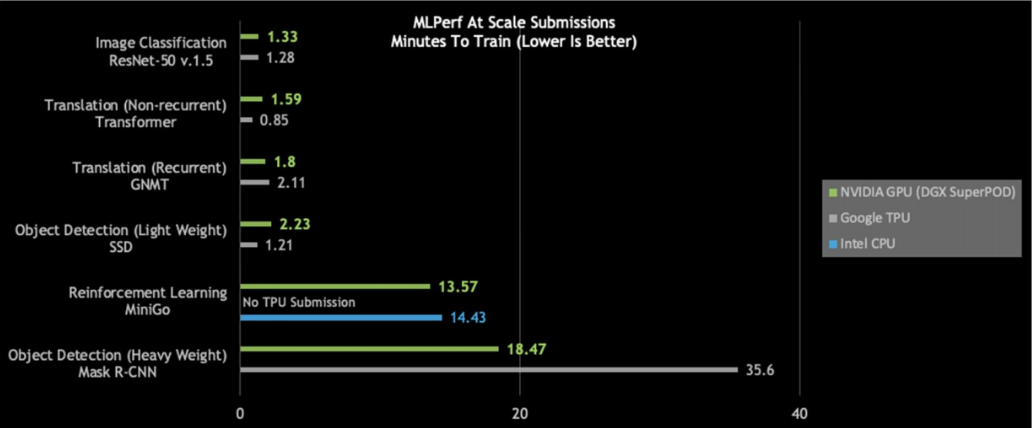

The company’s DGX SuperPOD platform, which is powered by its latest Tesla V100 Tensor Core graphics processing units and runs on its CUDA-X AI software, set new records in each of the six MLPerf categories, completing each task in less than 20 minutes.

MLPerf is an AI training benchmark that’s designed to measure system power, efficiency and performance in six categories: one for image classification, two for object detection, two for translation and one for reinforcement learning, which relates to training robots, smart-city traffic flow systems and so on. More simply put, the MLPerf benchmark measures how fast a system can train machine learning models in those categories.

Nvidia’s DGX SuperPOD system competed alongside the latest AI hardware from Google LLC and Intel Corp. in the MLPerf benchmark tests, and showed that it was easily on a par with its rivals’ platforms.

“We broke eight performance records in this round, three at maximum scale and five per accelerator,” Paresh Kharya, director of product marketing for Accelerated Computing at Nvidia, said in a press briefing.

In fact, Nvidia smashed those records. For example, its DGX SuperPOD system trained the ResNet-50 model for image recognition in just 80 seconds in the latest tests, a task that took about eight hours just two years ago.
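To put the ResNet-50 figures in perspective, the speedup can be worked out directly from the two numbers quoted above. The short Python sketch below is only an illustration: the `speedup` helper and the variable names are hypothetical, and the "about eight hours" figure is an approximation, so the result is a rough ratio rather than an official MLPerf number.

```python
def speedup(old_seconds: float, new_seconds: float) -> float:
    """Return how many times faster the new run is than the old one."""
    if new_seconds <= 0:
        raise ValueError("new_seconds must be positive")
    return old_seconds / new_seconds


# Figures quoted in the article: about eight hours then, 80 seconds now.
old_run = 8 * 60 * 60  # eight hours expressed in seconds (approximate)
new_run = 80           # seconds in the latest tests

resnet_speedup = speedup(old_run, new_run)
print(f"ResNet-50 training speedup: roughly {resnet_speedup:.0f}x")
```

On those figures, the training time fell by a factor of roughly 360.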

Nvidia’s system also made mincemeat of even more difficult algorithms such as heavyweight object detection and reinforcement learning. Heavyweight object detection relies on the Mask R-CNN deep neural network and enables devices such as cameras, sensors, lidar and ultrasound machines to precisely identify and locate specific objects by combining multiple data sources.

Training the Mask R-CNN deep neural network used to take hundreds of hours. This time, Nvidia was able to complete the task in just under 19 minutes, almost twice as fast as other new AI systems.

As for the MiniGo reinforcement learning model, which is used to train robots on factory floors, for example, Nvidia’s platform zipped through this task in just 13.57 minutes.

All told, Nvidia’s DGX SuperPOD platform proved to be the fastest in three of the six MLPerf categories, and was only edged out by Google’s Tensor Processing Units in the SSD category and the two translation tests.

“Since the number of nodes varies considerably between Google and Nvidia, it is hard to tell who has the fastest chip,” said Karl Freund, a high-performance computing and deep learning analyst at Moor Insights & Strategy. “But Nvidia won three of the large scale benchmarks, as did Google. Moreover, Nvidia was able to realize significant speedups of around 80% using the same v100 chipsets last time, which is a tribute to its software prowess. That makes it a tough target to chase if you are a hopeful startup.”
