CLOUD

Google LLC today announced the general availability of a new family of Compute Engine A2 virtual machines based on Nvidia Corp.’s Ampere A100 Tensor Core graphics processing units.

The new VMs are the first in the world to pack 16 of Nvidia’s A100 GPUs into a single VM, making them the largest single-node GPU instance available from any public cloud infrastructure provider, Google said.

The instances are aimed at some of the most demanding enterprise workloads. Google added that they will let enterprises scale up and scale out machine learning and high-performance computing jobs more efficiently and at a lower cost.

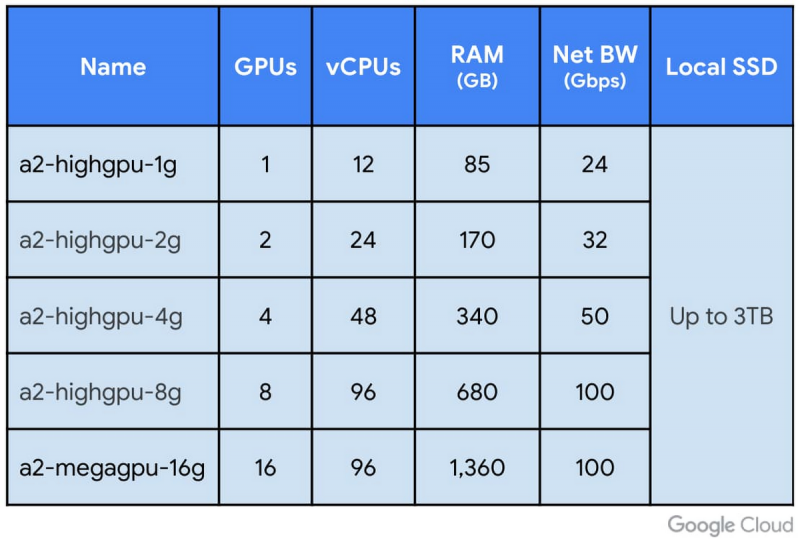

In a blog post, Google product managers Bharath Parthasarathy and Chris Kleban said customers can also choose smaller A2 configurations, with one, two, four or eight GPUs per VM, for greater flexibility.
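As a rough illustration of how those configurations might be chosen in practice, the Python sketch below picks the smallest A2 shape that covers a requested number of GPUs. The GPU counts come from the announcement; the function name `smallest_a2_shape` and the selection rule are assumptions made for illustration, not part of any Google tooling.

```python
# GPU counts per A2 VM named in the announcement (1, 2, 4, 8 and 16).
A2_GPU_SHAPES = (1, 2, 4, 8, 16)


def smallest_a2_shape(gpus_needed: int) -> int:
    """Return the smallest A2 GPU count that covers gpus_needed.

    Hypothetical helper: the name and the "smallest fit" rule are
    illustrative assumptions, not a Google Cloud API.
    """
    if gpus_needed < 1:
        raise ValueError("gpus_needed must be at least 1")
    for shape in A2_GPU_SHAPES:
        if shape >= gpus_needed:
            return shape
    # Anything beyond 16 GPUs no longer fits in a single A2 VM.
    raise ValueError("more than 16 GPUs requires multiple VMs")


if __name__ == "__main__":
    for n in (1, 3, 6, 12):
        print(f"{n} GPU(s) requested -> {smallest_a2_shape(n)}-GPU shape")
```

A job that needs six GPUs, for example, would land on the eight-GPU shape under this rule; whether rounding up is cheaper than splitting the job is a separate pricing question.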

“A single A2 VM supports up to 16 NVIDIA A100 GPUs, making it easy for researchers, data scientists, and developers to achieve dramatically better performance for their scalable CUDA compute workloads such as machine learning training, inference and HPC,” Parthasarathy and Kleban said. “The A2 VM family on Google Cloud Platform is designed to meet today’s most demanding HPC applications, such as CFD simulations with Altair ultraFluidX.”

Google also boasted that it can provide customers with “ultra-large GPU clusters” of thousands of GPUs for the most demanding distributed ML training jobs. It said the GPUs in each A2 VM are tied together by Nvidia’s NVLink fabric, making the instances unique among VM offerings from any cloud provider.

“Thus, if you need to scale up large and demanding workloads, you can start with one A100 GPU and go all the way up to 16 GPUs without having to configure multiple VMs for a single-node ML training,” Parthasarathy and Kleban said.

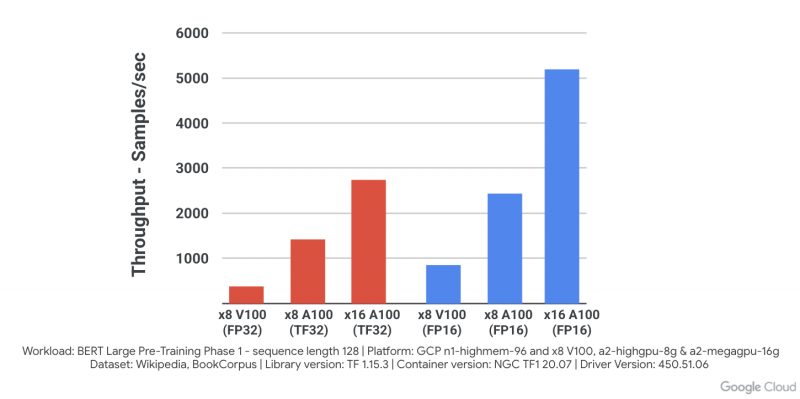

Google is backing its performance claims with numbers. It said a single A100 on Google Cloud delivers a more than tenfold performance boost on BERT Large pre-training compared with the previous-generation Nvidia V100 GPU, while also achieving linear scaling from the eight-GPU shape to the 16-GPU shape.
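For readers who want to check a linear-scaling claim against their own measurements, the usual yardstick is scaling efficiency: measured throughput divided by the throughput ideal linear scaling would give. The Python sketch below shows that arithmetic. The function name and the throughput figures in the usage example are hypothetical placeholders, not results published by Google or Nvidia.

```python
def scaling_efficiency(
    base_gpus: int,
    base_throughput: float,
    scaled_gpus: int,
    scaled_throughput: float,
) -> float:
    """Return measured throughput as a fraction of ideal linear scaling.

    A value of 1.0 means perfectly linear scaling; lower values mean
    some throughput was lost when adding GPUs.
    """
    if base_gpus < 1 or scaled_gpus < 1:
        raise ValueError("GPU counts must be at least 1")
    if base_throughput <= 0:
        raise ValueError("base_throughput must be positive")
    # Ideal linear scaling multiplies throughput by the GPU ratio.
    ideal_throughput = base_throughput * scaled_gpus / base_gpus
    return scaled_throughput / ideal_throughput


if __name__ == "__main__":
    # Hypothetical numbers for illustration only (samples per second).
    eff = scaling_efficiency(8, 100.0, 16, 200.0)
    print(f"8 -> 16 GPUs scaling efficiency: {eff:.2f}")
```

Doubling the GPU count from eight to 16 while exactly doubling throughput gives an efficiency of 1.0, which is what "linear scaling" means in this context.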

Google also lined up several early-access customers to vouch for the performance of the A2 instances. They include the artificial intelligence research firm Dessa, owned by Square Inc., which is focused on creating smarter and more personalized financial services and tools.

“We recognized that Compute Engine A2 VMs, powered by the NVIDIA A100 Tensor Core GPUs, could dramatically reduce processing times and allow us to experiment much faster,” said Dessa’s senior software engineer Kyle De Freitas. “Running NVIDIA A100 GPUs on Google Cloud’s AI Platform gives us the foundation we need to continue innovating and turning ideas into impactful realities for our customers.”

Meanwhile, a nonprofit group called the Allen Institute for Artificial Intelligence said it was able to speed up its AI research by four times using the A2 VMs. “It’s pretty much plug and play,” said the Allen Institute’s senior engineer Dirk Groeneveld. “At the end of the day, the A100 on Google Cloud gives us the ability to do drastically more calculations per dollar, which means we can do more experiments, and make use of more data.”

“This is a good accomplishment and I am sure that AWS and Azure won’t be too far behind,” said analyst Patrick Moorhead of Moor Insights & Strategy. “Google has been doing a fine job with analytic and machine learning workloads, so this is a nice feather in the cap.”

Google said the A2 instances powered by Nvidia’s A100 GPUs are available now in its us-central1, asia-southeast1 and europe-west4 regions, with more regions to come online later this year. The A2 VMs can be purchased on demand, as preemptible instances or with committed use discounts, with prices starting at 87 cents per hour. In addition to Compute Engine, they are fully supported by Google Kubernetes Engine, Cloud AI Platform and other services.
