INFRA

Nvidia Corp. is turbocharging its Nvidia HGX artificial intelligence supercomputing platform with some major enhancements to its compute, networking and storage performance.

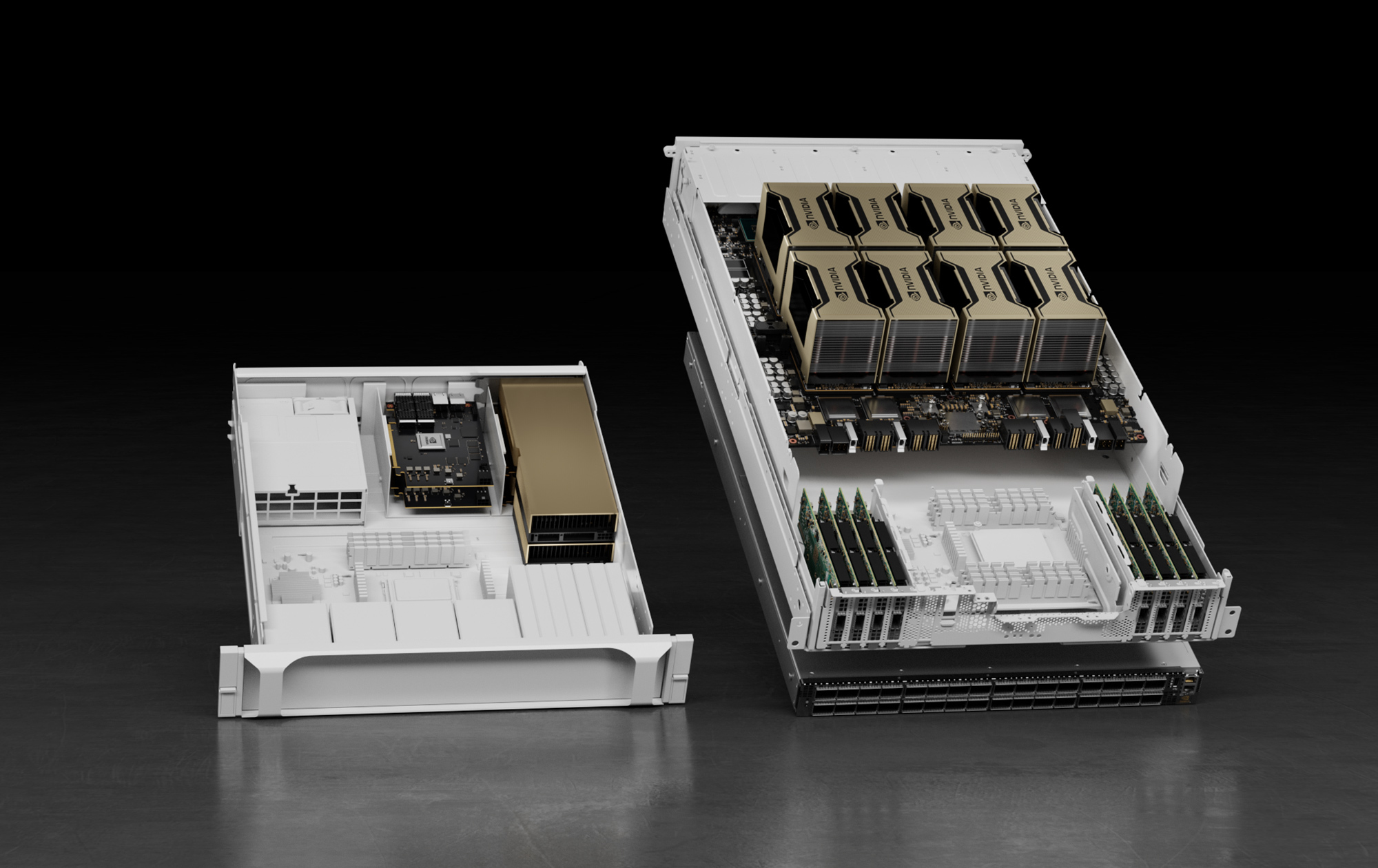

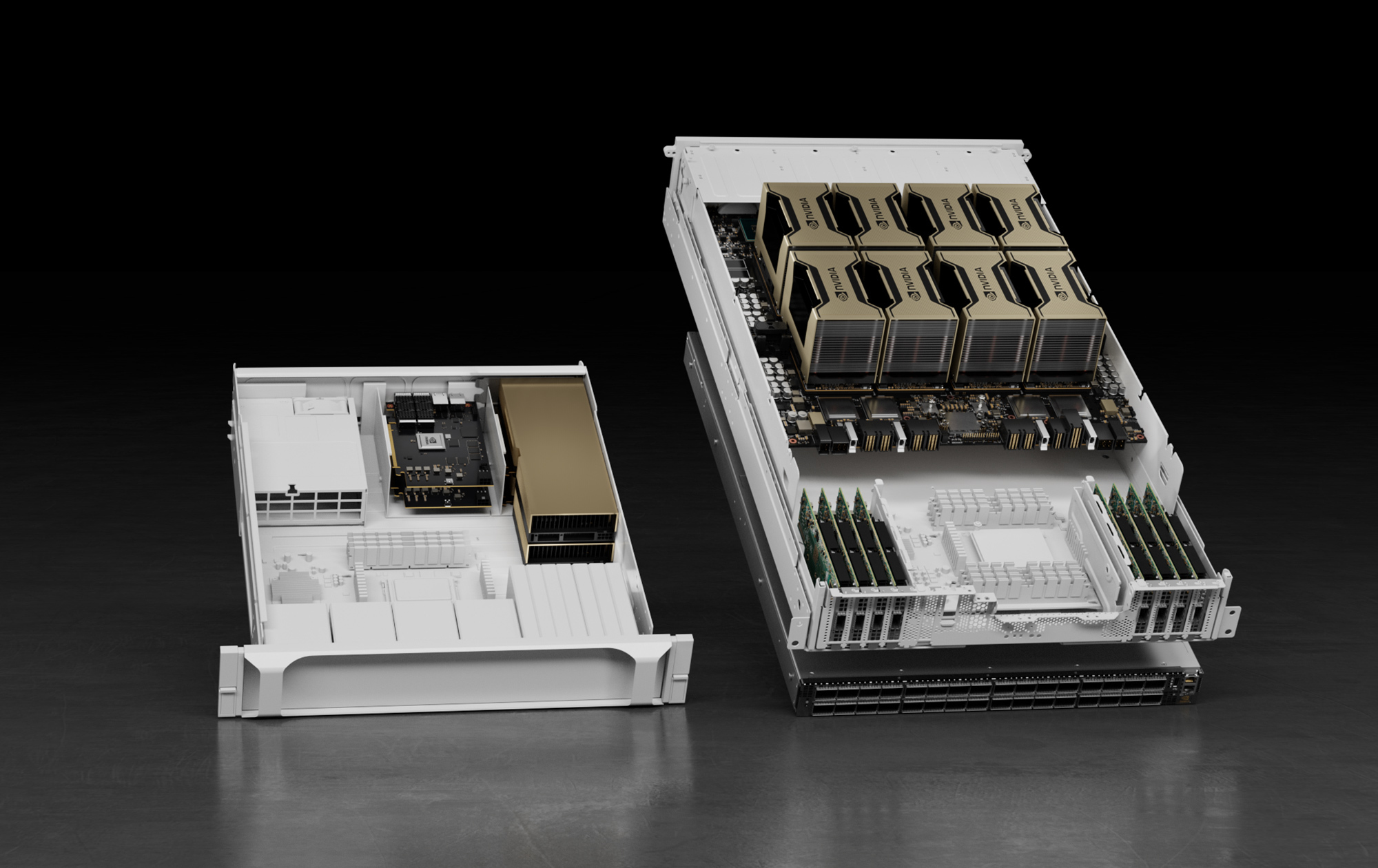

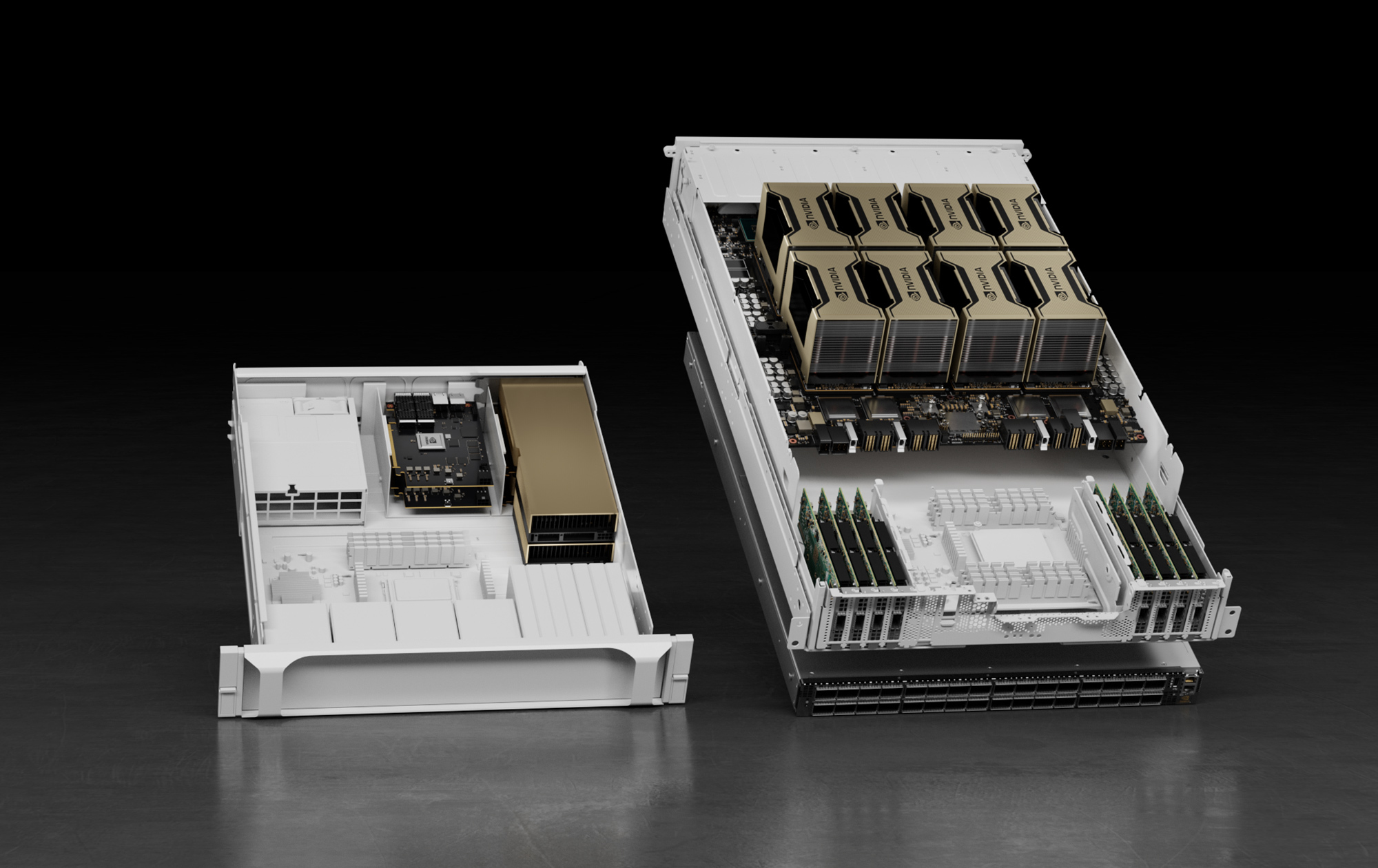

Nvidia HGX AI is a server platform architecture designed to power high-performance computing workloads. It combines up to 16 of Nvidia’s most powerful graphics processing units with technologies such as NVLink and InfiniBand networking.

Nvidia’s NVLink chip interconnect system is a key ingredient, making the GPUs in the system act together as one. This enables their combined computing power to be used in applications involving scientific computing and simulations, such as weather forecasting and genome mapping. They can also be used to train and run AI models.

The most important of today’s updates relates to the A100 GPUs that power the Nvidia HGX AI platform. The new A100 80GB PCIe GPUs, announced today at the annual ISC High Performance event, increase GPU memory bandwidth to 2 terabytes per second, a 25% gain over the older A100 40GB PCIe GPUs. They also double memory capacity to 80GB of HBM2e high-bandwidth memory, Nvidia said.
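As a rough check on the 25% figure, the older card’s bandwidth can be backed out from the two numbers Nvidia quoted. This is a sketch using the article’s rounded values; the implied bandwidth of roughly 1.6 TB/s for the A100 40GB PCIe is derived here, not a figure stated in the announcement.

```python
# Figures quoted in the article (per-GPU memory bandwidth, rounded).
NEW_BANDWIDTH_TBPS = 2.0  # A100 80GB PCIe, terabytes per second
GAIN = 0.25               # stated 25% improvement over the A100 40GB PCIe


def previous_bandwidth(new_bw: float, gain: float) -> float:
    """Back out the older card's bandwidth from the new figure and the relative gain."""
    return new_bw / (1.0 + gain)


old_bw = previous_bandwidth(NEW_BANDWIDTH_TBPS, GAIN)
print(f"Implied A100 40GB PCIe bandwidth: ~{old_bw:.1f} TB/s")
```

Because the 2 TB/s figure is itself rounded, the result is only an approximation of the older card’s specification.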

This increased capacity allows for more data and larger neural networks to be held in-memory, thereby minimizing internode communication and energy consumption, the company explained. Meanwhile, the faster memory bandwidth helps achieve higher throughput for HPC workloads, leading to faster results.
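To make “larger neural networks held in memory” concrete, here is a back-of-the-envelope sketch of how many model weights fit on one card. It assumes FP16 weights at 2 bytes per parameter and decimal gigabytes, and it ignores activations, optimizer state and framework overhead, so real limits are considerably lower. These assumptions are illustrative, not Nvidia figures.

```python
GB = 10**9  # assumption: decimal gigabytes


def max_params(memory_gb: int, bytes_per_param: int) -> int:
    """Upper bound on parameters whose weights alone fit in GPU memory.

    Ignores activations, optimizer state and framework overhead.
    """
    return memory_gb * GB // bytes_per_param


for mem in (40, 80):
    # Assumption: FP16 weights, 2 bytes per parameter.
    print(f"{mem} GB -> {max_params(mem, 2) / 1e9:.0f} billion FP16 parameters")
```

Doubling capacity from 40GB to 80GB doubles this ceiling, which is the sense in which a larger share of a model can stay on one GPU instead of being split across nodes.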

Nvidia said the new A100 80GB PCIe GPUs will be available on HGX AI systems from a raft of partners: Altos Computing Inc., Cisco Systems Inc., Dell Technologies Inc., Fujitsu Ltd., Hewlett Packard Enterprise Co., New H3C Technologies Co. Ltd., Inspur Group, Lenovo Group, Quanta Cloud Technology, Penguin Computing Inc. and Super Micro Computer Inc. They will also be available as a service from cloud providers, including Amazon Web Services Inc., Microsoft Corp. and Oracle Corp.

Nvidia also announced a major enhancement to the InfiniBand networking switch systems in the HGX AI platform. InfiniBand is a computer networking communications standard used in HPC that features very high throughput and very low latency. It’s used for data interconnections both among and within computers.

Nvidia said the latest Quantum-2 fixed-configuration switch systems deliver 64 ports of NDR 400Gb/s InfiniBand, providing up to three times higher port density than the previous-generation HDR InfiniBand. The switches also scale to configurations of up to 2,048 ports, enabling a total bidirectional throughput of 1.64 petabits per second, five times that of the previous generation. Nvidia added that the switches offer 6.5 times greater scalability.
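The 1.64 Pb/s figure follows from counting every 400Gb/s port in both directions. The sketch below reproduces that arithmetic; treating 400Gb/s as the per-direction rate is an assumption, but it is consistent with the article’s total.

```python
PORT_SPEED_GBPS = 400  # NDR InfiniBand, per port, per direction (assumed)


def bidirectional_tbps(ports: int, port_speed_gbps: int = PORT_SPEED_GBPS) -> float:
    """Aggregate throughput in Tb/s, counting both directions of every port."""
    return ports * port_speed_gbps * 2 / 1000


fixed = bidirectional_tbps(64)      # one fixed-configuration switch
largest = bidirectional_tbps(2048)  # largest port configuration cited
print(f"64 ports:    {fixed:.1f} Tb/s")
print(f"2,048 ports: {largest / 1000:.2f} Pb/s")
```

2,048 ports × 400Gb/s × 2 directions comes to 1,638.4 Tb/s, which rounds to the 1.64 Pb/s Nvidia quoted.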

When paired with third-generation Nvidia SHARP in-network data reduction technology, these enhancements deliver 32 times more AI acceleration than the previous-generation architecture, Nvidia said.
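The article does not describe how SHARP works internally. As a conceptual sketch only, in-network data reduction means switches combine (for example, sum) the data passing through them instead of simply forwarding it, so less data crosses the upper links of the network. The toy model below uses a hypothetical two-level switch tree and counts the vectors that cross leaf-to-root links with and without aggregation. It does not model Nvidia’s actual protocol and does not reproduce the 32-times figure.

```python
from typing import List, Tuple

Vector = List[float]


def elementwise_sum(vectors: List[Vector]) -> Vector:
    """Add vectors element by element (the reduction operation)."""
    return [sum(values) for values in zip(*vectors)]


def reduce_through_tree(leaves: List[List[Vector]], in_network: bool) -> Tuple[Vector, int]:
    """Toy two-level tree: each leaf switch serves a group of hosts.

    Returns the reduced result and the number of vectors that cross
    the leaf-to-root links.
    """
    uplink_vectors = 0
    arriving_at_root: List[Vector] = []
    for hosts in leaves:
        if in_network:
            # The leaf switch aggregates its hosts' data and forwards one vector.
            arriving_at_root.append(elementwise_sum(hosts))
            uplink_vectors += 1
        else:
            # The leaf switch only forwards; every host vector goes up the tree.
            arriving_at_root.extend(hosts)
            uplink_vectors += len(hosts)
    return elementwise_sum(arriving_at_root), uplink_vectors


# Hypothetical layout: 4 leaf switches with 8 hosts each, 3-element vectors.
leaves = [[[1.0, 2.0, 3.0] for _ in range(8)] for _ in range(4)]
plain, plain_traffic = reduce_through_tree(leaves, in_network=False)
sharp, sharp_traffic = reduce_through_tree(leaves, in_network=True)
print(plain == sharp, plain_traffic, sharp_traffic)
```

Both paths produce the same sum, but with aggregation in the leaf switches only one vector per switch travels upward instead of one per host.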

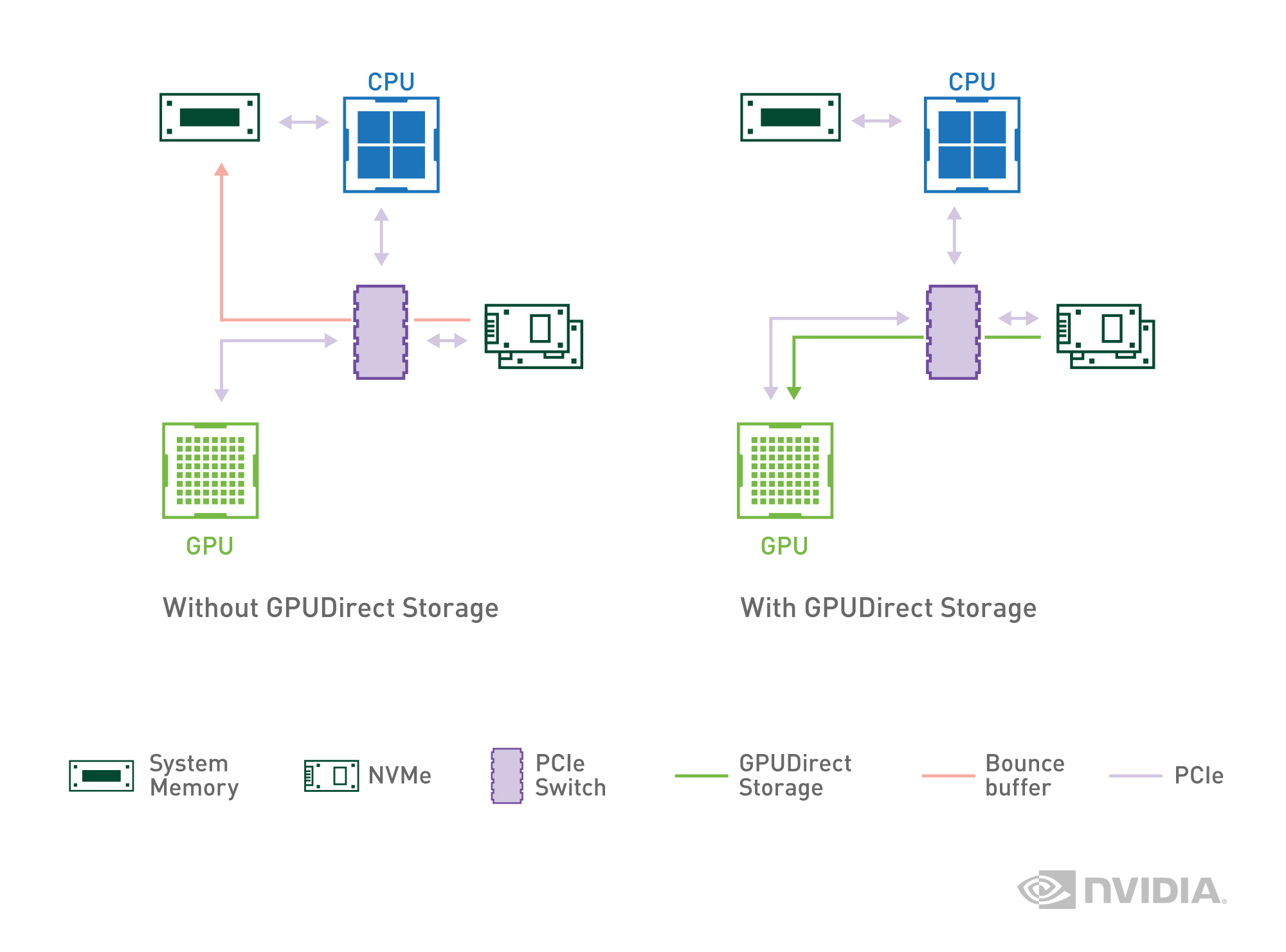

The Nvidia HGX AI platform gets a serious boost on the data storage side too, with Magnum IO GPUDirect Storage, a new technology that provides direct memory access between GPU memory and storage. This direct path gives applications lower I/O latency and lets them use the full network bandwidth available to them, while reducing CPU load and better managing the impact of increased data consumption.

Nvidia founder and Chief Executive Jensen Huang said today’s enhancements mean HPC is no longer reserved for academia but is now accessible to a broad range of industries.

“Key dynamics are driving super-exponential, super-Moore’s law advances that have made HPC a useful tool for industries,” he said. “Nvidia’s HGX platform gives researchers unparalleled high performance computing acceleration to tackle the toughest problems industries face.”

The souped-up Nvidia HGX AI platform has already won over the U.K.’s Distributed Research using Advanced Computing (DiRAC) supercomputing facility. DiRAC said today it will use the platform to power its latest supercomputer, Tursa, which is set to go online later this year at the University of Edinburgh in Scotland.

Tursa, which will be the third of four envisaged DiRAC supercomputers, is being built by Atos and will be powered by 448 Nvidia A100 GPUs with four Nvidia HDR 200Gb/s InfiniBand networking adapters per node. It will also feature the new Nvidia Magnum IO GPUDirect memory access technology to ensure the highest possible inter-node bandwidth.
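The networking figures for Tursa translate into per-node bandwidth with simple multiplication. In this sketch the adapter count and speed come from the article, while the number of GPUs per node (four) is a hypothetical value chosen for illustration, since the article does not state it.

```python
GPUS_TOTAL = 448        # A100 GPUs cited for Tursa
ADAPTERS_PER_NODE = 4   # HDR InfiniBand adapters per node
HDR_GBPS = 200          # HDR rate per adapter


def node_bandwidth_gbps(adapters: int = ADAPTERS_PER_NODE, speed: int = HDR_GBPS) -> int:
    """Combined InfiniBand bandwidth of one node, in Gb/s."""
    return adapters * speed


# Hypothetical: the article does not give GPUs per node; 4 is assumed here.
GPUS_PER_NODE = 4
nodes = GPUS_TOTAL // GPUS_PER_NODE
per_gpu = node_bandwidth_gbps() // GPUS_PER_NODE
print(f"{node_bandwidth_gbps()} Gb/s per node, {nodes} nodes, {per_gpu} Gb/s per GPU")
```

Only the 800 Gb/s per-node total follows directly from the article; the node count and per-GPU share change if the GPUs-per-node assumption is wrong.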

Constellation Research Inc. analyst Holger Mueller told SiliconANGLE that DiRAC’s partnership with Nvidia on Tursa is another strong validation of the chip maker’s supercomputing architecture. “It’s good to see these new platforms coming together to power a new range of supercomputer use cases around astronomy, particle and nuclear physics,” he said.

Researchers plan to use Tursa for ultrahigh-precision calculations of the properties of the subatomic particles that are necessary for interpreting data from massive particle physics experiments such as the Large Hadron Collider, Nvidia said.

Tursa further strengthens Nvidia’s already formidable presence in the supercomputing industry. The company said in a blog post today that its technologies now power 342 of the systems on the TOP500 list of the world’s most powerful supercomputers, including 70% of all new systems and eight of the top 10.
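For scale, the 342 systems can be expressed as a share of the full list. Note that the 70% figure in the article uses a different base (new entries only), so the two percentages are not directly comparable.

```python
TOP500_SIZE = 500
NVIDIA_SYSTEMS = 342
TOP10_NVIDIA = 8

share = NVIDIA_SYSTEMS / TOP500_SIZE
top10_share = TOP10_NVIDIA / 10
print(f"{share:.1%} of the TOP500, {top10_share:.0%} of the top 10")
```

This works out to 68.4% of the whole list and 80% of the top 10.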
