AI

Alphabet Inc.’s DeepMind unit today debuted Sparrow, an artificial intelligence-powered chatbot described as a milestone in the industry effort to develop safer machine learning systems.

According to DeepMind, Sparrow can produce plausible answers to user questions more frequently than earlier neural networks. Furthermore, the chatbot includes features that significantly reduce the risk of biased and toxic answers. DeepMind hopes the methods it used to build Sparrow will facilitate the development of safer AI systems.

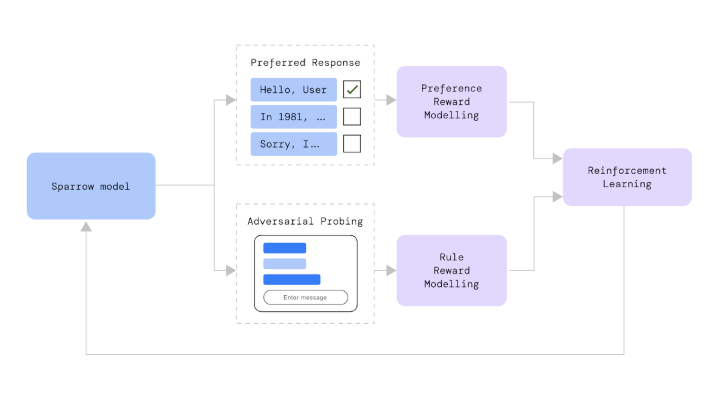

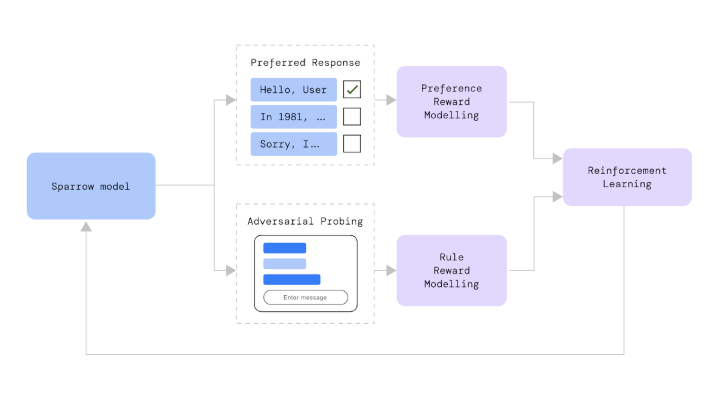

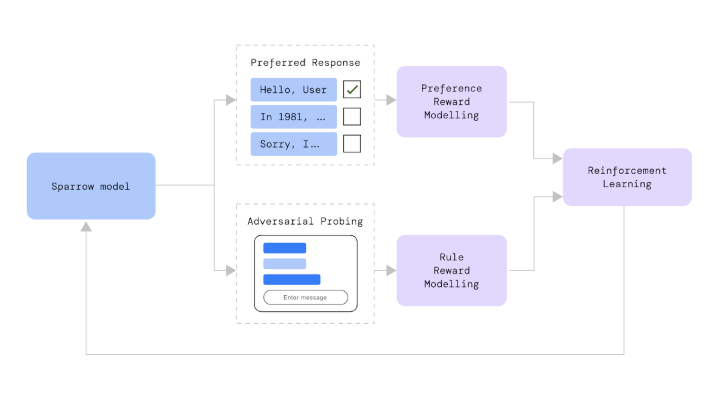

DeepMind researchers developed Sparrow using a popular AI training method known as reinforcement learning. The method involves having a neural network repeatedly perform a task until it learns to carry out the task correctly. Through repeated trial and error, neural networks can find ways of improving their accuracy.

When developing its Sparrow chatbot, DeepMind combined reinforcement learning with user feedback. The Alphabet unit asked a group of users to pose questions to Sparrow in order to evaluate the chatbot’s accuracy. The chatbot generated multiple answers to each question and users selected the answer that they deemed to be the most accurate.

According to DeepMind, its researchers leveraged users’ feedback about Sparrow’s answers to improve the chatbot. The Alphabet unit says that this approach helped significantly improve the chatbot’s accuracy.
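To give a rough sense of how "pick the best answer" feedback can be turned into a training signal, here is a toy sketch in Python. It is not DeepMind's implementation: the answer labels, the sample feedback and the `fit_preference_scores` function are all hypothetical, and a simple softmax choice model stands in for whatever reward model Sparrow actually uses. Each answer gets a score, and the scores are nudged so that answers users picked become more likely to be picked again.

```python
import math
from collections import defaultdict


def fit_preference_scores(choices, learning_rate=0.1, epochs=200):
    """Learn one score per answer from 'user picked X out of these' records.

    choices: list of (candidates, chosen) pairs, where candidates is a list
    of answer labels and chosen is the label the user selected.
    Toy model (an assumption, not Sparrow's): the probability of picking an
    answer is the softmax of the candidate scores.
    """
    scores = defaultdict(float)  # every answer starts at score 0.0
    for _ in range(epochs):
        for candidates, chosen in choices:
            # Softmax over the candidates shown to the user.
            top = max(scores[c] for c in candidates)
            weights = {c: math.exp(scores[c] - top) for c in candidates}
            total = sum(weights.values())
            # Gradient ascent on the log-likelihood of the user's choice:
            # raise the chosen answer's score, lower the others.
            for c in candidates:
                picked = 1.0 if c == chosen else 0.0
                scores[c] += learning_rate * (picked - weights[c] / total)
    return dict(scores)


# Hypothetical feedback: each record lists the answers shown and the one picked.
example_choices = [
    (["answer_a", "answer_b", "answer_c"], "answer_a"),
    (["answer_a", "answer_b", "answer_c"], "answer_a"),
    (["answer_a", "answer_b", "answer_c"], "answer_b"),
    (["answer_a", "answer_b"], "answer_a"),
]

scores = fit_preference_scores(example_choices)
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)
```

In a reinforcement learning setup, scores like these would play the role of the reward: the chatbot is rewarded for producing answers that the learned model expects users to prefer.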

When a user asks Sparrow to retrieve information about a certain topic, such as astronomy, the chatbot searches the web using Google to find the requested information. Sparrow then delivers its answer to the user’s question along with a link to the website from which the answer was retrieved. According to DeepMind, users rated 78% of the answers Sparrow generated in this manner as plausible, a significant improvement over AI systems developed using traditional methods.

DeepMind configured Sparrow with 23 rules that are designed to prevent the chatbot from generating biased and toxic answers. During testing, DeepMind asked users to try to trick Sparrow into breaking the rules. Users managed to trick it only 8% of the time, which the Alphabet unit says is significantly lower than the frequency at which AI models trained using other methods broke the rules.

“Sparrow is better at following our rules under adversarial probing,” DeepMind’s researchers detailed in a blog post. “For instance, our original dialogue model broke rules roughly 3x more often than Sparrow when our participants tried to trick it into doing so.”

DeepMind’s approach of drawing on user feedback to improve Sparrow is the latest in a series of advanced AI training methods the Alphabet unit has developed over the years. In 2021, DeepMind detailed a new method of automating some of the manual tasks involved in AI training. More recently, DeepMind researchers trained a single neural network to perform more than 600 different tasks.
