AI

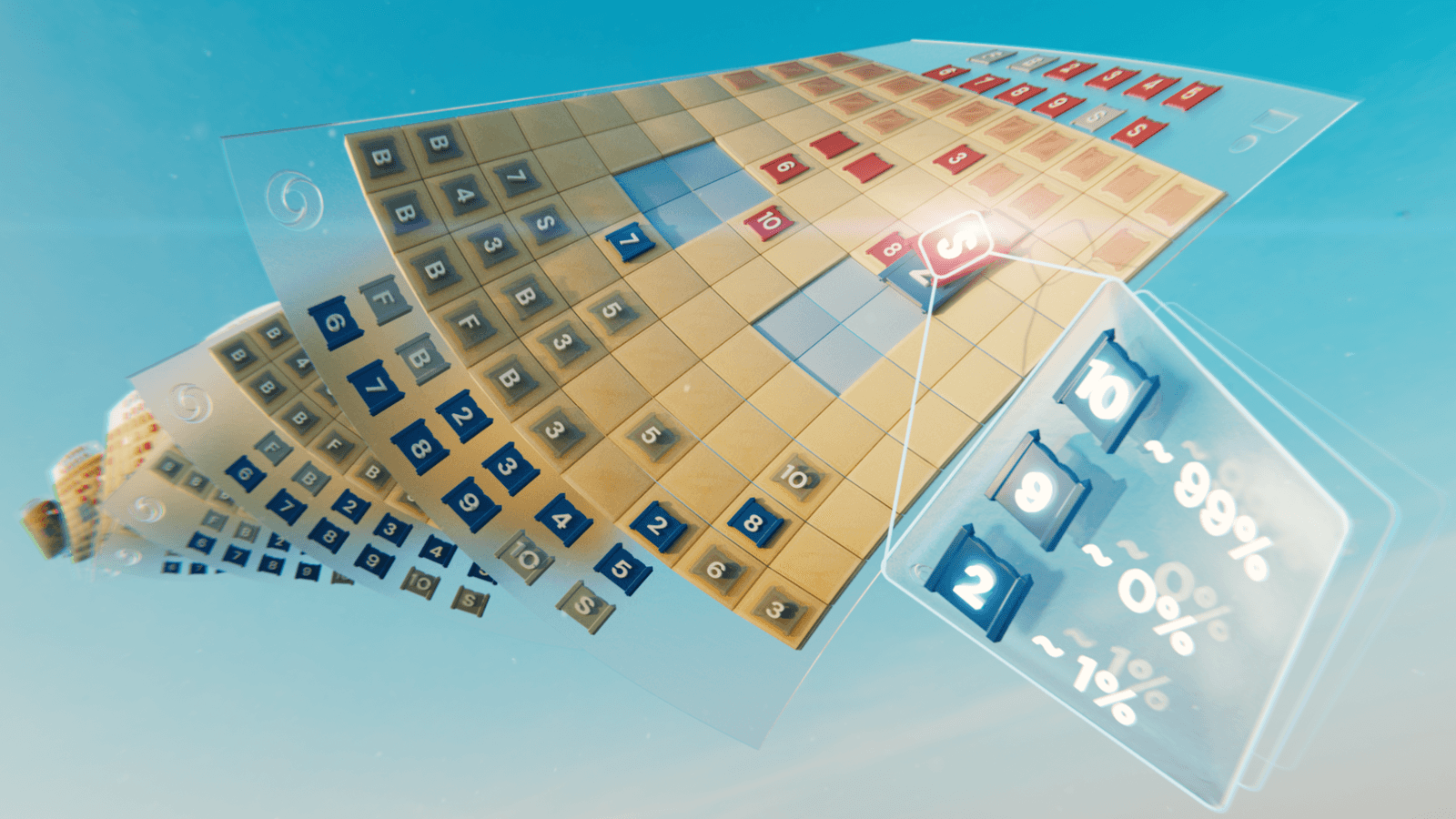

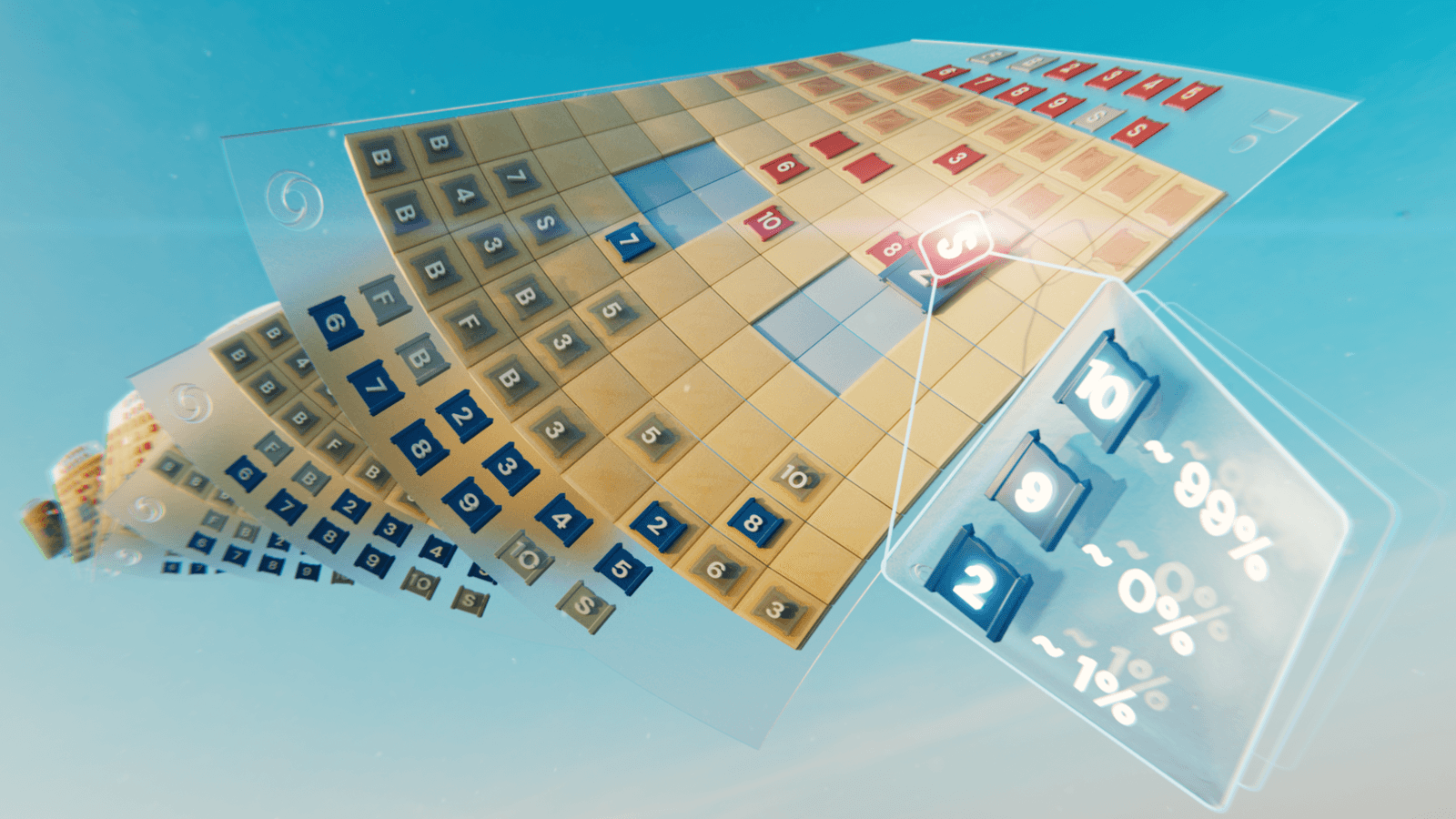

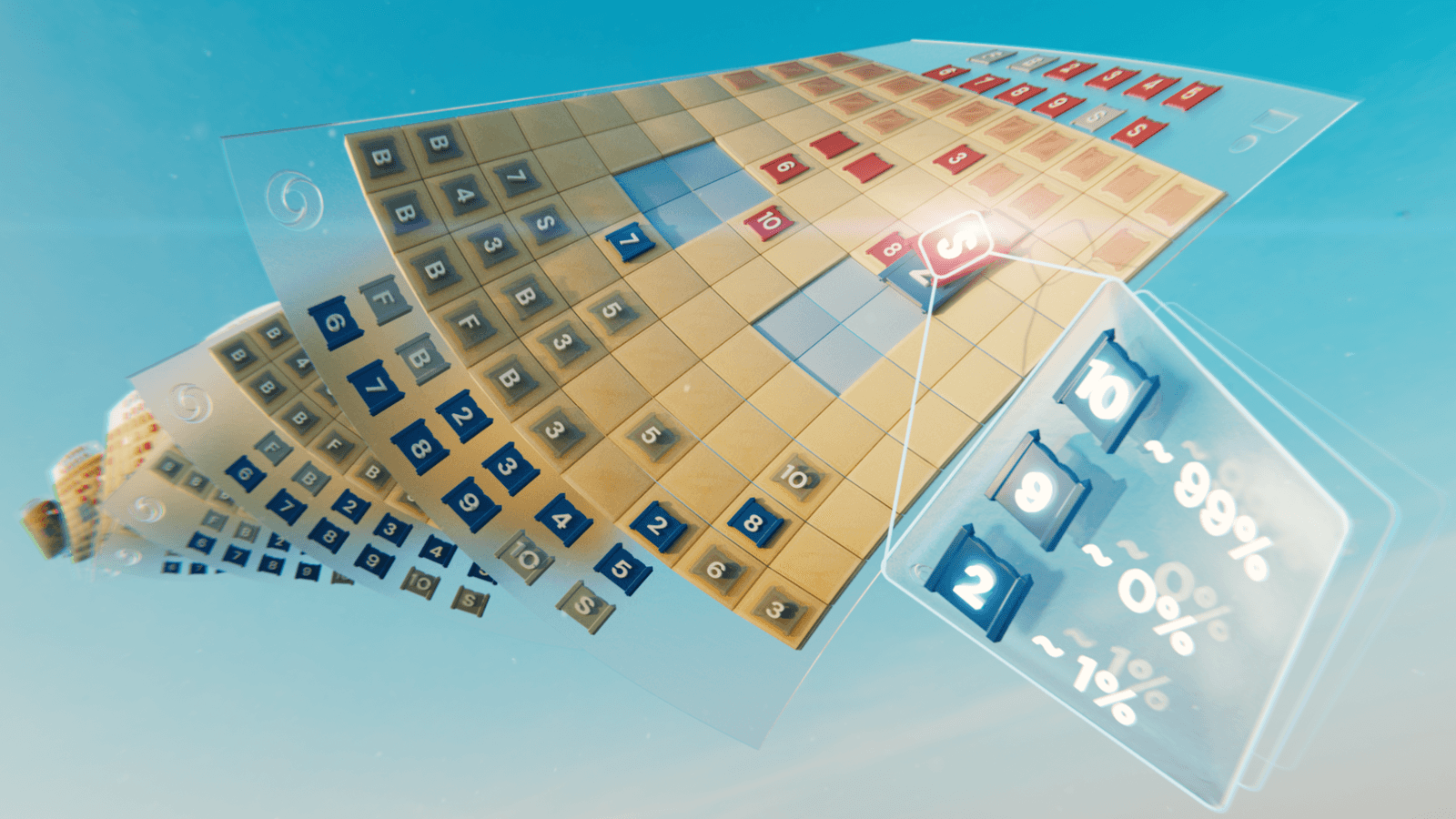

Alphabet Inc.’s DeepMind unit has developed a new artificial intelligence system capable of playing “Stratego,” a board game considered more complex than chess and Go.

DeepMind detailed the AI system, which it calls DeepNash, on Thursday. The Alphabet unit says that DeepNash achieved a win rate of 84% in matches against expert human players.

“Stratego” is a two-player board game that is similar to chess in certain respects. Players receive a collection of game pieces that, like chess pieces, are maneuvered around the board until one of the players wins. But there are a number of differences between the two games that make “Stratego” more complicated than chess.

In “Stratego,” each player has only limited information about the other player’s game pieces. A player might know that the other player has placed a game piece on a certain section of the board, but not which specific game piece was placed there. This dynamic makes playing the game difficult for AI systems.

Another source of complexity is that there are far more possibilities to consider than in chess. The number of distinct ways a game can unfold is measured with a metric known as game tree complexity. Chess has a game tree complexity of about 10 to the power of 123, while for “Stratego” that number rises to 10 to the power of 535.
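To put the two figures quoted above in perspective, the short Python sketch below compares them directly. It uses only the two exponents from the article; the constant and function names are illustrative and do not come from DeepMind.

```python
# Game tree complexity figures quoted in the article, written as powers of 10.
CHESS_EXPONENT = 123
STRATEGO_EXPONENT = 535


def orders_of_magnitude_gap(larger_exponent: int, smaller_exponent: int) -> int:
    """Return how many powers of 10 separate two numbers of the form 10**n."""
    return larger_exponent - smaller_exponent


gap = orders_of_magnitude_gap(STRATEGO_EXPONENT, CHESS_EXPONENT)

# Python integers have arbitrary precision, so the exact ratio can be computed too.
ratio = 10**STRATEGO_EXPONENT // 10**CHESS_EXPONENT

print(f"Stratego's game tree is 10^{gap} times larger than that of chess.")
print(f"Written out, that ratio has {len(str(ratio))} digits.")
```

In other words, the gap between the two games is itself far larger than the entire game tree of chess.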

According to DeepMind, traditional methods of teaching AI systems to play board games don’t scale to “Stratego” because of its complexity. To address that limitation, DeepMind’s researchers developed a new AI method called Regularised Nash Dynamics, or R-NaD, that draws on the mathematical field of game theory. That method forms the basis of the DeepNash system DeepMind detailed this week.

According to DeepMind, DeepNash develops a plan for winning “Stratego” matches by approximating a so-called Nash equilibrium. A Nash equilibrium is a situation where neither player can improve their expected result by changing strategy on their own, because each is already playing the best available response to the other. In such a situation, both players make the optimal combination of game moves during the match.

By studying what would happen if its opponent made the optimal combination of moves, DeepNash can develop an action plan that maximizes its chance of winning.
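The idea of a Nash equilibrium is easier to see in a much smaller game. The Python sketch below uses rock-paper-scissors as a stand-in and checks whether a pair of strategies is an equilibrium by testing whether either player could gain by switching strategy alone. It is not DeepMind’s R-NaD method and says nothing about how DeepNash is implemented; the payoff table and function names are assumptions made purely for illustration.

```python
from fractions import Fraction

# Row player's payoff in rock-paper-scissors: +1 for a win, -1 for a loss, 0 for a draw.
# The game is zero-sum, so the column player's payoff is the negative of this.
PAYOFF = [
    [0, -1, 1],   # rock     vs rock, paper, scissors
    [1, 0, -1],   # paper    vs rock, paper, scissors
    [-1, 1, 0],   # scissors vs rock, paper, scissors
]

# The three pure strategies: always rock, always paper, always scissors.
PURE_STRATEGIES = [[Fraction(int(i == k)) for i in range(3)] for k in range(3)]


def expected_payoff(row_strategy, col_strategy):
    """Expected payoff to the row player when both players randomize."""
    return sum(
        row_strategy[i] * col_strategy[j] * PAYOFF[i][j]
        for i in range(3)
        for j in range(3)
    )


def best_deviation_gain(row_strategy, col_strategy):
    """Largest gain either player could get by changing strategy alone.

    Expected payoff is linear in a player's own strategy, so checking the
    three pure strategies is enough to find the best possible deviation.
    """
    current = expected_payoff(row_strategy, col_strategy)
    row_gain = max(expected_payoff(p, col_strategy) for p in PURE_STRATEGIES) - current
    col_gain = max(-expected_payoff(row_strategy, p) for p in PURE_STRATEGIES) + current
    return max(row_gain, col_gain)


def is_nash_equilibrium(row_strategy, col_strategy):
    """True when neither player can improve by deviating unilaterally."""
    return best_deviation_gain(row_strategy, col_strategy) == 0


uniform = [Fraction(1, 3)] * 3
always_rock = [Fraction(1), Fraction(0), Fraction(0)]

print(is_nash_equilibrium(uniform, uniform))
print(is_nash_equilibrium(always_rock, uniform))
```

When both players pick each move with equal probability, neither can gain by changing, so that pair is an equilibrium. A player who always chooses rock can be exploited by an opponent who switches to paper, so that pair is not. “Stratego” is vastly larger and adds hidden information, but the stopping condition is the same: no player benefits from deviating alone.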

To evaluate DeepNash’s performance, DeepMind had it play a series of matches against several earlier AI systems configured to play “Stratego.” DeepNash won more than 97% of the matches, according to the Alphabet unit. In another evaluation, DeepNash played an online version of “Stratego” and achieved a win rate of 84% against expert human players.

“To achieve these results, DeepNash demonstrated some remarkable behaviours both during its initial piece-deployment phase and in the gameplay phase,” DeepMind researchers detailed in a blog post. “DeepNash developed an unpredictable strategy. This means creating initial deployments varied enough to prevent its opponent spotting patterns over a series of games.”

DeepMind believes that the AI techniques it developed to build DeepNash could be applied to other tasks besides playing “Stratego.” According to the Alphabet unit, the AI system’s ability to develop an optimal course of action in complex situations could potentially be applied in fields such as traffic management.

“We also hope R-NaD can help unlock new applications of AI in domains that feature a large number of human or AI participants with different goals that might not have information about the intention of others or what’s occurring in their environment,” DeepMind’s researchers detailed.
