SECURITY

SECURITY

SECURITY

SECURITY

SECURITY

SECURITY

As generative AI and machine learning takes hold, the bad guys are paying attention and looking for ways to subvert these algorithms. One of the more interesting methods that is gaining popularity is called data poisoning.

Although it’s not new — an early version called Polygraph was proposed back in 2006 by some academic researchers — it is seeing a resurgence, thanks to AI’s popularity. New research from Forcepoint this week categorizes data poisoning into three attack types:

Another way to look at these kinds of attacks is split between black-box and white-box attacks. The former assumes the attacker has no prior knowledge of the inner workings of the AI’s model, while the latter assumes they do and tend to be more successful.

Fighting data poisoning isn’t easy, because software developers need to analyze their model inputs and find the fakes. Typical AI situations use massive data sets, which means if something is suspect, a new training session is required. That can be costly in terms of computer costs and elapsed time.

One way is to fight fire with fire. This has an interesting application, such as introducing misleading keywords or descriptions in the meta tags of an image, so that content creators and others could protect their intellectual property from being scraped across the web. Think of this as a type of data poisoning that is deliberately used as a defensive data protection measure. Called prompt-specific poisoning, it’s offered in one existing defensive tool from Cranium.ai, which just announced new funding today.

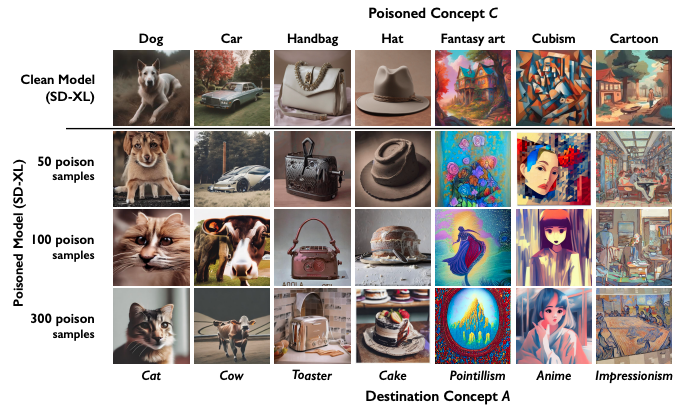

Another tool is described in a recent academic paper from researchers at the University of Chicago, what the authors call Nightshade. This is “an optimized prompt-specific poisoning attack where poison samples look visually identical to benign images with matching text prompts.” What this means is that the open-source software changes pixels in the images, so that pictures of dogs get classified as cats and so forth. The researchers show the effect of their code on the before and after effects across some common images.

But although Nightshade is interesting, AI users need to be on guard. The Forcepoint authors suggest being more diligent about the training data, with regular tests for statistical accuracy to detect anomalies and setting up rigid access controls to the data itself. They also suggest implementing a regular way to monitor the model’s performance and accuracy reporting, using cloud observability tools such as Azure Monitor and Amazon SageMaker, among others.

“While generative AI has a long list of promising use cases, its full potential can only be realized if we keep adversaries out and models protected,” Forcepoint wrote on its post.

THANK YOU