AI

Artificial intelligence pioneer OpenAI today announced a new generation of embedding models, which convert text inputs into numerical form for use in machine learning applications.

The announcement came as the company also unveiled new versions of its GPT-4 Turbo, GPT-3.5 Turbo and moderation models, lower pricing for GPT-3.5 Turbo, and new tools for managing API keys and usage.

In the AI industry, embeddings are sequences of numbers that represent abstract concepts within natural language or code. They make it easier for machine learning algorithms to understand the relationships between pieces of content when performing tasks such as clustering or retrieval. That ability makes them vital for applications such as knowledge retrieval in both ChatGPT and the Assistants API.
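To make the idea concrete, here is a minimal sketch of how retrieval over embeddings might work. The three-dimensional vectors are made-up toy values, not output from any OpenAI model, and the `cosine_similarity` and `retrieve` helpers are hypothetical names chosen for illustration. Real embeddings have hundreds or thousands of dimensions, but the ranking logic is the same.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy "embeddings" (assumed values for illustration only).
DOCS = {
    "How to reset a password": [0.9, 0.1, 0.0],
    "Quarterly revenue report": [0.0, 0.2, 0.9],
    "Recovering a locked account": [0.8, 0.3, 0.1],
}

def retrieve(query_vec, docs, top_k=2):
    """Rank document titles by similarity to the query vector."""
    ranked = sorted(
        docs,
        key=lambda title: cosine_similarity(query_vec, docs[title]),
        reverse=True,
    )
    return ranked[:top_k]

# A toy query vector that points in roughly the same direction
# as the two account-related documents.
query = [1.0, 0.1, 0.0]
print(retrieve(query, DOCS))
```

In this sketch the two account-related titles rank ahead of the revenue report, because their vectors point in nearly the same direction as the query. Clustering relies on the same notion of closeness between vectors.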

The new embedding models are text-embedding-3-small and text-embedding-3-large. They provide increased performance for developers at lower prices compared with the previous-generation text-embedding-ada-002 model. What’s more, OpenAI said the larger model can create embeddings with up to 3,072 dimensions, meaning it can capture significantly more semantic information to improve the accuracy of downstream tasks.

The company explained in a blog post that, compared with text-embedding-ada-002, text-embedding-3-large raises the average score on MIRACL, a commonly used benchmark for multi-language retrieval, from 31.4% to 54.9%. The average score on the MTEB benchmark for English tasks rose from 61% to 64.6%, the company said. Meanwhile, text-embedding-3-small is priced five times lower than text-embedding-ada-002, making it more affordable and therefore accessible to many more developers.
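As a rough illustration of what a fivefold price difference means for a workload, the sketch below compares the cost of embedding the same corpus with both models. The per-token prices are assumptions based on OpenAI's list prices around the time of the announcement ($0.0001 per 1,000 tokens for text-embedding-ada-002 and $0.00002 for text-embedding-3-small), and the corpus size is invented. Check the current pricing page before relying on either figure.

```python
# Assumed list prices in US dollars per 1,000 tokens (not taken from
# this article; verify against OpenAI's current pricing page).
PRICE_PER_1K_TOKENS = {
    "text-embedding-ada-002": 0.0001,
    "text-embedding-3-small": 0.00002,
}

def embedding_cost(model, num_tokens):
    """Estimate the dollar cost of embedding num_tokens with a model."""
    return PRICE_PER_1K_TOKENS[model] * num_tokens / 1000

# Hypothetical corpus of 50 million tokens.
corpus_tokens = 50_000_000

old_cost = embedding_cost("text-embedding-ada-002", corpus_tokens)
new_cost = embedding_cost("text-embedding-3-small", corpus_tokens)

print(f"ada-002: ${old_cost:.2f}")
print(f"3-small: ${new_cost:.2f}")
print(f"ratio:   {old_cost / new_cost:.1f}x")
```

Under these assumed prices, the ratio between the two costs is the same fivefold difference regardless of corpus size, since both models are billed linearly per token.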

The GPT-4 Turbo and GPT-3.5 Turbo large language models, which can understand natural language and generate fresh content including code, have also been updated. The latest versions are said to offer improved instruction following, more reproducible outputs and support for parallel function calling. There’s also a new 16k context version of GPT-3.5 Turbo, which can process longer inputs and outputs than the previous-generation 4k version.

GPT-4 Turbo gets an interesting fix too. “This model completes tasks like code generation more thoroughly than the previous preview model and is intended to reduce cases of ‘laziness’ where the model doesn’t complete a task,” OpenAI’s researchers wrote.

OpenAI also updated its text moderation model, which aims to detect whether text inputs and outputs are sensitive or unsafe. According to the company, the latest version supports more languages and domains, and provides better explanations of its predictions.

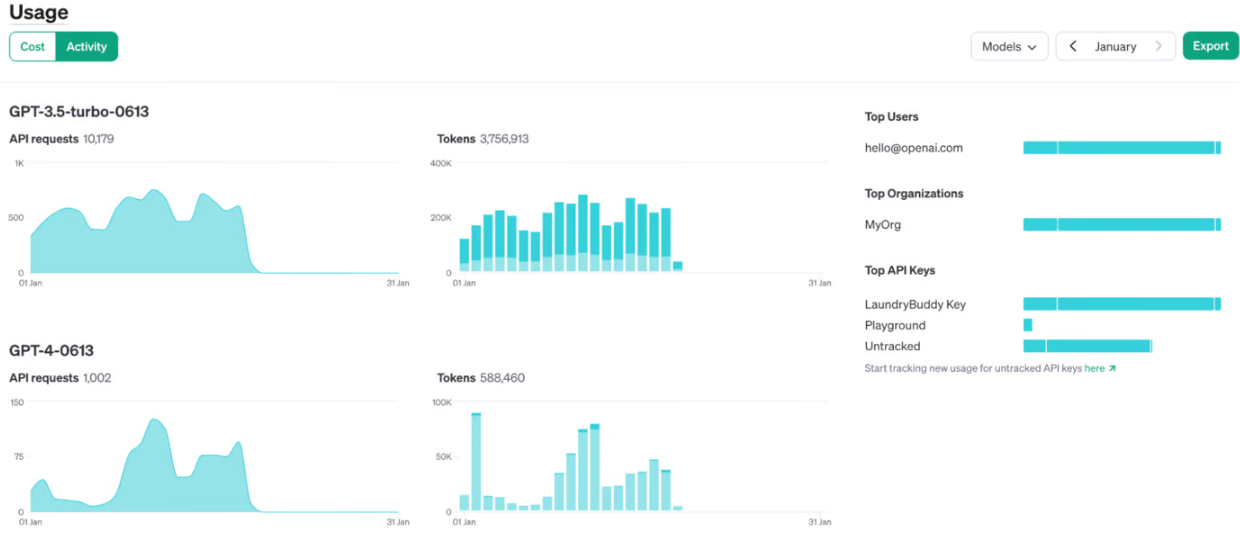

Finally, OpenAI is launching a new way for developers to manage their API keys and understand how much they’re using the service. With the update, developers can create multiple API keys and monitor usage and billing details for each one within the OpenAI Dashboard. This helps them better understand the costs of each application or service they have built on OpenAI’s models. In addition, the company said it’s reducing the price of API access to GPT-3.5 Turbo by 25%, making it more accessible.

According to OpenAI, today’s updates are part of its ongoing effort to improve the quality and capabilities of its AI models, while making them more useful and affordable for developers. The company is also inviting developers to contribute towards evaluations, to help it improve its model capabilities for different workloads.
