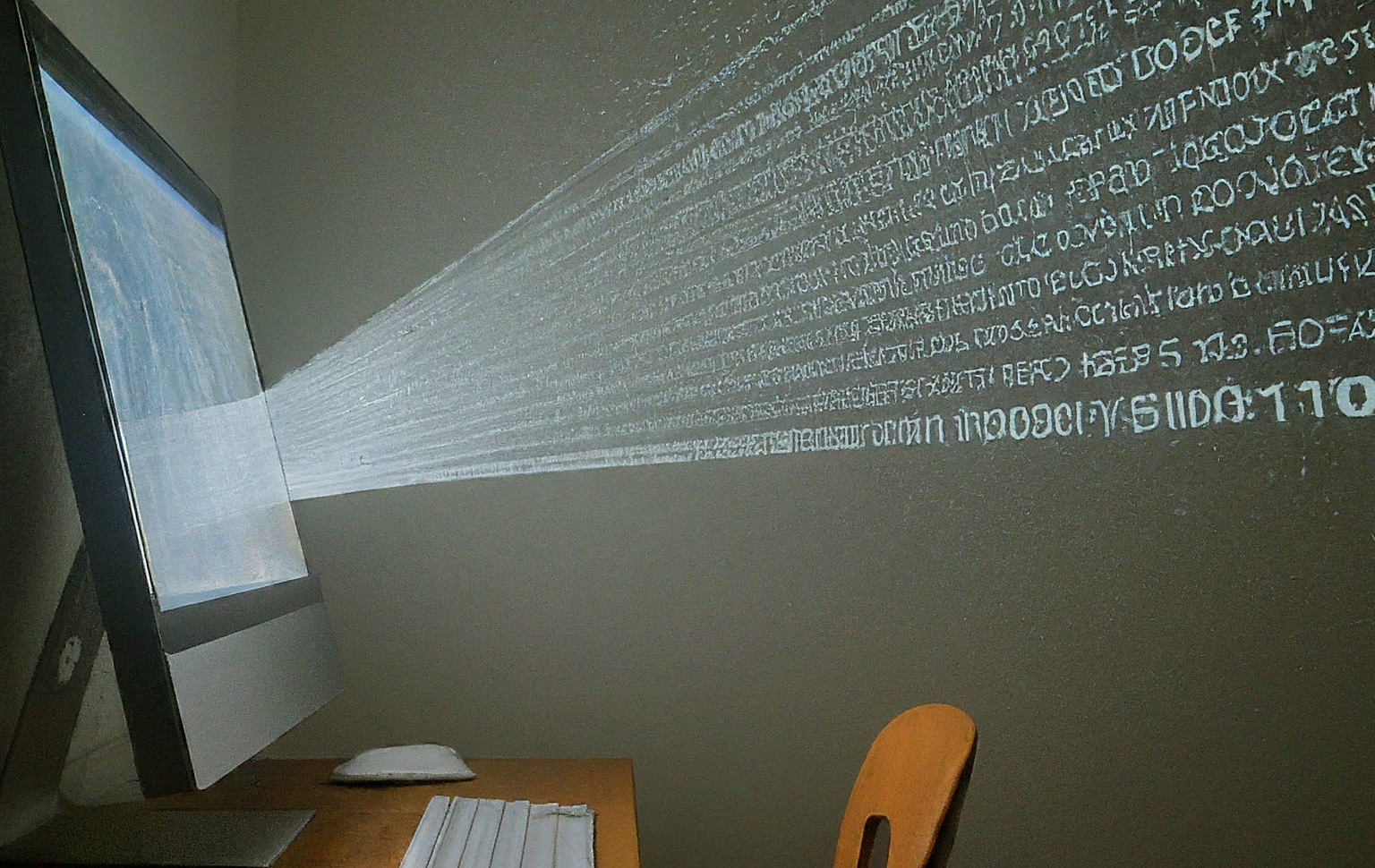

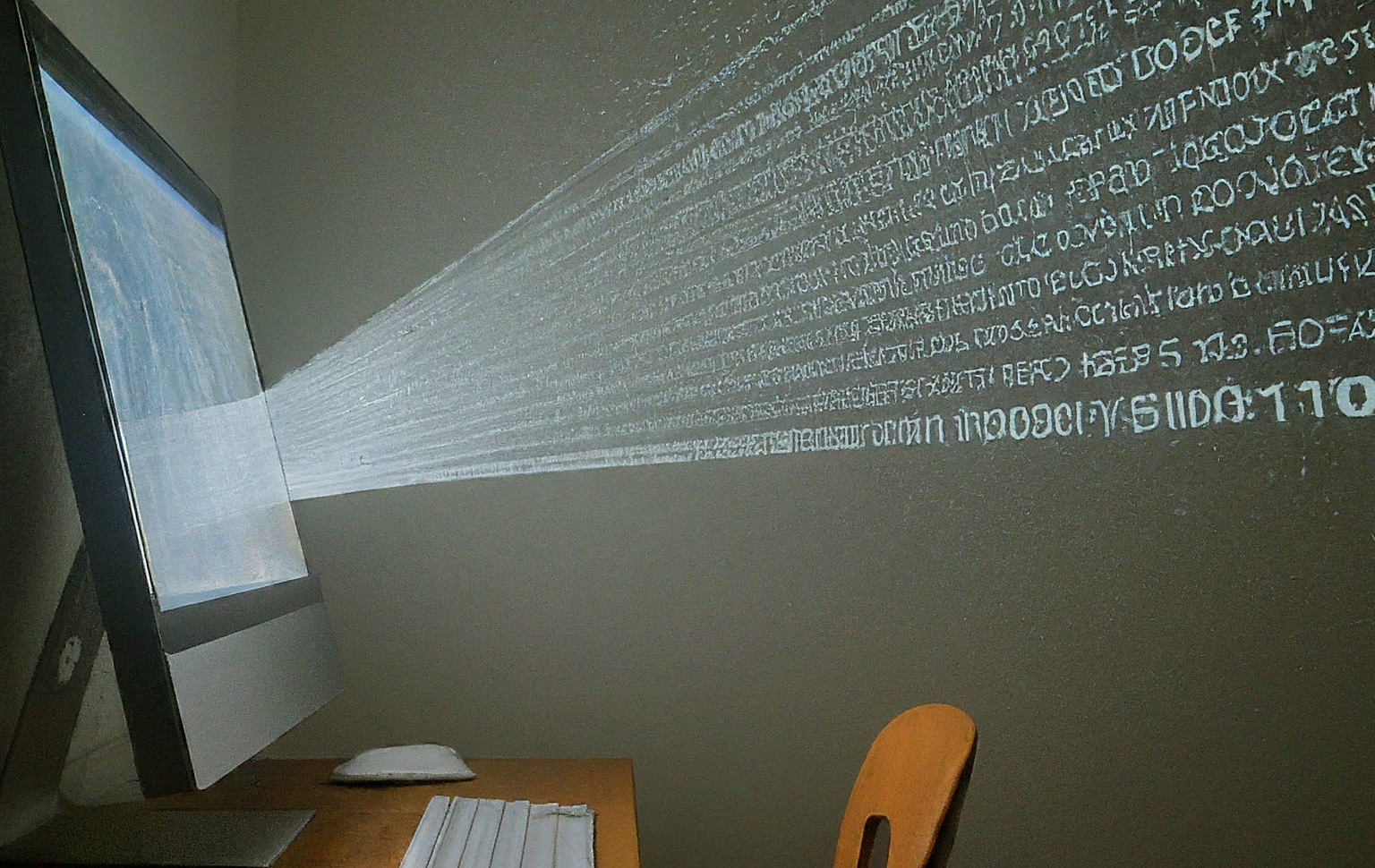

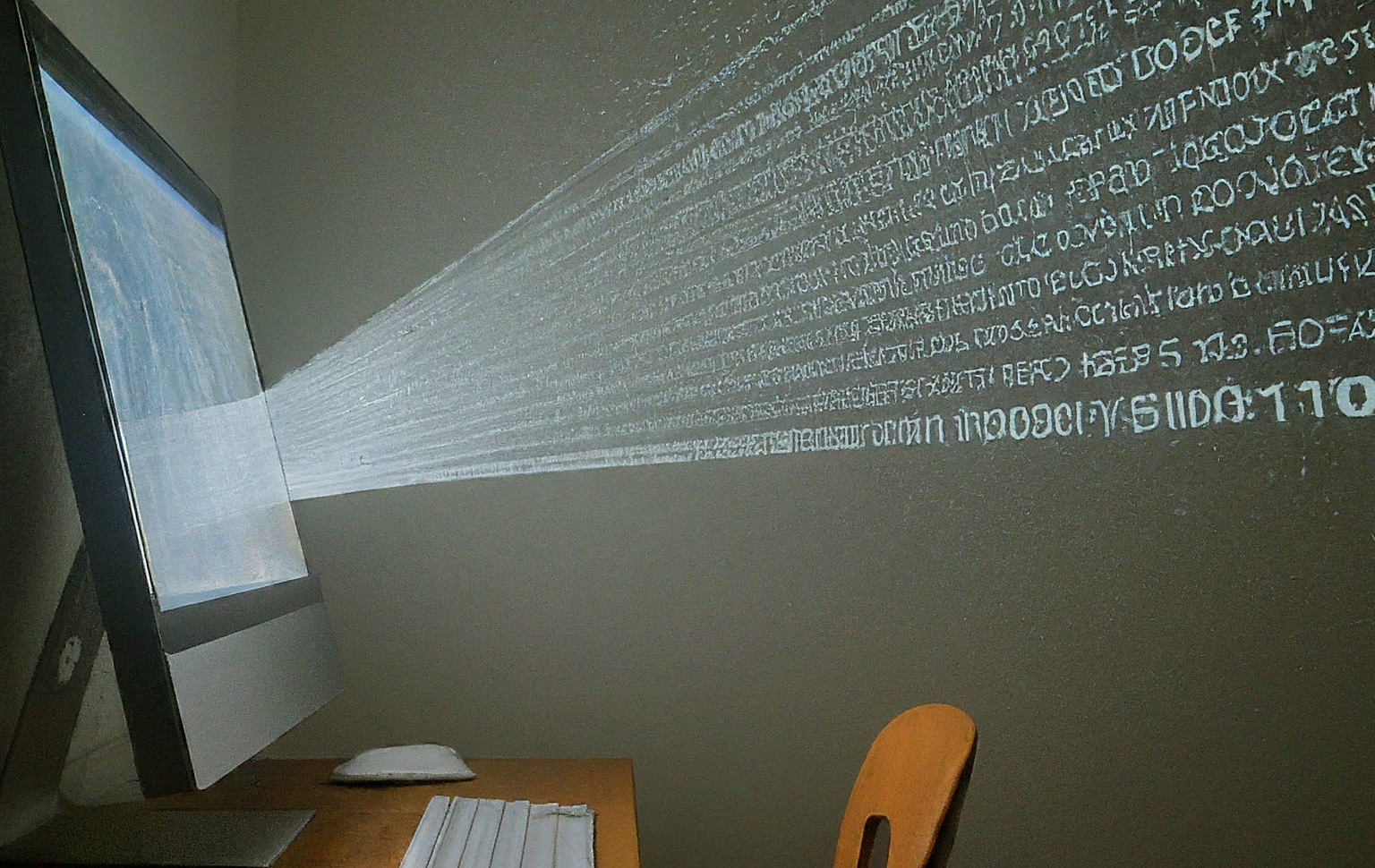

As artificial intelligence models continue to evolve at ever-increasing speed, the demand for training data and the ability to test capabilities grows alongside them. But in a world with equally growing demands for security and privacy, it’s not always possible to rely solely on real-world information – and sometimes authentic data is scarce or difficult to obtain.

The answer to this dilemma? Made-up data — or, as it’s known in AI circles, synthetic data.

Synthetic data is information that’s artificially created by computer algorithms rather than gathered from real-world events, and it’s generally used to test and train artificial intelligence models. It’s most often generated from real-world information and designed to mimic it as closely as possible, while reducing the risks associated with the original data or filling gaps in it.

There are a number of methods for producing synthetic data, including generative adversarial networks, statistical models and transformer-based AI models such as OpenAI’s GPT, which excel at capturing the patterns in data. Synthetic data can be fully synthetic, containing no information from the original data, or partially synthetic, where only the sensitive features of the original information are replaced.
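The sketch below makes the fully versus partially synthetic distinction concrete. It uses the simplest of the statistical approaches, fitting an independent normal distribution to each numeric column of a small invented table. `fully_synthetic` samples brand-new rows. `partially_synthetic` keeps the real rows and resamples only one sensitive column. The table, the column choice and the function names are all hypothetical. Treating columns as independent normals is a deliberate simplification; GANs and transformer models capture far richer structure.

```python
import random
import statistics

# Invented toy table: each row is (age, systolic_bp). Not real patient data.
REAL_ROWS = [(34, 118), (51, 135), (47, 129), (62, 142), (29, 112), (55, 138)]


def fit_columns(rows):
    """Fit an independent normal distribution (mean, stdev) to each column."""
    return [(statistics.mean(col), statistics.stdev(col)) for col in zip(*rows)]


def fully_synthetic(rows, n, rng):
    """Sample n new rows from the fitted model; no original row is carried over."""
    params = fit_columns(rows)
    return [tuple(rng.gauss(mu, sigma) for mu, sigma in params) for _ in range(n)]


def partially_synthetic(rows, sensitive_col, rng):
    """Keep each real row but replace one sensitive column with a sampled value."""
    mu, sigma = fit_columns(rows)[sensitive_col]
    out = []
    for row in rows:
        new_row = list(row)
        new_row[sensitive_col] = rng.gauss(mu, sigma)
        out.append(tuple(new_row))
    return out


rng = random.Random(0)
full = fully_synthetic(REAL_ROWS, n=1000, rng=rng)
partial = partially_synthetic(REAL_ROWS, sensitive_col=1, rng=rng)
print(len(full), len(partial))
```

Because each column is sampled independently, any correlation between columns is lost. More expressive generators are designed to fix exactly that trade-off.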

That’s useful because sometimes real-world data falls short: Imagine a self-driving car needing more diverse scenarios than real traffic provides. In these cases, synthetic data can fill the gaps in real-world traffic data, creating a richer dataset for AI training.

To explore how synthetic data is being used right now to build, test and fine-tune AI models across different industries, SiliconANGLE consulted experts and companies using it, delving into the advantages of its increasing use — and the potential drawbacks.

For example, certain types of real-world data come with extreme privacy risks, such as healthcare patient information. But AI models for health still need to be trained to help doctors and clinicians provide the best care for patients. Algorithmically generated synthetic data can retain the statistical characteristics of the health data without revealing private information.
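One way to keep statistical characteristics intact is to fit a joint distribution rather than modeling each measurement on its own. The sketch below assumes two invented, roughly normal lab values and fits a bivariate normal to them. The resulting synthetic pairs reproduce the originals’ means, spreads and correlation without copying any patient’s record. Real clinical data is rarely this well behaved, so treat this as an illustration of the idea rather than a production method.

```python
import math
import random
import statistics

# Invented paired lab values for eight hypothetical patients.
LAB_A = [5.1, 6.3, 5.8, 7.2, 4.9, 6.7, 5.5, 6.0]
LAB_B = [2.2, 2.4, 2.6, 2.9, 1.8, 2.5, 2.3, 2.1]


def pearson(x, y):
    """Sample Pearson correlation between two equal-length sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / (len(x) - 1)
    return cov / (statistics.stdev(x) * statistics.stdev(y))


def sample_correlated(x, y, n, rng):
    """Draw n synthetic (a, b) pairs from a bivariate normal fitted to x and y.

    Uses the 2x2 Cholesky construction b = rho*z1 + sqrt(1 - rho^2)*z2, so the
    synthetic pairs keep the means, spreads and correlation of the originals.
    """
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sx, sy = statistics.stdev(x), statistics.stdev(y)
    rho = pearson(x, y)
    pairs = []
    for _ in range(n):
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        pairs.append((mx + sx * z1, my + sy * (rho * z1 + math.sqrt(1 - rho**2) * z2)))
    return pairs


synthetic = sample_correlated(LAB_A, LAB_B, n=5000, rng=random.Random(1))
syn_a = [a for a, _ in synthetic]
syn_b = [b for _, b in synthetic]
print(round(pearson(LAB_A, LAB_B), 2), round(pearson(syn_a, syn_b), 2))
```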

Bob Rogers, co-founder and chief scientific officer at BeeKeeperAI Inc., says his company has worked with secure healthcare data for machine learning and AI training for the University of California at San Francisco. BeeKeeperAI uses privacy-preserving technology that allows AI algorithm developers in healthcare and other regulated industries to build and deploy on secure compute while real-world data stays under the control of its originating source.

BeeKeeperAI’s Rogers said synthetic data is important for training AI models without exposing private data. Photo: LinkedIn

“In healthcare, you might have records of patients with names and medical record numbers, birth dates and then lab values,” Rogers said. “What you want to do with synthetic data is create a data set that looks like the real data, but doesn’t expose the private information about the patient’s name, medical record number, Social Security number, et cetera, but still covers the shape and behavior of the underlying data.”
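The identifier side of what Rogers describes can be sketched in a few lines. The record fields here (`name`, `mrn`, `ssn`, `glucose_mg_dl`) are hypothetical. The function swaps each patient’s direct identifiers for synthetic ones and leaves the lab value in place. It maps a given real MRN to the same synthetic identity every time, so repeat visits stay linked. This is only part of the job: a full synthetic dataset would also generate the lab values and quasi-identifiers such as birth dates, since those can re-identify people on their own.

```python
import random

# Invented records; field names and values are hypothetical.
RECORDS = [
    {"name": "Jane Roe", "mrn": "MRN-1001", "ssn": "123-45-6789", "glucose_mg_dl": 98},
    {"name": "John Doe", "mrn": "MRN-1002", "ssn": "987-65-4321", "glucose_mg_dl": 141},
    {"name": "Jane Roe", "mrn": "MRN-1001", "ssn": "123-45-6789", "glucose_mg_dl": 104},
]
FIRST_NAMES = ["Alex", "Sam", "Riley", "Jordan", "Casey"]
LAST_NAMES = ["Smith", "Lee", "Garcia", "Patel", "Kim"]


def replace_identifiers(rows, seed=0):
    """Swap direct identifiers for synthetic ones while keeping lab values.

    The same real MRN always maps to the same synthetic identity, so repeat
    visits by one patient stay linked in the output.
    """
    rng = random.Random(seed)
    identities = {}
    out = []
    for row in rows:
        if row["mrn"] not in identities:
            k = len(identities) + 1
            identities[row["mrn"]] = {
                "name": f"{rng.choice(FIRST_NAMES)} {rng.choice(LAST_NAMES)}",
                "mrn": f"SYN-{k:05d}",
                # Area number 000 is never issued, so this cannot be a real SSN.
                "ssn": f"000-00-{k:04d}",
            }
        out.append({**identities[row["mrn"]], "glucose_mg_dl": row["glucose_mg_dl"]})
    return out


synthetic_records = replace_identifiers(RECORDS)
for record in synthetic_records:
    print(record)
```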

Rogers said a machine learning algorithm that a developer gave the company to test expected five possible inputs for gender. However, synthetic health data from UCSF quickly revealed a flaw in the algorithm, because the data contained 12 possible values for gender.
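Once synthetic test data is available, the kind of mismatch Rogers describes is easy to check for. This hypothetical sketch compares a synthetic column against the set of values an algorithm was written to accept. The five expected codes and the 12 synthetic codes are placeholder letters, not real gender categories.

```python
from collections import Counter

# Hypothetical: the five codes an algorithm was written to accept.
EXPECTED_CODES = {"A", "B", "C", "D", "E"}


def unexpected_values(column, expected):
    """Count values in a test column that the algorithm does not handle."""
    return {value: n for value, n in Counter(column).items() if value not in expected}


# Synthetic test column using 12 distinct codes (labels invented for illustration).
synthetic_column = [chr(ord("A") + i % 12) for i in range(120)]
problems = unexpected_values(synthetic_column, EXPECTED_CODES)
print(sorted(problems))  # → ['F', 'G', 'H', 'I', 'J', 'K', 'L']
```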

“In general, quite often private data is not easily accessible to algorithm developers,” said Rogers. That data can be extremely important for testing AI algorithms to make certain they’re valid and to train them to work with future data. Synthetic data that can represent private data can give developers a boost without raising regulatory concerns.

Outside highly regulated environments, synthetic data can also fill gaps when there simply isn’t enough real-world information to train machine learning algorithms. Good examples are fraud detection and cybersecurity attacks, where a model needs examples of relatively rare events, so the data is scarce too.

When there aren’t enough examples of an event, synthetic data can be used to create more of it to help train AI models to recognize it, said DataCebo’s Kalyan Veeramachaneni. Photo: LinkedIn

“Fraud is usually by definition 1,000th of the amount of data you have with no fraud,” said Kalyan Veeramachaneni, chief executive of DataCebo Inc., a company that helps manage the production of and access to synthetic data. “Either you can wait for enough data to accumulate, or you can synthetically generate it with the little bit of data that you have from fraud, and then add that to your data set and train more robust models.”

Preparing and deploying a machine learning model quickly can be paramount for fraud detection, especially if the type of fraud is unusual or new. Most credit card companies and financial institutions don’t have time to wait for examples of fraud to accumulate in their datasets before training a new model for accurate detection, Veeramachaneni explained. Filling the gap in real-world data with synthetic examples of fraud can save that time.
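A classic, non-generative way to “synthetically generate it with the little bit of data that you have” is SMOTE (Synthetic Minority Over-sampling Technique). SMOTE creates new minority-class points by interpolating between existing ones and their nearest neighbors. The Python sketch below is a simplified SMOTE-style version with invented fraud features. It is not DataCebo’s method. In practice, features would usually be scaled before distances are computed.

```python
import math
import random

# Invented fraud examples: (amount_usd, minutes_since_previous_transaction).
FRAUD = [(950.0, 2.0), (1200.0, 1.5), (870.0, 3.0), (1430.0, 0.5), (1010.0, 2.5)]


def smote_like(minority, n_new, k=3, seed=0):
    """Generate n_new synthetic minority points, SMOTE-style.

    Each new point lies on the line segment between a randomly chosen real
    point and one of its k nearest minority-class neighbors.
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [p for j, p in enumerate(minority) if j != i]
        neighbors = sorted(others, key=lambda p: math.dist(base, p))[:k]
        other = rng.choice(neighbors)
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append(tuple(b + gap * (o - b) for b, o in zip(base, other)))
    return synthetic


new_fraud = smote_like(FRAUD, n_new=20)
print(new_fraud[:3])
```

The new points are then added to the training set alongside the real fraud cases, as Veeramachaneni describes.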

Using generative AI to produce synthetic training data based on examples of fraud could also offer a unique advantage in the ever-changing landscape of fraud detection: its ability to create realistic examples of fraud tactics. This type of synthetic data could help fraud detection stay ahead of the curve, whereas AI trained on authentic data would have to rely on historical patterns. Chances are good that although real-world fraudsters will keep the same core elements of their deception, they will also likely evolve their practices to evade detection and net better results.

Astronomer’s Steven Hillion discovered that synthetic data could be used to fill in the gaps when a moderation team didn’t have enough images to work with. Photo: LinkedIn

Steven Hillion, senior vice president of data and AI at Astronomer, told SiliconANGLE that a member of his team was working on a case where images were needed for content moderation purposes. Although the team had access to large datasets of images, they had trouble getting access to the particular images they needed.

“Say, if you’re looking at images that are not safe for children,” Hillion said. “I think the example that he mentioned was smoking, to take a rather innocent example. Well, it turns out there are not that many images of people smoking these days. Even if you look at larger image datasets… people just don’t do it.”

To help train the moderation AI model, the team had to take a smaller sample of images of people smoking and “oversample” it. That meant using a generative AI model to build a larger synthetic dataset from the smoking images, showing people smoking in various poses and settings, with different plumes of smoke, in crowds and alone, and in different lighting. This gave the moderation system a better idea of what “a person smoking” looks like in practice.
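The team used a generative model for this. A much simpler technique with the same goal is classical data augmentation, which creates new training variants by transforming the images already on hand. The sketch below uses that swapped-in technique and is not Astronomer’s approach. It grows a tiny set of invented grayscale “images” (nested lists of 0 to 255 pixel values) with random horizontal flips and brightness changes.

```python
import random

# Tiny invented 2x3 grayscale "images" (pixel values 0-255) standing in for photos.
SMOKING_IMAGES = [
    [[10, 200, 30], [40, 50, 255]],
    [[0, 128, 64], [90, 180, 220]],
]


def hflip(img):
    """Mirror an image left to right."""
    return [row[::-1] for row in img]


def adjust_brightness(img, factor):
    """Scale pixel intensities, clipping to the valid 0-255 range."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in img]


def oversample(images, target, seed=0):
    """Grow a small image set to `target` items with random flips and brightness changes."""
    rng = random.Random(seed)
    out = list(images)
    while len(out) < target:
        img = rng.choice(images)
        if rng.random() < 0.5:
            img = hflip(img)
        out.append(adjust_brightness(img, rng.uniform(0.7, 1.3)))
    return out


augmented = oversample(SMOKING_IMAGES, target=50)
print(len(augmented))
```

Augmentation only rearranges what is already in the pictures. A generative model can add genuinely new poses, settings and crowds, which is why the team reached for one.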

Gartner has predicted that by 2030 synthetic data will overshadow real data in AI models, especially given the growth of large language models, which were popularized by the launch of OpenAI’s ChatGPT just over a year ago.

LLMs require a steady diet of data from a tremendous number of sources for training and fine-tuning to remain current. That creates some issues for the developers of foundation models and the companies that deliver models that provide domain expertise.

Foundation models, such as GPT-4 developed by OpenAI and Llama 2 produced by Meta Platforms Inc., rely heavily on public repositories and data scraped from the internet. That reliance is increasingly a problem for LLM producers as copyright lawsuits have cropped up. Microsoft Corp. and OpenAI are facing multiple lawsuits from authors and the New York Times over copyright infringement. Universal Music Group sued LLM startup Anthropic, alleging that its ChatGPT rival Claude reproduced copyrighted song lyrics.

According to a report from the Financial Times, Microsoft, OpenAI and AI startup Cohere have already begun testing synthetic data as a way to improve LLM performance while avoiding some of these issues. “If you could get all the data that you needed off the web, that would be fantastic,” Cohere CEO Aidan Gomez told the FT. “In reality, the web is so noisy and messy that it’s not really representative of the data that you want. The web just doesn’t do everything we need.”

Gupshup’s Krishna Tammana said AI models update so quickly that synthetic data is useful for testing and to “understand the efficacy of new models.” Photo: LinkedIn

Synthetic data also features prominently in preparing and testing models before they ingest real data. Gupshup Inc., for example, provides domain-specific generative LLMs for enterprise customers, allowing them to build AI apps that use internal business knowledge to produce natural conversational experiences fused with industry-specific know-how.

“We do have synthetic data that we use with the permission of our customers,” said Krishna Tammana, chief technology officer at Gupshup. The company creates synthetic data based on existing customer data that can put updated foundation models through their paces. “The purpose is because these models are changing so fast that we need to test them against customer data to understand the efficacy of new models.”

Tammana added that synthetic data can help tune a model to reduce errors and abnormalities such as hallucinations. Every time a new foundation model comes out, it becomes a race to fine-tune it against existing customer data and prevent hallucinations, which occur when a model goes off the rails or produces completely erroneous answers.
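Testing a new foundation model against synthetic customer data can be framed as a regression test. The sketch below is hypothetical throughout. The test cases are invented, the two “models” are lookup tables standing in for real LLM calls, and scoring is a crude case-insensitive substring match. It only illustrates comparing a candidate model against the current one before switching.

```python
from typing import Callable, List, Tuple

# Hypothetical synthetic test cases modeled on customer conversations.
SYNTHETIC_CASES: List[Tuple[str, str]] = [
    ("What are your store hours?", "9am to 6pm"),
    ("Do you ship internationally?", "yes"),
    ("How long is the return window?", "30 days"),
]


def score(model: Callable[[str], str], cases: List[Tuple[str, str]]) -> float:
    """Fraction of cases whose reply contains the expected answer (case-insensitive)."""
    hits = sum(expected.lower() in model(question).lower() for question, expected in cases)
    return hits / len(cases)


# Stand-ins for real LLM calls: canned replies per model.
CURRENT_REPLIES = {
    "What are your store hours?": "We are open 9am to 6pm.",
    "Do you ship internationally?": "Yes, to most countries.",
    "How long is the return window?": "You have 30 days to return items.",
}
# The candidate "hallucinates" a wrong return policy on one case.
CANDIDATE_REPLIES = {**CURRENT_REPLIES, "How long is the return window?": "Returns are accepted for 90 days."}


def current_model(question: str) -> str:
    return CURRENT_REPLIES[question]


def candidate_model(question: str) -> str:
    return CANDIDATE_REPLIES[question]


baseline = score(current_model, SYNTHETIC_CASES)
candidate = score(candidate_model, SYNTHETIC_CASES)
print(f"current={baseline:.2f} candidate={candidate:.2f} adopt={candidate >= baseline}")
```

A real harness would use far more cases and a more forgiving scorer. The gate stays the same: adopt the new model only if it does at least as well on the synthetic data.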

Although synthetic data offers many benefits for building models and testing them before deployment, it is only as good as the models that produce it. That means it requires more verification than human-verified and human-categorized data.

In essence, synthetic data flows from “teacher AIs” and algorithms that generate it based on other data, and that data often comes from sources that could be biased or of poor quality. So if an underlying real-world dataset has a bias, the synthetic data can reflect or even exaggerate it. If engineers are aware of the bias, they can correct the dataset by “debiasing” it, but that process can itself make the synthetic data harder to work with.
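A toy example shows how a teacher passes bias along and how reweighting can counter it. In this sketch, the “generator” simply resamples invented source data in which 90% of records belong to one group, so its output inherits the same skew. The reweighted version samples each record with weight inversely proportional to its group’s size, which balances the groups. Reweighting is only one of several debiasing approaches, and the data and names here are hypothetical.

```python
import random
from collections import Counter

# Invented skewed source data: 90% of records belong to group "A".
SOURCE = ["A"] * 90 + ["B"] * 10


def naive_generator(data, n, rng):
    """Stand-in 'teacher': resamples the source as-is, so its skew carries over."""
    return [rng.choice(data) for _ in range(n)]


def reweighted_generator(data, n, rng):
    """Debias by weighting each record inversely to its group's frequency."""
    counts = Counter(data)
    weights = [1 / counts[x] for x in data]
    return rng.choices(data, weights=weights, k=n)


rng = random.Random(42)
naive = Counter(naive_generator(SOURCE, 10_000, rng))
balanced = Counter(reweighted_generator(SOURCE, 10_000, rng))
print(naive, balanced)
```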

Another problem that can arise from synthetic data is that in the cases where it is designed to preserve privacy, there is a temptation to make it as expressive as possible so it can represent the statistical shape of the data. But that also creates a problem where the synthetic data could reveal too much about the underlying private information. A balance must be struck between providing privacy and making the data useful.
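A common check for synthetic data that sits too close to the private records it was built from is the distance-to-closest-record test. For each synthetic row, it finds the nearest real row and flags the synthetic row if that distance falls below a threshold. The sketch below assumes small invented numeric records and an arbitrary threshold of 1.0. Real checks normally scale features first and choose thresholds empirically.

```python
import math


def distance_to_closest_record(synthetic, real):
    """For each synthetic row, the Euclidean distance to its nearest real row."""
    return [min(math.dist(s, r) for r in real) for s in synthetic]


def too_close(synthetic, real, threshold):
    """Synthetic rows that nearly duplicate a real record (a possible privacy leak)."""
    distances = distance_to_closest_record(synthetic, real)
    return [s for s, d in zip(synthetic, distances) if d < threshold]


# Invented (age, systolic_bp) records.
REAL = [(34.0, 118.0), (51.0, 135.0), (62.0, 142.0)]
SYNTHETIC = [(34.0, 118.5), (45.0, 126.0), (70.0, 150.0)]

flagged = too_close(SYNTHETIC, REAL, threshold=1.0)
print(flagged)  # → [(34.0, 118.5)]
```

Rows flagged this way are candidates for removal or regeneration. Raising the threshold buys privacy at the cost of some fidelity, which is the balance described above.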

Some researchers see a looming problem for foundation models, which ingest data from public sources to stay current. As more web content is created with generative AI, models increasingly take in data produced by other models and train on it.

Although ingesting synthetic data from public sources can lead to “model collapse” and cause hallucinations if unchecked, VAST’s Andy Pernsteiner said this may not be a problem yet. Photo: LinkedIn

This situation can lead to a problem dubbed “model collapse” in a paper titled “The Curse of Recursion: Training on Generated Data Makes Models Forget,” in which AI models degrade over time. As models degrade, they may hallucinate more often, fail to answer questions and perform worse overall.
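A toy simulation, not the experiments from the paper, shows why recursion can make models forget. Starting from a standard normal, each generation fits a normal distribution to a small sample drawn from the previous generation’s fit. Every fit sees only a finite sample of synthetic data, so estimation error compounds. Over many generations the fitted spread tends to drift toward zero, and rare values in the tails disappear first. The sample size and generation count are arbitrary choices.

```python
import random
import statistics


def recursive_fit(generations, n, seed=0):
    """Repeatedly fit a normal distribution to samples drawn from the previous fit.

    Generation 0 is the 'real' distribution N(0, 1); every later generation is
    fitted only to synthetic samples produced by the generation before it.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0
    history = [(mu, sigma)]
    for _ in range(generations):
        samples = [rng.gauss(mu, sigma) for _ in range(n)]
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples, mu)
        history.append((mu, sigma))
    return history


history = recursive_fit(generations=200, n=20)
for gen in (0, 50, 100, 200):
    print(gen, round(history[gen][1], 4))
```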

Although this is a potential problem, some experts say it isn’t a big one yet. “I think that model collapse probably isn’t as much of a challenge for well-curated foundational models where there is a lot of care and feeding going into them,” said VAST Data Inc. CTO Andy Pernsteiner.

As of 2023, the synthetic data generation market was only $300 million, but it’s estimated to reach $2.1 billion by 2028, according to a report from MarketsAndMarkets. Many factors are driving the market for synthetic data, especially opportunities in heavily regulated industries, where privacy rules make data hard to obtain and leave gaps in what’s needed to test and train performant AI models.
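Using the rounded figures above, the implied compound annual growth rate over the five years from 2023 to 2028 can be worked out directly. The report’s own stated rate may differ slightly because these inputs are rounded.

```latex
\text{CAGR} = \left(\frac{2.1}{0.3}\right)^{1/5} - 1 = 7^{1/5} - 1 \approx 1.476 - 1 = 0.476 \quad (\approx 47.6\%\ \text{per year})
```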

However, even as synthetic data becomes more prominent in AI models, real-world data will remain an important ground truth for training and preparing AIs and LLMs going forward, said Astronomer’s Hillion.

“You will always need real-world data, and so you will always be wrestling with the problem of managing sensitive issues such as privacy and copyright,” said Hillion. He noted that even as synthetic data drives significant gains in model training, there will be growing emphasis on where data came from and its lineage.

“Like where did this prediction come from?” he said. “Where did this model come from? Where did this dataset come from? You will always need ground truth — and that’s still not an easy problem to solve.”

AI algorithms and LLMs are only growing in size and complexity as companies such as Google, OpenAI, Anthropic, Meta and others continue to release larger and smarter models. Gartner has predicted that by this year 60% of the data used for AI would be synthetic, helping to stay ahead of demand and resolve the challenges named above in privacy, volume, efficiency, security and scope. That’s likely to make synthetic data more critical than ever in driving the AI era in the coming years.
