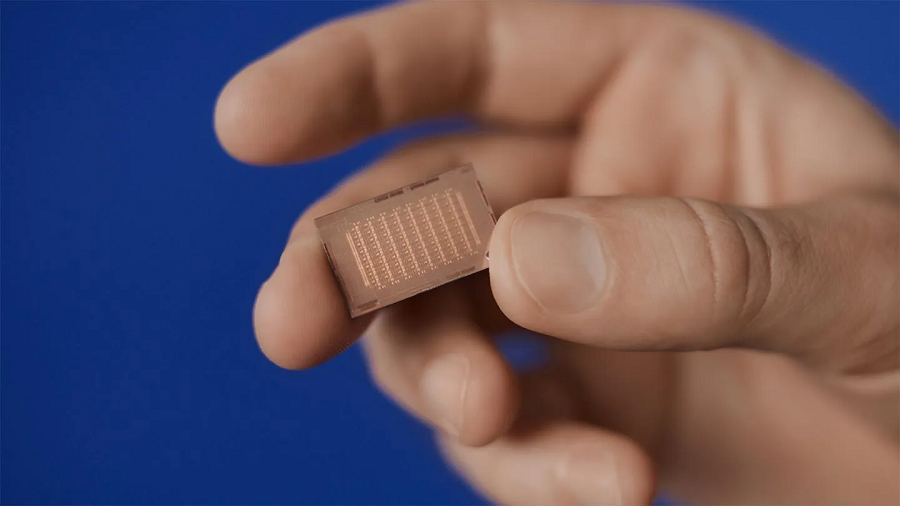

Meta Platforms Inc. today detailed a new iteration of its MTIA artificial intelligence chip that can run some workloads up to seven times faster than its predecessor.

The first version of the MTIA, or Meta Training and Inference Accelerator, made its debut last May. Despite the name, the chip isn’t optimized for AI training but rather focuses primarily on inference, or the task of running AI models in production. Meta built both the first-generation MTIA and the new version detailed today to power internal workloads such as content recommendation algorithms.

The latest iteration of the chip retains the basic design of its predecessor, the company detailed. Meta also carried over some of the software tools used to run AI models on the MTIA. The company’s engineers combined those existing building blocks with hardware enhancements that significantly increase the new chip’s performance.

Like its predecessor, the second-generation MTIA comprises 64 compute modules, known as processing elements or PEs, that are optimized for AI inference tasks. Each PE has a dedicated cache for storing data. Placing memory close to logic circuits shortens the distance data has to travel between them, which reduces latency and speeds up processing.

Meta had the original MTIA manufactured using Taiwan Semiconductor Manufacturing Co. Ltd.’s seven-nanometer process. For the second-generation MTIA, the company switched to a newer five-nanometer node. It also tripled the cache integrated into each PE, from 128 kilobytes to 384 kilobytes.
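The per-PE figures make it easy to estimate how much local cache the whole chip carries. The Python sketch below multiplies the 64 PEs by each generation’s per-PE cache size. It counts only the PE-local caches described here, not any other memory on the chip, and converts kilobytes to megabytes at 1,024 KB per MB; the constant and function names are illustrative.

```python
# Figures from the article: 64 PEs per chip, per-PE cache in kilobytes.
PE_COUNT = 64
CACHE_KB_V1 = 128
CACHE_KB_V2 = 384


def aggregate_pe_cache_kb(pe_count: int, cache_kb: int) -> int:
    """Total PE-local cache across the chip (excludes any other on-chip memory)."""
    return pe_count * cache_kb


v1_total = aggregate_pe_cache_kb(PE_COUNT, CACHE_KB_V1)
v2_total = aggregate_pe_cache_kb(PE_COUNT, CACHE_KB_V2)

# 1 MB = 1,024 KB here (binary units).
print(f"MTIA v1: {v1_total} KB ({v1_total // 1024} MB)")
print(f"MTIA v2: {v2_total} KB ({v2_total // 1024} MB)")
print(f"Growth: {v2_total / v1_total:.0f}x")
```

Under those assumptions, the first-generation chip’s PEs hold 8 MB of local cache in total and the second-generation chip’s hold 24 MB.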

The MTIA’s onboard cache is based on a memory technology called SRAM. SRAM is faster than DRAM, the most widely used type of computer memory, and that speed makes it better suited to high-performance chips.

DRAM is made of cells, tiny data storage units that each comprise a transistor and a capacitor, a miniature component that holds an electrical charge. SRAM, in contrast, uses a more complex cell design that features six transistors. This design makes SRAM significantly faster, but it also makes the memory more expensive to manufacture and less dense, which limits how much of it fits on a chip. As a result, SRAM is used mainly for processors’ onboard caches.
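One rough way to see why SRAM is costlier is to count the transistors in the storage cells themselves. The sketch below assumes the one-transistor DRAM cell and six-transistor SRAM cell described above and applies them to a 384-kilobyte cache like the one in each second-generation PE. It deliberately ignores peripheral circuitry such as decoders and sense amplifiers, and it does not count DRAM’s capacitors, so the figures illustrate the ratio rather than an actual MTIA transistor budget.

```python
# Transistors per one-bit storage cell (storage cell only, no peripheral logic).
TRANSISTORS_PER_BIT = {"DRAM": 1, "SRAM": 6}


def cell_transistors(capacity_kb: int, memory_type: str) -> int:
    """Transistors in the storage cells of a memory of the given capacity."""
    bits = capacity_kb * 1024 * 8  # kilobytes -> bits
    return bits * TRANSISTORS_PER_BIT[memory_type]


# A 384-kilobyte cache, the per-PE size in the second-generation MTIA.
for kind in ("DRAM", "SRAM"):
    print(kind, f"{cell_transistors(384, kind):,}")
```

Under these simplifications, the same 384 kilobytes needs about 3.1 million cell transistors as DRAM but about 18.9 million as SRAM.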

“By focusing on providing outsized SRAM capacity, relative to typical GPUs, we can provide high utilization in cases where batch sizes are limited and provide enough compute when we experience larger amounts of potential concurrent work,” Meta engineers detailed in a blog post today.

The 64 PEs in Meta’s MTIA chip can not only move data to and from their respective caches but also share that data with one another. An on-chip network lets the modules coordinate their work when running AI models. Meta says the network provides more than twice the bandwidth of the interconnect that linked the modules in the original MTIA, which speeds up processing.

According to the company, another contributor to the new chip’s higher performance is a set of improvements “associated with pipelining of sparse compute.” In AI inference, sparsity refers to the fact that many of the values a neural network processes, such as weights equal to zero, contribute nothing to the result. Some AI inference chips skip those values to speed up computations.
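The payoff from sparsity can be illustrated with a dot product, a basic operation inside neural network layers. The Python sketch below is a simplified illustration, not Meta’s method: it treats the unnecessary values as weights that are exactly zero and skips them, producing the same result with fewer multiplications. Production accelerators typically rely on compressed storage formats and dedicated hardware, and the article doesn’t detail how MTIA implements this. The function names and sample numbers are hypothetical.

```python
def dense_dot(weights, activations):
    """Multiply every pair, zeros included; return (result, multiplications)."""
    total = 0.0
    for w, a in zip(weights, activations):
        total += w * a
    return total, len(weights)


def sparse_dot(weights, activations):
    """Skip zero weights, which add nothing to the sum; return (result, multiplications)."""
    total = 0.0
    mults = 0
    for w, a in zip(weights, activations):
        if w == 0.0:
            continue  # a zero weight contributes nothing, so skip the work
        total += w * a
        mults += 1
    return total, mults


# Hypothetical layer slice in which five of eight weights are zero.
weights = [0.0, 0.5, 0.0, 0.0, -1.5, 0.0, 0.0, 2.0]
activations = [3.0, 2.0, 1.0, 4.0, 1.0, 5.0, 6.0, 0.5]

print(dense_dot(weights, activations))   # (0.5, 8)
print(sparse_dot(weights, activations))  # (0.5, 3)
```

Both versions return the same result, but the sparse one performs three multiplications instead of eight.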

“These PEs provide significantly increased dense compute performance (3.5x over MTIA v1) and sparse compute performance (7x improvement),” Meta detailed.

In its data centers, the company plans to deploy the new MTIA chip as part of racks that are also based on a custom design. Every rack is divided into three sections that each contain 12 hardware modules dubbed boards. Each board, in turn, holds two MTIA chips.
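The rack layout reduces to a simple multiplication. The sketch below, with hypothetical constant and function names, computes how many boards and MTIA chips a fully populated rack would hold under the configuration described above.

```python
# Rack configuration as described in the article.
SECTIONS_PER_RACK = 3
BOARDS_PER_SECTION = 12
CHIPS_PER_BOARD = 2


def boards_per_rack(sections: int, boards: int) -> int:
    """Boards in a fully populated rack."""
    return sections * boards


def chips_per_rack(sections: int, boards: int, chips: int) -> int:
    """MTIA chips in a fully populated rack."""
    return boards_per_rack(sections, boards) * chips


print(boards_per_rack(SECTIONS_PER_RACK, BOARDS_PER_SECTION))                   # 36
print(chips_per_rack(SECTIONS_PER_RACK, BOARDS_PER_SECTION, CHIPS_PER_BOARD))  # 72
```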

Meta also developed a set of custom software tools to help its developers more easily run AI models on the processor. According to the company, several of those tools were carried over from the original version of the MTIA that it detailed last year.

The core pillar of the software bundle is a system called Triton-MTIA that turns developers’ AI models into a form the chip can run. It’s partly based on Triton, an open-source AI compiler developed by OpenAI that ships with its own programming language. Triton-MTIA also integrates with other open-source technologies including PyTorch, a popular AI development framework created by Meta.

“It improves developer productivity for writing GPU code and we have found that the Triton language is sufficiently hardware-agnostic to be applicable to non-GPU hardware architectures like MTIA,” Meta’s engineers detailed. “The Triton-MTIA backend performs optimizations to maximize hardware utilization and support high-performance kernels.”
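Triton kernels are written from the perspective of a single “program instance” that processes one block of data, and the compiler decides how those blocks map onto hardware. The sketch below is a pure-Python emulation of that model for vector addition, loosely following the structure of Triton’s own vector-add tutorial. It does not use the Triton library and says nothing about how the Triton-MTIA backend works internally. In real Triton code, the offset, mask, load and store steps would be written with `tl.program_id`, `tl.arange`, `tl.load(..., mask=...)` and `tl.store`; the function names and block size below are hypothetical.

```python
import math

BLOCK_SIZE = 4  # elements handled by one program instance (illustrative value)


def add_kernel(x, y, out, n_elements, pid, block_size):
    """Emulates one program instance: adds one contiguous block of elements.

    Mirrors the shape of a Triton kernel: compute offsets from the program ID,
    mask out-of-range positions, then load, compute and store.
    """
    offsets = [pid * block_size + i for i in range(block_size)]
    mask = [off < n_elements for off in offsets]
    for off, in_bounds in zip(offsets, mask):
        if in_bounds:
            out[off] = x[off] + y[off]


def launch_add(x, y, block_size=BLOCK_SIZE):
    """Emulates a kernel launch over a 1-D grid of program instances."""
    n = len(x)
    out = [0] * n
    grid = math.ceil(n / block_size)  # number of program instances
    for pid in range(grid):  # hardware would run these in parallel; here they run in sequence
        add_kernel(x, y, out, n, pid, block_size)
    return out


print(launch_add([1, 2, 3, 4, 5, 6], [10, 20, 30, 40, 50, 60]))  # [11, 22, 33, 44, 55, 66]
```

The mask handles the final, partially filled block, so the input length doesn’t need to be a multiple of the block size.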