CLOUD


The executive who helped turn spare computer capacity into the largest cloud business in the world returned to the keynote stage at AWS re:Invent today to announce Amazon.com Inc.’s bid to reshape the world of artificial intelligence models.

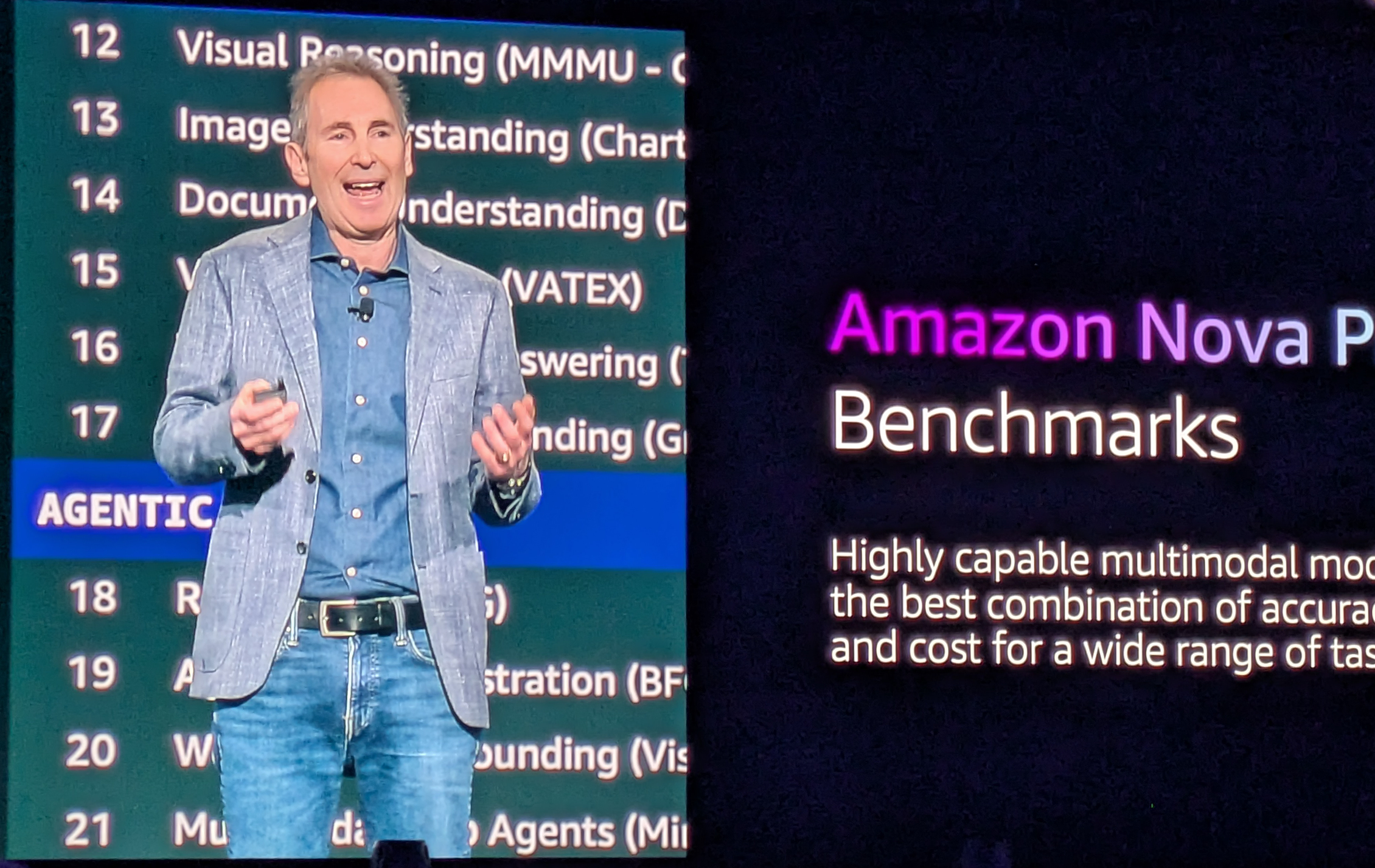

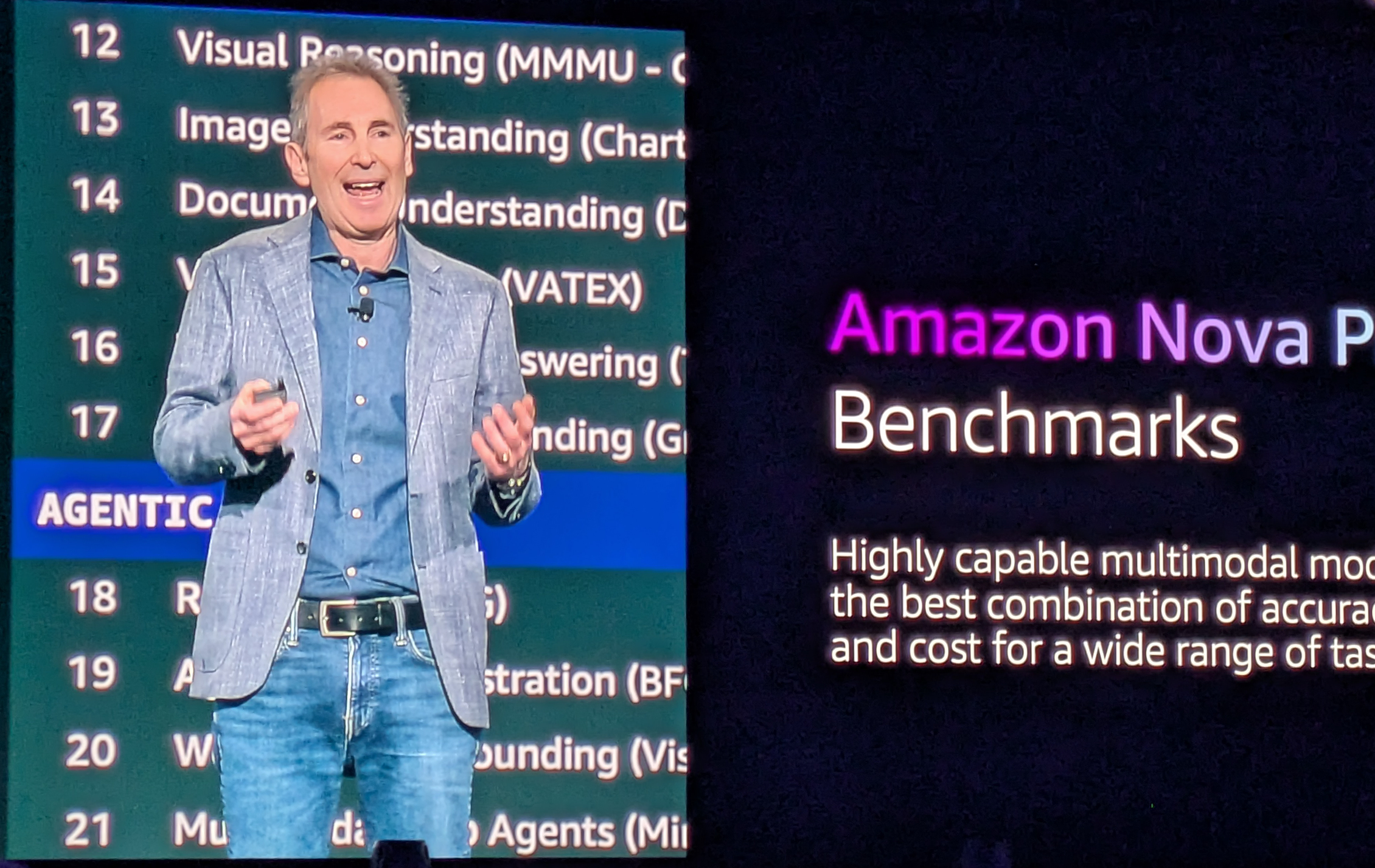

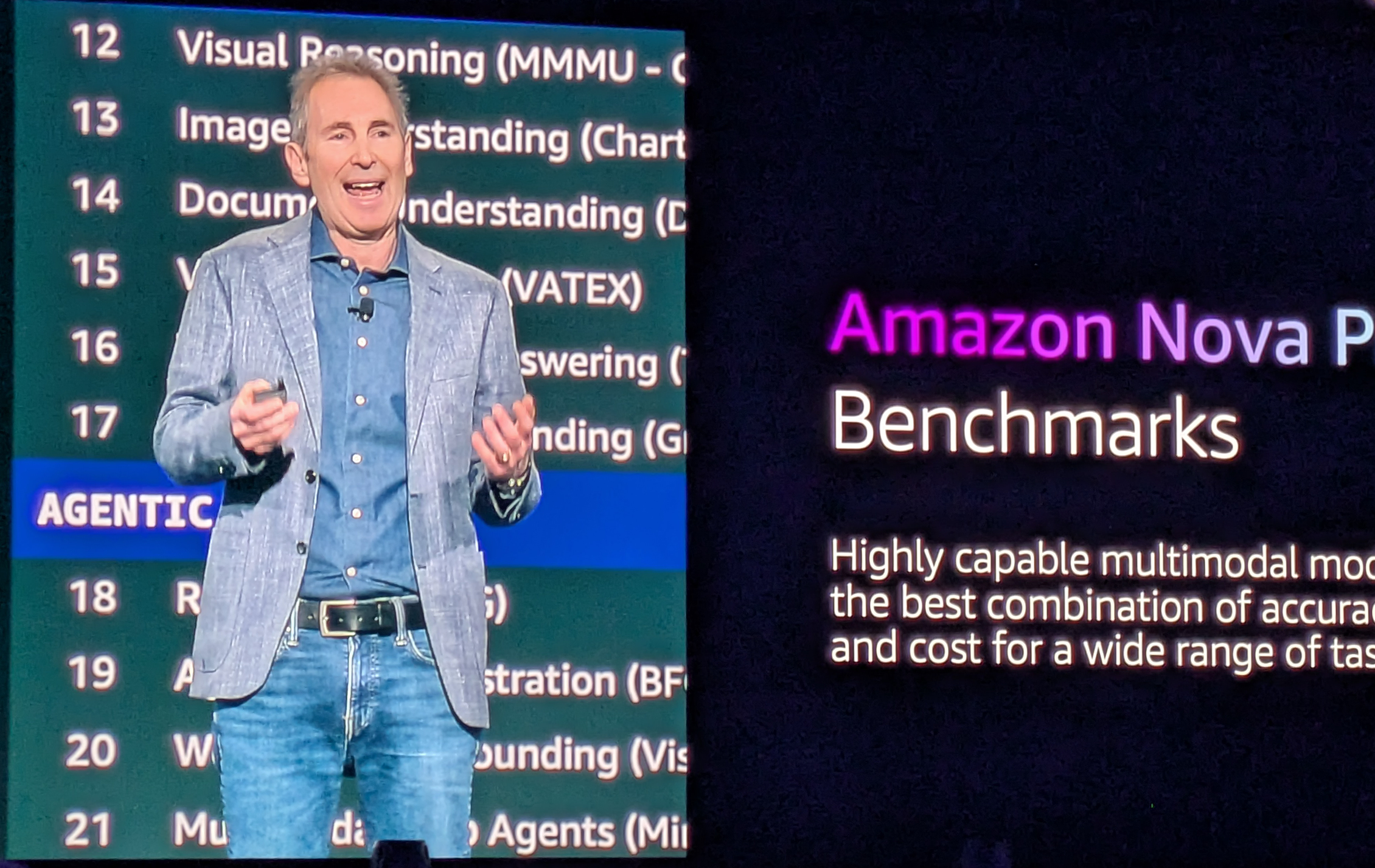

Andy Jassy (pictured), who succeeded Jeff Bezos as chief executive of retail giant Amazon in 2021 after previously guiding Amazon Web Services Inc., made his first onstage appearance in three years at the AWS cloud conference in Las Vegas to announce the launch of six new foundation models. Jassy positioned the offerings, branded as Amazon Nova, as “new state-of-the-art foundation models that deliver intelligence and industry-leading price performance.”

The models will support processing of text, images and video across a range of multimodal tasks. The launch grew out of Amazon’s increasing recognition that its enterprise customers want a variety of AI options.

“This kind of surprised us,” Jassy said. “We keep learning the same lesson over and over again. There is never going to be one tool to rule the world.”

Jassy’s point about multiple tools was amplified by AWS CEO Matt Garman, who unveiled a number of new offerings during his nearly three-hour keynote presentation. Although Garman highlighted AWS advancements in compute, storage, developer tools and databases, the company’s AI news, and how AWS plans to support enterprise interest in this rapidly advancing field, headlined the conference’s opening day.

Foremost among these was a set of enhancements for Amazon Bedrock, the company’s fully managed service for building and scaling generative AI applications with high-performing foundation models. All six models announced today will be integrated into Bedrock.

New capabilities for Bedrock include Automated Reasoning checks to guard against AI hallucinations, tools for building and orchestrating multiple AI agents, and Model Distillation, which transfers knowledge from larger models to smaller ones that run faster and cost less.

A clear focus of today’s AI announcements from AWS was inference, the process AI models use to make predictions or draw conclusions. “My view is that generative AI inference is going to be a core building block for every single application,” Garman said. “Every app is going to use inference in some way. To do that, you will need a platform that can deliver inference at scale. This is why we built Bedrock.”

Amazon itself has been actively using Bedrock and other AI tools to run key aspects of its business, a point Jassy reinforced during his appearance on Tuesday. The online retailer rebuilt a chatbot so that it could anticipate whether a customer call might be about a return and sense whether the ensuing dialogue might be heading toward frustration.

According to Jassy, two primary factors are driving AI adoption. “The most success we have seen in companies all over the world is in cost avoidance and productivity,” Jassy said. “As you get to scale in generative AI applications the cost of compute really matters.”

Scale and cost have emerged as key drivers behind the AWS approach to AI. As the corporate world spends billions of dollars to develop AI infrastructure, AWS is betting that workload performance will ultimately dictate the demands placed on cloud computing.

In a re:Invent presentation on Monday evening, Peter DeSantis, senior vice president of AWS Utility Computing, outlined the company’s view that the future of AI infrastructure lies in boosting the capacity of a single system, or “scaling up,” rather than building identical systems and distributing workloads across multiple machines, a process known as “scaling out.”

“AI workloads are not scale-out workloads, they are scale-up workloads,” DeSantis said. “Our path to building larger models is to build more powerful servers.”

The company’s announcements this week have revealed how AWS plans to execute this vision through its in-house chip development efforts. AWS today announced general availability of Trainium2-powered Elastic Compute Cloud (EC2) instances and UltraServers that will enable users to train and deploy AI models with greater performance and cost efficiency.

“Trainium2 is not only our most powerful AI server, it’s also designed to scale up faster than any server we’ve ever had,” DeSantis noted.

Amazon’s year has been marked by cloud growth, and the firm’s artificial intelligence services have been generating billions of dollars in annualized revenue, according to comments Jassy made during the company’s earnings review in October. Today’s releases are clearly designed to capitalize on what AWS hopes will be a wave of momentum as its extensive customer base seeks a return on its AI investments.

“We prioritize technology that we think is really going to matter for customers,” Jassy said. “We’re going to give you the broadest and best functionality you can find anywhere.”
