Nvidia Corp. late Monday announced the launch of the DGX Spark, a compact desktop computer optimized to run artificial intelligence models.

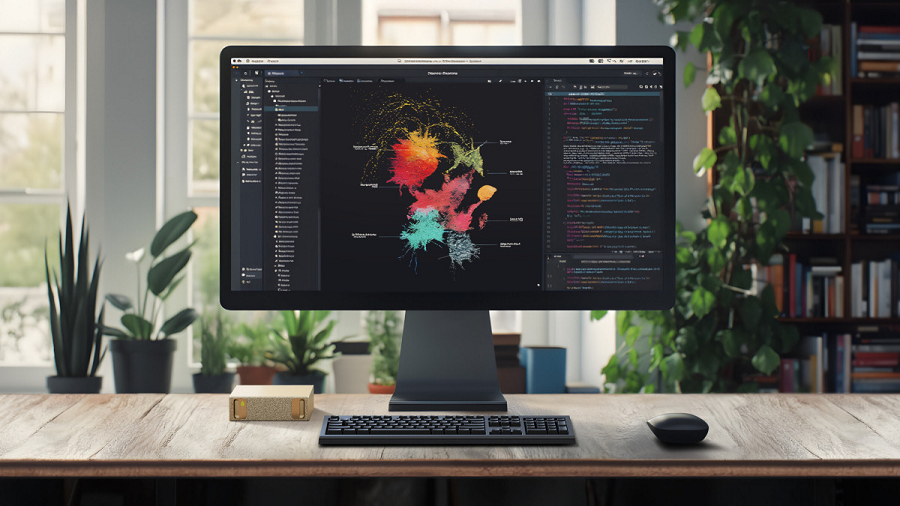

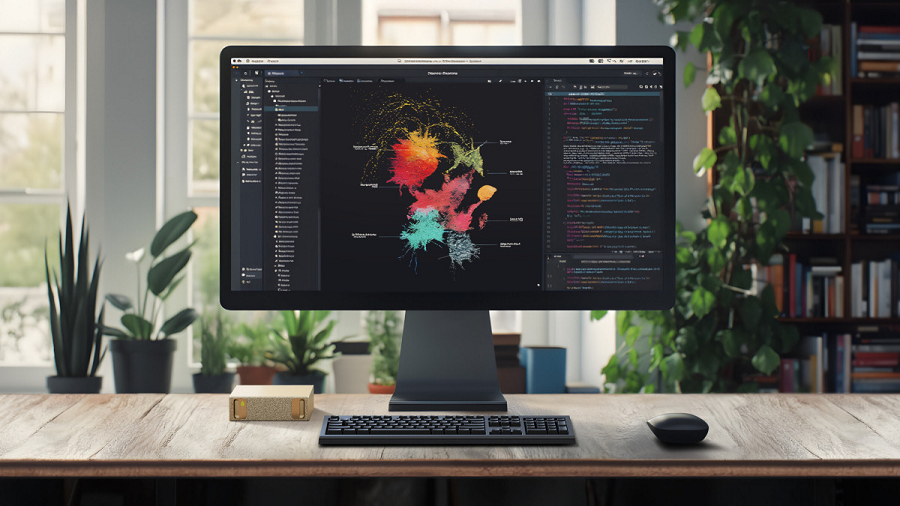

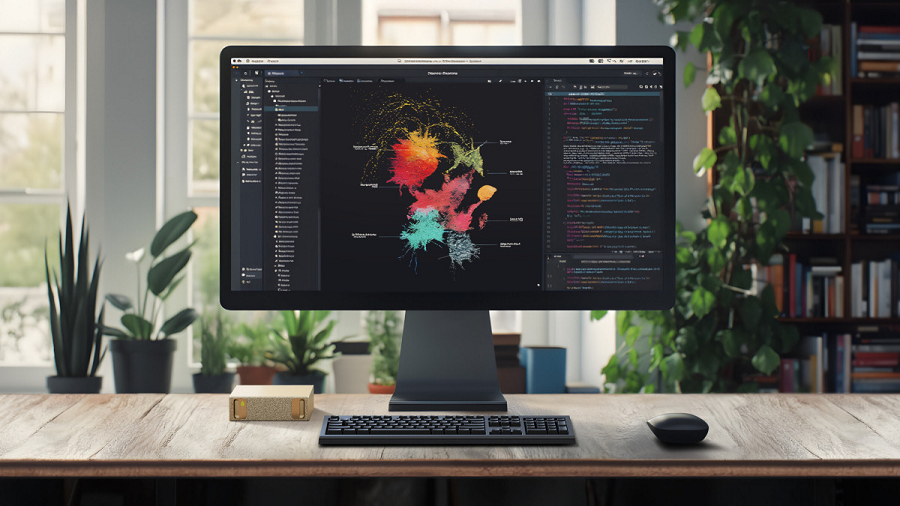

Software teams typically use cloud infrastructure to fine-tune neural networks. Setting up an off-premises training environment takes time and effort, which slows down development. The DGX Spark lets developers fine-tune models locally instead, which removes that bottleneck and avoids the cybersecurity risks associated with spinning up new cloud infrastructure.

The device, which is a fraction of the size of a standard desktop, is powered by a processor called the GB10 Grace Blackwell Superchip. It contains a graphics processing unit and a 20-core central processing unit.

The DGX Spark’s GPU is based on Nvidia’s latest Blackwell chip architecture. Blackwell is significantly more power efficient than the company’s previous-generation Hopper architecture. It can also provide 30% higher performance when processing FP32 and FP64 numbers, the 32-bit and 64-bit floating-point formats commonly used by scientific applications such as simulation tools.
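
For readers unfamiliar with the two formats, the sketch below uses Python's standard `struct` module to store the same value as FP32 and FP64 and read it back. It only illustrates the storage size and precision difference between the two IEEE 754 formats; it says nothing about how Blackwell itself processes them.

```python
import struct

def roundtrip(value: float, fmt: str) -> float:
    """Pack a float into the given binary format, then unpack it again."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]

value = 0.1
fp32 = roundtrip(value, "<f")  # "<f": little-endian 32-bit float (FP32)
fp64 = roundtrip(value, "<d")  # "<d": little-endian 64-bit float (FP64)

print(struct.calcsize("<f"), "bytes per FP32 value")  # 4 bytes per FP32 value
print(struct.calcsize("<d"), "bytes per FP64 value")  # 8 bytes per FP64 value
print(f"FP32 rounding error: {abs(fp32 - value):.1e}")
print(f"FP64 rounding error: {abs(fp64 - value):.1e}")
```

FP64 keeps roughly twice as many significant digits as FP32 at twice the storage cost, which is why precision-sensitive simulations tend to use it.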

The DGX Spark’s 20-core CPU, in turn, is based on Arm Holdings plc blueprints. Ten of its cores use a performance-optimized design, while the other ten trade some speed for lower energy usage. Nvidia reportedly developed the CPU in collaboration with MediaTek Inc.

The DGX Spark’s GPU and CPU are linked by an Nvidia-developed interconnect called NVLink-C2C. It can move data between the chips at a rate of 600 gigabits per second. Nvidia says the technology is five times faster than PCIe, the interconnect typically used to connect CPUs and GPUs.
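
Taking the figures above at face value, a back-of-the-envelope calculation shows what the speed difference means for moving a block of data between the CPU and GPU. The 16 GB payload is a hypothetical example, and the sketch ignores protocol overhead and latency, so real transfer times would be longer.

```python
def transfer_seconds(gigabytes: float, gigabits_per_second: float) -> float:
    """Time to move a payload, ignoring protocol overhead and latency."""
    return gigabytes * 8 / gigabits_per_second  # 8 bits per byte

NVLINK_C2C_GBITS = 600.0           # link rate as quoted in the article
PCIE_GBITS = NVLINK_C2C_GBITS / 5  # implied by the "five times faster" claim

payload_gb = 16.0  # hypothetical payload size, in gigabytes

nvlink_s = transfer_seconds(payload_gb, NVLINK_C2C_GBITS)
pcie_s = transfer_seconds(payload_gb, PCIE_GBITS)
print(f"NVLink-C2C: {nvlink_s:.2f} s, PCIe: {pcie_s:.2f} s")
```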

The CPU and GPU share a 128-gigabyte pool of LPDDR5x memory. When a workload is split across chips that each have their own memory, each chip usually has to keep a separate copy of the data it processes. A shared memory pool reduces the need for those copies, which saves memory and the time spent moving data between chips, and thereby boosts processing efficiency.
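
As a loose software analogy only (it does not model how the GB10 hardware actually manages memory), the Python sketch below contrasts two consumers that each hold a private copy of a buffer with two consumers that share views of one buffer. The variable names are hypothetical labels chosen to mirror the CPU/GPU discussion.

```python
# A loose analogy: "tensor" is a stand-in buffer, not real model data.
tensor = bytearray(range(8))

# Separate memories: each consumer keeps its own copy of the data.
cpu_copy = bytes(tensor)
gpu_copy = bytes(tensor)

# Shared pool: both consumers read the same underlying buffer.
cpu_view = memoryview(tensor)
gpu_view = memoryview(tensor)

tensor[0] = 255  # one update to the original data

print(cpu_copy[0], gpu_copy[0])  # 0 0: the copies are stale and must be refreshed
print(cpu_view[0], gpu_view[0])  # 255 255: the views see the update immediately
print("bytes held by copies:", len(cpu_copy) + len(gpu_copy))
```

The copies double the memory held and must be re-synchronized after every change, while the shared views cost nothing extra; that is the overhead a unified memory pool aims to avoid.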

Nvidia says that the DGX Spark provides one petaflop of performance when processing FP4 numbers, a 4-bit floating-point format that AI models can use to carry out calculations. One petaflop corresponds to a thousand trillion calculations per second. According to Nvidia, that’s enough to fine-tune models with up to 70 billion parameters.
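
A rough calculation shows why a 4-bit format matters for the model sizes Nvidia cites. The sketch counts only the memory taken by model weights at different precisions, treating the 128 GB pool as decimal gigabytes for simplicity. Actual fine-tuning also needs memory for activations, gradients and optimizer state, which depends on the fine-tuning method and is not modeled here.

```python
POOL_GB = 128  # DGX Spark shared memory, per the article

def weight_gigabytes(params: float, bits_per_param: int) -> float:
    """Memory needed for model weights alone, in decimal gigabytes."""
    return params * bits_per_param / 8 / 1e9

PARAMS = 70e9  # the 70-billion-parameter ceiling Nvidia cites
footprints = {name: weight_gigabytes(PARAMS, bits)
              for name, bits in [("FP4", 4), ("FP8", 8), ("FP16", 16)]}

for name, gb in footprints.items():
    print(f"{name}: {gb:.0f} GB of weights, fits in {POOL_GB} GB: {gb <= POOL_GB}")
```

At FP4 the weights take 35 GB, leaving headroom in the 128 GB pool, while FP16 weights alone (140 GB) would not fit.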

The DGX Spark ships with a ConnectX-7 network card that powers two Ethernet ports. Those ports make it possible to link two of the computers into a miniature AI cluster. DGX Spark devices configured in that manner can perform inference with models containing up to 405 billion parameters.
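
The same weights-only arithmetic suggests why two linked units are needed for the larger model. The sketch assumes FP4 weights and an even split across nodes; the article does not say how the model is actually partitioned, and activations, KV cache and other runtime memory are ignored.

```python
import math

NODE_MEMORY_GB = 128  # memory per DGX Spark, per the article

def nodes_needed(params: float, bits_per_param: int, node_gb: float) -> int:
    """Smallest number of nodes whose combined memory holds the weights."""
    weights = params * bits_per_param / 8 / 1e9  # decimal gigabytes
    return math.ceil(weights / node_gb)

weights_gb = 405e9 * 4 / 8 / 1e9  # 405B parameters at FP4
print(f"FP4 weights: {weights_gb} GB")
print("nodes needed:", nodes_needed(405e9, 4, NODE_MEMORY_GB))
print("per-node share with two nodes:", weights_gb / 2, "GB")
```

Roughly 202.5 GB of FP4 weights exceeds one unit's 128 GB but leaves about 101 GB per unit when split across two.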

Nvidia will ship the device with its AI software stack preinstalled. The bundle includes AI development tools and NIM microservices, which package neural networks optimized to run on the company’s hardware. Each model ships in a container that also provides observability software, customization options and other features.
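
NIM containers for large language models are documented as exposing an OpenAI-compatible HTTP API. The sketch below builds, but does not send, a chat-completion request using only Python's standard library. The local URL, port 8000 and `MODEL_NAME` are assumptions to adjust for the container actually running, and `build_chat_request` is a hypothetical helper.

```python
import json
import urllib.request

# Assumptions: a NIM LLM container is running locally and serving its
# OpenAI-compatible API on port 8000; MODEL_NAME is a hypothetical placeholder.
NIM_URL = "http://localhost:8000/v1/chat/completions"
MODEL_NAME = "your-model-name"

def build_chat_request(prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 64,
    }
    return urllib.request.Request(
        NIM_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

request = build_chat_request("Summarize what DGX Spark is in one sentence.")
# To send it against a running container:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response)["choices"][0]["message"]["content"])
```

Because the request follows the OpenAI chat-completions shape, existing OpenAI-compatible clients can usually be pointed at the same URL; the documentation for the specific NIM container is the authority on its endpoints and model names.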

Nvidia will start shipping DGX Spark tomorrow for $3,999. Dell Technologies Inc., Acer Inc. and several other computer makers plan to launch their own versions of the device.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.