EMERGING TECH

Salesman Tom is about to hit the road for a monthlong journey to visit customers and sales prospects, a trip that will take him to 50 destinations across the United States.

Tom needs to optimize the trip according to three priorities. He must first maximize the time he spends with each client. Second, he must complete the trip in the shortest possible distance. Finally, he needs to do it at the lowest possible cost.

This variation on the classic “traveling salesman problem” would take one of today’s off-the-shelf computer servers decades to solve definitively, if it could solve the problem at all. A quantum computer could knock it off in seconds.
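To see why the problem blows up, consider only the distance part of Tom's trip. If he starts from a fixed city and a route counts the same in either direction, the number of distinct tours through n cities is (n − 1)!/2. The minimal Python sketch below (the function name is hypothetical) counts them. It measures the search space that brute-force enumeration would face, not the running time of the best classical solvers, which prune most of those tours.

```python
import math

def distinct_tours(n_cities: int) -> int:
    """Distinct round trips through n cities, with the start city fixed and a
    route and its reverse counted as the same tour."""
    if n_cities < 3:
        return 1
    return math.factorial(n_cities - 1) // 2

print(distinct_tours(5))              # 12
print(f"{distinct_tours(50):.2e}")    # 3.04e+62
```

For 50 cities the count is roughly 3 × 10^62, so checking every tour one at a time is out of reach for any computer.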

Route optimization is one of the sweet spots of this technology, but so are modeling chemical compounds, spotting patterns in DNA sequences, optimizing financial portfolios and forecasting weather. Fleets of autonomous vehicles will be managed far more safely and efficiently by quantum computers than by traditional ones. The technology could revolutionize risk analysis in the insurance industry.

In short, the more complex the problem and the greater the number of variables involved, the better quantum computing looks. That’s one reason the field has acquired an almost mythical aura over the more than 35 years that scientists have pursued it.

Now, there’s mounting evidence that quantum computing is tantalizingly close to reality. Investment capital is flowing into the market, and some quantum computer developers are talking about showing prototypes of supercomputer-grade systems as soon as next year.

Quantum startup IonQ Inc. said last month that it has raised $22 million toward its goal of producing general-purpose quantum processors within 12 months. It’s the second startup in this field to score significant funding this year. Rigetti Computing Inc. said in March that it has raised $64 million and expects to demonstrate a machine next year that will outperform the world’s largest supercomputers in some tasks.

IBM Corp. announced an initiative to build a commercial quantum platform in March and followed that with a more powerful version of its public cloud-based quantum processor in May. The move closely followed an announcement by D-Wave Systems Inc. that it sold a giant quantum machine to an unspecified customer for $15 million. And in July, a report said Google Inc. also plans to offer researchers access to its new quantum computing technology via the cloud.

The pace of activity this year is all the more remarkable given that, as recently as three years ago, experts were debating whether quantum computers could ever even be built. The consensus now is that it’s just a matter of time, and not that much time either. “The major obstacles toward coherent, capable systems are pretty much worked out,” said Andrew Bestwick, director of engineering at Rigetti. “The major challenges now are how to take something that’s been demonstrated on small systems and implement it in a highly scalable form.”

That could also open up a lucrative new market. Currently it’s small, but it’s growing quickly. Market Research Future expects quantum computer sales to surge 24 percent annually to nearly $2.5 billion in 2022. Market Research Media Ltd. is even more optimistic, forecasting $5 billion in annual sales in 2020.

The timing couldn’t be better. Moore’s Law, the observation that the number of transistors on an integrated circuit doubles roughly every two years, has propelled the computer industry for more than 50 years, but it appears to be finally running out of steam. Quantum computers could kick off a new era of growth in computing power.

It’s badly needed. The Internet of Things promises to make networks dramatically more complex, requiring entirely new approaches to network management, a task well-suited to quantum technology. Then there’s the explosion of IoT data that will require new approaches to analyzing it all. And organizations that are searching for new efficiencies and revenue in the quest for digital transformation will find that quantum opens up vast new opportunities.

Does that mean it’s time to hang a “for sale” sign on those Intel servers? Not yet, if ever. People on the front lines of quantum computer development say users can expect to see tangible benefits within the next few years, but the vaunted machines that can crack 256-bit encryption codes in seconds are still a decade or more away. D-Wave is the only company that’s currently shipping a commercial quantum computer, and experts dispute whether its technology is actually “true” quantum. IBM, Google and Microsoft Corp. are among the big players that have established their own initiatives, but the market is fragmented, leaderless and still squabbling over architectural details.

But it’s not too early to start thinking about the problems that quantum technology could tackle: tasks of exponential complexity such as optimizing transportation routes, mapping molecular interactions, optimizing stock market portfolios and forecasting the weather. That requires understanding how quantum computing is different.

The principles of quantum mechanics are so baffling to the average person that experts tend to fall back on describing the computing technology in terms of what it isn’t, which is traditional computing.

Mainstream digital computers are based on binary arithmetic, in which numbers are expressed as combinations of ones and zeros. Performing calculations using these bits, or binary digits, is painfully slow, but it has the advantage of lending itself well to transistors, which exist in either an on or an off state. When you throw enough transistors at a problem, they can do that binary arithmetic at blinding speed. That’s how binary digital computers work.

Image: Wikimedia Commons

They’re great at performing simple calculations against large quantities of data quickly, but they’re poorly adapted to solving problems with multiple interdependent variables, such as the traveling salesman problem. That’s where quantum computers shine.

Qubits are the quantum equivalent of bits, but they’re a lot more complex. Whereas a bit is either 0 or 1, a quantum bit can be 0, 1 or a blend of both at once. That blend can be weighted half and half, 9/16ths toward 1, 123/128ths toward 1 or any other proportion, and it also carries a phase. If a bit is a switch that sits at one end or the other of a single line, then a qubit is a point that can sit anywhere on the surface of a sphere with x, y and z axes. This concept is commonly represented as a Bloch sphere (pictured). That “superposition” characteristic makes it possible for qubits to represent complex problem sets that binary computers can’t approach.
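The standard way to make the Bloch-sphere picture concrete is to write a qubit's state as two complex amplitudes: cos(θ/2) for 0 and e^(iφ)·sin(θ/2) for 1, where θ and φ are the polar and azimuthal angles of the point on the sphere. The minimal Python sketch below (function names are illustrative, not from any quantum SDK) converts angles to amplitudes, computes the probability of reading a 1, and maps the amplitudes back to x, y and z coordinates.

```python
import cmath
import math

def bloch_state(theta: float, phi: float) -> tuple[complex, complex]:
    """Amplitudes (alpha, beta) for the point at polar angle theta and
    azimuthal angle phi on the Bloch sphere."""
    alpha = complex(math.cos(theta / 2), 0.0)
    beta = cmath.exp(1j * phi) * math.sin(theta / 2)
    return alpha, beta

def prob_one(state: tuple[complex, complex]) -> float:
    """Probability that a measurement reads 1: |beta|**2 (the Born rule)."""
    _, beta = state
    return abs(beta) ** 2

def bloch_vector(state: tuple[complex, complex]) -> tuple[float, float, float]:
    """Map amplitudes back to (x, y, z) coordinates on the sphere."""
    alpha, beta = state
    cross = alpha.conjugate() * beta
    return 2 * cross.real, 2 * cross.imag, abs(alpha) ** 2 - abs(beta) ** 2

# A qubit weighted 9/16ths toward 1: choose theta so sin^2(theta/2) = 9/16.
theta = 2 * math.asin(3 / 4)
q = bloch_state(theta, phi=math.pi / 3)
print(round(prob_one(q), 4))              # 0.5625
x, y, z = bloch_vector(q)
print(round(x * x + y * y + z * z, 6))    # 1.0
```

Every state built this way lands on the surface of the unit sphere, which is why the x² + y² + z² check comes out to 1.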

The other important distinction of quantum computers is something called “entanglement,” the ability of qubits to become linked so that their states can no longer be described independently; measuring one tells you something about the others. Because each added qubit doubles the size of the system’s state space, quantum computers grow in power exponentially as qubits are added. So, in theory, a 200-qubit system is 2^100 (about 10^30) times as powerful as a 100-qubit system. By comparison, classical digital computers grow linearly. Experts generally agree that quantum computers of 30 or fewer qubits can still be outperformed by classical digital computers. But once systems grow beyond 30 qubits, quantum starts to pull away.
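Entanglement has a precise meaning that a small calculation can show. A two-qubit state is described by four amplitudes, one each for 00, 01, 10 and 11, and it is unentangled exactly when it can be written as a product of two single-qubit states, which for these amplitudes means c00·c11 = c01·c10. The pure-Python sketch below (helper names are hypothetical) checks that condition for an ordinary product state and for the entangled Bell state, then shows that each added qubit doubles the number of amplitudes, which is where the exponential growth comes from.

```python
import math

def kron(u: list[complex], v: list[complex]) -> list[complex]:
    """Tensor (Kronecker) product of two state vectors."""
    return [a * b for a in u for b in v]

def is_entangled_2q(state: list[complex], tol: float = 1e-12) -> bool:
    """A two-qubit pure state [c00, c01, c10, c11] is a product of two
    single-qubit states exactly when c00 * c11 == c01 * c10."""
    c00, c01, c10, c11 = state
    return abs(c00 * c11 - c01 * c10) > tol

plus = [1 / math.sqrt(2), 1 / math.sqrt(2)]              # (|0> + |1>)/sqrt(2)
zero = [1.0, 0.0]                                        # |0>
product = kron(plus, zero)                               # not entangled
bell = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]    # (|00> + |11>)/sqrt(2)
print(is_entangled_2q(product), is_entangled_2q(bell))   # False True

# Each added qubit doubles the number of amplitudes needed.
state = [1.0]
for _ in range(10):
    state = kron(state, zero)
print(len(state))   # 1024, i.e. 2**10
```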

“You’d need a fairly large [IBM] Power system to simulate a 30-qubit device. When you get to 40 or 45, you need the world’s largest supercomputer,” said Scott Crowder, vice president and chief technology officer of quantum computing, technical strategy and transformation at IBM.
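Crowder's figures line up with a back-of-the-envelope estimate. A brute-force classical simulation stores all 2^n amplitudes of an n-qubit state; assuming each is a double-precision complex number (16 bytes), it needs 16 × 2^n bytes of memory. The sketch below is only that naive estimate (production simulators use compression and other tricks), but it shows why each extra qubit doubles the bill.

```python
BYTES_PER_AMPLITUDE = 16  # one double-precision complex number (two 8-byte floats)

def statevector_bytes(n_qubits: int) -> int:
    """Memory for a full state vector: 2**n amplitudes of 16 bytes each."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE

for n in (30, 40, 45):
    tib = statevector_bytes(n) / 2**40
    print(f"{n} qubits: {tib:g} TiB")
```

Under these assumptions that works out to 16 GiB at 30 qubits, 16 TiB at 40 and 512 TiB at 45.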

But qubits also present some big challenges, not least of which is reliability. Computers make mistakes all the time for reasons that include hardware failure, environmental factors and variations in power. In digital computers, these errors are easily caught with simple validation techniques like parity bits and checksums, and fixed with error-correcting codes or by resending the data.
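A checksum shows how cheap classical error detection is. The sketch below uses a simple additive checksum (sum of bytes modulo 256), an illustrative choice rather than what any particular hardware uses, on a made-up message. Flipping a single bit changes the sum, so the receiver knows the data is damaged and can ask for it again.

```python
def checksum(data: bytes) -> int:
    """Simple 8-bit additive checksum: the sum of all bytes modulo 256."""
    return sum(data) % 256

message = b"route: Boston to Denver"   # hypothetical payload
sent_sum = checksum(message)

corrupted = bytearray(message)
corrupted[3] ^= 0b0000_0100            # flip a single bit in transit
print(checksum(bytes(corrupted)) == sent_sum)  # False: the error is detected
print(checksum(message) == sent_sum)           # True: intact data passes
```

A checksum like this says that something went wrong but not which bit flipped; pinpointing and repairing the error takes an error-correcting code.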

Qubits are far more complex and hence more fragile than bits. In some quantum models, qubits are prone to changing state quickly, resulting in frequent errors. The question of how to solve this so-called “decoherence” problem has vexed developers for years. “If you have a one-second calculation and your coherence disappears in 100 microseconds, then you won’t have the power of quantum computing,” said David Moehring, CEO of IonQ. “The result will be wrong.” Compounding the problem is the interdependence of qubits, which makes measuring and isolating errors more difficult. In fact, the very process of measuring a qubit destroys the quantum state, said IBM’s Crowder.
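Moehring's numbers can be put in perspective with a deliberately simple model. Assuming coherence decays exponentially with a time constant equal to the 100-microsecond figure in his example (real devices have several distinct decay processes), the fraction remaining after time t is e^(−t/T). The Python sketch below is that toy model, not a description of IonQ's or anyone else's hardware.

```python
import math

def remaining_coherence(elapsed_s: float, coherence_time_s: float) -> float:
    """Fraction of coherence left after elapsed_s seconds, under a simple
    exponential-decay model with time constant coherence_time_s."""
    return math.exp(-elapsed_s / coherence_time_s)

T = 100e-6  # 100 microseconds, the figure in Moehring's example
print(round(remaining_coherence(100e-6, T), 3))  # 0.368
print(remaining_coherence(1.0, T))               # 0.0 (e**-10000 underflows)
```

In this model about 37 percent of the coherence survives 100 microseconds, while after a full second the remainder is so small that it underflows to zero, which is Moehring's point.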

IBM announced what it called a major breakthrough in solving problems related to qubit error correction and scalability in late 2015. It has been successful with an approach that uses qubits to fix each other’s mistakes, but the solution isn’t perfect, since 1,000 or more qubits are needed to keep watch over a single one. That overhead not only hurts performance but also limits scalability.
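The core idea, spending extra physical resources to protect one logical unit, is easiest to see in its classical form. The sketch below is a three-bit repetition code with majority voting and is a classical analogy only: quantum codes cannot simply copy a qubit (the no-cloning theorem forbids it), so they instead measure parities among qubits without reading their values, and they need far more overhead, as IBM's 1,000-to-one figure suggests.

```python
def encode(bit: int) -> list[int]:
    """Store one logical bit as three physical copies."""
    return [bit] * 3

def decode(codeword: list[int]) -> int:
    """Majority vote: recovers the logical bit if at most one copy flipped."""
    return 1 if sum(codeword) >= 2 else 0

word = encode(1)
word[2] ^= 1          # one physical bit flips
print(word)           # [1, 1, 0]
print(decode(word))   # 1: the error is corrected
```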

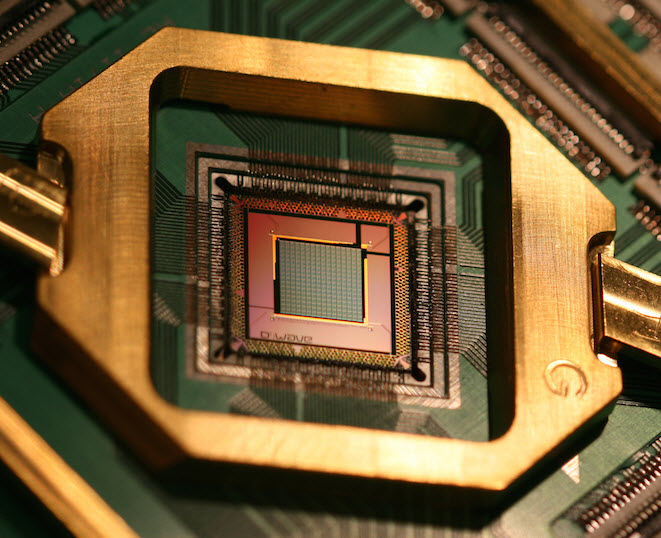

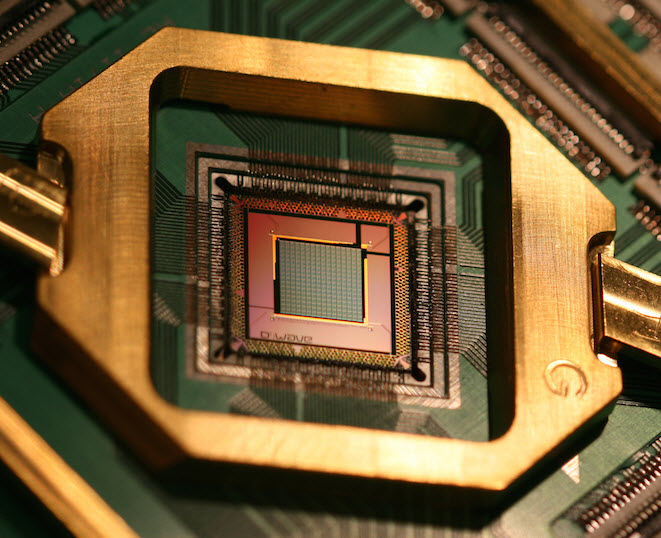

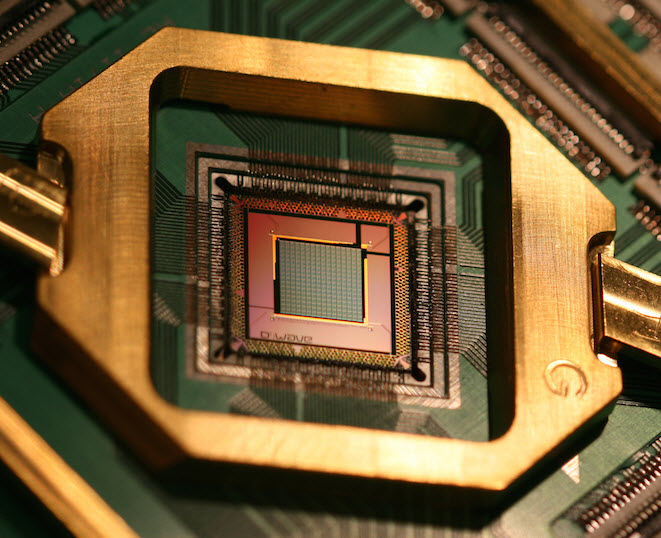

Image: D-Wave

Another problem developers are struggling to solve is how to manage heat. Many leading designs, annealing and gate-based alike, require supercooling, which limits them to data center environments. For example, D-Wave’s 700-cubic-foot processing engine (pictured) houses cryogenic refrigeration equipment that chills its quantum processor to a temperature that the company says is 180 times colder than interstellar space. IBM and Rigetti are also pursuing superconductor-based systems that require extremely low temperatures.

IonQ is taking a different approach called “trapped ion,” which uses electromagnetic fields to hold individual ions in place and lasers to cool and manipulate them. That makes the qubits easier to control, so systems should scale more predictably. The company predicts trapped-ion technology will enable its computers to operate at room temperature, although it hasn’t yet shown a prototype.

One common misconception about quantum computers is that they will displace digital ones. In fact, each architecture is well suited to different kinds of problems. For most common data processing tasks involving basic arithmetic, digital computers will continue to be a superior solution for many years to come.

Quantum computers are better suited to problems whose complexity grows exponentially with the number of variables. IonQ’s Moehring cites fertilizer production as an example of a problem that quantum computers will be able to tackle and classical computers can’t. About 2 percent of the world’s energy resources go to fertilizer production, he said, an expensive process that nature handles much more deftly. Understanding how to duplicate the natural process requires molecular modeling, a problem that grows exponentially with the number of potential interactions. “Even for a molecule that’s modest in size, the world’s most powerful classical computer can’t model its interactions,” he said, “and they never will, because classical computers can’t scale enough.”

IBM Quantum Computing Lab (Image: IBM)

Another misconception is that there is only one kind of quantum computer. In fact there are several, with most commercial activity centered on three architectures.

The market is so new that little consensus has emerged on which approach is best, and participants still vigorously debate the definition and merits of “pure” quantum computing. The one company that is currently shipping a commercial quantum processor – D-Wave – has adopted quantum annealing, a model that inspires considerable debate.
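Quantum annealers such as D-Wave's look for the lowest-energy answer to problems written in one fixed form, typically a quadratic unconstrained binary optimization (QUBO): minimize the sum of Q[i, j]·x_i·x_j over binary variables x. The toy Python sketch below builds a hypothetical three-variable QUBO that encodes "pick exactly one of three options at the lowest cost" and, because it is tiny, simply tries all 2^3 assignments; an annealer would instead search that energy landscape physically. The costs and penalty weight are made up for illustration.

```python
from itertools import product

def qubo_energy(x: tuple[int, ...], Q: dict[tuple[int, int], float]) -> float:
    """E(x) = sum of Q[i, j] * x_i * x_j over the listed (i, j) pairs."""
    return sum(w * x[i] * x[j] for (i, j), w in Q.items())

def brute_force_minimum(Q: dict[tuple[int, int], float], n: int) -> tuple[int, ...]:
    """Try all 2**n binary assignments (only feasible for tiny n)."""
    return min(product((0, 1), repeat=n), key=lambda x: qubo_energy(x, Q))

# "Exactly one" becomes the penalty P * (x0 + x1 + x2 - 1)**2, expanded using
# x_i * x_i == x_i for binary variables (the constant term is dropped).
costs = [3.0, 1.0, 2.0]
P = 10.0
Q: dict[tuple[int, int], float] = {}
for i in range(3):
    Q[(i, i)] = costs[i] - P          # linear terms sit on the diagonal
    for j in range(i + 1, 3):
        Q[(i, j)] = 2 * P             # penalty for choosing two options

print(brute_force_minimum(Q, 3))      # (0, 1, 0): the cheapest single option
```

Translating a real-world problem into this one shape is the "mapping" step that critics of the annealing approach point to.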

“The weakness of what [D-Wave] is doing is that you need to map the problem to a fixed function,” said IBM’s Crowder. “It’s unclear to me that it will ever have an advantage over classical computing, but it doesn’t really matter as long as they’re demonstrating business value.”

Rigetti’s Bestwick agreed. “It’s like a special purpose ASIC [application-specific integrated circuit] that can do one thing and one thing only,” he said.

D-Wave CEO Vern Brownell dismissed such criticisms as dodges by competitors to divert attention from the fact that they have no products. He said D-Wave’s approach is every bit as scalable and flexible as alternatives. D-Wave points to its 140 U.S. patents and more than 90 peer-reviewed published papers as evidence. “Others are trying to do what may be a more mathematically or theoretically pure version of quantum computing, but they are years away from even solving simple problems,” he said. “We’re the only ones that have real customers.”

As evidence of the viability of D-Wave’s approach, Brownell cited the continuing scalability gains the company has made. Its first machine, introduced in 2011, contained 128 qubits. The new 2000Q has 2,000, a 15-fold increase. “The machines we’re shipping today are hundreds of thousands of times more powerful than the ones we first shipped,” he said, citing Google’s 2015 finding that a D-Wave machine was 100 million times faster than a single-core computer in a Monte Carlo simulation.

Over the next three to five years, quantum computing is unlikely to be practical for anything other than hard-core science applications. But by that time, 50-qubit quantum gate processors should begin to creep into the market. IBM has said it will have commercial hardware within the next few years. “That doesn’t mean a lot of years,” Crowder said. “It really means soon. We’re at the cusp.”

IonQ’s Moehring believes the systems his company will build through 2022 “will be unlikely to solve very large molecular dynamics or optimization problems, but they will create the road map we need to discover these applications.” Rigetti’s Bestwick said his company’s first commercial systems will likely be hybrids that “use classical computer for the parts of the application that classical computers are good for, and use quantum computing for the parts that can’t be solved that way.”
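One widely discussed way to split the work in such a hybrid is a variational loop: a quantum processor evaluates a parameterized circuit, and a classical optimizer adjusts the parameters between runs. The Python sketch below fakes the quantum step with the exact formula for a single qubit (the expectation of Z after an Ry(θ) rotation of |0> is cos θ) and uses the parameter-shift rule to get gradients from two extra evaluations. It illustrates the general pattern under those assumptions and is not a description of Rigetti's systems.

```python
import math

def quantum_expectation(theta: float) -> float:
    """Stand-in for the quantum step: <Z> for the state Ry(theta)|0>.
    On real hardware this would be estimated from repeated measurements."""
    return math.cos(theta)

def parameter_shift_gradient(f, theta: float) -> float:
    """Gradient from two extra circuit evaluations (parameter-shift rule)."""
    return (f(theta + math.pi / 2) - f(theta - math.pi / 2)) / 2

def hybrid_minimize(theta: float = 0.1, lr: float = 0.4, steps: int = 100) -> float:
    """Classical gradient descent steering the (simulated) quantum evaluation."""
    for _ in range(steps):
        theta -= lr * parameter_shift_gradient(quantum_expectation, theta)
    return theta

theta_opt = hybrid_minimize()
print(round(quantum_expectation(theta_opt), 4))  # -1.0
```

The classical loop drives θ toward π, where the expectation reaches its minimum of −1; on real hardware each call to `quantum_expectation` would be a batch of noisy measurements.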

In an information technology world that is currently focused on abstracting every possible hardware function to software, the quantum computer industry is still firmly grounded in big iron. There are no standards, no Intel-equivalent processor platform and no reference architectures. Every vendor is rolling its own solution and the software that goes with it. Does that mean one company will come to dominate quantum computing in the way that IBM did the entire computer industry in the 1980s? IBM’s Crowder doesn’t think so.

“Companies like IBM that provide the full stack need to play a role, but we’ll also need third parties who deeply understand the verticals for enterprise customers,” he said. “We’ve put a lot of effort into ecosystem development.” IBM says it already has more than 50,000 users running quantum experiments around the world.

Writing algorithms specifically for quantum processors will require specialized skills, Crowder said, but he expects that application developers will be able to make the switch to quantum fairly easily. “You won’t need to be a quantum expert to leverage the code on a quantum computer,” he said. “You’ll use Python notebooks from GitHub to do that.”

Until those platform issues are worked out, applications will be written largely by hand. Andrew Schoen, a principal at venture capital firm New Enterprise Associates, said it’s far too early to place any bets on packaged software. “It would be like investing in Amazon before Fairchild Semiconductor was founded,” he said.

But as a lead investor in IonQ, NEA is putting its money where its mouth is. “The role of venture capital is to invest in people and companies that have a chance to dramatically improve the future in a way that creates a substantial business,” Schoen said. “Quantum computing fits squarely within that framework.”

If current trends continue, it will increasingly fit within the processing frameworks of mainstream businesses as well. Thirty-seven years after a quantum mechanical model was first published, salesman Tom might soon be ready to start that ultimate road trip.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.