EMERGING TECH

There is no one teaching style that works perfectly for every student, and it turns out that the same can be said for neural networks, the computing systems that power many of today’s smartest artificial intelligence programs.

Enterprises can waste a lot of time and money if they pick the wrong way to train their neural nets, but DeepMind Technologies Ltd. may have found a solution to that problem. The Alphabet Inc.-owned AI company published a new research paper today that outlines a way for researchers to quickly choose the best training method using the neural net itself.

“Neural networks have shown great success in everything from playing Go and Atari games to image recognition and language translation,” Max Jaderberg, a research scientist at DeepMind, said in a blog post. “But often overlooked is that the success of a neural network at a particular application is often determined by a series of choices made at the start of the research, including what type of network to use and the data and method used to train it.”

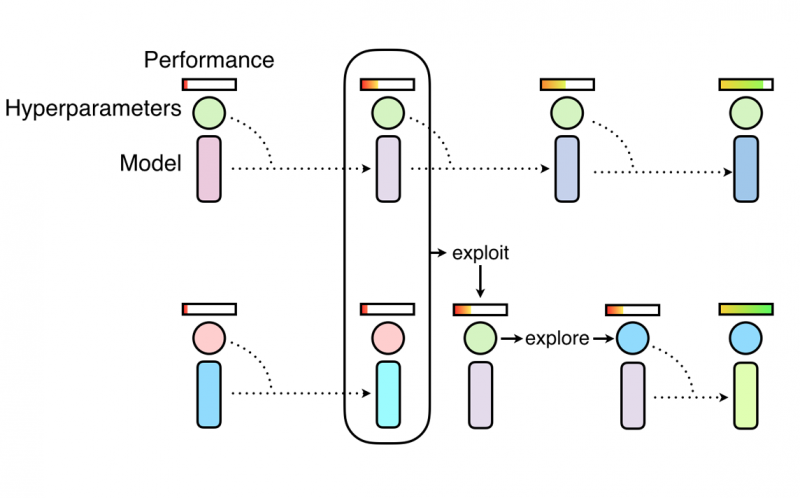

Jaderberg said that researchers currently make these choices, which are called hyperparameters, either by hand-tuning them or by using automated processes that can be too random and require significant computational resources. He explained that DeepMind’s new method, which it calls Population Based Training or PBT, finds the settings that best fit a neural net without draining resources or requiring human intervention.

If a neural net is like a student, then DeepMind’s new method works a bit like cloning that student several times and teaching each clone in a slightly different way. As some students start pulling ahead of the rest, the ones who lag behind switch over to the more effective learning styles, with a few random tweaks, and then the process starts again. Eventually, the neural net settles on the style that works best for it.
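For readers who think in code, the loop described above can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions, not DeepMind’s implementation: the "network" is a single number `theta`, the only hyperparameter is a learning rate `lr`, and the names `loss`, `train_step` and `pbt`, the population size, the quarter-sized cutoff and the 0.8/1.2 tweak factors are all hypothetical choices made for this example.

```python
import random


def loss(theta):
    # Toy objective (an assumption for this sketch): optimum at theta = 3.0.
    return (theta - 3.0) ** 2


def train_step(theta, lr):
    # One gradient-descent step on the toy objective.
    grad = 2.0 * (theta - 3.0)
    return theta - lr * grad


def pbt(population_size=8, rounds=20, steps_per_round=5, seed=0):
    rng = random.Random(seed)
    # Each "clone" has its own weights (theta) and its own hyperparameter (lr).
    population = [
        {"theta": 0.0, "lr": 10 ** rng.uniform(-4, -1)}
        for _ in range(population_size)
    ]
    for _ in range(rounds):
        # Teach every clone for a while using its current learning rate.
        for member in population:
            for _ in range(steps_per_round):
                member["theta"] = train_step(member["theta"], member["lr"])
        # Rank clones from best (lowest loss) to worst.
        population.sort(key=lambda m: loss(m["theta"]))
        cutoff = max(1, population_size // 4)
        leaders, laggards = population[:cutoff], population[-cutoff:]
        for member in laggards:
            donor = rng.choice(leaders)
            # Laggards copy a leader's weights and settings...
            member["theta"] = donor["theta"]
            # ...and apply a small random tweak to the copied setting.
            member["lr"] = donor["lr"] * rng.choice([0.8, 1.2])
    return min(population, key=lambda m: loss(m["theta"]))


best = pbt()
print(best)
```

The point of the sketch is that the laggards inherit a leader’s progress as well as its settings, so the search for good hyperparameters happens during a single round of training rather than across many separate runs.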

Image: DeepMind Technologies Ltd.

DeepMind tested its new method on several of its existing AI research projects, including DeepMind Lab, Atari and StarCraft II. According to Jaderberg, PBT quickly found training styles that “delivered results that were beyond state-of-the-art baselines.”

The company also used PBT on one of Google’s machine translation neural networks, which Jaderberg said are usually trained using carefully hand-tuned settings. DeepMind’s PBT method found settings that “match and even exceed existing performance, but without any tuning and in the same time it normally takes to do a single training run.”

Jaderberg said that DeepMind believes that this is “only the beginning for the technique,” and the company will continue exploring new ways to improve neural nets with PBT.
