NEWS

Too many well-established, well-meaning and talented storage executives are treating flash as simply a faster tier 1 disk rather than as a revolutionary technology that changes the entire game, writes Wikibon Co-founder and CTO David Floyer in his latest Wikibon Premium Alert.

He quotes practitioners as saying, for example, “It is key that the important data is stored on more expensive, higher performing storage systems while less critical data is stored on less expensive, lower performance storage,” and “I don’t think tiering will go away anytime soon, but flash changes something for sure.”

This mindset, he writes, is outdated and, in the long run, even dangerous to the enterprise. Tiering is mainly a product of the severe bandwidth and IOPS limitations of spinning magnetic disk. NAND flash is a completely different animal: not only is it an order of magnitude faster, it also delivers vastly more I/O.

Because of the limitations of magnetic media, data centers typically maintain 10-15 copies of databases to support various activities including data warehousing, analytics, Web front-ends, development and the like. These copies normally reside on lower-tier storage, and they are absolutely necessary in a spinning disk world. Trying to share magnetic data directly between transactional and data warehouse applications, he writes, “would result in melted disk heads.”

Capacity flash costs more up front, so deduplication and compression are normally used to bring its effective cost down to that of high-performance disk. But flash is more than faster storage. It delivers so much I/O that it eliminates the need for most physical copies of databases. Early adopters, Floyer reports, support 120 developers, all with full read/write access, on a single database copy with no loss of performance. Instead of physical copies, all the subsidiary uses of the production database can be served by logical snapshots that draw on the single main database. As a result, all users get much higher performance and the need for tiering disappears: less active data, he argues, will reside on flash alongside the active data.
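To illustrate why many logical copies need not consume many times the capacity, here is a toy model of snapshots. It is a hypothetical, simplified redirect-on-write sketch written for illustration only. The names `BlockStore`, `View` and `snapshot` are invented, and real arrays implement snapshots very differently. A snapshot copies only the table of block references, never the data, and each writer's changes land in new blocks that other views do not see.

```python
class BlockStore:
    """Shared physical storage: each block is written once and never overwritten."""

    def __init__(self):
        self._blocks = []

    def put(self, data: bytes) -> int:
        self._blocks.append(data)
        return len(self._blocks) - 1  # reference (index) of the new block

    def get(self, ref: int) -> bytes:
        return self._blocks[ref]

    def __len__(self):
        return len(self._blocks)


class View:
    """A logical database copy: just a table mapping logical block -> block reference."""

    def __init__(self, store, table=None):
        self.store = store
        self.table = dict(table or {})

    def read(self, lba):
        return self.store.get(self.table[lba])

    def write(self, lba, data):
        # Redirect-on-write: new data goes to a fresh block; other views keep the old reference.
        self.table[lba] = self.store.put(data)

    def snapshot(self):
        # Copy metadata only; no data blocks are duplicated.
        return View(self.store, self.table)


# Usage: one production copy, 120 developer snapshots (mirroring the example above).
store = BlockStore()
prod = View(store)
for lba in range(1000):
    prod.write(lba, b"row-%d" % lba)

dev_copies = [prod.snapshot() for _ in range(120)]
dev_copies[0].write(7, b"dev change")
prod.write(8, b"prod change")

print(len(store))             # 1002: 1000 original blocks plus two changed ones
print(prod.read(7))           # b'row-7': production never sees the developer's change
print(dev_copies[0].read(7))  # b'dev change'
print(dev_copies[1].read(8))  # b'row-8': snapshot keeps its point-in-time view
```

The model ignores everything that makes this hard in practice, such as metadata size, garbage collection of unreferenced blocks and I/O contention. The point is the capacity arithmetic: 121 logical copies cost roughly one physical copy plus the changed blocks.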

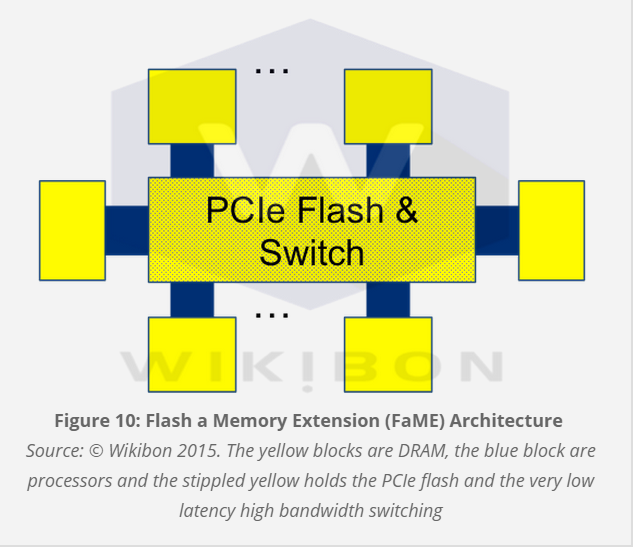

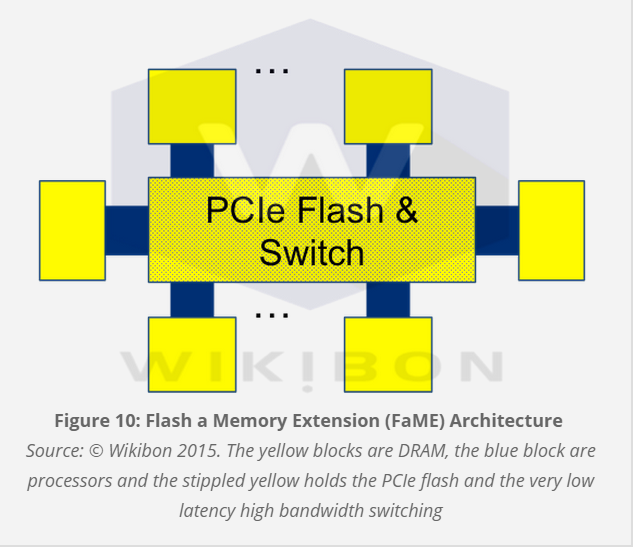

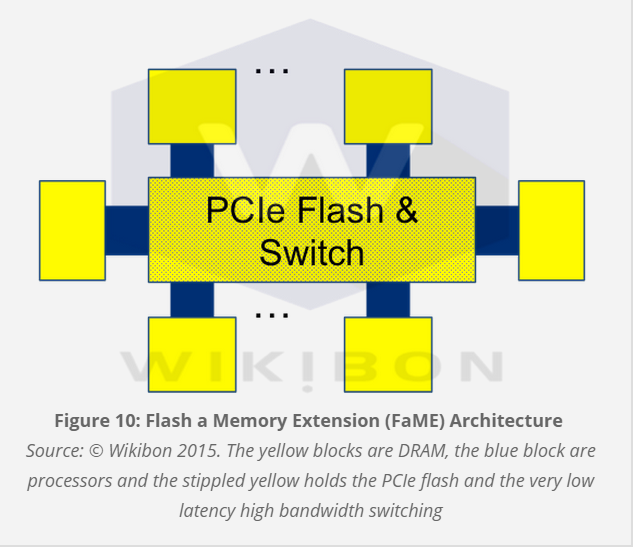

This architecture requires new functionality (see image above). Strong automated QoS is needed to manage performance conflicts. Catalogs are required to manage logical snapshots, physical placement and backups. Scale-out designs with eight or more processors will provide more compute power and sharing than dual-processor controllers, and automated management will require its own system of intelligence.
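Strong QoS can take many forms, and the alert as summarized here does not say how any product implements it. One widely used building block is a per-workload token-bucket rate limiter, shown below as a hypothetical sketch. The class names and the limits are illustrative assumptions. It shows how an analytics job sharing the single flash copy could be capped so that its scans do not starve transactional I/O.

```python
import time


class TokenBucket:
    """Caps one workload's I/O: `rate` ops/sec sustained, at most `burst` ops at once."""

    def __init__(self, rate, burst, clock=time.monotonic):
        self.rate = rate
        self.capacity = burst
        self.tokens = float(burst)  # start full, so an initial burst is allowed
        self.clock = clock
        self.last = clock()

    def try_acquire(self, n=1):
        # Refill tokens in proportion to elapsed time, capped at the bucket capacity.
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= n:
            self.tokens -= n
            return True
        return False  # caller should queue or delay this I/O


class FakeClock:
    """Deterministic clock for the example; a real system would use time.monotonic."""

    def __init__(self):
        self.t = 0.0

    def __call__(self):
        return self.t


clock = FakeClock()
# Hypothetical limit: analytics capped at 1,000 IOPS with a burst of 100 operations.
analytics = TokenBucket(rate=1000, burst=100, clock=clock)

print(sum(analytics.try_acquire() for _ in range(500)))  # 100: only the burst at t=0
clock.t = 0.05  # 50 ms later, 50 tokens have been refilled
print(sum(analytics.try_acquire() for _ in range(500)))  # 50
```

A production QoS system would also need priorities, latency targets and automatic adjustment of the limits, which is where the "automated" part of Floyer's requirement comes in.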

The most important challenge, however, is not technology but leadership from the top. CIOs must change both the mindsets of practitioners and established processes. Floyer says that all-flash datacenters will be a strategic imperative by 2016; they will reduce the IT budget and lay the foundation for a migration to Systems of Intelligence.

Floyer’s full alert, along with other analysis of the revolutionary impact of flash on the datacenter and a video of Floyer’s analysis of storage directions for the next five years, is available on the Wikibon Premium Web site.
