INFRA

The University of California at San Diego has adopted a cloud-first strategy that involves retiring three mainframes, shifting as many computing workloads as possible to the cloud and abandoning on-premises software in favor of software-as-a-service wherever possible.

“I long ago realized that the CIO should be more of a supply chain leader than someone who keeps track of hardware specs,” said Vince Kellen, UCSD’s chief information officer. “For most of our business problems, hardware doesn’t matter.”

UCSD’s Kellen: “For most of our business problems, hardware doesn’t matter.” Photo: UCSD

A growing number of information technology managers feels the same way. For more than 60 years, both the potential and limitations of computing have been defined by processors, memory, storage and other components fashioned from metal and silicon. The central role of the IT organization has long been to protect and optimize those precious assets, using armies of systems administrators and people with screwdrivers who keep the data center lights on.

About 20 years ago, virtualization began the process of abstracting away the nuts and bolts of hardware, making infrastructure a single amorphous entity managed by software. Cloud computing has furthered the process over the last decade, making hardware an abstract resource that, for customers, is increasingly someone else’s problem to manage.

But in a surprising twist, the cloud is actually unleashing a flood of new hardware innovation — starting with the foundational silicon chip on which all computers and the cloud itself are built.

“This is really a golden era of semiconductors,” Victor Peng, president and chief executive at programmable logic circuit and software veteran Xilinx Inc., said at a recent event called “The Renaissance of Silicon” presented by the Silicon Valley thought leadership forum Churchill Club. Added Sanjay Mehrotra, president and CEO of chipmaker Micron Technology Inc., “There has never been a more exciting time in the past 40 years.”

Wikibon’s Miniman: “IT managers treat infrastructure like pets; cloud vendors treat it like cattle.” Photo: SiliconANGLE

But it’s about far more than silicon. It’s a common perception that cloud infrastructure providers think of hardware as a commodity to be purchased cheaply and daisy-chained together in an infinitely scalable blob to be managed by sophisticated software. “IT managers treat infrastructure like pets,” quipped Stu Miniman, senior analyst at Wikibon, a sister company of SiliconANGLE. “Cloud vendors treat it like cattle.”

But the cloud has also introduced some thorny problems that can’t be solved with software alone. That has prompted cloud providers to invest billions of dollars in hardware-based solutions to the limitations of remote infrastructure.

The cloud’s inherent latency disadvantages, along with the delays involved in shuttling large volumes of data to and from cloud storage, are among the factors driving new investments in silicon-based network acceleration. The rise of the cloud-fueled “internet of things” is also sparking development of new types of low-power devices that sit at the edge of the network.

Most important, the growing use of cloud-based artificial intelligence technologies such as machine learning and deep learning is driving investments in hardware architectures that can deliver on those applications’ insatiable appetite for processing power and memory.

“The things we take for granted today, like instant-booting PCs, smart phones, jaw-dropping gaming videos, blazing fast in-memory databases and massively capacious storage systems would be far more limited or impossibly expensive without advances in hardware,” said Charles King, principal analyst at Pund-IT Inc.

“Ever since I started in this business, people have told me hardware is dead,” said David Vellante, chief analyst at Wikibon. “That prediction has yet to materialize.”

In essence, it’s not whether hardware matters. It’s where.

The result is that hardware considerations are shifting away from the user and into the back-end infrastructure, which today is the cloud. That suits a lot of IT managers just fine.

“Our five-year plan is to put almost everything in the cloud for scale,” said Doug Saunders, CIO at waste disposal, collection and recycling company Advanced Disposal Services Inc. “I don’t want to be in the hardware business.”

The 20-year IT veteran said he has had more than his fill of installing, tuning and securing servers, a process that can take as much as three months from initial order. “You hear all the time that IT is slow,” he said. “One of the reasons is hardware.”

The appeal of software-defined management is so strong that even makers of on-premises hardware are de-emphasizing specs such as clock speeds, CPU core counts and storage capacity in favor of simplicity and ease of management.

Most customers care little about the number of cylinders or the size of the engine block in their car, and they want to think of computers in the same way, said Lauren Whitehouse, director of software-defined and cloud group marketing at Hewlett Packard Enterprise Co. “They may not want to manage it anymore, but they care about the outcomes,” she said, pointing to rapid growth in her company’s hyperconverged and composable products, which remove much of the detail work from administering hardware.

Dell’s Pendekanti seeks to deliver “simpler, consistent operations and software infrastructure across on-premises, edge and public cloud.” Photo: SiliconANGLE

Dell Technologies Inc.’s recently introduced cloud platform “helps customers address their hybrid cloud needs with a unique approach… to provide simpler, consistent operations and software infrastructure across on-premises, edge and public cloud locations,” said Ravi Pendekanti, senior vice president of product management for Dell EMC Server & Infrastructure Systems.

That’s a windfall for IT organizations, which have traditionally employed teams of technicians to install and configure hardware and administrators to fine-tune performance and optimize utilization. Today, those jobs are giving way to positions centered on such disciplines as service level management, contracting and cloud orchestration. Global enterprise IT spending on operational staffing is expected to fall from $315 billion in 2015 to $142 billion in 2026, according to Statista.
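Taken at face value, the two Statista endpoints imply a steady yearly decline. Here is a minimal Python sketch of that arithmetic. It assumes a constant compound rate between 2015 and 2026, although Statista's year-by-year path may well differ. `compound_annual_rate` is a hypothetical helper name.

```python
def compound_annual_rate(start: float, end: float, years: int) -> float:
    """Return the constant yearly rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1

# Statista figures quoted above, in billions of U.S. dollars.
rate = compound_annual_rate(315, 142, 2026 - 2015)
print(f"{rate:.1%} per year")
```

Run as written, it prints -7.0% per year. In other words, if the decline were smooth, staffing spend would shrink by roughly 7% annually.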

Even in the rarefied community of users of high-performance computing equipment such as labs and academic institutions, “there’s a growing body of engineers who are hardware-agnostic,” said Bob Sorensen, vice president of research and technology at Hyperion Research Holdings LLC. “They’d rather just get up and running with a virtualized environment or with containers.”

The bottom line is that “racking, stacking and turning knobs shouldn’t be what IT managers spend their time on,” said Wikibon’s Miniman. “It should be about managing everything via software.”

But the quest to make hardware transparent to users is driving a lot of behind-the-scenes activity around silicon. One reason is that the core technologies that have driven PC and server performance in the past are no longer advancing quickly. Disk drives hit their theoretical performance peak years ago, and speed improvements in flash storage are already reaching the point of diminishing returns.

More fundamental is that microprocessors are hitting a wall. For more than 40 years, chips based on the X86 architecture have ridden Moore’s Law, the observation that the number of transistors on a chip doubles roughly every two years, delivering performance that roughly doubled every 18 to 24 months. The physical limitations of miniaturization are now being reached, however, making further progress slow and expensive.

Google’s Heredia: “CPUs have not been able to provide the power to solve [machine learning] problems.” Photo: LinkedIn

General-purpose microprocessors “were built to run many different types of workloads,” Google’s Heredia said, but as growth has shifted to special-purpose compute workloads such as machine learning, “CPUs have not been able to provide the power to solve those problems.”

That means the computer industry must look elsewhere to find the momentum that has driven its growth for decades. “Hardware still matters, just a different kind of hardware,” King said.

Witness the surging market for graphics processing units, the outboard chips that have become wildly popular for machine learning. The global GPU market is expected to grow at better than 30% annually through 2024, reaching $80 billion in revenue, according to Global Market Insights Inc.

Stock in market leader Nvidia Corp. more than quadrupled in the two years before a collapse in the cryptocurrency mining market dragged down earnings last October. Nevertheless, the long-term outlook is strong.

“Our top-line messaging is that Moore’s Law has largely ended, so compute performance has hit a plateau at the CPU level,” said Justin Boitano, senior director of enterprise and edge computing solutions at Nvidia.

Nvidia and other GPU makers are meeting demand from cloud providers and systems makers for new hardware architectures that de-emphasize microprocessor performance in favor of outboard engines suited to the parallel processing needs of machine learning. Machines built for machine learning farm out most of the work to GPUs, which process data in parallel and feed results back to the central processor, which doesn’t even need to be that fast.
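The division of labor described above can be sketched in Python. A host processor splits the work, parallel engines crunch it, and a cheap final step runs back on the host. This is an analogy only. A thread pool stands in for the GPU, and `accelerator_kernel` and `host_dispatch` are hypothetical names. Because of Python's global interpreter lock, the sketch shows the dispatch-and-gather pattern rather than any real speedup.

```python
from concurrent.futures import ThreadPoolExecutor

def accelerator_kernel(shard: list[float]) -> float:
    """Stand-in for work an accelerator would run: a sum of squares over one shard."""
    return sum(x * x for x in shard)

def host_dispatch(data: list[float], lanes: int = 4) -> float:
    """Split the batch, farm shards out to a worker pool, then combine partial results on the host."""
    size = max(1, -(-len(data) // lanes))  # ceiling division so every element lands in a shard
    shards = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=lanes) as pool:
        partials = list(pool.map(accelerator_kernel, shards))
    return sum(partials)  # the host only does the cheap final reduction
```

For example, `host_dispatch([float(x) for x in range(10)])` returns 285.0, the same sum of squares a single sequential loop would produce.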

This approach is yielding orders-of-magnitude leaps in performance for some workloads. OpenAI LP, the artificial intelligence startup that last week received a massive $1 billion investment from Microsoft Corp., last year estimated that the amount of compute power used in its largest AI training runs grew more than 300,000-fold between 2012 and 2018, a 3.5-month doubling time that far outpaces Moore’s Law.
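A few lines of arithmetic can check the figures in that estimate. The sketch below assumes a two-year doubling period for Moore's Law, although the article elsewhere cites 18 to 24 months. It takes the 300,000-fold growth and 3.5-month doubling time as given.

```python
import math

growth = 300_000          # growth factor reported for the largest training runs
doubling_months = 3.5     # doubling time cited above

doublings = math.log2(growth)                 # number of doublings needed for 300,000x
span_months = doublings * doubling_months     # time those doublings take at 3.5 months each
moore_factor = 2 ** (span_months / 24)        # growth over the same span at a two-year doubling

print(f"{doublings:.1f} doublings over {span_months:.0f} months")
print(f"A 24-month doubling over that span gives only about {moore_factor:.1f}x")
```

It prints 18.2 doublings over 64 months and a Moore's Law equivalent of roughly 6.3x. Sixty-four months is about 5.3 years, a bit less than the full 2012-to-2018 window, so those endpoints are best read as approximate.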

The action isn’t just in GPUs. Low-power, reduced-instruction-set-computing Arm microprocessors that dominate the mobile phone market are finding new uses in internet of things devices and even Amazon Web Services Inc. EC2 instances.

Intel and others are also placing big bets on persistent memory, a new type of memory that combines the data retention properties of storage with speeds approaching those of DRAM. Persistent memory is particularly useful in hyperscale scenarios and is the type of technology that illustrates the shifting hardware priorities brought about by cloud computing. “This will be revolutionary technology,” said Kit Colbert, chief technology officer of the cloud platform business unit at VMware Inc.
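What sets persistent memory apart is its programming model. Software updates data with ordinary memory writes rather than file I/O, and the data survives a restart. The Python sketch below only imitates that model. An ordinary memory-mapped file and the operating system's page cache stand in for a real persistent-memory region. On Linux, such a region would typically be accessed through a DAX-mounted file system or a library such as PMDK. `write_then_reload` is a hypothetical helper.

```python
import mmap
import os
import tempfile

def write_then_reload(payload: bytes) -> bytes:
    """Store bytes through a memory mapping, flush, then read them back through a fresh mapping."""
    if not payload:
        raise ValueError("mmap needs a non-empty region")
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "pmem_standin.bin")  # ordinary file standing in for a PMem region
        with open(path, "wb") as f:
            f.write(b"\0" * len(payload))  # size the backing file before mapping it
        with open(path, "r+b") as f, mmap.mmap(f.fileno(), len(payload)) as region:
            region[:] = payload  # byte-addressable stores, like writes to DRAM
            region.flush()       # ask the OS to push the stores to the backing file
        # A brand-new mapping (think: after a restart) still sees the data.
        with open(path, "r+b") as f, mmap.mmap(f.fileno(), len(payload)) as region:
            return bytes(region[:])
```

Calling `write_then_reload(b"ledger entry 42")` returns the same bytes it was given, read back through a new mapping rather than from the original variable.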

Hyperion’s Sorensen: “There’s a growing body of [high-performance computing] engineers who are hardware-agnostic.” Photo: LinkedIn

The big three cloud providers (Amazon, Microsoft and Google LLC) are collectively investing billions of dollars in custom hardware to boost the performance of their cloud platforms or tune their services for specific uses, such as AI development. Cloud companies love AI: machine learning and deep learning processes can consume massive amounts of data and as much processing power as the cloud provider can deliver.

Google is betting much of its cloud strategy on processing AI workloads. To that end, it has designed a family of custom chips called tensor processing units, or TPUs, which it says deliver better performance than GPUs at lower cost.

TPU performance is improving by leaps and bounds and so far hasn’t run up against the physical limitations constraining the X86. In 2015, it cost over $200,000 to train ResNet-50, a 50-layer neural network used for image recognition, said Google’s Heredia. “Today it’s less than a cup of coffee.”

Other cloud providers are in the hardware game as well. Microsoft’s Project Olympus is an initiative to build a set of server building blocks for its cloud platform based on the Open Compute Project initiated by Facebook Inc. AWS’s Arm-based Graviton processors, which have been under development since 2015, are now in mainstream use on the cloud giant’s EC2 instances.

AWS’ Hamilton: “Most compute workloads have stubbornly stayed on general-purpose processors.” Photo: SiliconANGLE

“Hardware specialization can improve latency, price/performance and power/performance by as much as 10 times and yet, over the years, most compute workloads have stubbornly stayed on general purpose processors,” James Hamilton, an AWS distinguished engineer, wrote in a recent blog post. Amazon is installing more than a million of the specialized chips per year, focusing them on machine learning workloads.
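Amdahl's Law offers one way to see why a 10x gain does not automatically pull workloads off general-purpose processors. It caps the overall speedup by the share of work that actually moves to the specialized hardware. Hamilton is not quoted as making this argument. The sketch below applies the standard formula, using the 10x figure from his quote and hypothetical offload shares.

```python
def amdahl_speedup(accelerated_fraction: float, accelerator_speedup: float) -> float:
    """Overall speedup when only part of a workload runs on a faster unit (Amdahl's Law)."""
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be between 0 and 1")
    serial = 1.0 - accelerated_fraction  # share that stays on the general-purpose CPU
    return 1.0 / (serial + accelerated_fraction / accelerator_speedup)

for share in (0.5, 0.9, 0.99):
    print(f"{share:.0%} offloaded at 10x -> {amdahl_speedup(share, 10):.2f}x overall")
```

The loop prints 1.82x, 5.26x and 9.17x for 50%, 90% and 99% of the work offloaded. The gain approaches 10x only when nearly everything runs on the accelerator.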

A separate Amazon initiative called Nitro combines hardware and software components to eliminate virtualization overhead. Amazon consumes “millions of the Nitro ASICs every year or so, even though it’s only used by AWS,” Hamilton wrote. The hardware, which removes such overhead as network packet encapsulation/decapsulation, EC2 security group enforcement and routing from microprocessors, would be impractical for most IT organizations to implement.
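Packet encapsulation and decapsulation, the first job on that list, happens on every packet. Each tenant's traffic is wrapped in an extra header that identifies its virtual network, and the header is stripped off at the other end. The article does not describe AWS's own encapsulation format. The Python sketch below therefore uses VXLAN (RFC 7348), a widely documented overlay header, as a stand-in. It omits the outer UDP/IP headers a real overlay would add.

```python
import struct

VXLAN_FLAGS = 0x08  # "I" flag: the VNI field is valid (RFC 7348)

def encapsulate(vni: int, inner_frame: bytes) -> bytes:
    """Prepend an 8-byte VXLAN header tagging the frame with a 24-bit virtual network ID."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # flags byte, 3 reserved bytes, then the VNI in the top 24 bits of a 32-bit word (low byte reserved)
    header = struct.pack("!B3xI", VXLAN_FLAGS, vni << 8)
    return header + inner_frame

def decapsulate(packet: bytes) -> tuple[int, bytes]:
    """Strip the VXLAN header and return (vni, inner_frame)."""
    flags, word = struct.unpack("!B3xI", packet[:8])
    if not flags & VXLAN_FLAGS:
        raise ValueError("VNI flag not set")
    return word >> 8, packet[8:]
```

Repeated for every packet, work of this kind is the overhead that offload hardware such as Nitro takes off the host processors.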

At the recent Renaissance of Silicon event, Xilinx’s Peng said “domain-specific architectures” are increasingly the answer to ever more demanding AI and other workloads.

The need for specialized processors hasn’t escaped Intel’s notice, as evidenced by its $16.7 billion acquisition of field-programmable gate array maker Altera Corp. in 2015, and more recent purchases of specialty silicon firms like Nervana Systems, Omnitek B.V. and Movidius Ltd.

Not that microprocessors are going away. Worldwide shipments of Intel Corp. X86-based servers grew 15.4% in 2018, according to International Data Corp., but the research firm noted that much of that growth is from demand by cloud service providers.

While the days of fiddling with DIP switches and hot-swapping disk drives may be numbered, the IT organization’s involvement with hardware is hardly at an end. The wave of IoT devices is introducing thousands of new platforms, each with its own underlying hardware considerations.

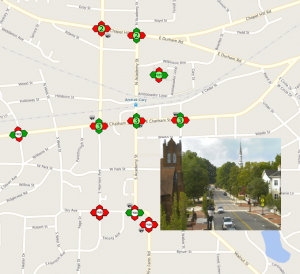

Cary, North Carolina’s smart traffic initiative spans 112 traffic cameras, 100 miles of fiber optic cable and 199 traffic signal controllers. Photo: Town of Cary

“All the IoT vendors have their own solutions,” said Peter Kennedy, chief technology officer of the Town of Cary, North Carolina. “When it comes to hardware, this is a very fragmented space right now.”

The town is moving every piece of infrastructure it can to the cloud and shifting the roughly 25% that’s left on-premises to hyperconverged platforms, but it’s also installing smart parking meters, smart water meters, opioid sensors in the sewer system and even rodent traps that send an electronic alert when tripped.

Latency and data volume limitations prevent the town from processing all this new data in the cloud. That means installing new edge devices as collection and filtration points. “That’s a whole different class of hardware,” Kennedy said. “They’re self-contained devices and each vendor’s is different. The standards are very, very new.”
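The collection-and-filtration role Kennedy describes can be sketched as a device that summarizes raw readings locally and forwards only what matters upstream. Everything here is hypothetical: the `Reading` type, the threshold rule and the shape of the upstream message. As Kennedy notes, real edge devices differ by vendor.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class Reading:
    sensor_id: str
    value: float

def filter_at_edge(readings: list[Reading], threshold: float) -> dict:
    """Summarize a batch locally and forward only readings at or above a threshold."""
    values = [r.value for r in readings]
    return {
        "count": len(readings),                                # how many raw readings were seen
        "mean": mean(values) if values else None,              # one summary number instead of the raw stream
        "alerts": [r for r in readings if r.value >= threshold],  # the only raw readings sent upstream
    }
```

A batch of thousands of routine meter readings shrinks to one count, one average and a short list of alerts. That eases both the latency and the data-volume limits mentioned above.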

The bottom line for him: “Hardware is more important than it has been in the past.”

Traditional IT skill sets such as system administration don’t necessarily lend themselves to this new class of devices, said Advanced Disposal Services’ Saunders. His company is currently outfitting 6,000 trucks with a half-dozen cameras each for safety and compliance monitoring.

“You need teams who are in a mindset of how to innovate and drive new revenue,” he said. “That’s a different mindset than sitting at a desk and monitoring 87 routers.” The bright side is that the opportunities to change the business through intelligent devices are so great that young IT professionals are drawn to the work.

Cloud providers are addressing the edge market with dedicated services and hardware, including on-premises infrastructure that mimics their cloud stacks, but with the number of devices expected to grow roughly tenfold over the next decade, it’s a safe bet that the IoT revolution will keep hardware front and center.

The upshot: IT organizations — even those people with the screwdrivers — will have plenty of hardware to handle for years to come.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.