AI

Google LLC today expanded its cloud platform with the launch of Cloud TPU Pods, a new infrastructure option aimed at large artificial intelligence projects that require vast amounts of computing power.

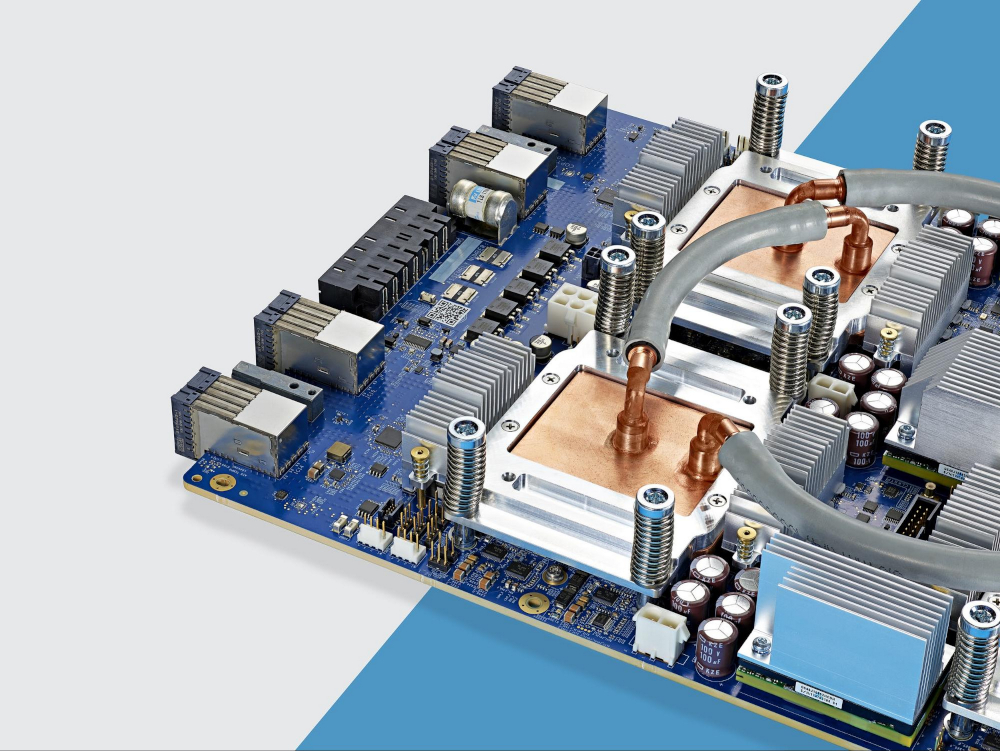

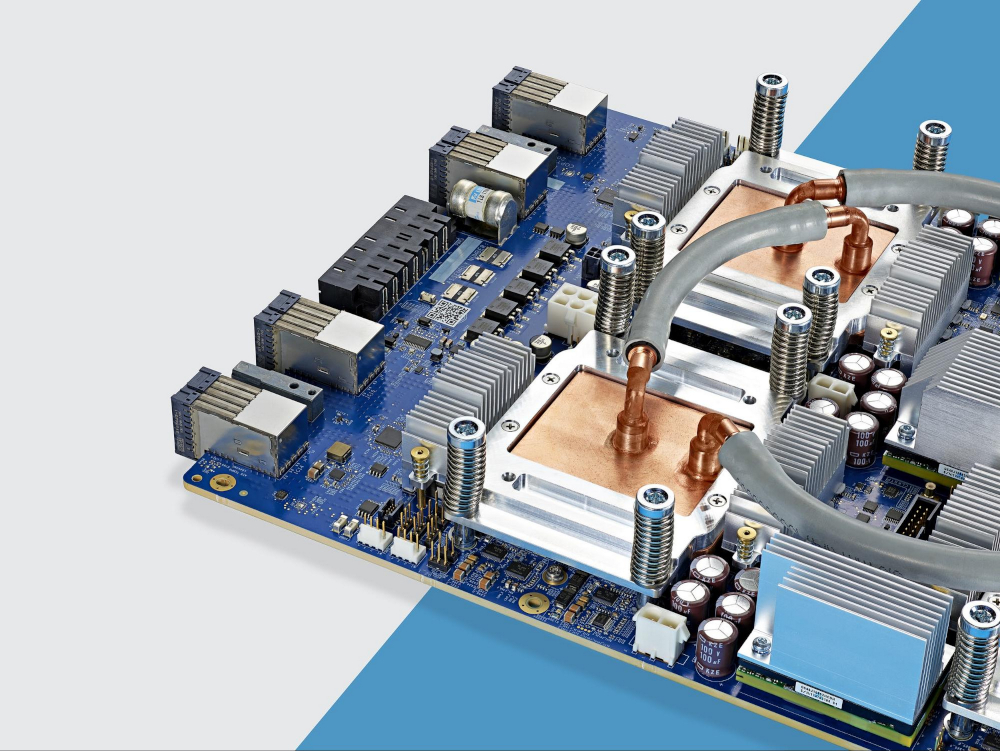

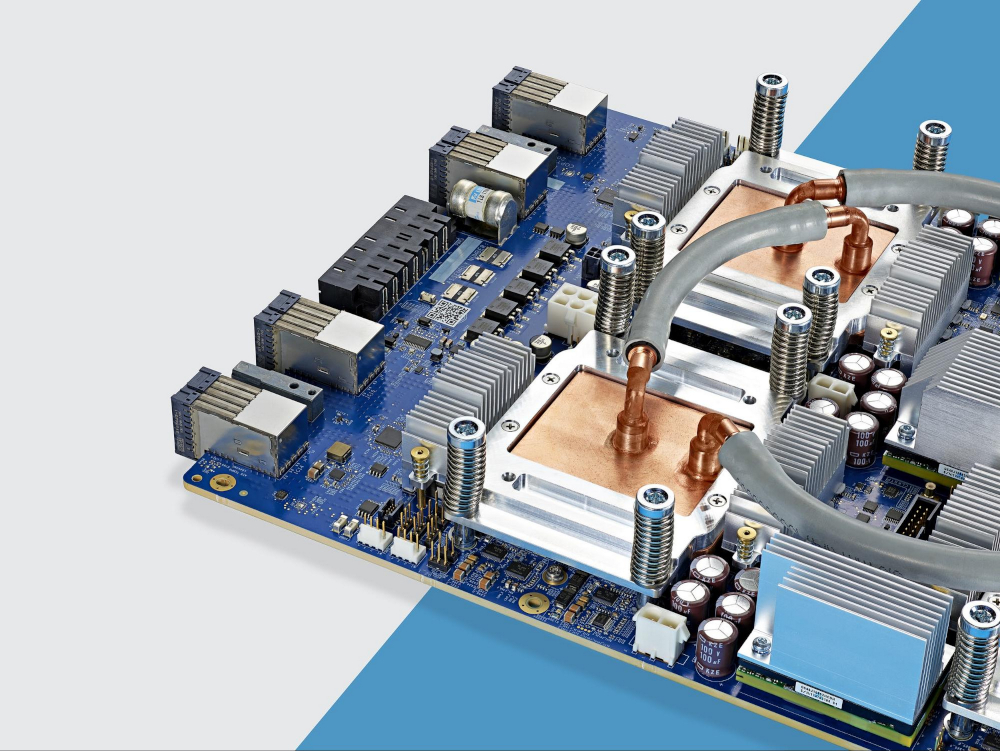

A Cloud TPU Pod is essentially a set of server racks running in one of the search giant’s data centers. Each rack is loaded with Google’s Tensor Processing Units (pictured), custom chips built from the ground up for AI applications. The company uses them to support a wide range of internal services, including its search engine and Google Translate.

On Google Cloud, TPUs have so far been available for rent only individually. They offer a number of advantages over the graphics cards that companies normally use for AI projects, including potentially higher speeds. A benchmark test released in December showed that TPUs can provide 19 percent better performance than comparable Nvidia Corp. hardware when performing certain types of tasks.

A single Cloud TPU Pod includes either 256 or 1,024 chips, depending on the configuration. The 256-chip version uses the second-generation TPUs that Google debuted back in 2017 and has a peak speed of 11.5 petaflops. The 1,024-chip configuration, in turn, offers a massive 107.5 petaflops of peak performance because it uses Google’s newer third-generation TPUs.

These figures put the offering in supercomputer territory. A single petaflop equals a quadrillion computing operations per second and Summit, the world’s most powerful supercomputer, has a peak speed of 200 petaflops.
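For readers who want to check the arithmetic, the short Python sketch below works out what the quoted figures imply per chip and how each Pod's peak compares with Summit's. It uses only the numbers cited in this article; the function and variable names are illustrative, and the comparison is rough because the peak figures are not measured at the same numerical precision.

```python
# Peak figures as quoted in the article (chip counts and petaflops).
PODS = {
    "256-chip Pod (second-generation TPUs)": {"chips": 256, "peak_petaflops": 11.5},
    "1,024-chip Pod (third-generation TPUs)": {"chips": 1024, "peak_petaflops": 107.5},
}
SUMMIT_PEAK_PETAFLOPS = 200.0  # Summit's peak speed as quoted in the article


def per_chip_teraflops(chips: int, peak_petaflops: float) -> float:
    """Average peak throughput per chip, in teraflops (1 petaflop = 1,000 teraflops)."""
    return peak_petaflops * 1000 / chips


def share_of_summit(peak_petaflops: float) -> float:
    """Pod peak as a fraction of Summit's quoted peak."""
    return peak_petaflops / SUMMIT_PEAK_PETAFLOPS


for name, spec in PODS.items():
    tflops = per_chip_teraflops(spec["chips"], spec["peak_petaflops"])
    share = share_of_summit(spec["peak_petaflops"])
    print(f"{name}: about {tflops:.1f} teraflops per chip, "
          f"{share:.1%} of Summit's peak")
```

By this estimate, the larger configuration's advantage comes from both having four times as many chips and more than doubling the peak throughput of each one.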

Cloud TPU Pods can admittedly only hit their top speeds when processing less complex data than what systems such as Summit normally handle, but they’re still powerful. Google makes the hardware available through application programming interfaces that allow AI teams to work with the TPUs as if they were a single logical unit. Alternatively, developers may split up a Pod’s computing power among several applications.

“It’s also possible to use smaller sections of Cloud TPU Pods called ‘slices,’” Zak Stone, Google’s senior product manager for Cloud TPUs, wrote in a blog post. “We often see ML teams develop their initial models on individual Cloud TPU devices (which are generally available) and then expand to progressively larger Cloud TPU Pod slices via both data parallelism and model parallelism.”

Cloud TPU Pods are currently in beta. Early customers include eBay Inc. and Utah-based biotechnology firm Recursion Pharmaceuticals Inc., which uses the offering to run tests on molecules with potential medical value.
