INFRA

Intel Corp. today quietly announced its first dedicated artificial intelligence processor at a special event in Haifa, Israel.

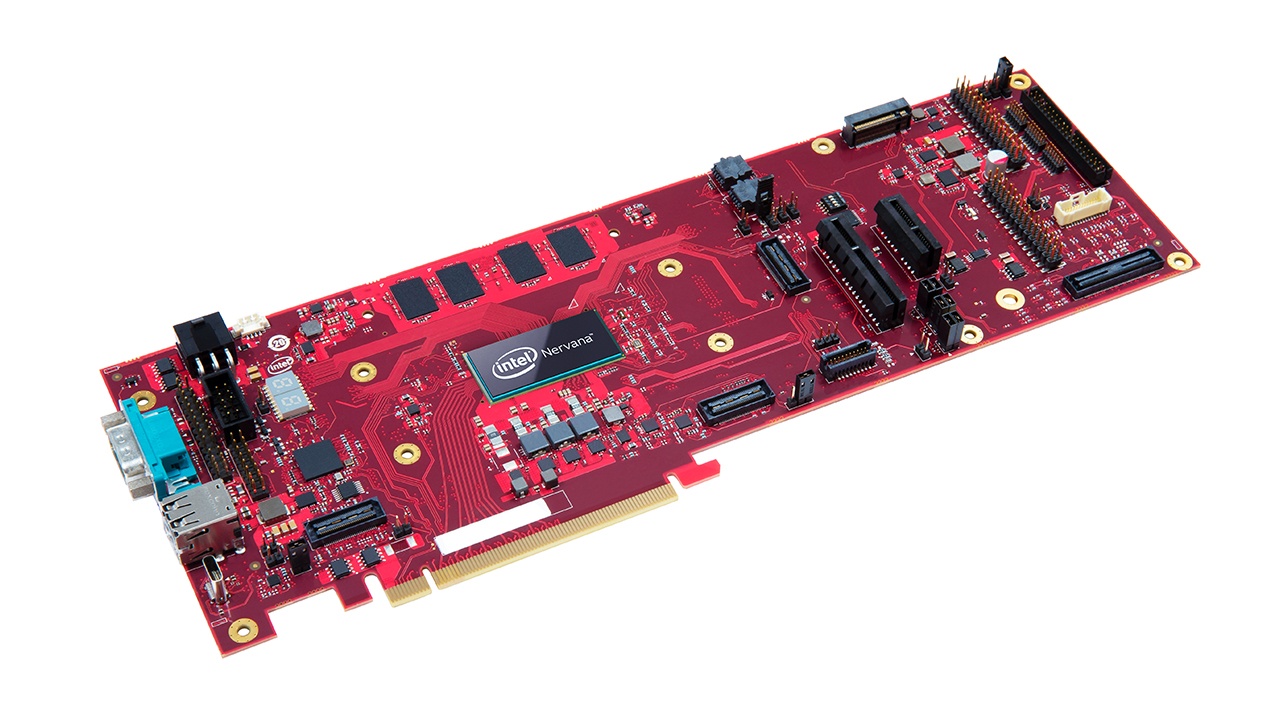

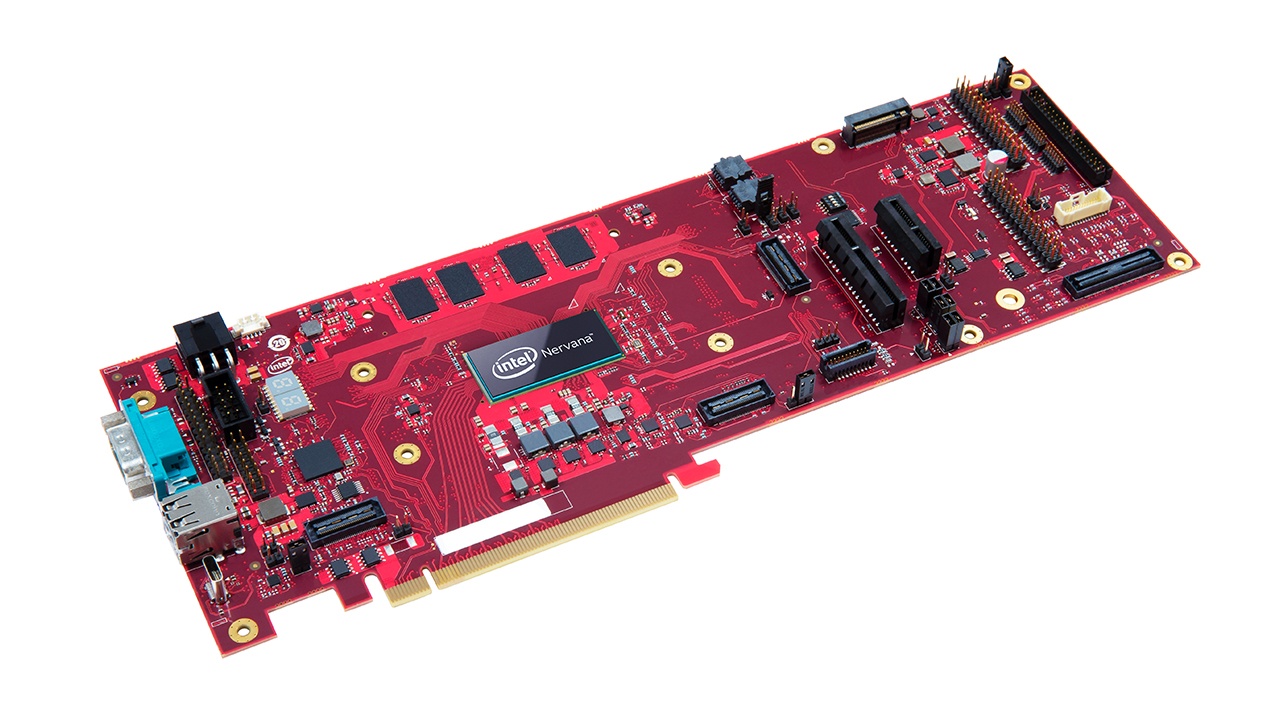

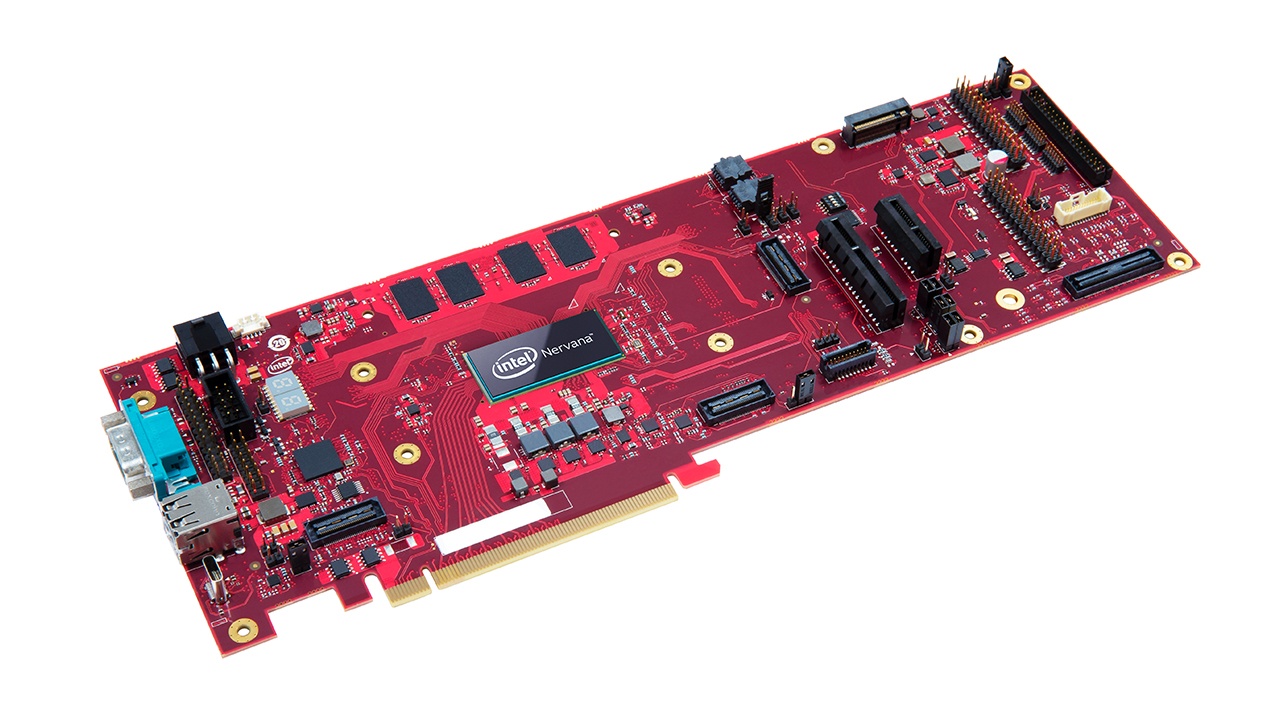

The Nervana Neural Network Processor for Inference (pictured), also known as “Springhill,” was developed at Intel’s labs in Haifa, and is said to be designed for large data centers running AI workloads. It’s based on a modified 10-nanometer Ice Lake processor and is capable of handling intensive workloads while using only minimal amounts of energy, Reuters reported.

Intel said several customers, including Facebook Inc., have already begun using the chip in their data centers.

The Nervana NNP-I chip is just one component of Intel’s wider “AI everywhere” strategy. The chipmaker has gone for an approach that involves using a mix of graphics processing units, field-programmable gate arrays and customized application-specific integrated circuits to handle the various complex tasks in AI. These tasks include creating neural networks for speech translation and object recognition, and running the trained models via a process called inference.

It’s this last process that the Nervana NNP-I chips are meant to handle. The chip is small enough that it can be deployed in data centers via a so-called M.2 storage device, which then slots into a standard M.2 port on the motherboard. The idea is that Intel’s standard Xeon processors can be relieved of inference workloads and focus on more general compute tasks.

“Most inference workloads are completed on the CPU even though accelerators like Nvidia’s T-Series offer higher performance,” said Patrick Moorhead of Moor Insights & Strategy. “When latency doesn’t matter as much and raw performance matters more, then accelerators are preferred. Intel’s Nervana NNP-I is intended to compete with discrete accelerators from Nvidia and even Xilinx FPGAs.”

Intel said the Nervana NNP-I chip is essentially a modified 10-nanometer Ice Lake die with two compute cores and its graphics engine stripped out in order to accommodate 12 Inference Compute Engines. These are designed to help speed up the inference process, which is the implementation of trained neural network models for tasks such as speech and image recognition.

Constellation Research Inc. analyst Holger Mueller said this is an important release for Intel, since it had largely sat on the sidelines with regard to AI inference.

“Intel is pulling expertise in power and storage and looking for synergies in its processor suite,” Mueller said. “Since Springhill is deployed via a M2 device and port, which is something that was called a coprocessor a few decades ago, this effectively offloads the Xeon processor. But we’ll have to wait and see how well Springhill can compete with more specialized, typically GPU-based processor architectures.”
