AI

Intel Corp. and Georgia Institute of Technology announced today that they’ll lead a program team for the U.S. Defense Advanced Research Projects Agency to develop defenses against attacks and trickery directed at artificial intelligence systems.

Intel will be the prime contractor for the four-year, multimillion-dollar joint effort known as Guaranteeing Artificial Intelligence Robustness against Deception, or GARD, which aims to improve cybersecurity defenses against deception attacks on machine learning models.

Although adversarial attacks against ML systems are rare, the number of systems that rely on ML and AI to operate continues to grow.

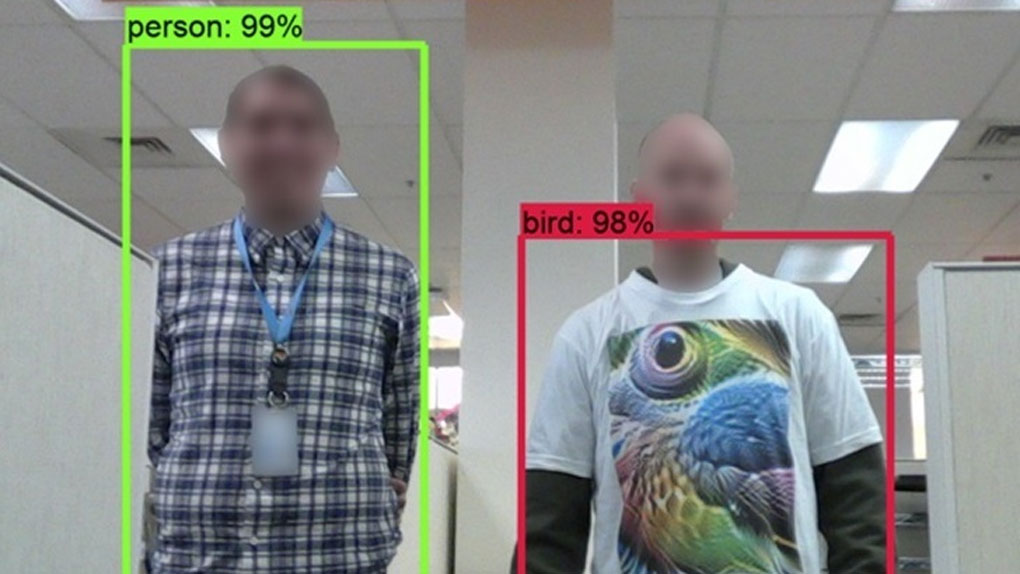

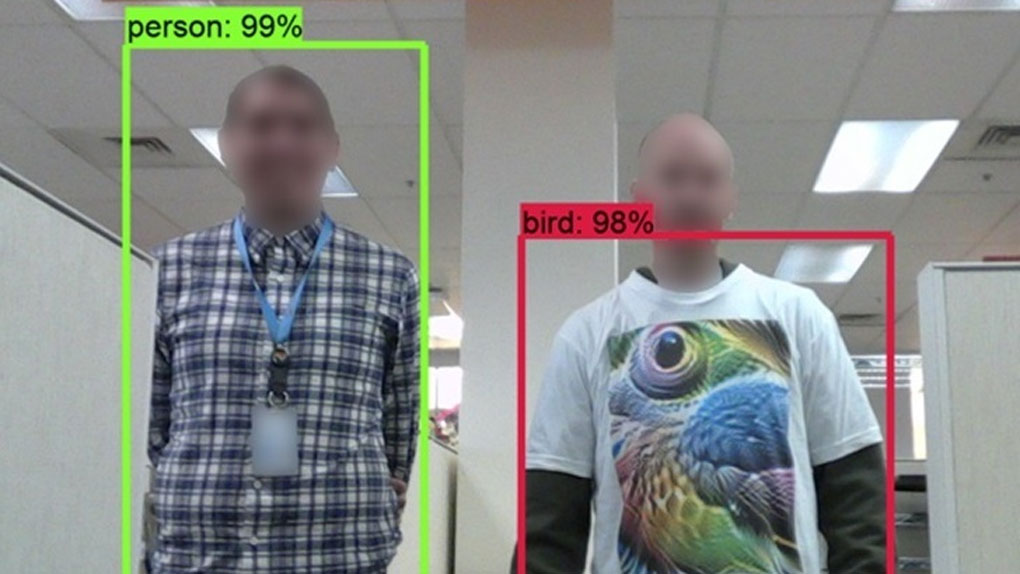

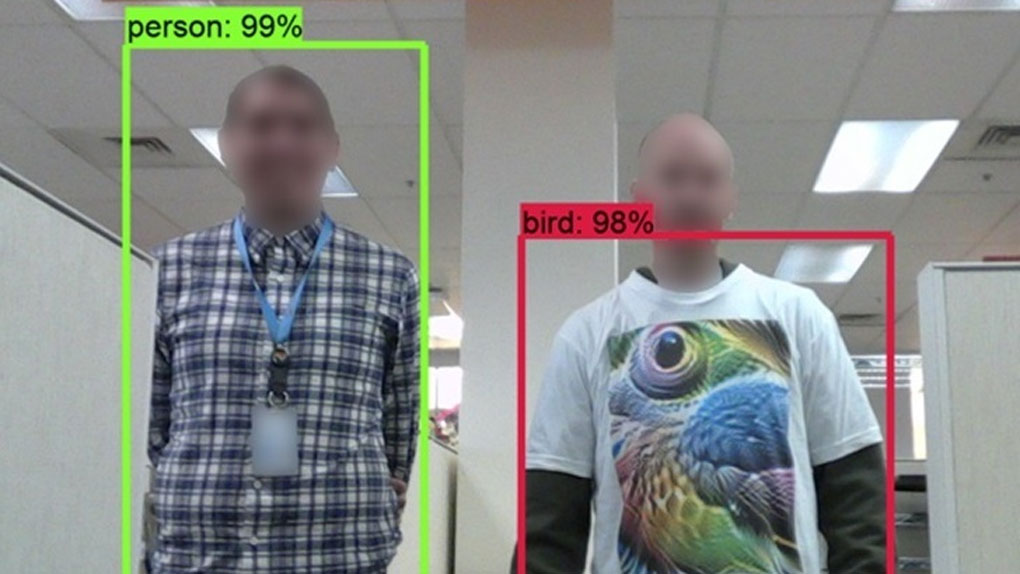

These technologies are an important part of semi-autonomous and autonomous systems such as self-driving cars. As a result, it is essential to keep AI from misclassifying real-world objects, for example confusing a stop sign with a person, because of malicious information inserted into the learning model or subtle modifications to the objects themselves designed to mislead identification.
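To make the idea of "subtle modifications designed to mislead" concrete, here is a toy Python sketch. It is not anything from the GARD program: a hypothetical linear classifier with made-up weights labels a feature vector as a stop sign, and nudging every feature by at most 0.25 against the sign of the weights (the idea behind the well-known fast gradient sign method) flips the decision. The functions `score`, `classify` and `perturb`, and all the numbers, are illustrative assumptions.

```python
# Toy illustration only: hypothetical weights and features, not a real vision model.

def score(weights, bias, x):
    """Linear decision score; positive means 'stop sign' in this toy model."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def classify(weights, bias, x):
    return "stop sign" if score(weights, bias, x) > 0 else "not a stop sign"

def sign(v):
    """Return 1, 0 or -1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def perturb(weights, x, epsilon):
    """Shift each feature by at most epsilon against the gradient of the score.

    For a linear score the gradient with respect to x is the weight vector,
    so stepping by -epsilon * sign(w) lowers the score as much as possible
    while changing no single feature by more than epsilon."""
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]

weights = [0.9, -0.4, 0.7, 0.5, -0.3, 0.6]  # hypothetical learned weights
bias = -0.5
x = [0.6, 0.2, 0.5, 0.4, 0.3, 0.5]          # hypothetical "clean" input features

print(classify(weights, bias, x))           # stop sign
x_adv = perturb(weights, x, epsilon=0.25)
print(classify(weights, bias, x_adv))       # not a stop sign
```

Because the small per-feature shifts add up across every feature, models working on images with thousands of pixels can be swayed by changes far too small for a person to notice.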

“Intel and Georgia Tech are working together to advance the ecosystem’s collective understanding of and ability to mitigate against AI and ML vulnerabilities,” said Jason Martin, principal engineer at Intel Labs. “Through innovative research in coherence techniques, we are collaborating on an approach to enhance object detection and to improve the ability for AI and ML to respond to adversarial attacks.”

The objective of the GARD team will be to help better equip these systems with the tools and enhancements needed to protect against these attacks.

Examples of these potential attacks have been surfacing for years. In March 2019, a report from Tencent’s Keen Security Lab revealed that researchers had used stickers placed on the road to trick Tesla’s Model S into switching lanes into oncoming traffic. In 2017, researchers showed that a 3D-printed turtle with a specially designed shell pattern could trick Google’s AI image recognition algorithm into classifying it as a rifle.

Since then, researchers have continued to examine ways to trick autonomous vehicles with modified road signs. In one such attempt, a single piece of black tape fooled a semi-autonomous car’s camera into reading a speed limit sign as much higher than it actually was.

The problem also extends to other, as-yet-unknown flaws in AI interpretation software, such as the one that contributed to the death of Elaine Herzberg, who was killed by an Uber self-driving car as she crossed Mill Avenue in Tempe, Arizona, in March 2018. The car’s onboard sensors detected her about six seconds before the vehicle struck her, and investigators later identified a flaw that made it difficult for the AI to identify people outside of crosswalks.

The vision of GARD is to take previous and current research into flaws and exploits that affect AI and ML computer vision systems and use it to establish a theoretical foundation that will help identify system vulnerabilities and characterize edge cases, enhancing robustness against errors and attacks.

During the first phase of GARD, Intel and Georgia Tech will enhance object recognition using spatial, temporal and semantic coherence for both still images and videos. That means the AI and ML systems will be trained with extra context about what can be expected in any given situation and designed to flag or reject unlikely image scenarios. The idea is to give AI a better sense of “judgment” by drawing on more factors from its environment to explain what it sees.
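The announcement does not say how GARD will implement temporal coherence. As a rough illustration only, the Python sketch below assumes a per-frame classifier has already labeled each video frame and flags any frame whose label contradicts a clear majority of its neighboring frames. The function `flag_incoherent_frames`, its `window` parameter and the sample labels are hypothetical.

```python
from collections import Counter

def flag_incoherent_frames(labels, window=2):
    """Return indices of frames whose label disagrees with a strict majority
    of the labels in the surrounding frames (up to `window` on each side).

    Hypothetical helper: a sudden one-frame change of label in otherwise
    steady video is treated as suspicious rather than trusted."""
    flagged = []
    for i, label in enumerate(labels):
        lo = max(0, i - window)
        hi = min(len(labels), i + window + 1)
        neighbors = labels[lo:i] + labels[i + 1:hi]
        if not neighbors:
            continue  # a single frame has no temporal context to check
        majority, count = Counter(neighbors).most_common(1)[0]
        # Flag only when the neighbors clearly agree on a different label.
        if majority != label and count > len(neighbors) / 2:
            flagged.append(i)
    return flagged

frames = ["stop sign", "stop sign", "speed limit sign", "stop sign", "stop sign"]
print(flag_incoherent_frames(frames))  # [2]
```

Requiring a strict majority keeps the check from flagging frames at the edges of the clip, where only one or two neighbors are available and a tie says little.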

For example, an attacker could attempt to fool an AI into thinking that a stop sign is not a stop sign by altering its appearance slightly. For a human, the stop sign would retain its red color and octagonal shape, and even if the word “STOP” were changed or obliterated, most drivers would still stop. An AI, however, might misinterpret the modified sign and keep going. With extra contextual awareness, such as training in recognizing an intersection and the shape and color of the sign, the AI could instead reject the misclassification as a human driver would.
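One way to picture that contextual check is a rule that compares the classifier’s label against independent cues. The Python sketch below is a simplified assumption, not Intel’s or Georgia Tech’s design: the `Observation` fields, the `EXPECTED` table of cues and the `matches` and `decide` functions are all hypothetical, and the shape, color and intersection cues are assumed to come from separate detectors or map data.

```python
from dataclasses import dataclass

@dataclass
class Observation:
    predicted_label: str   # label from the (possibly fooled) image classifier
    shape: str             # hypothetical output of a separate shape detector
    color: str             # hypothetical dominant-color estimate
    at_intersection: bool  # hypothetical cue from map data or scene context

# Hypothetical prior knowledge: the cues each sign class is expected to show.
EXPECTED = {
    "stop sign": {"shape": "octagon", "color": "red", "at_intersection": True},
    "speed limit sign": {"shape": "rectangle", "color": "white"},
}

def matches(label, obs):
    """True if every contextual cue expected for `label` is present in `obs`."""
    return all(getattr(obs, cue) == value for cue, value in EXPECTED[label].items())

def decide(obs):
    """Accept the classifier's label if the context supports it; otherwise
    fall back to the single class the context does support, if any."""
    if obs.predicted_label not in EXPECTED or matches(obs.predicted_label, obs):
        return obs.predicted_label
    alternatives = [label for label in EXPECTED if matches(label, obs)]
    return alternatives[0] if len(alternatives) == 1 else "uncertain"

fooled = Observation("speed limit sign", "octagon", "red", True)
print(decide(fooled))  # stop sign
```

Here a sign the classifier calls a speed limit sign, but which is red, octagonal and at an intersection, is overridden as a stop sign; when no single class fits the cues, the sketch returns "uncertain" rather than guessing.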

Overall, the work of Intel and Georgia Tech is intended to lead to better image recognition systems that will increase safety for autonomous vehicles and better classification of objects for ML systems across numerous industries.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.