INFRA

Nvidia Corp. today launched a new, high-performance graphics processing unit for artificial intelligence training and inference based on its new Ampere architecture.

The new chip, called the Nvidia A100, delivers a 20-times performance boost over its predecessor, the Volta GPU, making it ideal for AI, data analytics, scientific computing and cloud graphics workloads, the company said.

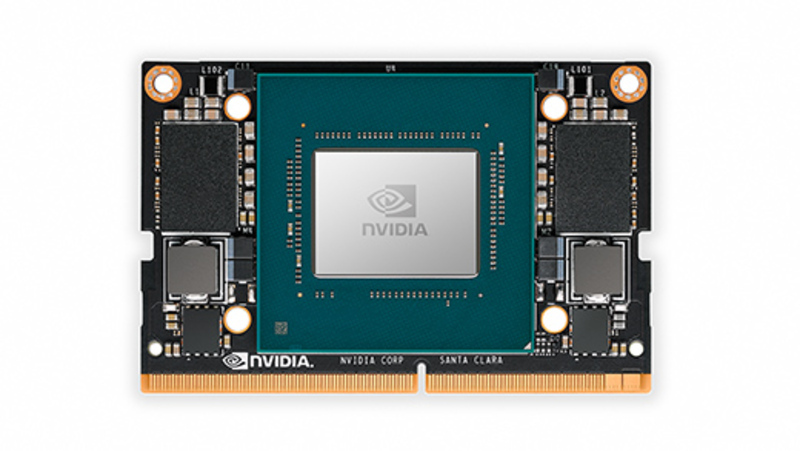

The Nvidia A100 (pictured) was announced at the company’s annual GPU Technology Conference online today, alongside a new AI system, the Nvidia DGX A100. The company is also expanding its EGX Edge AI platform ecosystem with two new products, the EGX A100 converged accelerator and the EGX Jetson Xavier NX micro-edge server, giving customers more options based on their performance and cost requirements.

Paresh Kharya, director of product management for data center and cloud platforms, said during a press briefing that customers nowadays require 3,000 times the compute performance of the company’s original Volta AI architecture to train the largest AI models. But those customers also need varying amounts of compute performance to power the different types of AI-powered interactions, he said.

“Because of immense diversity of software, data center hardware has become fragmented,” Kharya said. “It’s impossible to optimize this kind of data center for high efficiency.”

Because of that, Nvidia has decided to reimagine the GPU with its new A100, Kharya said. The new chip not only boosts performance massively, but also unifies AI training and inference acceleration within a single architecture. It also enables massive data center scalability, including scale-up capabilities for AI training and data analytics, and scale-out for AI inference.

The A100 Ampere chip is the largest GPU Nvidia has ever made, with 54 billion transistors, the company said. It packs third-generation tensor cores and features acceleration for sparse matrix operations, which are especially useful for AI inference and training. Each GPU can also be segmented into several instances for different inference tasks, and Nvidia’s NVLink interconnect technology makes it possible to combine multiple A100 GPUs for larger AI training workloads, Kharya said.
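To make the sparse matrix acceleration mentioned above more concrete, here is a minimal sketch of structured pruning. The article does not describe the sparsity scheme; the pattern used here, keeping the two largest-magnitude values in every group of four weights, is the "2:4" pattern Nvidia has documented for Ampere's tensor cores. The function name `prune_2_of_4` and the sample weights are hypothetical, and the code only illustrates the pattern on the CPU rather than calling any Nvidia API.

```python
def prune_2_of_4(row):
    """Zero the two smallest-magnitude values in each group of four.

    Illustrative only: this mimics the 2:4 structured-sparsity pattern,
    it does not run on or talk to a GPU.
    """
    if len(row) % 4 != 0:
        raise ValueError("row length must be a multiple of 4")

    pruned = []
    for start in range(0, len(row), 4):
        group = row[start:start + 4]
        # Positions of the two largest-magnitude values in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(v if i in keep else 0.0 for i, v in enumerate(group))
    return pruned


# Hypothetical weights, chosen only to show the pattern.
weights = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
print(prune_2_of_4(weights))
```

Because exactly half of each group is zero, hardware that understands the pattern can skip those multiplications, which is where the claimed speedup for sparse workloads would come from.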

The A100 GPU is shipping now and will make its debut in the new Nvidia DGX A100, a third-generation integrated AI system that was also launched today. That system incorporates eight A100 GPUs, providing 320 gigabytes of memory in total, making it the world’s most advanced AI system, the company said. It can deliver up to 5 petaflops of AI performance, Nvidia said, effectively consolidating the power and capabilities of an entire data center into a single, flexible platform.

The DGX A100 platform also can handle multiple, smaller AI workloads, as it can be partitioned into 56 instances thanks to the A100’s multi-instance feature, officials said.
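The DGX A100 figures quoted above imply some per-GPU numbers that the article does not state directly. The short sketch below derives them, assuming memory and instances are split evenly across the eight GPUs; the helper `dgx_totals` is hypothetical and exists only to show how the totals scale with the number of systems.

```python
# Figures quoted in the article for one DGX A100 system.
GPUS_PER_SYSTEM = 8
TOTAL_MEMORY_GB = 320
TOTAL_INSTANCES = 56

# Derived values, assuming an even split across GPUs (an assumption,
# not something the article states).
memory_per_gpu_gb = TOTAL_MEMORY_GB // GPUS_PER_SYSTEM
instances_per_gpu = TOTAL_INSTANCES // GPUS_PER_SYSTEM


def dgx_totals(num_systems):
    """Return aggregate GPU count, memory and instance count for N systems."""
    return {
        "gpus": num_systems * GPUS_PER_SYSTEM,
        "memory_gb": num_systems * TOTAL_MEMORY_GB,
        "instances": num_systems * TOTAL_INSTANCES,
    }


print(memory_per_gpu_gb, instances_per_gpu)
print(dgx_totals(4))
```

Under that assumption, each A100 carries 40 gigabytes of memory and can be split into seven instances, which is how eight GPUs reach the 56 instances cited by officials.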

“This is the first time we’ve accelerated the workloads of data center AI training and inference and data analytics,” Nvidia’s Chief Executive Jensen Huang told SiliconANGLE in a press call. “I expect DGX A100 to be in every single cloud. The future of computing is really data center scale.”

Nvidia said one of the first customers to use the $200,000 DGX A100 platform is the Department of Energy’s Argonne National Laboratory, which is hoping the improved AI and computing power will help researchers better understand and fight COVID-19.

Also adopting the platform is Oracle Corp., which said the new GPUs will soon be available on its Oracle Cloud Infrastructure platform for high-performance computing workloads such as oil exploration and DNA sequencing.

The A100 GPU also features in Nvidia’s new EGX A100 converged accelerator, which will go on sale later this year and enable real-time processing and protection of streaming data from edge sensors. As for the EGX Jetson Xavier NX micro-edge server, Nvidia claims it is the smallest, most powerful AI supercomputer for microservers ever made. It packs the power of an Nvidia Xavier system-on-a-chip into a single, credit card-sized module, and is designed to stream data from multiple high-resolution sensors, such as security cameras in a convenience store, the company said.

“The fusion of IoT and AI has launched the ‘smart everything’ revolution,” Huang said in a statement. “Large industries can now offer intelligent connected products and services like the phone industry has with the smartphone. NVIDIA’s EGX Edge AI platform transforms a standard server into a mini, cloud-native, secure, AI data center. With our AI application frameworks, companies can build AI services ranging from smart retail to robotic factories to automated call centers.”

Nvidia also announced that the automaker BMW Group had adopted its new Nvidia Isaac robotics platform to incorporate AI robots at its automotive factories. The new platform is also powered by Nvidia’s Ampere A100 GPUs, and BMW will use it to improve the flow of logistics in its factories so it can build custom-configured cars more efficiently and rapidly. The plan is to deploy the new system at BMW’s factories worldwide, the company said.

“BMW Group’s use of NVIDIA’s Isaac robotics platform to reimagine their factory is revolutionary,” Huang said. “It’s leading the way to the era of automated factories, harnessing breakthroughs in AI and robotics technologies to create the next level of highly customizable, just-in-time, just-in-sequence manufacturing.”

The Nvidia Isaac robotics platform is powered by the DGX AI System, and also makes use of Nvidia’s Quadro ray-tracing GPUs to render synthetic machine parts in extremely accurate detail to enhance robotics training.

Nvidia also announced a key performance breakthrough with its Nvidia Clara healthcare platform, along with a number of new partnerships and capabilities that it says will help the medical community better track, test and treat COVID-19.

On the performance side, Nvidia said its Clara Parabricks computational genomics software has achieved a new speed record by analyzing the entire human genome DNA sequence in under 20 minutes. The company also announced the availability of new AI models it developed in partnership with the National Institutes of Health to help researchers study COVID-19 chest CT scans, and build new tools for measuring and detecting infections. The new AI models were built using the Nvidia Clara application framework for medical imaging, and are available now in the latest release of Clara Imaging at the NGC software hub.

The company also found time to announce a new Clara Guardian application framework, which uses intelligent video analytics and automatic speech recognition technologies to enable medical workers to perform vital sign monitoring while limiting their exposure to infected patients.

With reporting from Robert Hof
