INFRA

Heads up, Intel Corp.: The future of enterprise computing is taking shape, and it doesn’t necessarily have an x86 processor at its core.

That’s the conclusion of David Floyer, chief technology officer at SiliconANGLE sister research firm Wikibon, in an extensive new analysis. Floyer says processors based on the reduced-instruction-set Arm designs that are ubiquitous in mobile phones and tablets will take on an increasingly large volume of enterprise workloads and power 72% of new enterprise servers by the end of this decade.

Unlike x86 chips, which are designed and manufactured almost exclusively by Intel and Advanced Micro Devices Inc., Arm processors are produced by more than a dozen semiconductor companies based upon designs licensed from Arm Holdings Plc, a U.K.-based subsidiary of Japan’s SoftBank Group Corp. Arm designs have also been adapted by the likes of Amazon Web Services Inc. and Google LLC as well as consumer mobile phone producers such as Apple Inc. and even automotive manufacturers such as Tesla Motors Inc.

As a result, “Arm-based processors already account for 10 times the number of wafers made by the worldwide fabs, compared to x86,” Floyer writes. That has not only brought the cost of Arm-based processors down but enabled new designs to flourish.

“The performance of Arm-led systems now equals or exceeds traditional x86 systems,” Floyer writes. He expects Arm processors and their ecosystems to “dominate the enterprise heterogeneous compute market segment over the next decade.”

Heterogeneous computing is a relatively new form of system design that combines more than one kind of processor on a chip. These can include conventional central processing units, graphics processing units, application-specific integrated circuits and field-programmable gate arrays, as well as a new processor type called a neural processing unit that’s specially designed for machine learning.

Mobile device manufacturers have been using heterogeneous computing hardware in their products for several years, but data centers have been dominated by traditional complex instruction-set chip architectures that are optimized to process serial tasks at high speed. All that is about to change, though, thanks to the rise of edge computing, Floyer asserts.

Edge architectures place much of the intelligence at the far reaches of the network where data is collected. Processing is mostly done in real time and only a small amount of data typically traverses the network to a central cloud. Self-driving cars are a popular example of an edge network: They pack processors into the vehicle to make split-second decisions and pass only summary or exception data over the network.

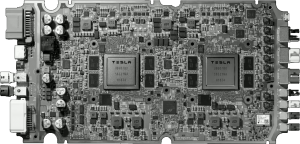

Tesla’s self-driving onboard computer (Photo: WikiChip)

Such systems are based on matrix workloads, a term Wikibon coined to describe applications that involve processing enormous amounts of real-time data, such as in the autonomous vehicle example. Matrix workloads are processed in parallel by necessity, making them ill-suited to serial-oriented traditional computing architectures.

Arm-based heterogeneous computer architectures, however, are a good fit. They’re based on flexible, low-latency, high-bandwidth connections between processors and limited amounts of intermediate storage. Instead of conventional dynamic random-access memory, they use static random-access memory, which is pricier than DRAM but much faster and consumes up to 99% less power. Because matrix workloads process information in small batches and throw much of it away, SRAM’s cost and capacity limitations aren’t a significant issue.

But applications of Arm architectures will be much broader than just at the edge of the network, Floyer asserts. In a comparison of matrix and traditional workloads on an Apple Arm-based iPhone 11 versus a personal computer running Intel’s latest Ice Lake processor, Floyer found the Apple architecture was only 5% slower when running a traditional workload but cost more than 70% less. When running a matrix workload, the iPhone performed 50 times faster at 99% less cost. The four-year cost of power of the Arm-based system was also 99% lower.

And Arm is pulling away from x86 thanks to the disaggregation of processor design and manufacturing, Floyer asserts. For example, by using an Arm design, Tesla was able to build an onboard computer capable of processing 1 billion pixels per second from eight cameras as well as streaming data from radar, 12 ultrasonic sensors, GPS and multiple internal vehicle sensors — all in three years. “Without disaggregation, it would have taken more than six years with enormous risks of failure,” Floyer writes.
