Qualcomm Inc. today shared technical details about the Cloud AI 100, an artificial intelligence chip with which it hopes to seize upon the growing enterprise demand for machine learning hardware.

Qualcomm’s entry into the AI chip segment could put more pressure on market leader Nvidia Corp. at a time when it also faces rising competition elsewhere. Notably, Intel Corp. is currently preparing to launch a series of data center graphics cards.

Qualcomm announced the Cloud AI 100 early last year but didn’t provide information about its capabilities. What has changed since, the company says, is that an initial set of units has been successfully manufactured and sent out to early users. The chipmaker expects to start volume shipments in 2021.

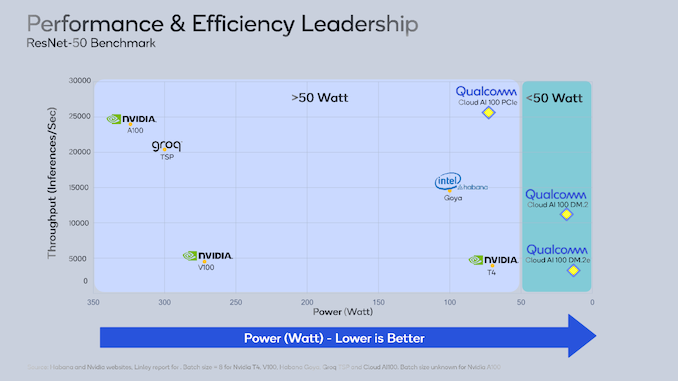

Qualcomm designed the Cloud AI 100 for inference, which means running machine learning models on live data once they’re fully trained. The chip, made using a seven-nanometer process, has 16 cores and can perform up to 400 trillion calculations per second on INT8 values, a data format commonly used by neural networks. Qualcomm says the chip can in some scenarios outperform Nvidia’s most powerful AI graphics card, the A100, as well as products from Intel and others.
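For readers unfamiliar with INT8, the short Python sketch below illustrates one common way floating-point model values are mapped to 8-bit integers, known as symmetric quantization. It is a generic illustration, not Qualcomm’s method: the function names and sample weights are hypothetical, and real toolchains typically use more elaborate schemes.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale factor.

    Hypothetical helper for illustration; not part of any Qualcomm toolchain.
    """
    max_abs = max(abs(v) for v in values)
    # The scale is the float value represented by one integer step.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the INT8 values."""
    return [q * scale for q in quantized]


# Made-up sample weights, chosen only to show the round trip.
weights = [0.42, -1.27, 0.05, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

print(q)         # small integers that each fit in a single byte
print(restored)  # close to, but not exactly, the original weights
```

Each value now occupies one byte instead of the four used by a standard 32-bit float, which is part of why inference chips can process INT8 data so quickly, at the cost of a small rounding error.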

The exact performance provided by the Cloud AI 100 will likely vary among workloads. The chip has 144 megabytes of onboard memory, an unusually large amount that will speed up models by reducing the need to constantly move data to and from external memory. However, it has less bandwidth than Nvidia’s rival A100, which could hold it back in bandwidth-heavy workloads.

The exact number of calculations that users can perform using Qualcomm’s chip will also depend on which version they buy. The company is offering the Cloud AI 100 in three accelerator devices (pictured) that can be attached to servers. The most powerful accelerator can handle up to 400 trillion calculations per second, while the two smaller versions can handle up to 100 trillion and 50 trillion, respectively.
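To put those figures in perspective, the back-of-the-envelope Python sketch below converts each version’s peak rate into a theoretical ceiling on inferences per second. The version labels and the cost of 8 billion operations per inference are assumptions made purely for illustration, and real-world throughput would be lower because chips rarely sustain their peak rate.

```python
# Peak INT8 rates in trillions of calculations per second, as quoted above.
# The dictionary keys are hypothetical labels, not Qualcomm product names.
PEAK_TRILLIONS_PER_SECOND = {"largest": 400, "middle": 100, "smallest": 50}

# Assumed cost of a single inference for a hypothetical model.
OPS_PER_INFERENCE = 8e9


def peak_inferences_per_second(trillions_per_second, ops_per_inference):
    """Upper bound on inferences per second if the chip ran at its peak rate."""
    return trillions_per_second * 1e12 / ops_per_inference


ceilings = {
    name: peak_inferences_per_second(rate, OPS_PER_INFERENCE)
    for name, rate in PEAK_TRILLIONS_PER_SECOND.items()
}

for name, ceiling in ceilings.items():
    print(f"{name}: at most {ceiling:,.0f} inferences per second")
```

Under these assumptions, the ceiling scales linearly with the peak rate, so the largest accelerator tops out at eight times the smallest one.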

Data centers aren’t the only type of environment where Qualcomm hopes to see its new chip deployed. “Imagine a giant retailer that can restock aisles upon aisles of products by using AI to signal that a shelf is empty,” John Kehrli, a senior director of product management at Qualcomm, wrote in a blog post. He listed the industrial sector as another focus area: “A Qualcomm Cloud AI 100-equipped edge box can identify numerous people and objects simultaneously and ensure that protective equipment is being worn at all times.”

San Diego-based Qualcomm makes most of its revenue from handset chips. Smartphones and data centers are a world apart, but the introduction of the Cloud AI 100 doesn’t mark the first time that a firm best known for making consumer device processors has jumped into the AI infrastructure market. The most notable example is Nvidia, which marketed its graphics processing units mainly to video game enthusiasts before expanding its focus to machine learning.

Given how successful Nvidia’s bet on AI has proven, it’s no surprise to see the rising interest in this segment from Qualcomm and other players.
