Google LLC announced a range of improvements for its Maps service at its virtual I/O developer conference today, including the use of artificial intelligence to improve traffic routing and pedestrian directions.

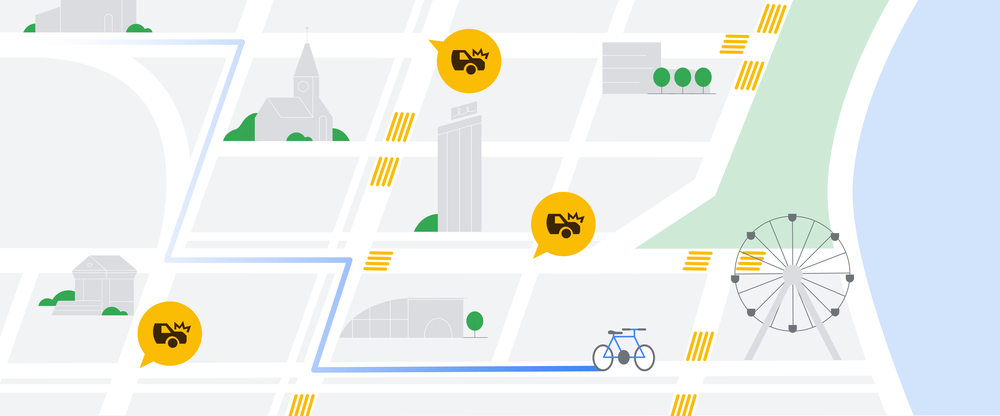

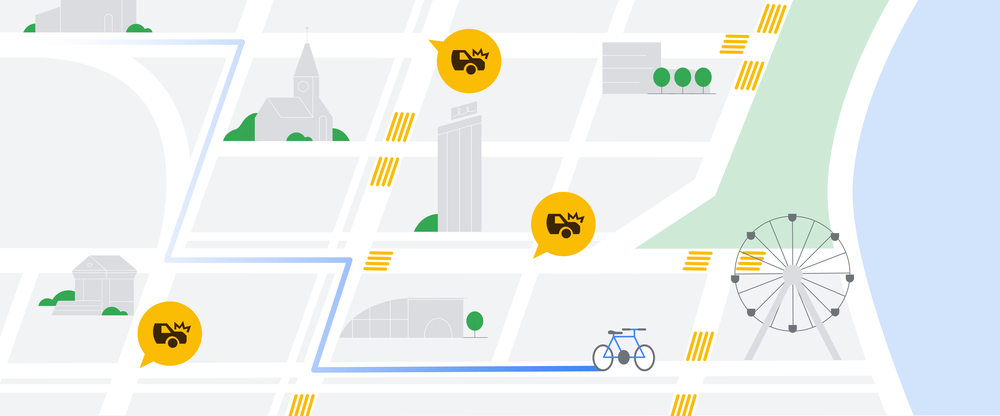

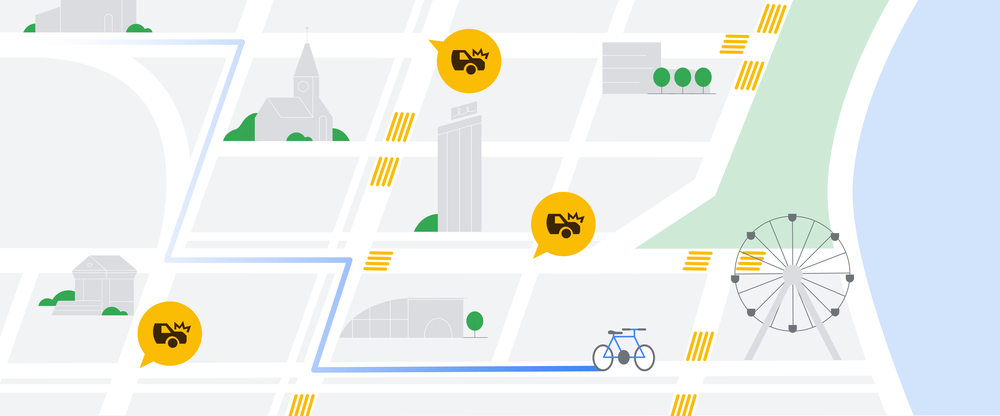

The routing adjustments involve applying AI to teach Maps to identify and forecast when drivers are likely to brake hard. Google uses data on “hard-braking events,” moments when drivers decelerate sharply that are known indicators of crash likelihood, to suggest alternative routes when they are available.

Google claims that using this data could eliminate more than 100 million hard-braking events each year on routes driven with Google Maps.

The data used by the AI comes from two sources: smartphones using Google Maps and in-car devices using Android Auto. The smartphone data provides the bulk of available data for analysis but has one issue: Phones are often not in a fixed position.

“Mobile phone sensors can determine deceleration along a route, but this data is highly prone to false alarms because your phone can move independently of your car,” Russell Dicker, director of product for Google Maps, said in a blog post. “This is what makes it hard for our systems to decipher you tossing your phone into the cupholder or accidentally dropping it on the floor from an actual hard-braking moment.”

Although Android Auto data is less plentiful, it is more reliable, so Google uses it to train machine learning models that are then applied to the phone data to tell actual deceleration apart from false alarms.
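The false-alarm problem described above can be illustrated with a simple rule. The sketch below is hypothetical and is not Google's method, which uses trained models. It assumes each sample has a GPS-derived vehicle speed and a deceleration reading from the phone's accelerometer. The 3.9 m/s² threshold (about 0.4 g) and the 2.0 m/s² agreement tolerance are illustrative assumptions. A phone spike counts as a hard brake only when the vehicle's speed actually dropped at a similar rate. Otherwise, the phone probably moved on its own.

```python
from dataclasses import dataclass
from typing import List

# Assumed threshold for a "hard brake" (~0.4 g); illustrative only.
HARD_BRAKE_MPS2 = 3.9


@dataclass
class Sample:
    t: float            # seconds since trip start
    speed: float        # vehicle speed from GPS, m/s
    phone_decel: float  # phone accelerometer deceleration, m/s^2 (positive = slowing)


def hard_brake_events(samples: List[Sample],
                      threshold: float = HARD_BRAKE_MPS2,
                      tolerance: float = 2.0) -> List[float]:
    """Return timestamps of hard-brake events confirmed by GPS speed.

    A phone spike is accepted only if the GPS-derived deceleration agrees
    with it within `tolerance`; otherwise the phone likely moved
    independently of the car (e.g. dropped or tossed) and is ignored.
    """
    events = []
    for prev, cur in zip(samples, samples[1:]):
        dt = cur.t - prev.t
        if dt <= 0:
            continue  # skip out-of-order or duplicate timestamps
        gps_decel = (prev.speed - cur.speed) / dt
        if cur.phone_decel >= threshold and abs(cur.phone_decel - gps_decel) <= tolerance:
            events.append(cur.t)
    return events


if __name__ == "__main__":
    trip = [
        Sample(0.0, 20.0, 0.0),
        Sample(1.0, 15.0, 5.0),  # real hard brake: speed fell 5 m/s in 1 s
        Sample(2.0, 15.0, 9.0),  # spike with no speed change: phone moved
        Sample(3.0, 14.5, 0.5),
    ]
    print(hard_brake_events(trip))  # [1.0]
```

In a learned system, the fixed threshold and tolerance would be replaced by a classifier trained on labels from the more reliable in-car data.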

On the pedestrian side, Google uses AI to deliver more detailed street maps that show accurate road widths and where sidewalks, crosswalks and pedestrian islands are located, making streets easier to navigate. Google launched the feature in August with street maps for London, New York and San Francisco, and it is now expanding to 50 more cities.

The challenge here is that there’s often no consistency between two cities, even when it comes to pedestrian crossings. Using machine learning models, Google is treating mapmaking “like baking a cake — one layer at a time.” Along with existing data, the AI uses Street View, satellite and aerial images to index not only roads, addresses and buildings but also all objects in a scene.

Other Google Maps features announced at I/O include an enhanced Live View, an augmented reality feature that adds helpful details about shops and restaurants around a user. Google Maps has also made its live “busyness” information more granular, including the ability to spot busy neighborhoods at a glance.

Finally, a new personalization feature tailors Google Maps to highlight the most relevant places based on the time of day and whether the user is traveling. In one example, a user in New York City at 8 a.m. on a weekday will see coffee shops rather than restaurants, while users away for the weekend will see local landmarks and tourist attractions.
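The example above can be expressed as a toy rule. This is a hypothetical sketch, not how Google Maps implements personalization. The function name, the category labels, and the 6 a.m. to 11 a.m. "morning" window are all assumptions made for illustration.

```python
from datetime import datetime
from typing import List


def suggested_categories(now: datetime, is_traveling: bool) -> List[str]:
    """Hypothetical rule mirroring the article's example.

    Travelers on a weekend see sights; locals on a weekday morning see
    coffee shops; everyone else falls back to restaurants.
    """
    weekend = now.weekday() >= 5  # Monday=0 ... Saturday=5, Sunday=6
    if is_traveling and weekend:
        return ["landmarks", "tourist attractions"]
    if not weekend and 6 <= now.hour < 11:  # assumed "morning" window
        return ["coffee shops"]
    return ["restaurants"]


if __name__ == "__main__":
    # Wednesday, 8 a.m., at home
    print(suggested_categories(datetime(2021, 5, 19, 8, 0), is_traveling=False))
    # Saturday afternoon, away from home
    print(suggested_categories(datetime(2021, 5, 22, 14, 0), is_traveling=True))
```

A production system would likely learn these preferences from behavior rather than hard-code them, but the inputs (time of day, day of week, travel status) match those the article describes.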


Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.