CLOUD

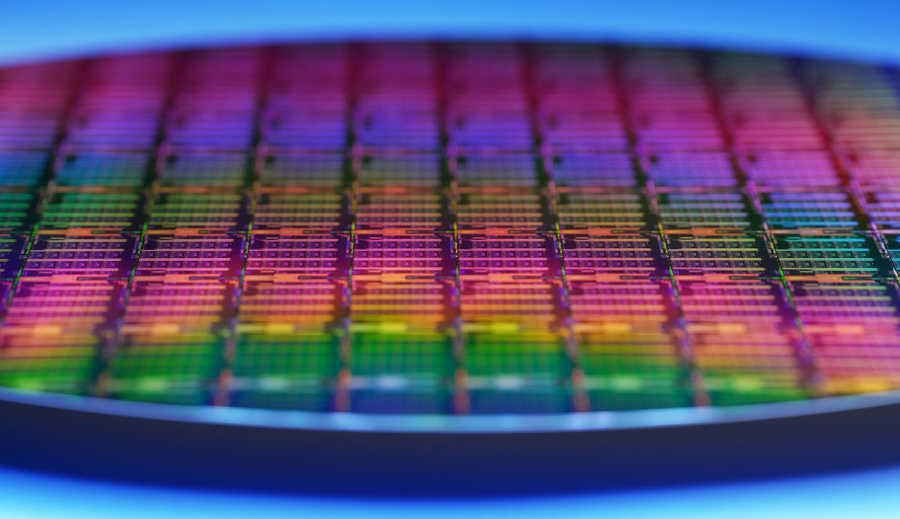

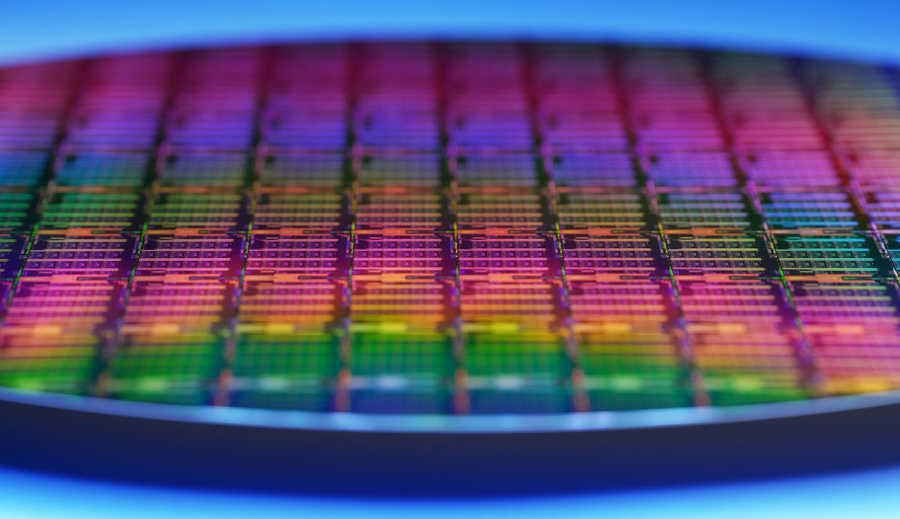

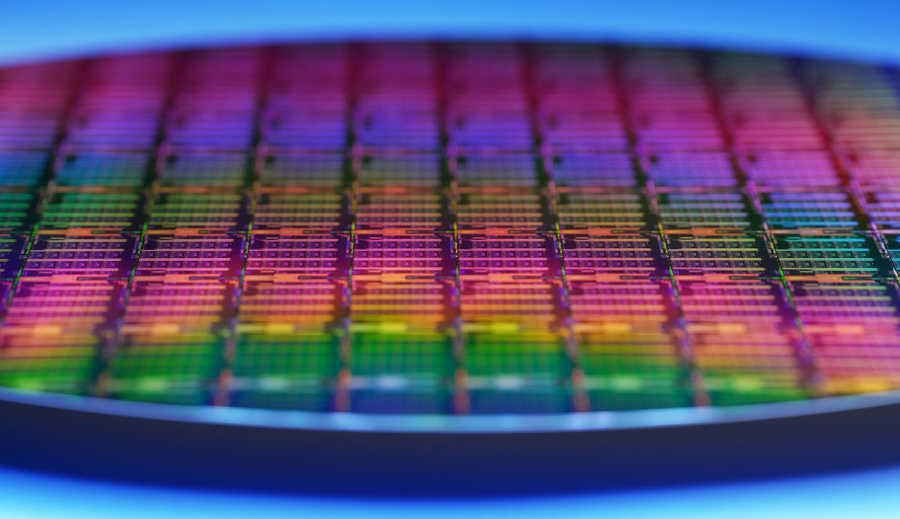

Intel Corp. today detailed its work on a new type of chip, the infrastructure processing unit, that’s aimed at boosting the efficiency of cloud providers’ data centers by offloading computing tasks from their servers’ primary central processing units.

The company says multiple cloud providers have already deployed the technology.

Running an application requires a server not only to carry out computations for the application itself but also to perform a variety of auxiliary functions. The server must coordinate the network over which the application exchanges data with other workloads, manage requests to the storage hardware where business records are kept and perform a variety of other supporting tasks. Those supporting tasks often use up a significant portion of a machine’s total processing capacity.

Intel says its infrastructure processing units, or IPUs, can free up processing capacity spent on such auxiliary computations.

Intel provides the IPUs in the form of a network device that companies can attach to their servers. Once attached, the chips take over auxiliary tasks such as managing storage infrastructure and networking from a server’s central processing unit. The result is that more of the CPU’s clock cycles are available for applications.

The IPU attached to a server can perform the tasks more efficiently than a CPU because it has specialized circuits optimized for low-level infrastructure operations. According to Intel, its IPUs can take over multiple infrastructure functions, including storage virtualization, network virtualization and security. Both the hardware and the software in the chips are programmable, which allows Intel’s tech-savvy hyperscale clients to customize them for their requirements.

The amount of processing power that can be freed up by offloading auxiliary computations from the server’s main CPU is significant. When running a software container application, for instance, the task of sharing data among the application’s subcomponents can by itself take up a big portion of the available processing capacity. Intel today cited research from Google LLC and Facebook Inc. that found such communications overhead uses 22% to 80% of the available CPU cycles.
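To make the cited range concrete, here is a minimal back-of-the-envelope sketch in Python. It is not Intel's methodology: it assumes, purely for illustration, that an IPU absorbs all of the communications overhead, and it uses a hypothetical 64-core server. The function names are likewise made up for this example.

```python
def cores_spent_on_overhead(total_cores: int, overhead_fraction: float) -> float:
    """Return how many cores' worth of cycles go to infrastructure overhead."""
    if not 0.0 <= overhead_fraction < 1.0:
        raise ValueError("overhead_fraction must be in [0, 1)")
    return total_cores * overhead_fraction


def capacity_gain(overhead_fraction: float) -> float:
    """Return application capacity after a full offload relative to before it.

    Before the offload, applications get (1 - overhead_fraction) of the CPU.
    After an idealized full offload (an assumption), they get all of it.
    """
    if not 0.0 <= overhead_fraction < 1.0:
        raise ValueError("overhead_fraction must be in [0, 1)")
    return 1.0 / (1.0 - overhead_fraction)


if __name__ == "__main__":
    TOTAL_CORES = 64  # hypothetical server size, not a figure from Intel

    # 22% and 80% are the two ends of the range cited in the article.
    for overhead in (0.22, 0.80):
        freed = cores_spent_on_overhead(TOTAL_CORES, overhead)
        gain = capacity_gain(overhead)
        print(f"overhead {overhead:.0%}: ~{freed:.1f} cores freed, "
              f"{gain:.2f}x application capacity")
```

Under these assumptions, the low end of the range (22%) works out to roughly 1.28 times the original application capacity, and the high end (80%) to about five times. Real-world gains would depend on how much of the overhead an IPU can actually take over.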

The new IPUs are described as an evolution of Intel’s existing SmartNIC line of network devices, which offer similar features. “The SmartNIC enables us to free up processing cores, scale to much higher bandwidths and storage IOPS, add new capabilities after deployment, and provide predictable performance to our cloud customers,” said Andrew Putnam, a principal hardware engineering manager at Microsoft Corp. The tech giant also uses Intel’s new IPUs.

Intel says cloud providers can apply the efficiency benefits provided by the chips in several ways. “Cloud operators can offload infrastructure tasks to the IPU,” explained Guido Appenzeller, chief technology officer of Intel’s Data Platforms Group. “Thanks to the IPU accelerators, it can process these very efficiently. This optimizes performance and the cloud operator can now rent out 100% of the CPU to his guest, which also helps to maximize revenue.”

Another benefit is the possibility of providing more infrastructure customization options to a cloud provider’s customers. By moving the sensitive software that controls tasks such as managing network and storage from a server’s CPU to the IPU, a cloud provider can afford to give customers greater access to the CPU without introducing additional risks. Greater access to cloud hardware, in turn, could enable enterprises to customize their infrastructure-as-a-service environments to a greater degree than is possible today.

“The IPU allows the separation of functions so the guest can fully control the CPU,” Appenzeller said. “So if I’m a guest, I can bring my own hypervisor, but the cloud [provider] is still fully in control of the infrastructure and it can sandbox functions such as networking, security and storage. It’s a big step forward, a little like having a hypervisor in hardware that provides the infrastructure for your customers’ workloads.”

Intel’s first IPUs, which have been deployed by Microsoft and other cloud operators, are based on field-programmable gate arrays. FPGAs are partly customizable chips that semiconductor engineers can optimize at the hardware level for a specific set of tasks. That allows the chips to run those tasks more efficiently than general-purpose processors that don’t have such optimizations.

Intel is also testing a newer IPU design that uses an application-specific integrated circuit rather than an FPGA. ASICs are chips created from scratch for specific tasks, which theoretically makes it possible to optimize the designs to an even greater degree than with FPGAs.

“The IPU is a new category of technologies and is one of the strategic pillars of our cloud strategy,” Appenzeller said. “It expands upon our SmartNIC capabilities and is designed to address the complexity and inefficiencies in the modern data center.”

Chips that offload low-level infrastructure tasks from a server’s main processor to increase efficiency are also a pillar of rival Nvidia Corp.’s cloud strategy. Nvidia offers data processing units under the BlueField brand that, like Intel’s IPU, promise to accelerate storage, network and security tasks. The newest addition to the product line, the BlueField-3, is described as capable of performing computations that would otherwise require up to 300 CPU cores.

Indeed, Intel is hardly alone with its IPU, Patrick Moorhead, president and principal analyst at Moor Insights & Strategy, wrote in a Forbes article, pointing to Marvell Semiconductor Inc. and even Amazon Web Services Inc.’s acquisition of Annapurna years ago.

“Intel knows the data center and says it’s the only IPU built in collaboration with hyperscale cloud partners,” he said. “This would mean that Intel would be able to actively innovate and deliver a product that is already addressing real-world problems.”
