Nvidia brings highly realistic, walking, talking AI avatars to its Omniverse design tool

Nvidia Corp. is expanding Nvidia Omniverse, its hyper-realistic graphics collaboration platform and ecosystem, with a new tool for generating interactive artificial intelligence avatars.

The company also announced a new engine that generates physically simulated synthetic data for training deep neural networks. The new capabilities, announced today at Nvidia GTC 2021, are designed to broaden the usefulness of the Omniverse platform and enable the creation of a new breed of AI models.

Nvidia Omniverse is a real-time collaboration tool that’s designed to bring together graphics artists, designers and engineers to create realistic, complex simulations for a variety of purposes. Industry professionals from aerospace, architecture, construction, media and entertainment, manufacturing and gaming all use the software.

The platform has been available in beta for a couple of years, and today it finally becomes generally available.

It’s launching with the enticing new Nvidia Omniverse Avatar capability, which is said to combine Nvidia’s speech AI, computer vision, natural language understanding, recommendation engine and simulation technologies. Developers can create hyper-realistic, interactive characters with ray-traced 3D graphics that can see, speak, converse on a wide range of topics and understand what people say to them.

Nvidia says it sees Omniverse Avatar as a platform for creating a new generation of AI assistants that can be customized for almost any industry. For instance, users might create avatars that take orders at a restaurant, help people conduct banking transactions, book appointments at a hospital, a dentist's office or even a salon, and perform many other tasks.

“These virtual worlds are going to help the next era of innovations,” Richard Kerris, vice president of the Omniverse Platform, told reporters in a briefing.

Nvidia Chief Executive Jensen Huang highlighted Omniverse Avatar's capabilities during his GTC keynote, showing a video in which two of his colleagues held a real-time conversation with a toy replica of himself (pictured) about topics such as biology and climate science. A second, more practical demo showed a customer service avatar at a restaurant that could see, converse with and understand two human customers as they ordered veggie burgers, french fries and drinks.

Omniverse Avatar also works with Nvidia’s DRIVE Concierge AI platform, as a third demo showed. In it, a digital assistant popped up on the car’s dashboard screen to help the driver choose the most suitable driving mode to reach his destination on time, while also handling a separate request to set a reminder when the vehicle’s range drops below 100 miles.

“The dawn of intelligent virtual assistants has arrived,” Huang said. “Omniverse Avatar combines Nvidia’s foundational graphics, simulation and AI technologies to make some of the most complex real-time applications ever created. The use cases of collaborative robots and virtual assistants are incredible and far reaching.”

Enhancing AI models with synthetic data

Omniverse Avatar will undoubtedly make it easier to build more powerful chatbots, but developers building other types of AI models are often held back by a lack of the data needed to train them. Nvidia hopes to fix that with the new Omniverse Replicator, a tool that generates synthetic data sets that can then be used to train neural networks to perform a range of tasks.

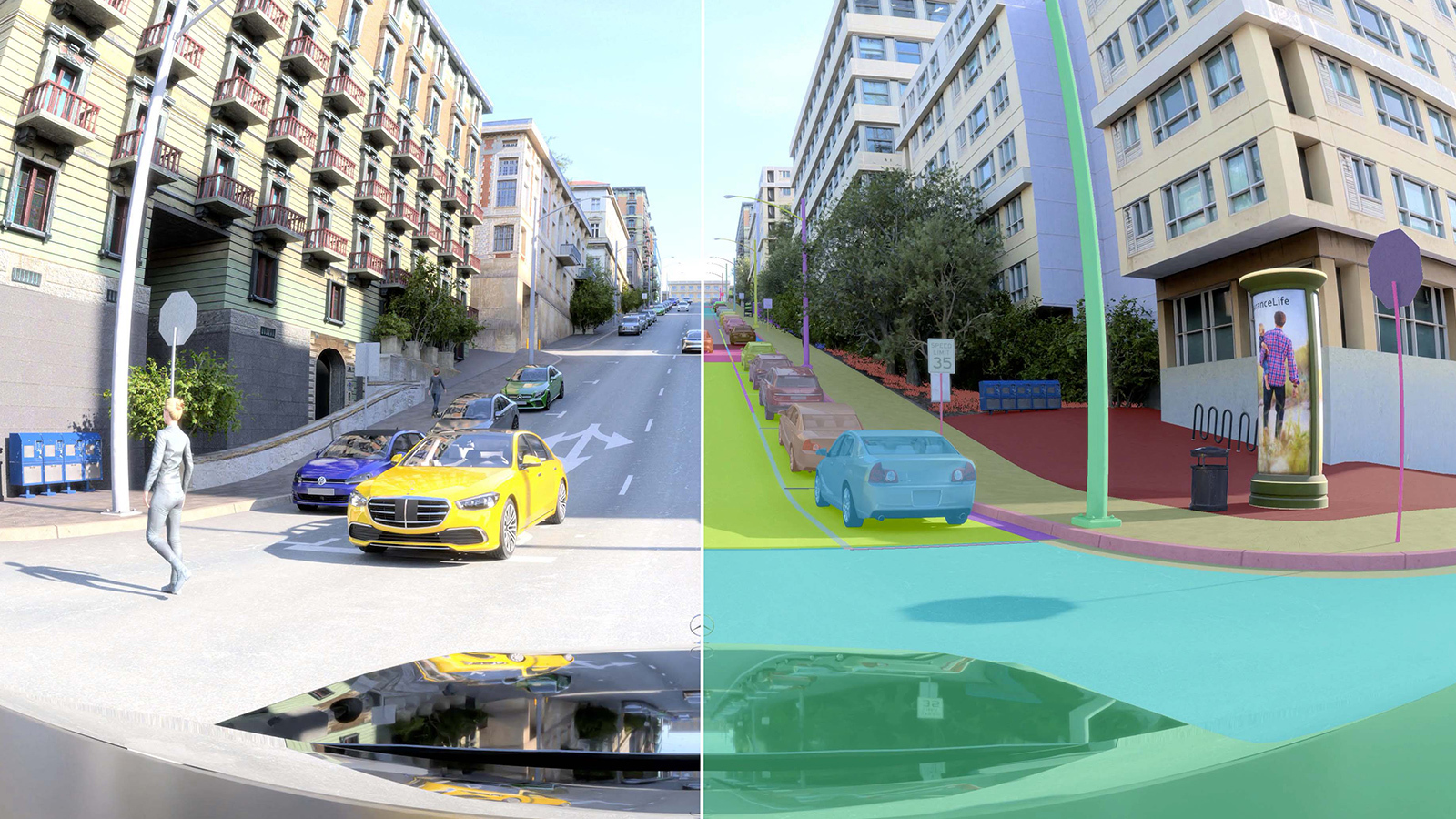

At launch, Omniverse Replicator offers two applications for generating synthetic data, which is annotated information created by computer simulations or algorithms as an alternative to real-world data that may be scarce. They include a replicator for Nvidia DRIVE Sim (pictured), a virtual world used to build digital twins of autonomous vehicles, and another for Nvidia Isaac Sim, a platform for creating digital twins of manipulation robots.

Nvidia said developers can use the replicators to bootstrap new AI models, fill gaps in real-world data and label ground truth in ways that humans cannot. The data generated in these virtual worlds can cover a wide range of scenarios, Nvidia said, including rare or hazardous conditions that would be difficult or unsafe to reproduce in the real world.

The synthetic data will enable autonomous vehicles and robots to master skills that can then be applied in the physical world, Nvidia believes.

Rev Lebaredian, Nvidia’s president of simulation technology and Omniverse engineering, said synthetic data will be essential for the future of AI. “Omniverse Replicator allows us to create diverse, massive, accurate datasets to build high-quality, high-performing and safe AI,” he said. “While we have built two domain-specific data-generation engines ourselves, we can imagine many companies building their own with Omniverse Replicator.”

Today’s announcement wasn’t limited to avatars and synthetic data. Nvidia also announced new features for the platform, including integration with Nvidia CloudXR, an enterprise-class streaming framework. The idea is to let users interactively stream their Omniverse experiences to mobile augmented reality and virtual reality devices, Nvidia said.

Elsewhere, Omniverse VR now supports full-image, real-time ray-traced VR, which means developers can build VR-capable tools on the platform. Omniverse Remote, meanwhile, now provides AR capabilities and virtual cameras, allowing designers to view the assets they create fully ray-traced on iOS and Android devices.

Other new features include Omniverse Farm, which enables design teams to use multiple workstations or servers to provide additional power for jobs like rendering, synthetic data generation and file conversion.

Finally, nondesigners are in for a little treat too. Nvidia said there’s a new app in Omniverse Open Beta called Omniverse Showroom, where nontechnical users can play with tech demos of Omniverse that showcase the platform’s real-time physics and rendering technologies.

With reporting from Robert Hof

Images: Nvidia
