INFRA

Nvidia Corp. announced at its virtual GTC 2022 event today that the first products and services based on its next-generation graphics processing unit, the Nvidia H100 Tensor Core GPU, will roll out next month.

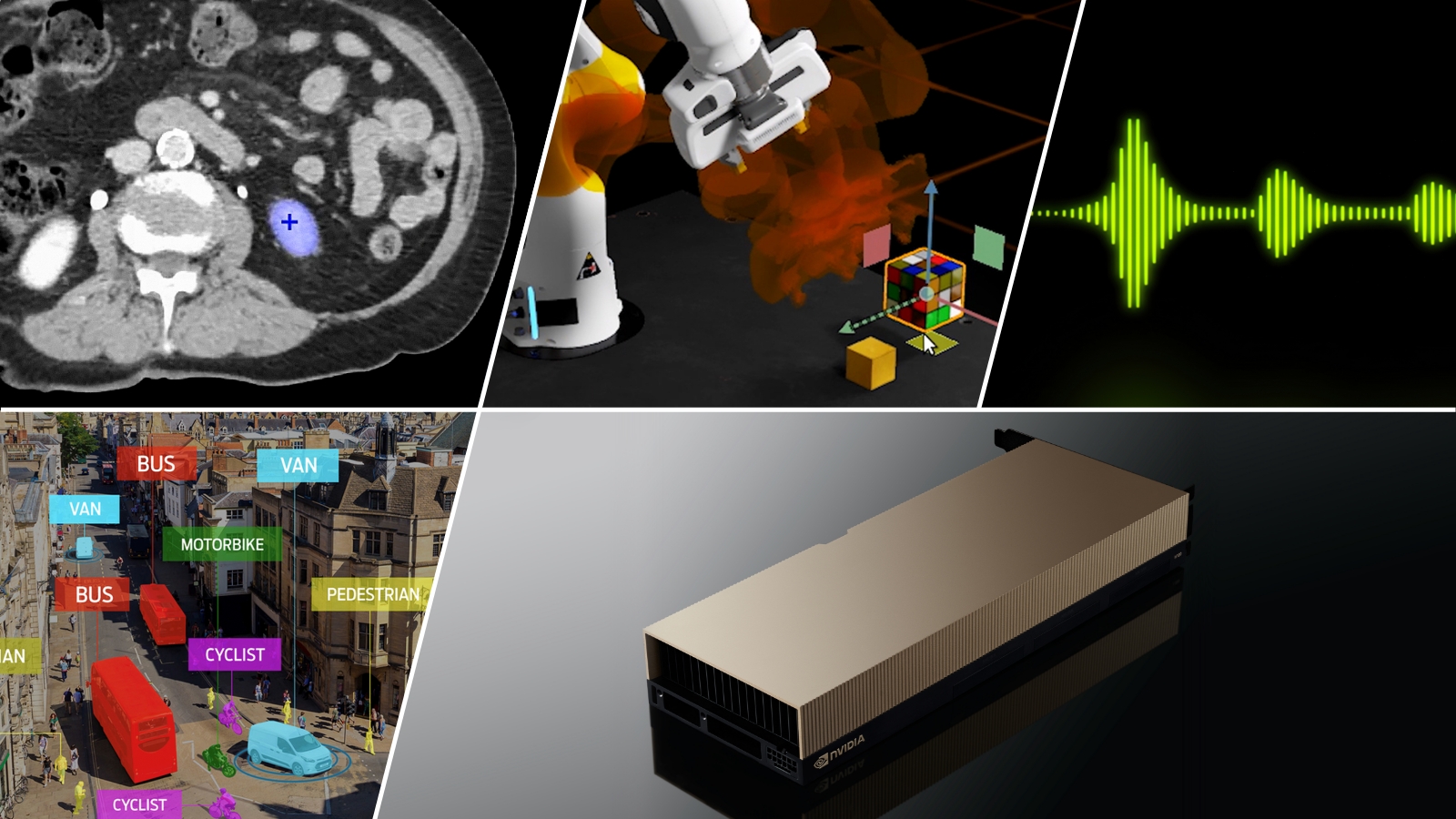

The Nvidia H100 Tensor Core is the most powerful GPU the company has ever made. Now in full production, it’s based on the new Hopper architecture and is packed with more than 80 billion transistors. It also has new features such as a Transformer Engine and a more scalable NVLink interconnect, enabling it to power larger artificial intelligence models, recommendation systems and other kinds of workloads.

When the chip was first announced in March, Nvidia said it was so powerful that just 20 of the chips could theoretically sustain the world’s entire internet traffic. That makes the H100 ideal for the most advanced AI applications, including performing inference on data in real time.

The H100 GPUs are the first to support PCIe Gen5 and they also use HBM3, which means they pack more than 3 terabytes per second of memory bandwidth. However, it’s the Transformer Engine that’s likely to interest many enterprises. It’s said to be able to accelerate Transformer-based natural language processing models by up to six times compared with the previous-generation A100 GPU.

In addition, the H100 GPUs feature a second-generation secure multi-instance GPU technology that makes it possible to partition the chip into seven smaller, fully isolated instances to handle multiple workloads simultaneously. Other capabilities include support for confidential computing, which means encryption of data while it’s being processed, and new DPX instructions to power accelerated dynamic programming.

That’s a technique that’s commonly used in many optimization, data processing and omics algorithms. The H100 GPU can do it 40 times faster than the most advanced central processing units, Nvidia promised.
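To make the idea concrete, here is a minimal sketch of dynamic programming as it appears in omics work: a Smith-Waterman-style local alignment score, in which each cell of a table is computed from three neighboring cells. It runs in plain Python on a CPU and does not use DPX instructions. The function name and the scoring values (match, mismatch, gap) are illustrative assumptions, not anything specified by Nvidia.

```python
def local_alignment_score(a: str, b: str, match: int = 2,
                          mismatch: int = -1, gap: int = -1) -> int:
    """Return the best local alignment score between sequences a and b.

    Uses a linear gap penalty. The scoring values are assumed for
    illustration only.
    """
    prev = [0] * (len(b) + 1)  # previous row of the scoring table
    best = 0
    for i in range(1, len(a) + 1):
        curr = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            # Extend the alignment diagonally (match or mismatch).
            diag = prev[j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            # Each cell depends only on three already-computed neighbors;
            # the floor of 0 lets a local alignment restart anywhere.
            curr[j] = max(0, diag, prev[j] + gap, curr[j - 1] + gap)
            best = max(best, curr[j])
        prev = curr
    return best


print(local_alignment_score("GATTACA", "TTAC"))  # 8
```

The inner `max` over a handful of small sums is the kind of operation that dominates such algorithms, which is why hardware support for it can matter.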

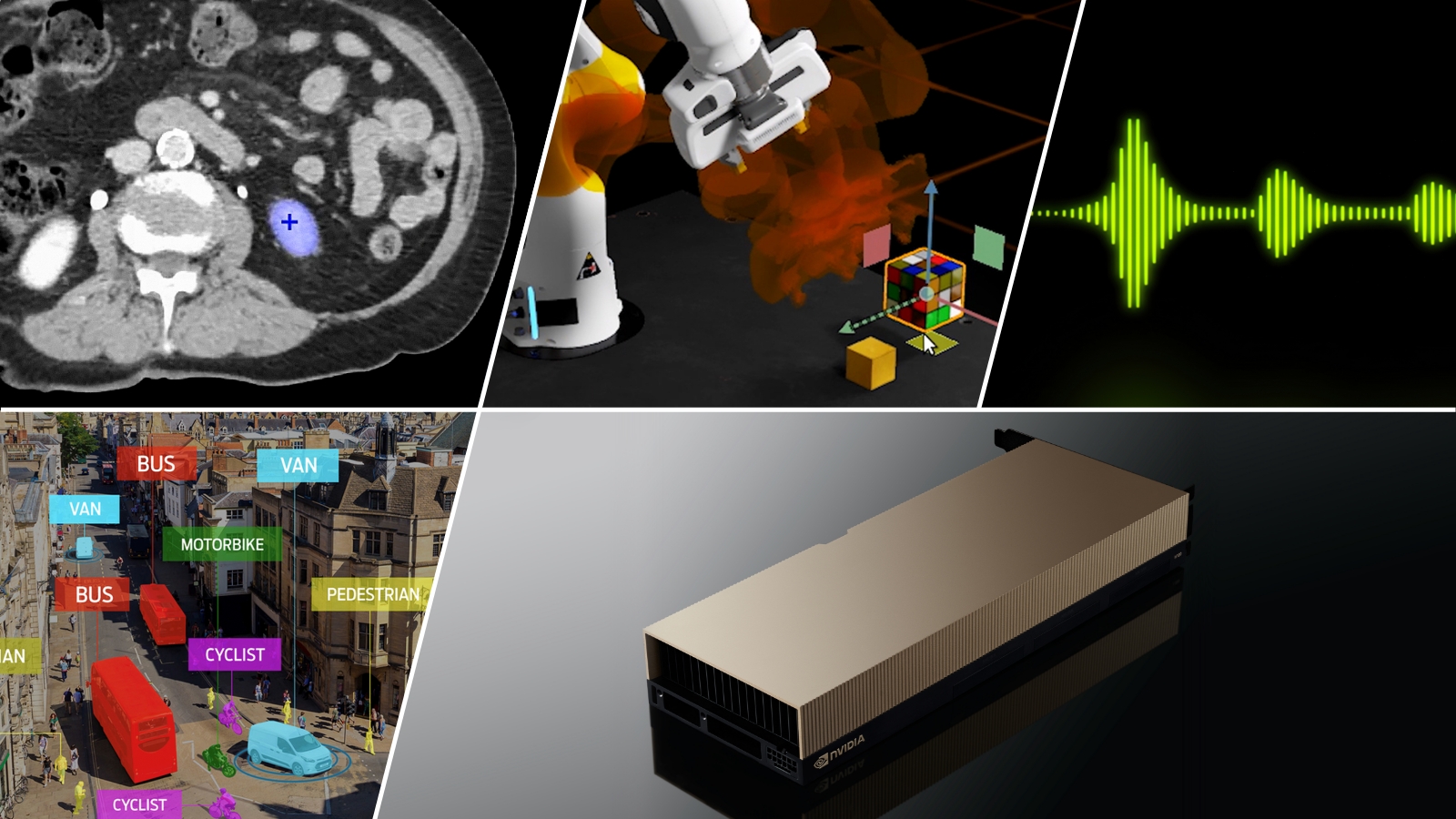

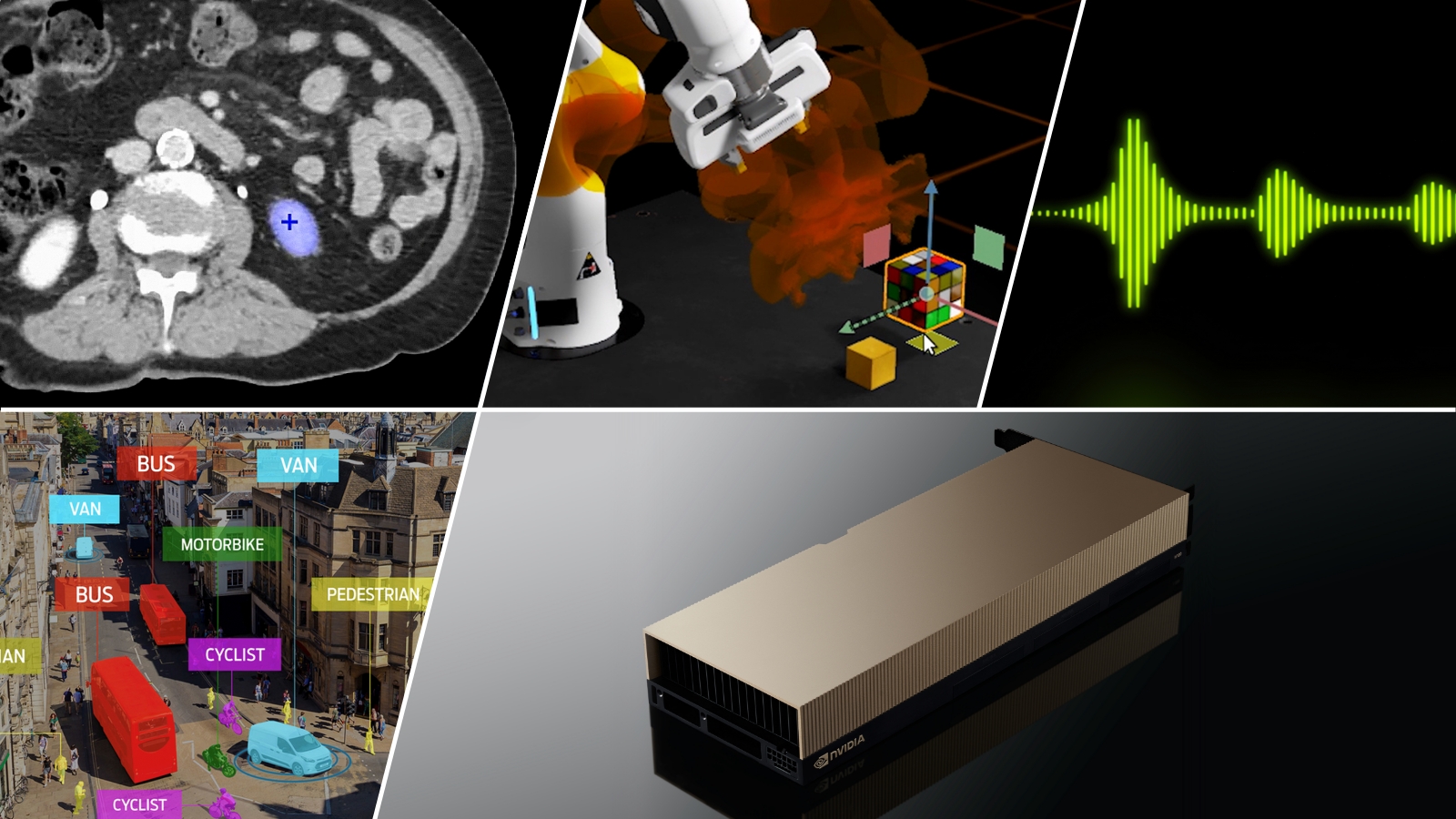

Nvidia founder and Chief Executive Jensen Huang said at GTC that he sees Hopper as “the new engine of AI factories” that will drive significant advances in language-based AI, robotics, healthcare and life sciences. “Hopper’s Transformer Engine boosts performance up to an order of magnitude, putting large scale AI and HPC within reach of companies and researchers,” he said.

One interesting detail is that all new mainstream servers powered by the Nvidia H100 will be sold with a five-year license for Nvidia AI Enterprise. It’s a software suite that’s used to optimize the development and deployment of AI models and provides access to AI frameworks and tools for building AI chatbots, recommendation engines, vision AI and more.

Nvidia said the H100 GPUs will be widely available next month on Dell Technologies Inc.’s latest PowerEdge servers, through the Nvidia LaunchPad service that provides free, hands-on labs for companies to get started with the hardware. Alternatively, customers can order the new Nvidia DGX H100 systems, which come with eight H100 GPUs and provide 32 petaflops of performance at FP8 precision.
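As a rough back-of-the-envelope check on those figures, the sketch below divides the quoted system performance across the eight GPUs and estimates how many DGX H100 systems a given FP8 compute target would take. It assumes each GPU contributes equally and that performance scales linearly across systems, which real workloads rarely achieve. The function names and the 100-petaflop target are hypothetical.

```python
import math

DGX_H100_GPUS = 8            # GPUs per DGX H100 system, per the article
DGX_H100_FP8_PETAFLOPS = 32  # quoted FP8 performance per system


def per_gpu_petaflops(total_petaflops: float, gpu_count: int) -> float:
    """Split a system's quoted peak evenly across its GPUs."""
    return total_petaflops / gpu_count


def systems_needed(target_petaflops: float, per_system_petaflops: float) -> int:
    """Whole systems required to reach a target, assuming linear scaling."""
    return math.ceil(target_petaflops / per_system_petaflops)


print(per_gpu_petaflops(DGX_H100_FP8_PETAFLOPS, DGX_H100_GPUS))  # 4.0
print(systems_needed(100, DGX_H100_FP8_PETAFLOPS))               # 4
```

By this arithmetic, each H100 in a DGX system accounts for about 4 petaflops of the quoted FP8 peak.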

Each DGX system is powered by Nvidia’s Base Command and AI Enterprise software stacks. They enable deployments ranging from a single node to an entire Nvidia DGX SuperPOD for more advanced AI workloads such as large language models.

Moreover, in the coming weeks the H100 GPUs will be available in a variety of third-party server systems sold by companies that include Dell, Atos SE, Cisco Systems Inc., Fujitsu Ltd., Giga-Byte Technology Co. Ltd., Hewlett Packard Enterprise Co., Lenovo Ltd. and Super Micro Computer Inc.

Some of the world’s leading higher education and research institutions will also get their hands on the Nvidia H100 to power their advanced supercomputers. Among them are the Barcelona Supercomputing Center, Los Alamos National Lab, Swiss National Supercomputing Centre, Texas Advanced Computing Center and the University of Tsukuba.

Not far behind will be the public cloud computing giants. Amazon Web Services, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure services will be among the first to deploy H100-based instances early next year, Nvidia said.

“We look forward to enabling the next generation of AI models on the latest H100 GPUs in Microsoft Azure,” said Nidhi Chappell, general manager of Azure AI Infrastructure. “With the advancements in Hopper architecture coupled with our investments in Azure AI supercomputing, we’ll be able to help accelerate the development of AI worldwide.”

Analyst Holger Mueller of Constellation Research Inc. said today’s announcement shows us Nvidia’s desire to become the platform for all enterprise AI operations with its Hopper architecture. “Not only will it be available on all of the leading cloud platforms, but also on-premises platforms,” Mueller said. “This gives companies the option to deploy AI-powered next-generation applications virtually anywhere. Given the power of Nvidia’s chips it’s a very compelling offering as there will be much uncertainty in the next decade over where to operate enterprise workloads.”

Finally, Nvidia said, many of the world’s leading large AI model and deep learning frameworks are currently being optimized for the H100 GPUs, including the company’s own NeMo Megatron framework, plus Microsoft Corp.’s DeepSpeed, Google LLC’s JAX, PyTorch, TensorFlow and XLA.
