AI

AI

AI

AI

AI

AI

Meta Platforms Inc. today debuted Make-A-Video, an internally developed artificial intelligence system that can generate brief videos based on text prompts.

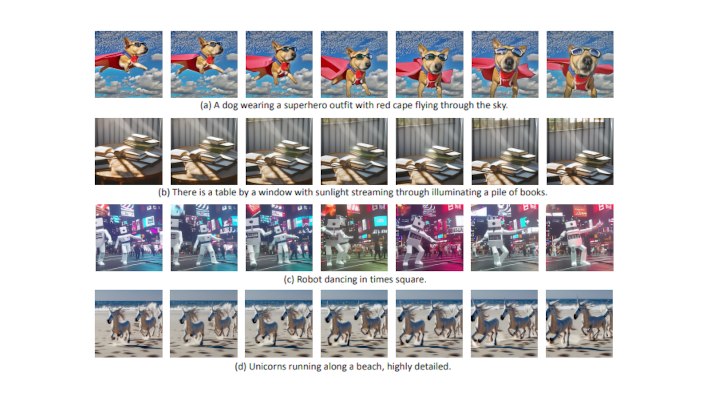

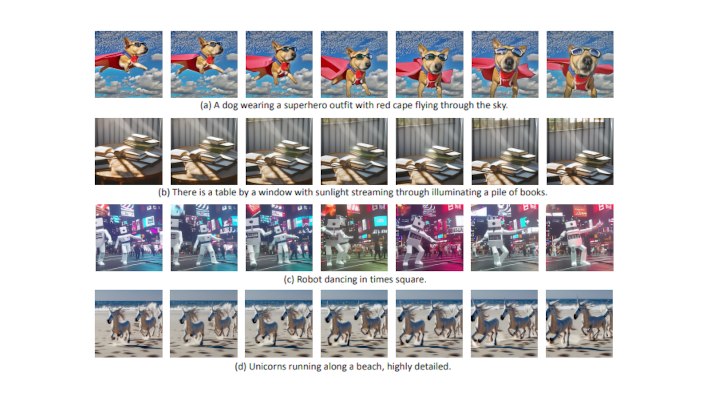

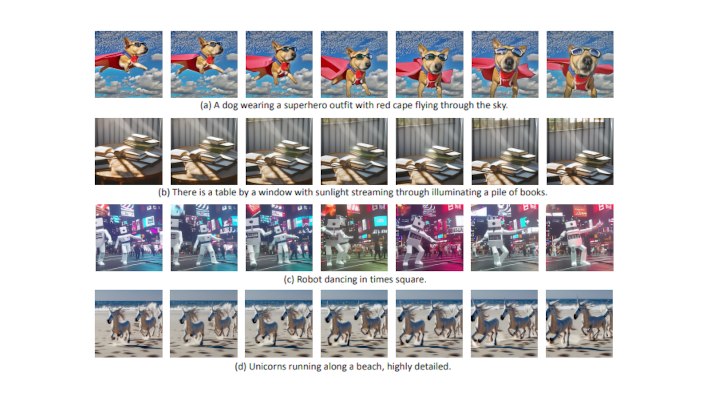

Make-A-Video can take a few words or lines of text as input and use them to generate a clip that is a few seconds long. According to Meta, the AI system is also capable of producing video based on existing footage or images. Meta researchers shared several clips that they have created using the system in a blog post published this morning.

“We gave it descriptions like: ‘a teddy bear painting a self-portrait,’ ‘a baby sloth with a knitted hat trying to figure out a laptop,’ ‘a spaceship landing on mars’ and ‘a robot surfing a wave in the ocean.’” detailed Meta Chief Executive Officer Mark Zuckerberg. “It’s much harder to generate video than photos because beyond correctly generating each pixel, the system also has to predict how they’ll change over time.”

Make-A-Video comprises not one but multiple neural networks, Meta detailed in a research paper. The neural networks were trained using training datasets that contained several million videos and 2.3 billion images. According to Meta, its researchers used a combination of manual and automated evaluation methods to check the reliability of the AI training process.

The first component of Make-A-Video is a neural network that takes a text prompt as input and turns it into an embedding. An embedding is a mathematical structure that AI systems can process more easily than other types of data.

After the text prompt is turned into an embedding, it’s passed to several other neural networks that turn it into a video through a multistep processing workflow. Those neural networks were originally designed to generate images rather than video, Meta detailed in the research paper. Meta adapted them to generate video by adding so-called spatiotemporal layers.

Layers are the basic building blocks of neural networks. A neural network includes multiple layers, each of which comprises numerous artificial neurons, pieces of code that carry out computations on data. When an artificial neuron completes a computation, it sends the results to another artificial neuron for further analysis and the process is repeated numerous times until a result is produced.

The spatiotemporal layers that Meta incorporated into Make-A-Video enable the system to turn a text prompt into a brief clip with 16 frames. The clip is then sent to another neural network, which adds 60 more frames to produce the final video.

Meta asked a group of project participants to compare Make-A-Video with earlier AI systems. “Raters choose our method for more realistic motion 62% of the time on our evaluation set,” Meta’s researchers detailed. “We observe that our method excels when there are large differences between frames where having real-world knowledge of how objects move is crucial.”

Another notable feature of Make-A-Video is that some of the neural networks it includes can be trained using a method known as unsupervised learning. The method is generally not supported by similar AI systems.

With unsupervised learning, researchers can train an AI system on significantly more data than would otherwise be possible. Training neural networks using more data enables them to perform more complex computing tasks. “This large quantity of data is important to learn representations of more subtle, less common concepts in the world,” Meta’s researchers detailed.

Support our mission to keep content open and free by engaging with theCUBE community. Join theCUBE’s Alumni Trust Network, where technology leaders connect, share intelligence and create opportunities.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.