AI

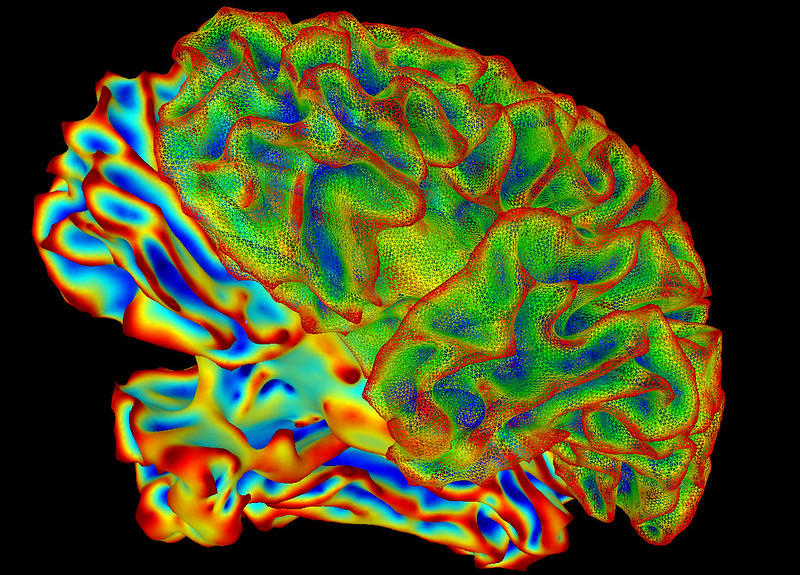

Researchers at the University of Texas at Austin have been using artificial intelligence in combination with fMRI scans to translate brain activity into continuous text.

The results were published today in the journal Nature Neuroscience under the title “Semantic reconstruction of continuous language from non-invasive brain recordings.” The scientists describe the technique as non-invasive and the first of its kind that can decode continuous streams of words rather than just small sets of words or phrases.

The decoder was trained by having people listen to podcasts inside an fMRI scanner, a machine that tracks brain activity by measuring changes in blood flow. No surgical implants were involved, which is why this is such an interesting breakthrough. Each participant listened to 16 hours of podcasts while inside the scanner, and the decoder, which uses GPT-1, an early precursor of the model behind ChatGPT, was trained to turn that participant’s brain activity into meaning. In short, it became a mind reader.

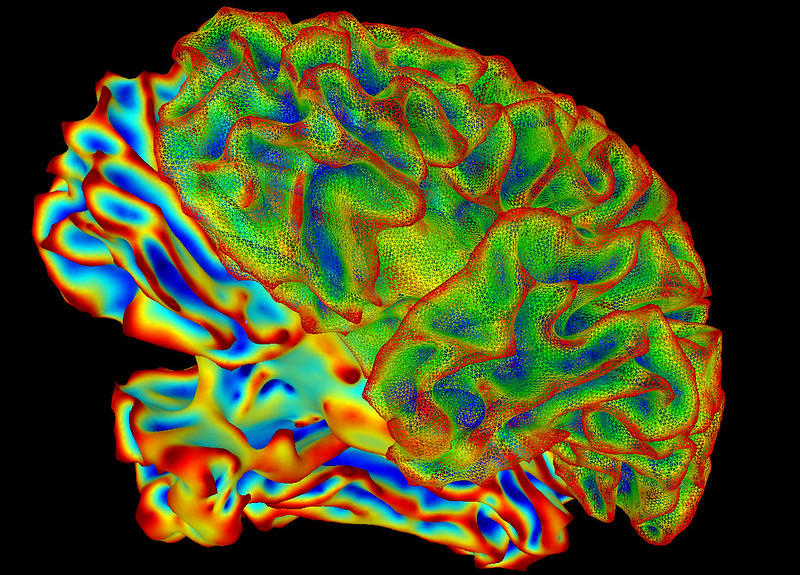

Previous work in this area has had some success when implants were used. Technology like this might help people who have lost the ability to speak, or people who have lost the use of their limbs, to “write” virtually. “This isn’t just a language stimulus,” Alexander Huth, a neuroscientist at the university, explained to the New York Times. “We’re getting at meaning, something about the idea of what’s happening. And the fact that that’s possible is very exciting.”

It’s not perfect, but in tests, it wasn’t always far off. When a participant heard the words, “I don’t have my driver’s license yet,” the decoder translated the resulting brain activity to, “She has not even started to learn to drive yet.” In another case, a participant heard the words, “I didn’t know whether to scream, cry or run away. Instead, I said: ‘Leave me alone!’” The decoder’s reading of the brain activity for this more complex sentence was, “Started to scream and cry, and then she just said: ‘I told you to leave me alone.’”

In other experiments, the participants were asked to watch videos that didn’t have any sound at all, and this time the decoder was able to describe what they were seeing. There’s a long way to go, and the researchers ran into issues at times, but still, other scientists working in this field have called the breakthrough “technically extremely impressive.”

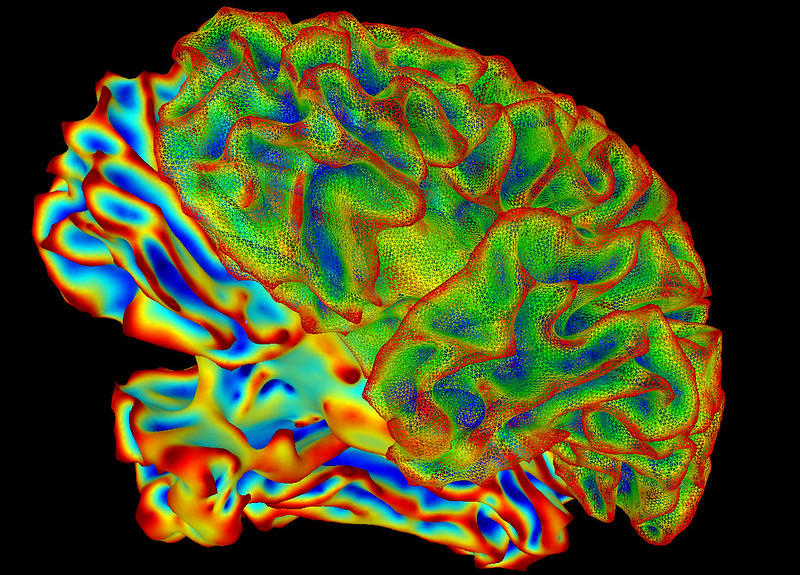

It may also be a cause for concern, given that mind-reading can sound somewhat dystopian when you consider its myriad uses other than helping disabled people. The CIA spent decades trying to control and read minds under its secret Project MKUltra.

“We take very seriously the concerns that it could be used for bad purposes and have worked to avoid that,” said one of the authors of the paper, addressing this issue. “We want to make sure people only use these types of technologies when they want to and that it helps them.”
