CLOUD

We’ve been skeptical about repatriation as a notable movement, but anecdotal evidence suggests that it is happening in certain pockets. Even though we still don’t see cloud repatriation broadly showing in the numbers, certain examples have caught our attention. In addition, the potential impact of AI raises some interesting questions about where infrastructure should be physically located and causes us to revisit our premise that repatriation is an isolated and negligible trend.

In this Breaking Analysis we look at a number of sources, including the experiences of 37signals, which has documented its departure from public clouds. We’ll also examine the relationship between repatriation and SRE Ops skill sets. As always we’ll look at survey data from our partners at ETR, a recent FinOps study published by Vega Cloud and revisit the Cloud Repatriation Index, which we believe is breaking a three-year trend.

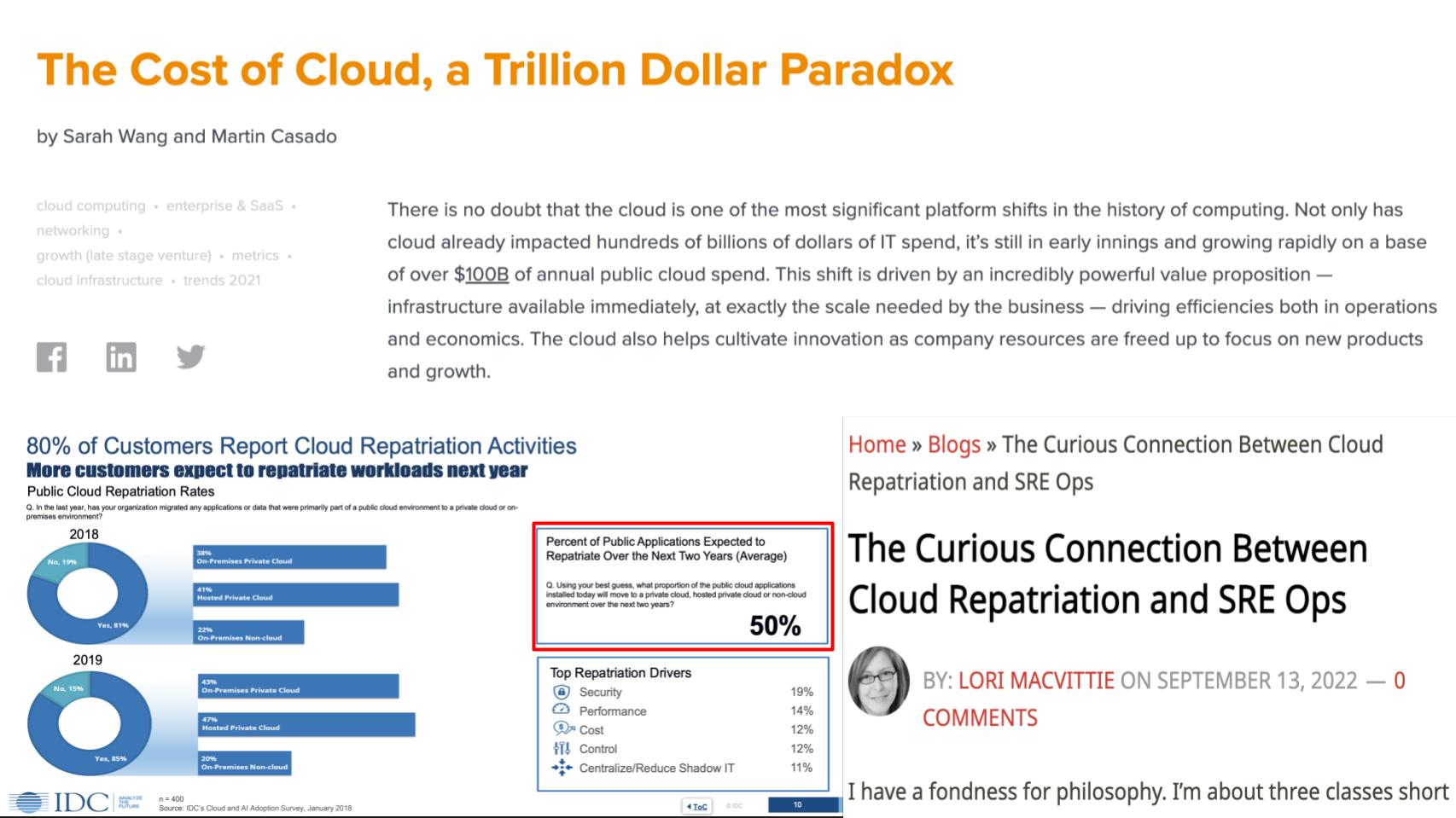

Many credible sources have published on the topic.

On the bottom left, IDC published a report in 2018 that said over the next two years users expected 50% of public cloud applications to repatriate. We found that to be an astoundingly large figure. This study was done by Michelle Bailey and Matt Eastwood, two highly respected and credible analysts. We know their work and from what we can tell, this wasn’t a sponsored study.

The most discussed report continues to be the somewhat controversial but widely referenced work from Sarah Wang and Martin Casado of Andreessen Horowitz. The post posited that cloud expenses would become an increasingly large component of cost of goods sold for cloud-native software-as-a-service companies at scale. And these costs would become so dilutive to profits that they would force either repatriation or a large discount concession from cloud providers. The authors used Dropbox as a case study of the potential savings from repatriation: the company saved $75 million by moving infrastructure back on-prem.
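To make the COGS argument concrete, here is a minimal sketch of how cloud spend counted in cost of goods sold drags on gross margin, and how much margin a cut in that spend would recover. Every figure is hypothetical, and `savings_rate` is an assumed input rather than a number from Wang and Casado, whose own analysis is more involved (they also model the market-cap impact). This is an illustration of the mechanism, not their model.

```python
def gross_margin(revenue: float, cloud_cost: float, other_cogs: float) -> float:
    """Gross margin as a fraction of revenue, with cloud infrastructure counted in COGS."""
    return (revenue - cloud_cost - other_cogs) / revenue


def margin_after_repatriation(revenue: float, cloud_cost: float,
                              other_cogs: float, savings_rate: float) -> float:
    """Gross margin if repatriation cut infrastructure cost by `savings_rate`.

    `savings_rate` is a hypothetical assumption (0.3 means 30% cheaper).
    """
    return gross_margin(revenue, cloud_cost * (1 - savings_rate), other_cogs)


# Hypothetical SaaS company, figures in $M; none of these come from the article.
revenue, cloud_cost, other_cogs = 1000.0, 250.0, 100.0
print(f"{gross_margin(revenue, cloud_cost, other_cogs):.1%}")                     # 65.0%
print(f"{margin_after_repatriation(revenue, cloud_cost, other_cogs, 0.3):.1%}")  # 72.5%
```

The larger cloud spend is as a share of revenue, the more each point of infrastructure savings flows straight to gross margin, which is why the argument bites hardest for SaaS companies at scale.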

You’ll find some evidence of this COGS issue in Snowflake’s Form 10-K. In the most recent March 2023 filing there’s the following note:

In January 2023, we amended one of our third-party cloud infrastructure agreements [presumably AWS] effective February 1, 2023. Under the amended agreement, we have committed to spend an aggregate of at least $2.5 billion from fiscal 2024 to fiscal 2028 on cloud infrastructure services.

And Snowflake is contractually obligated to pay any difference between the commitment and what it uses during that term.
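The minimum-commitment mechanics can be sketched in a few lines. This is a simplified illustration that assumes any shortfall is settled once over the whole term; the 10-K excerpt does not disclose annual minimums, discount tiers or other terms, and the usage figures below are hypothetical.

```python
def commitment_true_up(committed: float, actual_spend: float) -> float:
    """Amount owed on top of actual usage when spend falls short of a minimum commitment."""
    return max(0.0, committed - actual_spend)


def total_paid(committed: float, actual_spend: float) -> float:
    """What the customer pays over the term: actual usage plus any shortfall true-up."""
    return actual_spend + commitment_true_up(committed, actual_spend)


COMMITMENT = 2.5e9  # $2.5B aggregate commitment, fiscal 2024-2028, per the 10-K excerpt above

# Hypothetical usage levels over the term, for illustration only.
for usage in (2.0e9, 2.5e9, 3.0e9):
    print(f"usage ${usage / 1e9:.1f}B -> paid ${total_paid(COMMITMENT, usage) / 1e9:.1f}B")
```

Below the commitment, the customer pays the full $2.5 billion regardless of usage; above it, the commitment has no effect on the bill.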

Sounds onerous, right?

But as you read on, you’ll find this tidbit:

The remaining non-cancelable purchase commitments under the agreement prior to the January 2023 Amendment, the aggregate amount of which was $732.0 million as of January 31, 2023, is not reflected in the table above as the Company is no longer required to fulfill such commitments.

So two points here that we’ve made before and will reiterate.

We see the same trend happening within the broader customer base, where buyers are locking into longer terms with savings plans but committing to a specific spend amount.

So although it might make sense to run some things on-premises, as Zscaler and CrowdStrike Holdings do according to Wang and Casado, so far repatriation has not been a factor for SaaS companies such as Datadog and Snowflake.

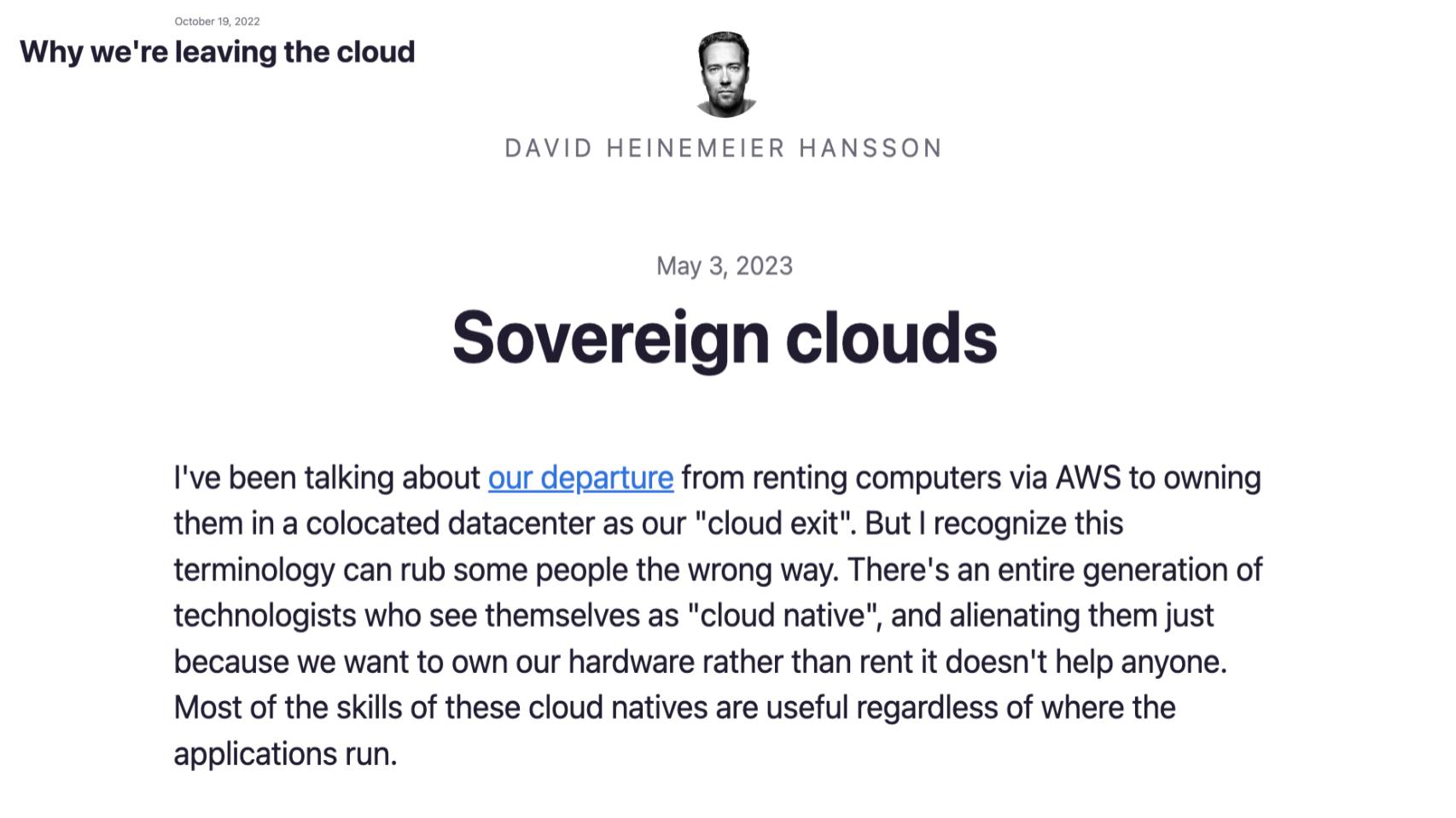

Let’s not call final victory for cloud just yet. Lori MacVittie put out an interesting post late last year describing the relationship between site reliability engineering (SRE) skills and cloud repatriation. Her point was not that SRE causes repatriation, but rather that SRE skills make it easier for companies to operate on-prem infrastructure using a cloud operating model. She cited an F5 report that showed a significant jump from 2021 to 2022 in customers repatriating apps. And she asked the right question:

It’s not whether repatriation happens, we know it does… the question is what percentage of workloads are actually moving back?

It doesn’t appear to be 50% based on the data we have, but her point is that many more customers today have SRE Ops capabilities and are in a better position to repatriate… if there’s a business case.

Which brings us to the 37signals repatriation case study.

David Heinemeier Hansson is the co-founder of 37signals, a much-admired software company with popular products such as Basecamp and HEY. He has published a couple of posts on the company’s departure from cloud, and he uses the term sovereign cloud as an alternative to private cloud or on-prem. VMware has used this term for years, as has CUBE analyst and colleague David Nicholson. The implication is that cross-cloud and hybrid services are emerging that will require control planes that live outside of a limited number of public clouds. Moreover, according to Nicholson, at the end of the day “it’s all IT,” implying that the off-prem and on-prem worlds are converging and reaching a state of quasi-equilibrium.

Listen to VMware’s Chris Wolf give his perspective on so-called sovereign cloud.

Remember, 37signals’ founders are well-known iconoclasts and anti-establishment types. But their economic argument is nonetheless sound. Specifically, renting infrastructure is more expensive today for a midsized firm such as 37signals than it was when the company was starting out with new products in the cloud. The other key point aligns with Lori MacVittie’s premise: There is an 80% to 90% overlap between the skills it takes to run software in the cloud and those it takes to run it on-prem. There are some differences, of course, such as:

Heinemeier Hansson claims these differences are minor and easier than learning Kubernetes.

Maybe so. But many, or most, midsized companies don’t have the developer talent of 37signals and would rather invest elsewhere.

Below is a picture of the 37signals sovereign cloud:

Look at those lovely shrink-wrapped boxes on a wooden pallet! You can see the infrastructure it bought on the right. 37signals is geeking out and having fun with hardware. Check out the Dell R7625s, each with two AMD EPYC 9454 CPUs running at 2.75GHz with 48 cores/96 threads apiece. According to 37signals, this adds 4,000 vCPUs to its on-premises fleet, along with 7 terabytes of RAM and 384TB of Gen 4 NVMe storage. With a move to Gen 5 drives, it expects to get to ~13 gigabytes per second soon.
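A quick back-of-envelope check ties the server spec to the 4,000 vCPU figure. It assumes one vCPU per hardware thread, the usual convention; the implied server count is our own inference, not a number 37signals published in the material cited here.

```python
def vcpus_per_server(sockets: int, cores_per_socket: int, threads_per_core: int) -> int:
    """Hardware threads (counted as vCPUs) that one server exposes."""
    return sockets * cores_per_socket * threads_per_core


# Dell R7625 with two AMD EPYC 9454 CPUs, 48 cores / 96 threads each, as described above.
per_server = vcpus_per_server(sockets=2, cores_per_socket=48, threads_per_core=2)
print(per_server)  # 192

# Our inference, not a 37signals figure: servers implied by "4,000 vCPUs".
implied_servers = 4000 / per_server
print(f"{implied_servers:.1f}")  # 20.8
```

In other words, the 4,000 vCPU figure is consistent with roughly 20 of these machines.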

Yeah, so cool. Sovereign cloud. Go for it if you have the chops.

Let’s take a look at the data and see if there’s any evidence of repatriation.

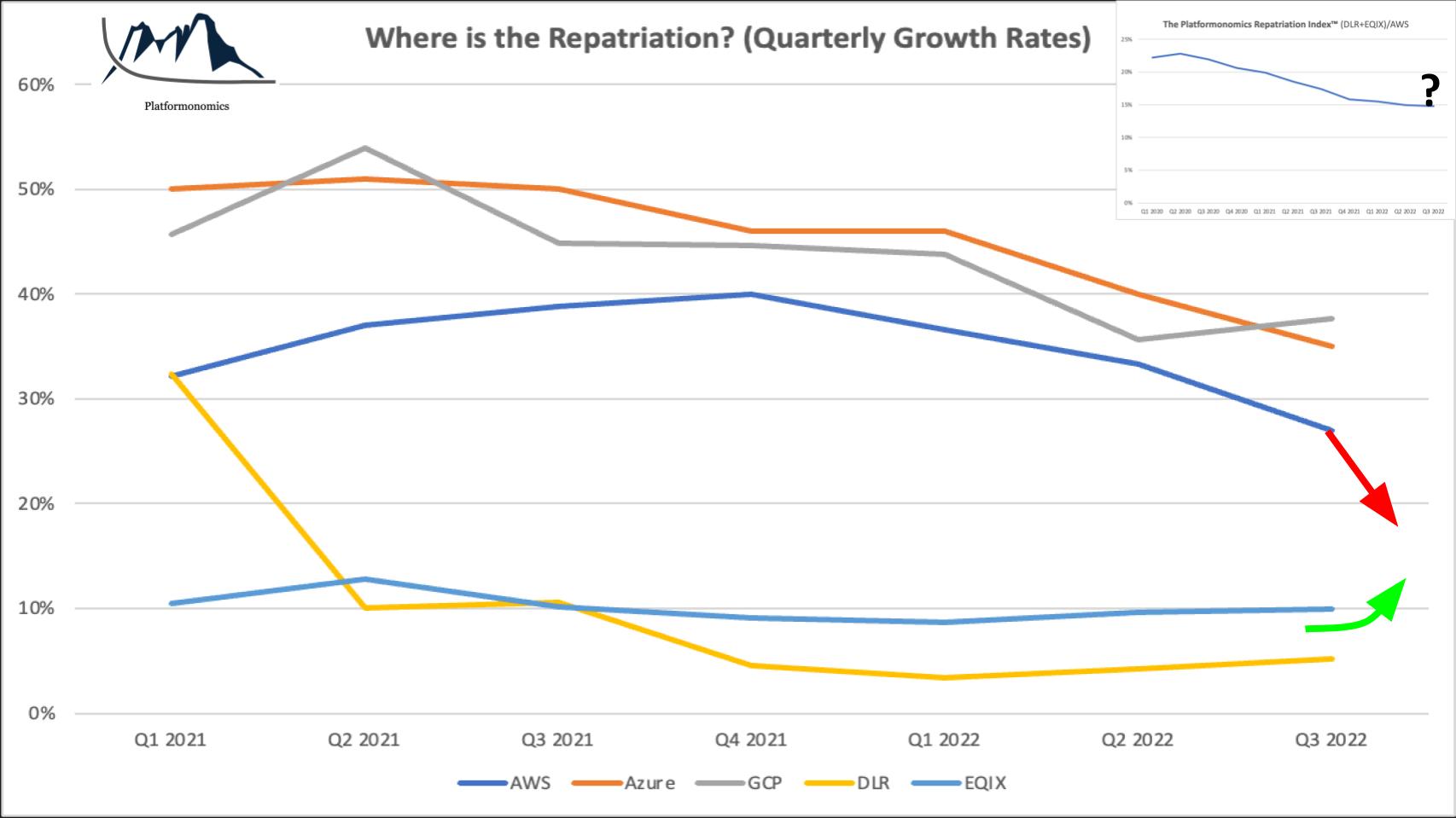

Our friend over at Platformonomics, Charles Fitzgerald, created what he calls the Cloud Repatriation Index.

In the chart above, he plots the growth rates of the big three cloud players against those of Equinix and Digital Realty. And in the upper right, we show Fitzy’s repatriation index, which takes the combined revenue of the two leading colocation providers and divides it by Amazon Web Services Inc.’s cloud revenue. That blue line in the upper right has been on a steady downward trend for three years. But you’ll notice in the chart below that the growth rates based on 2023 projections are converging… so that flattening repatriation curve is likely to tick up, as it did back in early 2020.

We’ll keep an eye on that because both big colocation players are forecasting strong revenue growth. Equinix’s guidance calls for 14% to 15% revenue growth, which could exceed AWS’ growth rate. Digital Realty is expected to grow revenue slightly slower than Equinix but still in the low teens, so combined they’re converging with the growth rates of AWS.

So when Fitzgerald next revs his repatriation math, we expect to see some convergence.
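The index and the reason converging growth rates flatten it can be sketched as follows. The ratio matches the description above; Fitzgerald’s exact methodology (revenue periods, any adjustments) may differ, and the growth rates in the loop are hypothetical placeholders except for the 14.5% midpoint of Equinix’s guidance, applied here to both colocation providers as a simplifying assumption.

```python
def repatriation_index(equinix_rev: float, digital_realty_rev: float, aws_rev: float) -> float:
    """Combined colocation revenue divided by AWS revenue, as described above."""
    return (equinix_rev + digital_realty_rev) / aws_rev


def next_index(current_index: float, colo_growth: float, aws_growth: float) -> float:
    """Project the index one period ahead from growth rates (0.145 means 14.5%).

    Assumes combined colocation revenue grows at the single blended rate `colo_growth`.
    """
    return current_index * (1 + colo_growth) / (1 + aws_growth)


# Normalize today's index to 1.0; AWS growth rates below are hypothetical.
for aws_growth in (0.20, 0.145, 0.10):
    projected = next_index(1.0, colo_growth=0.145, aws_growth=aws_growth)
    print(f"AWS growth {aws_growth:.1%}: index moves to {projected:.3f}")
```

The index falls only while AWS outgrows the colocation providers; once their growth rates match, it flattens, and if colocation growth pulls ahead, it ticks up.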

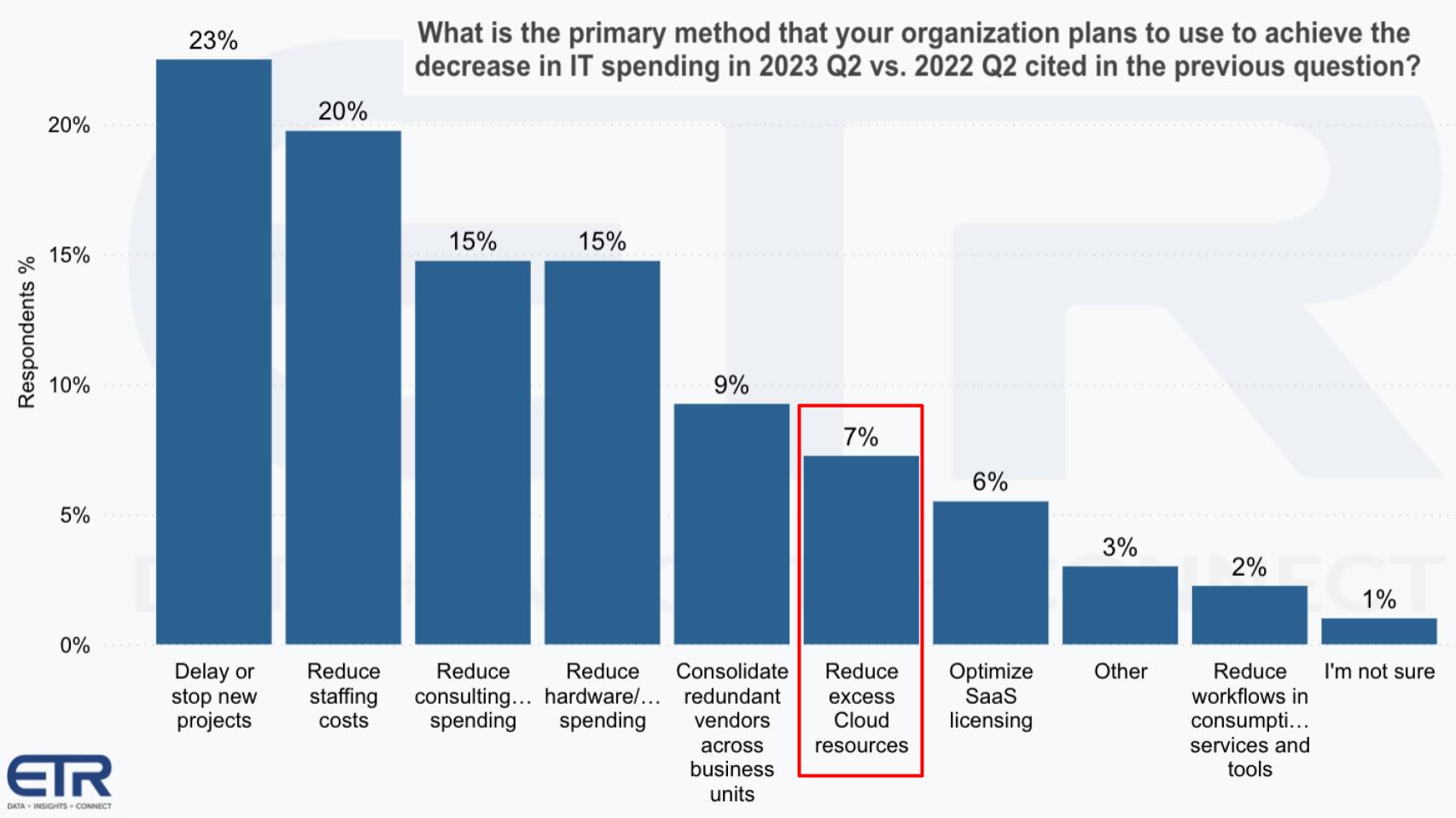

Let’s bring in some ETR survey data and take a look at whether there are signs that repatriation is happening to a large degree.

In this recent drill-down, ETR asked 400 respondents who said they were significantly cutting costs how they were doing so. And you can see above they’re delaying projects, cutting staff, reducing consulting spend, holding off on hardware purchases, consolidating redundant vendors (by the way, that was No. 1 last quarter)… and finally, reducing excess cloud resources.

Reducing excess cloud resources might include some repatriation. We asked ETR to dig into the open-ended comments, and repatriation didn’t come up specifically. But that doesn’t mean it’s not happening in pockets. It just doesn’t appear to be “thing 1,” as Stu Miniman likes to say.

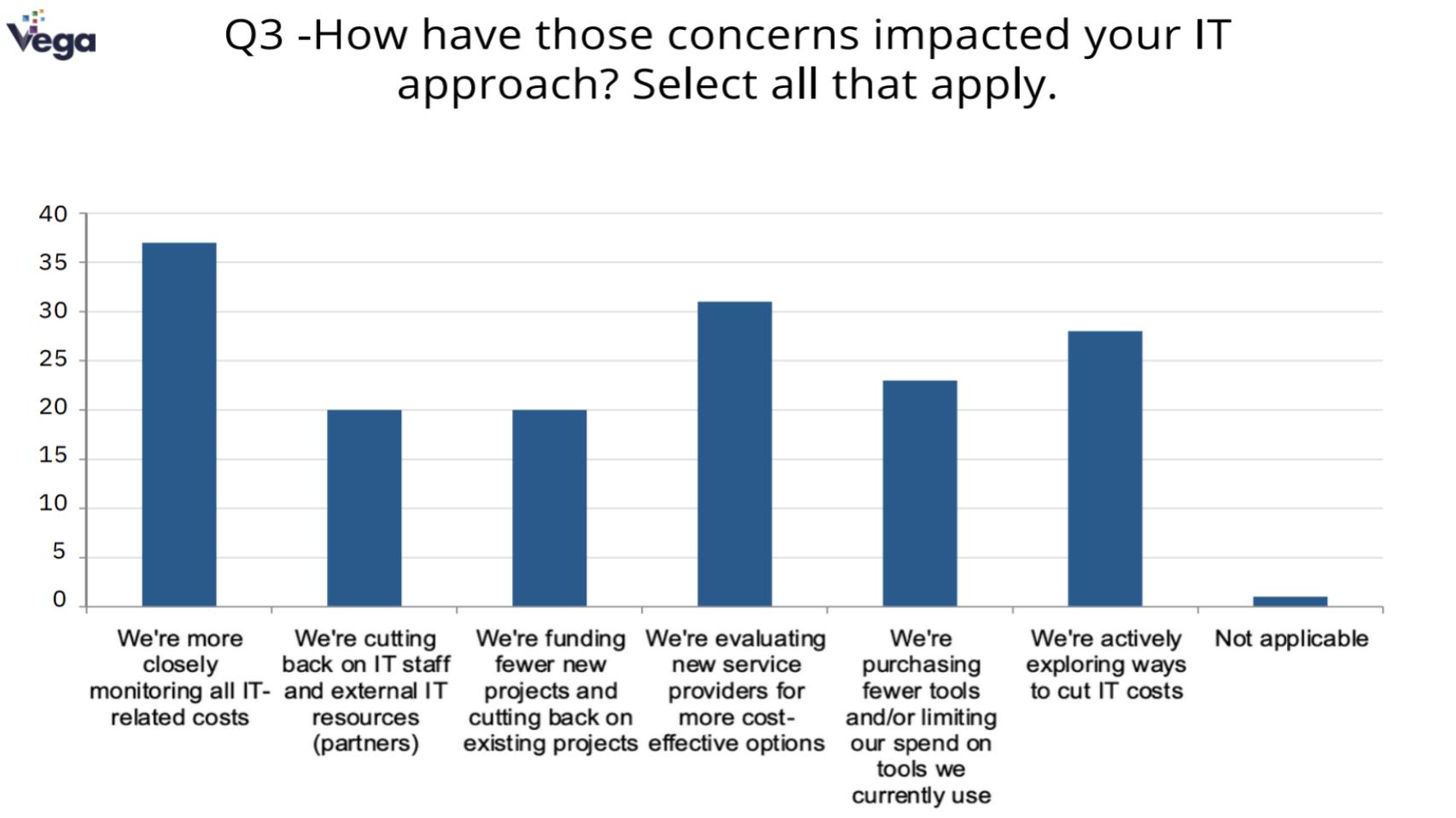

We recently received an inbound from Vega Cloud, a firm specializing in cutting cloud costs, which just ran a survey on FinOps. The chart above shows actions taken by customers concerned about cloud costs.

Somewhat consistent with the ETR data, we see staff cuts, project delays, vendor alternatives, and the like, but no specific evidence of repatriation.

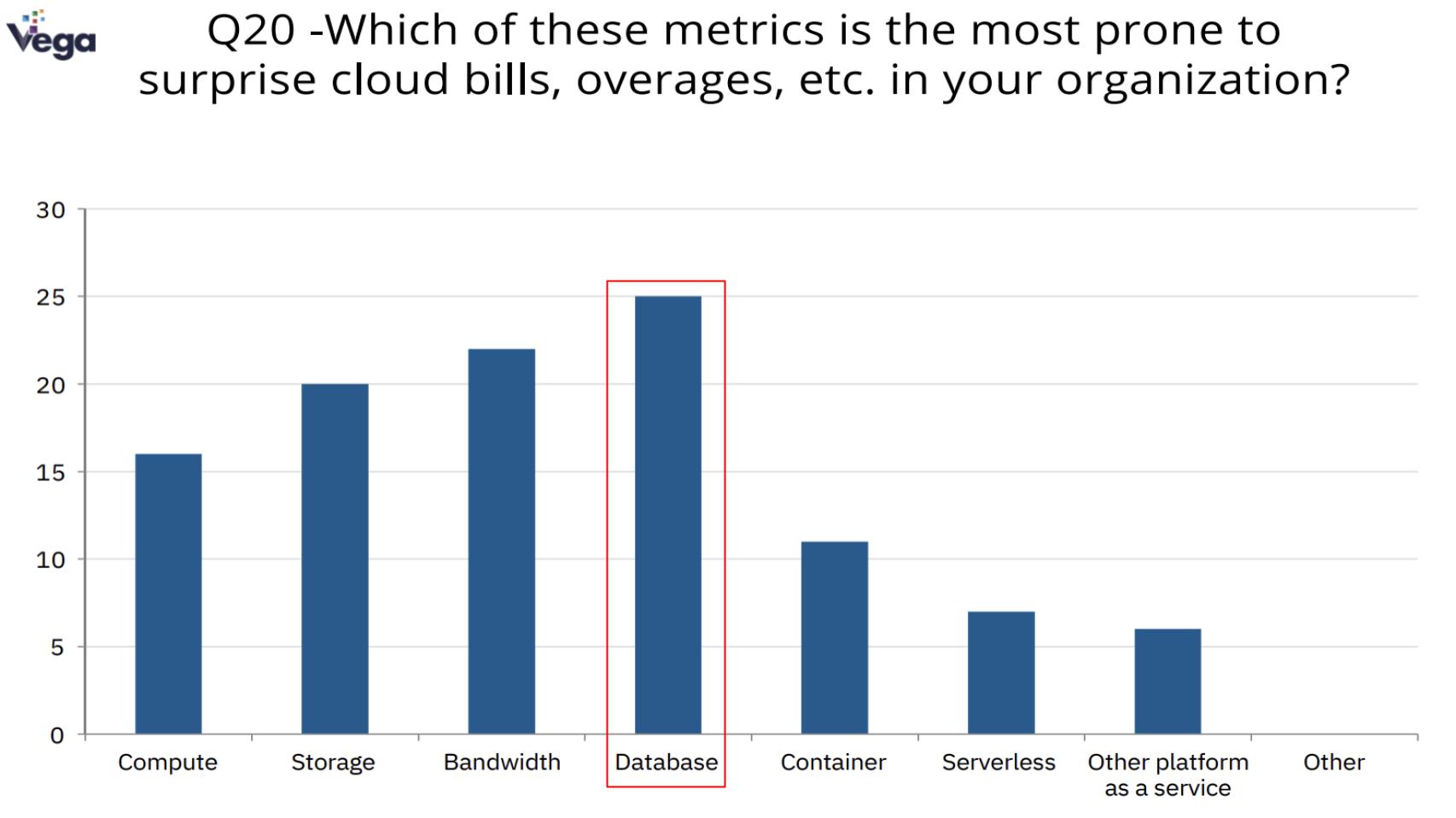

One notable call-out in the Vega survey was a question about which line items on the cloud bill were giving them the most heartburn from overages relative to expectations.

We would have thought compute and bandwidth would be the big culprits, but database was No. 1. We wonder how many Snowflake customers were in this sample because Snowflake is a very popular database. And our understanding is it charges customers for consumption as a bundle. So the Snowflake customer doesn’t see granularity of storage or compute… just a Snowflake consumption bill. We asked the folks who did the survey but they don’t have that level of detail.

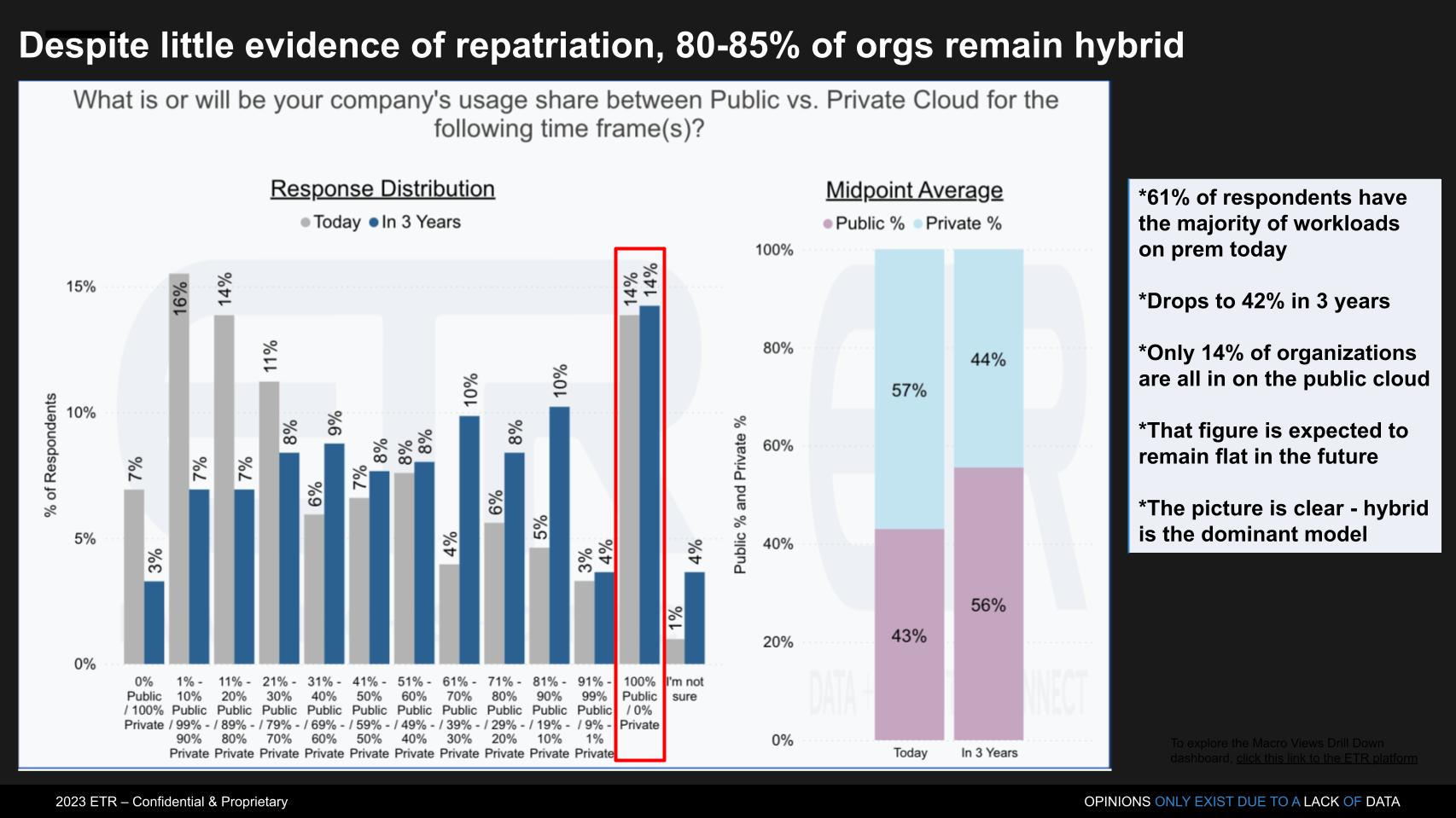

The last bit of ETR data we’ll look at is from a survey on cloud adoption relative to on-prem, and the preponderance of hybrid cloud.

We’ve shared the data above before but will take a different angle today. It’s from another ETR drill-down late last year that breaks down the percent usage between public and private cloud. The key points are:

So first, if there’s repatriation happening at a large scale, it’s not clear from these numbers. The second point is that the picture is crystal-clear: Hybrid is the dominant model for the foreseeable future.

The other question we pondered is how will AI affect this picture.

First of all, foundation models such as GPT are a tide that will lift all compute and infrastructure boats, in our opinion. Automation combined with massive compute and data requirements will enable and require greater spending on servers, storage and networking.

As an example, it was interesting to see the stock market’s tepid reaction to AMD’s quarter this past week. The company beat estimates despite the challenging environment and soft PC market. And the stock dropped. But when it was announced that Microsoft is helping finance AI chips for AMD, its stock popped. And though a lot of that is hype around ChatGPT, it’s also recognition that we’re entering a new era where AI is going to drive massive demand for data center hardware.

That raises the question: Where’s that hardware going to live? In the cloud or on-prem? Where are OpenAI’s servers? Well, we know they’re in Azure, but they’re evidently also in Ohio on a supercomputer where the training is done. We asked ChatGPT and got a vague answer, but when you look at AI, there’s clearly an affinity between it and high-performance computing.

And many of those HPC workloads will land on-prem. For example, if you’re a university and have a supercomputing center, you’ll want to show that off to prospective students and donors, versus having resources hidden away in a remote cloud. Renting infrastructure is economically viable for bursty use and unpredictable swings in demand, but the same cloud-versus-on-prem arguments that apply to mainstream apps also apply in HPC. That’s especially so with large-scale implementations, that is, more than 50,000 cores. Some supercomputers have millions of cores.
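The rent-versus-own logic comes down to utilization. Owned capacity costs roughly the same whether it is busy or idle, while rented capacity is paid for only while it runs, so owning wins once utilization exceeds the ratio of the two hourly costs. A minimal sketch, assuming hypothetical per-node-hour costs and ignoring reserved-instance discounts, refresh cycles and staffing differences:

```python
def breakeven_utilization(own_cost_per_hour: float, rent_cost_per_hour: float) -> float:
    """Fraction of hours a node must be busy before owning beats renting.

    Owned cost (amortized hardware, power, space) accrues around the clock;
    rented cost accrues only for hours actually used.
    """
    return own_cost_per_hour / rent_cost_per_hour


def cheaper_option(utilization: float, own_cost_per_hour: float, rent_cost_per_hour: float) -> str:
    """Return "own" or "rent" for a given average utilization (0.0 to 1.0)."""
    rent = utilization * rent_cost_per_hour  # pay only while running
    own = own_cost_per_hour                  # pay whether busy or idle
    return "own" if own < rent else "rent"


# Hypothetical per-node-hour costs, not figures from the article.
OWN, RENT = 1.20, 3.00
print(f"break-even utilization: {breakeven_utilization(OWN, RENT):.0%}")  # 40%
print(cheaper_option(0.15, OWN, RENT))  # bursty workload -> rent
print(cheaper_option(0.85, OWN, RENT))  # steady, large-scale workload -> own
```

Bursty demand keeps utilization low and favors renting; a steadily loaded supercomputer sits well above the break-even point.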

There are also legitimate concerns about leaking intellectual property. We recently saw Samsung Electronics and other organizations ban ChatGPT after an employee put proprietary code into it, not realizing the risks. That makes AWS’ announcement of Bedrock interesting. AWS is not building a free service like Google Search or Bing or OpenAI. Rather, it’s offering large language model services and tools so you can build your own, presumably making IP leakage less likely.

But then, of course, there’s Alexa.

And the last point is, as Matt Baker said on our LinkedIn post last week:

This is batting practice for AI, the game will be played at the edge and in the world around us. Adaptation and inferencing do not favor centralized models, e.g. public cloud.

We agree. AI inferencing is going to explode at the edge, not in today’s public cloud. How the public cloud players approach this with autonomous vehicles, robots, satellites and other edge infrastructure will be interesting to watch.

So yes, we’re smacking GPT balls out of the park before the first inning has even started.

Let the games begin and we’ll see what happens.

Many thanks to Alex Myerson and Ken Shifman on production, podcasts and media workflows for Breaking Analysis. Special thanks to Kristen Martin and Cheryl Knight, who help us keep our community informed and get the word out, and to Rob Hof, our editor in chief at SiliconANGLE.

Remember we publish each week on Wikibon and SiliconANGLE. These episodes are all available as podcasts wherever you listen.

Email david.vellante@siliconangle.com, DM @dvellante on Twitter and comment on our LinkedIn posts.

Also, check out this ETR Tutorial we created, which explains the spending methodology in more detail. Note: ETR is a separate company from Wikibon and SiliconANGLE. If you would like to cite or republish any of the company’s data, or inquire about its services, please contact ETR at legal@etr.ai.

All statements made regarding companies or securities are strictly beliefs, points of view and opinions held by SiliconANGLE Media, Enterprise Technology Research, other guests on theCUBE and guest writers. Such statements are not recommendations by these individuals to buy, sell or hold any security. The content presented does not constitute investment advice and should not be used as the basis for any investment decision. You and only you are responsible for your investment decisions.

Disclosure: Many of the companies cited in Breaking Analysis are sponsors of theCUBE and/or clients of Wikibon. None of these firms or other companies have any editorial control over or advance viewing of what’s published in Breaking Analysis.
