SECURITY

A new report sponsored by the European Commission has found that social media has played a key role in the spread of Russian-backed disinformation campaigns since Russia’s war with Ukraine began.

“Over the course of 2022, the audience and reach of Kremlin-aligned social media accounts increased substantially all over Europe,” the researchers stated in the report, “Digital Services Act: Application of the Risk Management Framework to Russian disinformation campaigns.”

The work was done by the nonprofit group Reset and published last week. The group examined these campaigns across 10 languages and over a year. The report can serve as useful guidance for U.S. regulators and for how businesses should moderate their own social media content.

The report found conclusive evidence “that the Kremlin’s ongoing disinformation campaign not only forms an integral part of Russia’s military agenda, but also causes risks to public security, fundamental rights and electoral processes inside the European Union. The Russian Federation continues to operate vast networks of social media accounts propagating deceptive, dehumanizing and violent content and engaging in coordinated inauthentic behavior.”

More than 2,200 online accounts were examined across Meta’s Facebook and Instagram networks as well as X/Twitter, TikTok, Google’s YouTube and Telegram networks. Accounts were examined that had direct or ideologically aligned links with Russian state-sponsored actors.

The researchers posit several causes for this growth, including the dismantling of trust and safety teams at various social networking companies, the inconsistent administration by the social media companies of terms of service guardrails, the spotty or nascent legal enforcement by European regulators, and the compelling nature of hate speech in attracting likes, comments and reposted messages.

Russian state-sponsored disinformation campaigns are nothing new: The Kremlin has been running them since the days of the Soviet Union and the Cold War. What is new is that social media platforms have “supercharged the Kremlin’s ability to wage information war and stoke ethnic conflict.”

The report points out that the EU has anticipated these circumstances and passed the Digital Services Act. Yet this act doesn’t go into full force until next spring.

Reset researchers found that the virality of this disinformation has continued into 2023, with average engagement increasing by 22% across all platforms. They say this “was largely driven by Twitter,” now known as X Corp., and the various changes brought about with Elon Musk’s purchase of the company and new policies.

In a new controversy this weekend over the way Musk runs his company, he accused the Anti-Defamation League of defaming X. The Reset report provides more grist for this mill, and takes a careful analytic approach using a newly developed data and risk framework to track the way disinformation content is amplified and transmitted across online networks.

The researchers found at least 165 million accounts are actively used to spread this content, which was viewed during 2022 at least 16 billion times, including more than 3 billion YouTube video views. “The subscriber numbers of pro-Kremlin channels more than tripled on Telegram since the start of the war, more than doubled on TikTok and rose by almost 90% on YouTube.”

The study is a useful document, especially as U.S.-based regulators begin to formulate their own response to the toxicity of social media. Front and center is the framework developed by Reset, and how it can be used for American purposes. This framework tries to organize an analytical schema that can quantify the risks of disseminating illegal and other types of hateful content through any online platform and show how those risks can be mitigated. There are both qualitative and quantitative evaluation metrics, such as the likelihood of potential harm and the potential audience size.

No American legislative effort currently offers an equivalent or equally thorough evaluation framework.

Another useful lesson, and one aspect that until now has not received much attention, is how the Kremlin-backed accounts leverage one social network against another. The Kremlin agents first target the least-regulated environments and then amplify their content across other platforms.

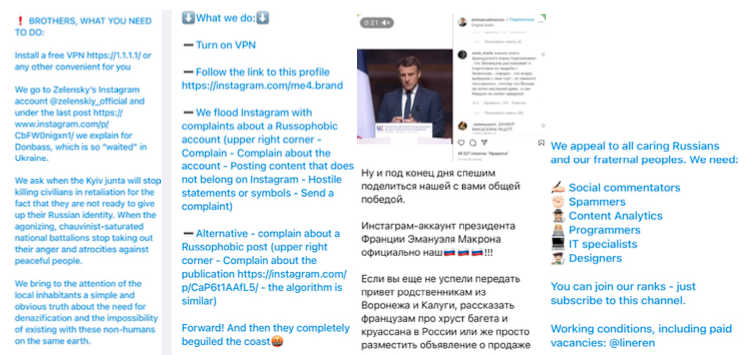

The report shows a series of English translations of various recruitment lures to join Russia’s cyber army, whose members call themselves “Cyber Front Z” and are looking for help on Telegram to post content across networks, up- and down-vote posts, and hound opponents with derogatory comments. The photo in the screenshot shows one such target, a pro-Ukraine politician.

The report highlights another tactic, which is to post content that promotes harm but flies just under the legal definitions used by the EU that would explicitly prohibit it. “The Kremlin propagates discriminatory content at scale, denigrating and often dehumanizing particular groups or individuals on the basis of protected characteristics such as nationality, sex, gender or religion,” the report states.

The authors offer up several examples, including targeting female political leaders of nearby countries for violence. Many of the accounts used by the Kremlin masquerade as news media reporters and were created to get around geolocation blocks imposed in response to EU sanctions. The researchers found 30 of these sham accounts on Facebook, with a collective audience of 1.6 million followers, that were targeting French speakers in Europe and Africa.

This has implications for U.S.-based regulators, which are currently grappling with how to best identify and then codify potential legal obligations for algorithmic amplification.

Perhaps the least surprising but still important conclusion, and something hinted at with this past weekend’s Twitter imbroglio with antisemitic content, is ineffective content moderation by the social networks. “Platforms rarely reviewed and removed more than 50% of the clearly violative content we flagged in repeated tests in several Central and Eastern European languages.”

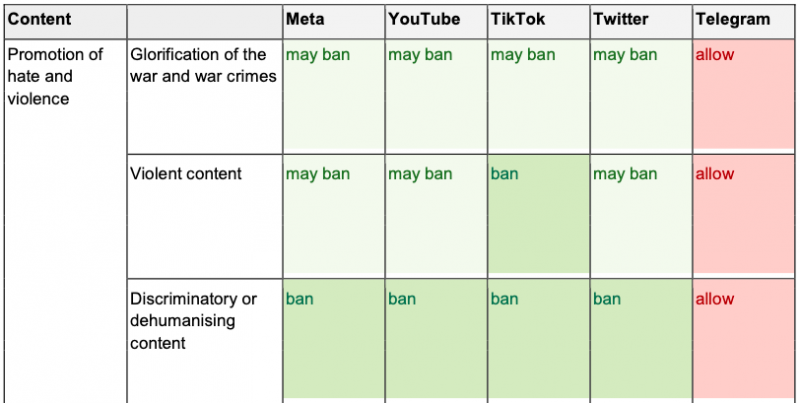

The researchers carefully examined the content policies of each social network and documented them in their report. “None of the major platforms we examined banned all Kremlin-backed accounts, including official government accounts, such as the accounts of Russian Embassies, or the personal accounts of Kremlin staffers.” The researchers tested both proactive and reactive content moderation actions for Facebook, YouTube and Twitter across several Eastern European language posts during the summer of 2022 and found a wide variation in the application of these policies.

Twitter’s response was particularly disturbing: It did not flag any of the posts identified by the researchers. The table of various hate and violent speech promotion for each of the five social networking companies shows that Telegram was the only one to allow this content across the board.

Finally, there is one sad prediction that Americans should take notice of: “There is a high risk that the Russian Federation will continue its efforts at interfering in electoral processes in the European Union, including 2024’s elections to the European Parliament.” What this means for our own 2024 elections remains to be seen, but it certainly is another cautionary tale.
