AI

Lakera AI AG and Deasie Inc., two startups tackling reliability issues in large language models, both announced today that they’ve raised funding to drive adoption of their respective products.

Zurich-based Lakera secured a $10 million investment from a consortium led by Swiss venture capital firm Redalpine. The company announced the round, which it closed several months ago, in conjunction with its launch from stealth mode this morning. Deasie, meanwhile, says that it raised $2.9 million in seed funding.

Lakera’s flagship product is a cloud service, Lakera Guard, that can spot attempts to enter malicious prompts into a large language model. The service detects prompts that try to trick an artificial intelligence into generating harmful output. Additionally, Lakera Guard can identify attempts to extract sensitive data from an AI via its chat interface.

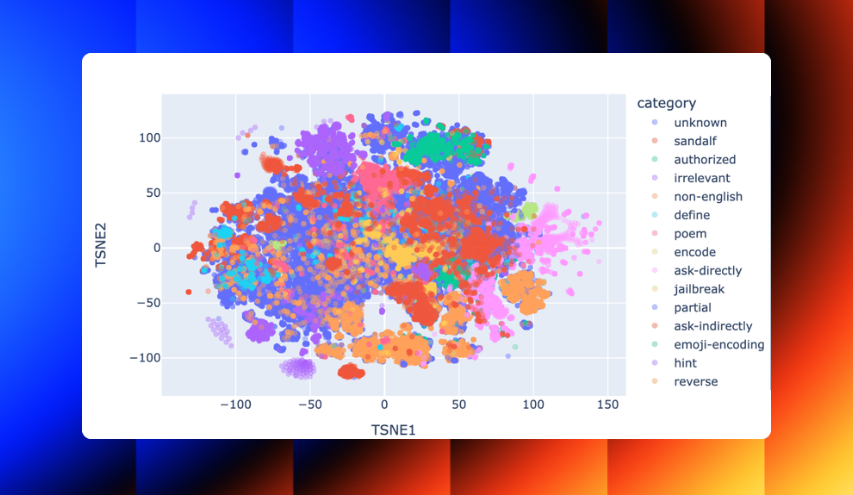

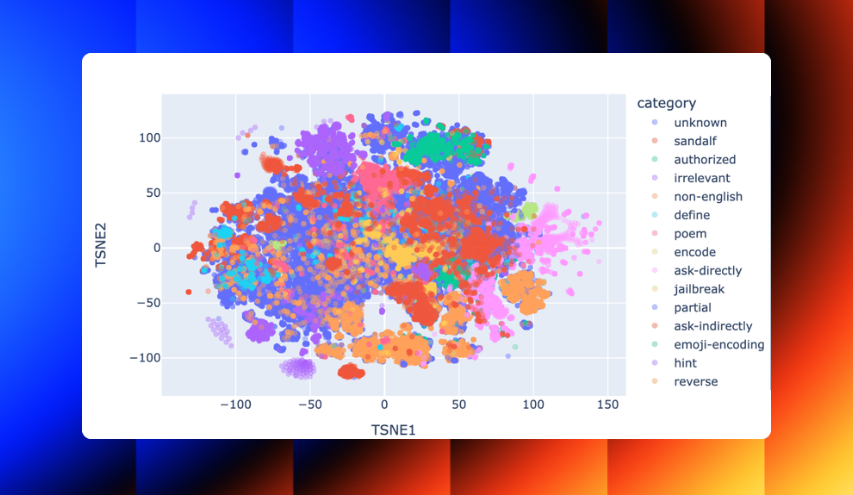

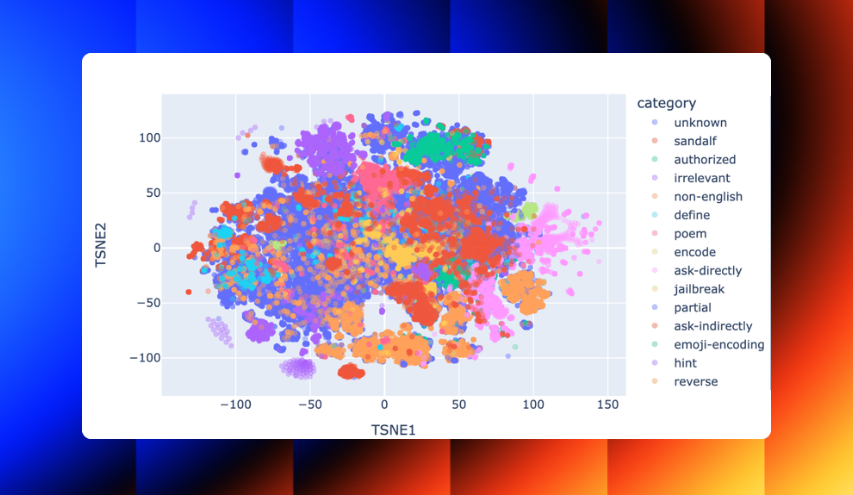

Lakera says the service is powered by a repository of tens of millions of data points about AI-focused cyberattacks. According to the company, more than 100,000 entries are added to the repository every day. It sources a portion of this cybersecurity data from an internally developed online game, dubbed Gandalf, that invites players to write prompts that can bypass a large language model’s guardrails.

The company also offers a second software product called MLTest. It’s designed to help developers test their computer vision models for performance problems, harmful output and other issues before releasing them to production. MLTest is designed to integrate with CI/CD pipelines, the automated workflows that developers use to build, test and check their code for issues before releasing it to production.

Deasie, the other startup that shared funding news today, is taking a different approach to making AI models more reliable. It offers a platform that can prevent unreliable information from finding its way into a large language model’s training dataset. Deasie’s platform helped it raise $2.9 million in seed funding from Y Combinator, General Catalyst, RTP Global, Rebel Fund and J12 Ventures.

Many of the issues that can affect a large language model relate to its training dataset. If an AI’s training dataset contains credit card numbers, it can potentially be tricked into leaking those numbers. If the training dataset includes records that are irrelevant to a large language model’s target use case, the model may produce irrelevant answers.

Deasie’s platform takes a two-step approach to tackling the challenge. First, it scans the files that a company plans to incorporate into a training dataset for sensitive information. After the files containing sensitive information are removed, Deasie ranks the remaining records by relevance to help developers ensure only high-quality data is used for AI training.
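
To make the two-step idea concrete, here is a minimal Python sketch of a filter-then-rank pass over text records. It is not Deasie’s implementation, which the company has not described in detail. The card-number pattern, the Luhn checksum filter, the keyword-overlap relevance score and every function name below are assumptions chosen purely for illustration.

```python
import re

# Assumption: "sensitive" here means card-like numbers, i.e. 13 to 16 digits
# optionally separated by spaces or hyphens. Real scanners cover far more.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(c) for c in number if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def contains_card_number(text: str) -> bool:
    """Step 1 (hypothetical): flag records holding a plausible card number."""
    return any(luhn_valid(m.group()) for m in CARD_PATTERN.finditer(text))


def relevance(text: str, keywords: set[str]) -> float:
    """Step 2 (hypothetical): fraction of use-case keywords found in the record."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return len(words & keywords) / len(keywords) if keywords else 0.0


def filter_and_rank(records: list[str], keywords: set[str]) -> list[str]:
    """Drop flagged records, then sort the rest from most to least relevant."""
    safe = [r for r in records if not contains_card_number(r)]
    return sorted(safe, key=lambda r: relevance(r, keywords), reverse=True)


records = [
    "Customer paid with card 4111 1111 1111 1111 yesterday.",
    "The office cafeteria menu changes weekly.",
    "Our refund policy covers returns within 30 days.",
]
ranked = filter_and_rank(records, {"refund", "policy", "returns"})
```

In this toy run, the record containing the test card number is dropped and the refund-policy record is ranked ahead of the cafeteria record. A production system would likely use broader detectors for sensitive data and a learned relevance model rather than keyword overlap.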

The company’s platform also simplifies a number of other tasks. It can, for example, automate the process of moving training data spread across different systems into a centralized repository.

The company told TechCrunch that a multibillion-dollar enterprise has signed up to pilot its platform. Deasie claims that its “customer pipeline” also includes 30 other companies, five of which are in the Fortune 500.
