AI

Alphabet Inc.’s Intrinsic unit, which develops technology that makes industrial robots easier to program, today debuted a set of artificial intelligence models created by its engineers.

Executives detailed the AI models at the Automate 2024 robotics event taking place this week in Chicago. Several of the neural networks were developed in partnership with Google DeepMind, the search giant’s AI research unit, while others were created through a collaboration with Nvidia Corp.

Teaching industrial robots to perform tasks such as placing merchandise in boxes historically required a significant amount of custom code. In some cases, the programming work involved is so complicated that it can become an obstacle to manufacturers’ factory automation initiatives. Alphabet launched Intrinsic in 2021 to develop software that can ease the task of programming robots and thereby make the technology more accessible.

Before a robotic arm can pick up an item, it has to detect its presence and then perform so-called 3D pose estimation. That’s the task of identifying the location of an object and the direction in which it’s facing. The robotic arm uses this information to find the optimal angle from which it should pick up the item to minimize the risk of falls, collisions with other objects and related issues.
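To make the idea of a pose concrete, here is a minimal Python sketch. It is not Intrinsic's model or API. The `Pose` class, the roll/pitch/yaw convention and the `pre_grasp_point` helper are assumptions made purely for illustration. The sketch shows how a location plus an orientation lets software work out which way an object is facing and where a gripper might hover before approaching it.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical 3D pose: position in meters, orientation as roll/pitch/yaw in radians."""
    x: float
    y: float
    z: float
    roll: float = 0.0
    pitch: float = 0.0
    yaw: float = 0.0

    def rotation_matrix(self):
        """Rotation matrix R = Rz(yaw) * Ry(pitch) * Rx(roll), as nested lists."""
        cr, sr = math.cos(self.roll), math.sin(self.roll)
        cp, sp = math.cos(self.pitch), math.sin(self.pitch)
        cy, sy = math.cos(self.yaw), math.sin(self.yaw)
        return [
            [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
            [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
            [-sp, cp * sr, cp * cr],
        ]

    def facing_direction(self):
        """The object's local x-axis expressed in the world frame (first column of R)."""
        r = self.rotation_matrix()
        return (r[0][0], r[1][0], r[2][0])


def pre_grasp_point(pose, standoff):
    """Point `standoff` meters from the object along its local z-axis (third column of R)."""
    r = pose.rotation_matrix()
    return (
        pose.x + standoff * r[0][2],
        pose.y + standoff * r[1][2],
        pose.z + standoff * r[2][2],
    )


# Illustrative values only: an item on a table, turned a quarter turn about the vertical axis.
item = Pose(x=1.0, y=2.0, z=0.5, yaw=math.pi / 2)
print(item.facing_direction())
print(pre_grasp_point(item, standoff=0.1))
```

A real system would estimate these six numbers from camera data and would weigh many candidate approach angles. The sketch only shows what the estimated pose is used for once it is known.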

The first AI model that Intrinsic detailed today can identify an object and estimate its pose in a few seconds. According to the Alphabet unit, its engineers pre-trained the model to interact with more than 130,000 types of items. Moreover, the AI is capable of adapting to variations in its operating environment, such as cases when the camera a robotic arm uses to track objects is switched or lighting conditions change.

“The model is fast, generalized, and accurate,” Intrinsic Chief Executive Officer Wendy Tan White wrote in a blog post. “We are working to bring this and others like it onto the Intrinsic platform as new capabilities, so that they become easy to develop, deploy, and operate.”

At Automate 2024 today, the Alphabet unit also detailed two AI projects carried out in collaboration with Google DeepMind. Both focused on optimizing industrial robots’ movements.

According to Intrinsic, the first initiative produced an AI tool that can ease so-called motion planning. That’s the process of determining the optimal sequence of motions a robot should carry out to complete a given task. The AI tool is geared toward situations where multiple autonomous machines work side-by-side and must move in a way that avoids collisions.

The software takes data about a robot’s dimensions, movement patterns and assigned tasks as input. It then generates motion plans automatically to reduce the need for manual coding. In a simulation that involved four robots collaborating on a virtual welding project, the AI tool managed to outperform traditional motion planning methods by about 25%.
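One building block of any multi-robot motion plan is checking that no two machines occupy the same space at the same time. The Python sketch below illustrates only that check and is not the Intrinsic and Google DeepMind tool, whose internals the companies did not describe here. It assumes each robot can be approximated by a sphere and that every trajectory is sampled at the same time steps. The function name `first_collision` and the data layout are hypothetical.

```python
import math
from itertools import combinations


def first_collision(trajectories, radii):
    """Return (step, robot_a, robot_b) for the earliest conflict, or None if there is none.

    trajectories: dict mapping robot name -> list of (x, y, z) positions, one per time step.
    radii: dict mapping robot name -> radius in meters of its bounding sphere (an assumption).
    """
    steps = min(len(path) for path in trajectories.values())
    for step in range(steps):
        # Compare every pair of robots at this time step.
        for a, b in combinations(sorted(trajectories), 2):
            gap = math.dist(trajectories[a][step], trajectories[b][step])
            if gap < radii[a] + radii[b]:
                return step, a, b
    return None


# Illustrative values only: two robots swapping places along the same line.
paths = {
    "r1": [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)],
    "r2": [(2.0, 0.0, 0.0), (1.2, 0.0, 0.0), (0.0, 0.0, 0.0)],
}
sizes = {"r1": 0.3, "r2": 0.3}
print(first_collision(paths, sizes))  # (1, 'r1', 'r2')
```

A planner would run a check like this on candidate plans and adjust timing or paths until it returns `None`. Doing that efficiently for several arms with many joints is the hard part that motion planning software aims to automate.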

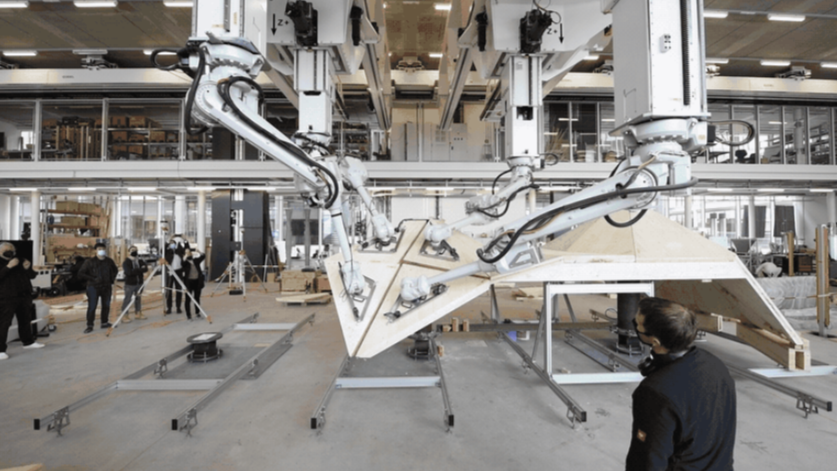

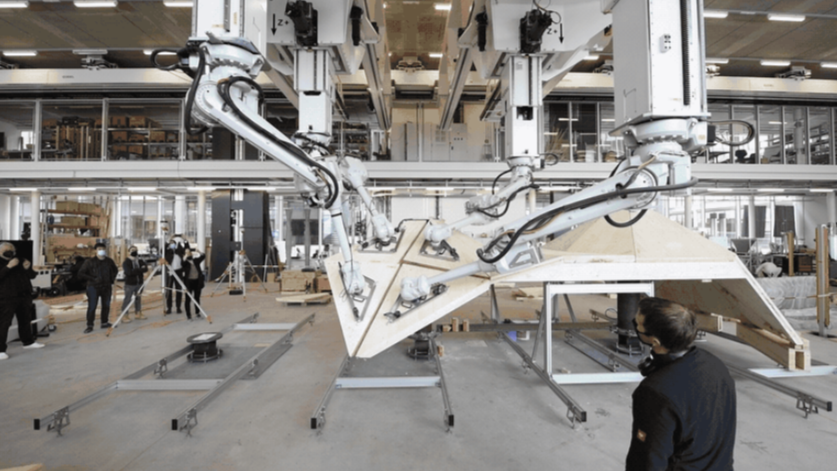

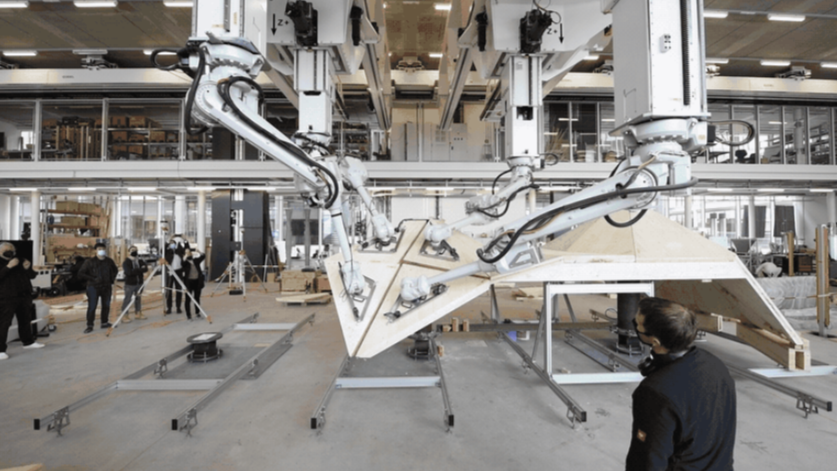

Intrinsic’s other joint project with Google DeepMind focused on optimizing situations where two robotic hands work side-by-side on the same task. Researchers from the latter group developed AI software optimized for such use cases using technical assets provided by Intrinsic. “One of Google DeepMind’s methods of training a model — based on human input using remote devices — benefits from Intrinsic’s enablement of real-world data, managing sensor data and high-frequency real-time controls infrastructure,” Tan White wrote.

At the event, Intrinsic also revealed a collaboration with Nvidia that focuses on teaching robots to grasp objects reliably. Historically, the software code that controls how a robotic arm picks up an object had to be customized for each individual type of item it interacts with. That involved a significant amount of manual work.

Intrinsic used Isaac Sim, a robot simulation platform developed by Nvidia, to create an AI system that can automate the process. It can generate the code a robot requires to pick up a given item without manual input. Moreover, the AI is capable of adapting this code to address the fact that different robotic arms often use different types of gripping instruments to pick up objects.
