AI

Amazon Web Services Inc. today announced an update on its commitment to responsible generative artificial intelligence at its annual Summit in New York.

Before the summit, which is being covered by theCUBE, SiliconANGLE’s livestreaming studio, I spoke with two AWS team members — Diya Wynn, responsible AI lead, and Anubhav Mishra, principal product manager for Guardrails for Amazon Bedrock.

Wynn described ongoing work to tackle problems in generative AI, such as hallucinations, as an important part of the company's overall effort to support responsible AI. To help customers combat hallucinations, AWS announced Contextual Grounding Check, a new capability in Guardrails for Amazon Bedrock that detects hallucinations in model responses by checking them against a reference source and the user's query. The company claims it can filter more than 75% of hallucinated responses for retrieval-augmented generation, or RAG, and summarization workloads.

“Hallucinations tend to be one of those areas of concern for customers as they look to leverage larger language models,” Wynn told me. “And we’ve seen some examples of systems publicly and otherwise, perhaps not giving you factual information.”

During the call, I inquired about why hallucinations continue to happen: Would a lack of data cause the large language model to infer something incorrectly? Mishra said generative AI models are designed to predict the next best token or word. At times, those predictions can be incorrect, leading to hallucinations.

“Even if you’ve provided a certain amount of enterprise context or enterprise information as part of the input model, the model’s output can get confused,” he told me. “They can conflate information, they can combine, or they can, at times, also generate new information based on their training data.”

Another aspect Amazon is trying to cover with its commitment to responsible AI is relevance, Mishra said. “If a user has asked a certain question, it is possible that the model still generated information that’s completely grounded in your enterprise data but did not answer the question,” he said. “So that is another kind of hallucination also covered as part of this. Grounding and relevance are the two different paradigms we’re evaluating in Contextual Grounding Check.”
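To make the distinction between grounding and relevance concrete, here is a toy sketch in Python. It is not how Contextual Grounding Check works internally, which AWS did not describe in detail on the call. The token-overlap scoring, the function names and the threshold values are all assumptions chosen only for illustration.

```python
import re


def tokens(text):
    """Lowercased alphanumeric tokens of a string, as a set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap(part, whole):
    """Fraction of the tokens in `part` that also appear in `whole`."""
    part_tokens = tokens(part)
    if not part_tokens:
        return 0.0
    return len(part_tokens & tokens(whole)) / len(part_tokens)


def check_response(source, query, response,
                   grounding_threshold=0.7, relevance_threshold=0.5):
    """Toy stand-in for a grounding-and-relevance check.

    grounding: how much of the response is supported by the reference source.
    relevance: how much of the user's query is addressed by the response.
    Both scores and both thresholds are hypothetical.
    """
    grounding = overlap(response, source)
    relevance = overlap(query, response)
    blocked = grounding < grounding_threshold or relevance < relevance_threshold
    return {"grounding": grounding, "relevance": relevance, "blocked": blocked}


source = "The refund window is 30 days. Shipping takes 5 business days."
query = "How long is the refund window?"

# Grounded in the source and answers the question.
good = check_response(source, query, "The refund window is 30 days.")

# Fully grounded in the source, but does not answer the question.
off_topic = check_response(source, query, "Shipping takes 5 business days.")

# Answers the question, but adds details the source does not contain.
invented = check_response(
    source, query, "The refund window is 90 days and includes free gifts."
)
```

The second case is the one Mishra singled out: every word of the response comes from the enterprise data, yet the response is still flagged because it does not address what the user asked.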

Key components of the announcement are as follows:

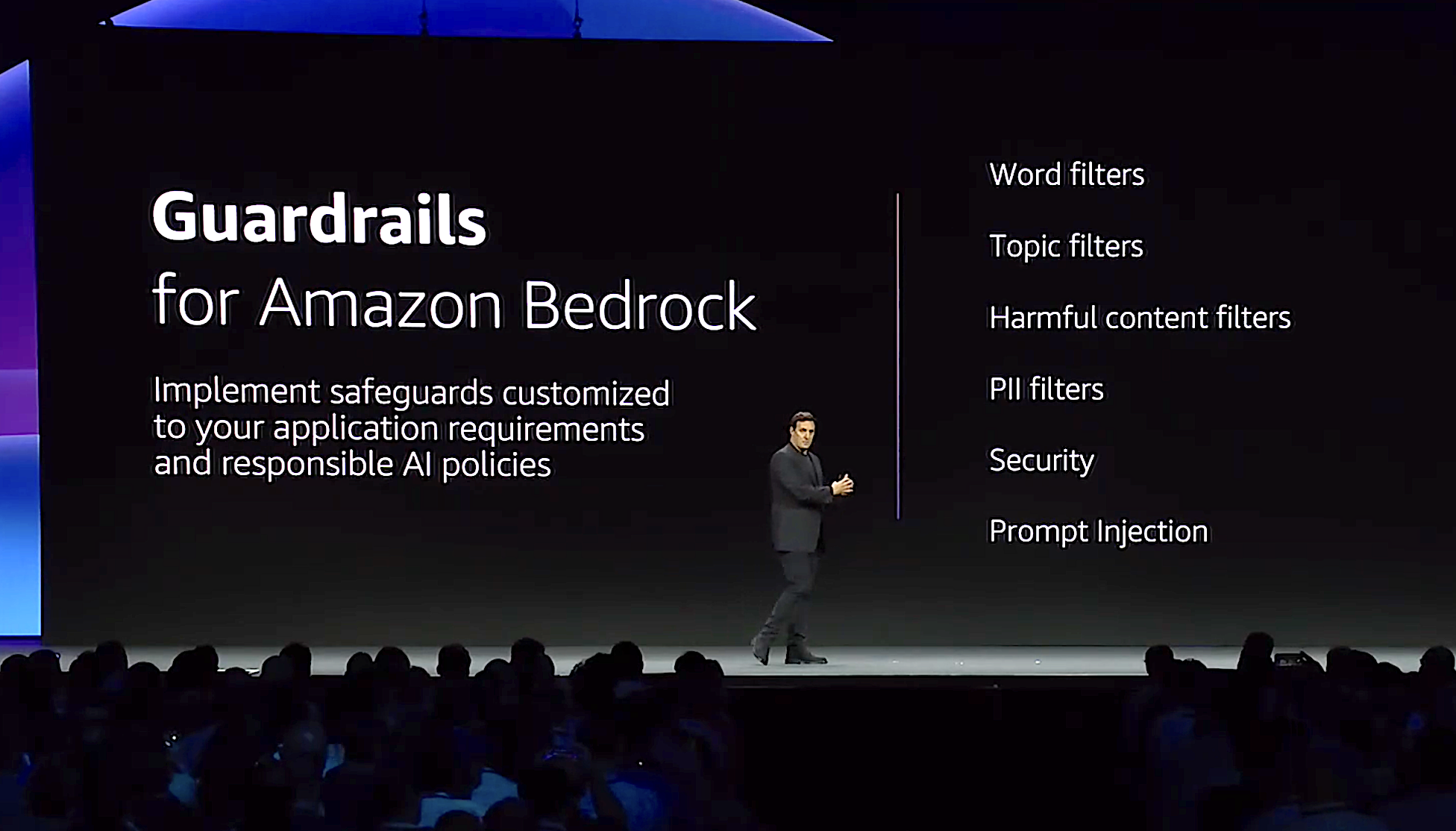

In April 2024, Amazon introduced new tools to help AWS customers and Amazon’s own businesses build generative AI responsibly. These tools include Guardrails for Amazon Bedrock and Model Evaluation in Amazon Bedrock, which are designed to introduce safeguards, prevent harmful content, and evaluate models against safety and accuracy criteria. According to the company, Guardrails helps block up to 85% more harmful content than the protection natively provided by foundation models on Amazon Bedrock.

Aside from Contextual Grounding Check, AWS also announced an independent API for Guardrails at the NY Summit, enabling its customers to apply safeguards for their generative AI applications across different foundation models.
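For readers who want a sense of what calling a standalone Guardrails API might look like, here is a minimal Python sketch. It assumes the boto3 `bedrock-runtime` client and its `apply_guardrail` operation, with the request shape as I understand it from AWS documentation. The guardrail ID, version and region are placeholders, and the helper names are hypothetical, so check the current API reference before relying on any of it.

```python
def build_apply_guardrail_request(guardrail_id, guardrail_version,
                                  source_text, query, model_answer):
    """Build the keyword arguments for an ApplyGuardrail call.

    The qualifiers tell the guardrail which text is the reference source,
    which is the user's query, and which is the content to be checked.
    """
    return {
        "guardrailIdentifier": guardrail_id,    # placeholder value
        "guardrailVersion": guardrail_version,  # placeholder value
        "source": "OUTPUT",                     # evaluating a model response
        "content": [
            {"text": {"text": source_text, "qualifiers": ["grounding_source"]}},
            {"text": {"text": query, "qualifiers": ["query"]}},
            {"text": {"text": model_answer, "qualifiers": ["guard_content"]}},
        ],
    }


def guardrail_intervened(request, region="us-east-1"):
    """Send the request; needs boto3 and AWS credentials (assumed setup)."""
    import boto3  # imported here so the request builder works without it

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.apply_guardrail(**request)
    return response["action"] == "GUARDRAIL_INTERVENED"


request = build_apply_guardrail_request(
    guardrail_id="my-guardrail-id",  # hypothetical identifier
    guardrail_version="1",
    source_text="The refund window is 30 days.",
    query="How long is the refund window?",
    model_answer="The refund window is 90 days.",
)
```

Because the check is decoupled from model invocation, the answer being evaluated could come from any foundation model, which is the point of offering the API independently.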

Amazon said it uses manual red-teaming, among other methods, to test its AI systems, including Amazon Titan models. Red-teaming involves human testers probing an AI system for flaws in an adversarial style, complementing other testing techniques like automated benchmarking and human evaluation. Each model undergoes repeated human testing for safety, security, privacy, veracity and fairness.

To address the potential for disinformation that sometimes accompanies generative AI, Amazon introduced the Amazon Titan Image Generator in April 2024. The model adds an invisible watermark to every image it generates by default. Amazon says the watermark is tamper-resistant and that its watermark detection can identify AI-generated images, which should help increase transparency around AI-generated content and combat disinformation.

Amazon has engaged with numerous organizations to develop AI responsibly and build global trust. In February 2024, Amazon joined the U.S. Artificial Intelligence Safety Institute Consortium, collaborating with the National Institute of Standards and Technology to establish new measurement science for trustworthy AI. Amazon is also contributing $5 million in AWS compute credits to support the development of NIST’s tools and methodologies.

Amazon also joined the Tech Accord to Combat Deceptive Use of AI in 2024 Elections at the Munich Security Conference, working to advance safeguards against deceptive activity and protect election integrity.

In addition, in April 2024, Amazon joined Thorn, All Tech is Human, and several other organizations to prevent risks related to the misuse of generative AI technologies in perpetrating harms against children.

AWS released a new AI Service Card for Amazon Titan Text Premier in May to support responsible, transparent, generative AI. AI Service Cards provide information on intended use cases, limitations, responsible AI design choices, and deployment and performance optimization best practices for AI services and models. The company has created more than 10 AI Service Cards as part of a comprehensive development process.

Amazon says it is committed to promoting the safe and responsible development of AI for societal benefit. For example, Brainbox AI uses generative AI to optimize energy usage and reduce carbon emissions in commercial buildings.

Amazon also prioritizes education and continuous learning on responsible AI for employees and the public. Since July 2023, Amazon has delivered tens of thousands of training hours to its employees on risk assessments and considerations surrounding fairness, privacy and model explainability. Amazon has recently launched new courses on safe and responsible AI use as part of its “AI Ready” initiative, which aims to provide free AI skills training to 2 million people globally by 2025.

As evidenced by this announcement, Amazon is still striving to create a safe, responsible and trustworthy environment for the development of AI technology. The raison d’être for Amazon is what it always has been: Do what’s best for the customers, and success will follow.

“We want to ensure that the customers are getting accurate information back,” Wynn said. “So I’m excited about capabilities like Contextual Grounding Check that allow them to feel much more comfortable deploying generative AI services and making them available to their customers.”

Because of its size and market position, Amazon can dictate the rules in many business lines. It is encouraging that it is taking the initiative to put up guardrails in such an essential and rapidly moving space, with so much at stake. Hopefully, all other players in the AI ecosystem will heed the call and join in. My feeling is that these kinds of initiatives should be table stakes.

Zeus Kerravala is a principal analyst at ZK Research, a division of Kerravala Consulting. He wrote this article for SiliconANGLE.
