AI

There hasn’t been a tech tailwind as strong as artificial intelligence since the early days of the internet. Many companies are vying to be the kingpin in the AI battleground, with Nvidia Corp. taking the early lead.

The company has kept that position by taking a systems approach to AI. One of the key differentiators for Nvidia has been NVLink and NVSwitch, which enable faster connectivity between graphics processing units to help with inferencing.

Large language models continue to grow in size and complexity, so demand for efficient, high-performance computing systems has grown with them. In a recent blog post, Nvidia examined the role of NVLink and NVSwitch technologies in enabling the scalability and performance required for LLM inference, particularly in multi-GPU environments.

After reading the post, I was intrigued, so I interviewed Nvidia’s Dave Salvator, director of accelerated computing products, Nick Comly, product manager for AI platform inference, and Taylor Allison, senior product marketing manager for networking for AI, to understand better how NVLink and NVSwitch can significantly speed up the inferencing process.

Salvator told me that the architecture of NVLink and NVSwitch is critical. “It’s already helping us today and will help us even more going forward, delivering generative AI inference to the market,” he said.

In reality, the points made are fundamental network principles that have never been applied at the silicon layer. For example, performance would be terrible if we connected several computers with point-to-point connections, but performance would improve dramatically through a switch.

“That’s a good way of thinking about it,” he told me. “I mean, point-to-point has a lot of limitations, as you correctly point out. The blog gets into this notion of talking about computing versus communication time. And the more communication becomes part of your performance equation, the more benefits you’ll ultimately see from NVSwitch and NVLink.”

In the blog, Nvidia notes that LLMs are computationally intense, often requiring the power of multiple GPUs to handle the workload efficiently. In a multi-GPU setup, the processing of each model layer is distributed across different GPUs.

However, after each GPU processes its portion, it must share the results with other GPUs before proceeding to the next layer. This step is crucial and demands extremely fast communication between GPUs to avoid bottlenecks that could slow down the entire inference process.
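The trade-off between compute time and communication time can be sketched with a simple model. The Python below is a rough illustration, not Nvidia's methodology: it assumes that each layer's compute and synchronization happen back to back with no overlap, and the function names, layer count, per-layer compute time and per-layer sync payload are all hypothetical values chosen only to show the shape of the relationship.

```python
def step_time_ms(num_layers: int,
                 compute_ms_per_layer: float,
                 sync_gb_per_layer: float,
                 bandwidth_gbs: float) -> float:
    """Estimate the time for one pass through all layers.

    Assumption: compute and GPU-to-GPU synchronization for each layer
    run back to back, with no overlap between them.
    """
    comm_ms = sync_gb_per_layer / bandwidth_gbs * 1000.0
    return num_layers * (compute_ms_per_layer + comm_ms)


def comm_share(compute_ms_per_layer: float,
               sync_gb_per_layer: float,
               bandwidth_gbs: float) -> float:
    """Fraction of each layer's time spent on communication."""
    comm_ms = sync_gb_per_layer / bandwidth_gbs * 1000.0
    return comm_ms / (compute_ms_per_layer + comm_ms)


if __name__ == "__main__":
    # Hypothetical workload: 80 layers, 1 ms of compute and 0.09 GB
    # of data to synchronize per layer.
    for bandwidth in (128.0, 900.0):  # GB/s, illustrative values
        total = step_time_ms(80, 1.0, 0.09, bandwidth)
        share = comm_share(1.0, 0.09, bandwidth)
        print(f"{bandwidth:6.0f} GB/s: {total:6.1f} ms, "
              f"{share:.0%} spent communicating")
```

In this model, the larger the share of time spent communicating, the more a faster interconnect shortens the whole step, which is the relationship Salvator describes.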

Traditional methods of GPU communication, such as point-to-point connections, face limitations as they distribute available bandwidth among multiple GPUs. As the number of GPUs in a system increases, these connections can become a bottleneck, leading to increased latency and reduced overall performance.

NVLink is Nvidia’s solution to the challenges of GPU-to-GPU communication in large-scale models. In the Hopper platform generation, it offers a communication bandwidth of 900 gigabytes per second between GPUs, far surpassing the capabilities of traditional connections. NVLink ensures that data can be transferred quickly and efficiently between GPUs while minimizing latency and keeping the GPUs fully utilized. The Blackwell platform will increase the bandwidth to 1.8 terabytes per second per GPU, and the Nvidia NVLink Switch Chip will enable 130 TB/s of GPU bandwidth in one 72-GPU NVLink domain (NVL72).

Taylor Allison shared some further details about NVLink. “NVLink is a different technology from InfiniBand,” he told me. “We’re able to leverage some of the knowledge and best practices that we have from the InfiniBand side of the house with the design of this architecture — in particular, things like in-network computing that we’ve been doing for a long time in InfiniBand. We’ve been able to port those to NVLink, but they’re different.”

He quickly compared InfiniBand and Ethernet and then described how NVLink fits in. “InfiniBand, like Ethernet, is using a traditional switching/routing protocol — an OSI model you don’t have in NVLink,” he said. “NVLink is a compute fabric and uses different semantics.”

He told me that NVLink is a high-speed interconnect technology that enables a shared memory pool, a different paradigm from Ethernet and InfiniBand. Nvidia designed NVLink’s architecture to scale with the number of GPUs, ensuring the communication speed remains consistent even as GPUs are added to the system. This scalability is crucial for LLMs, where the computational demands continuously increase.

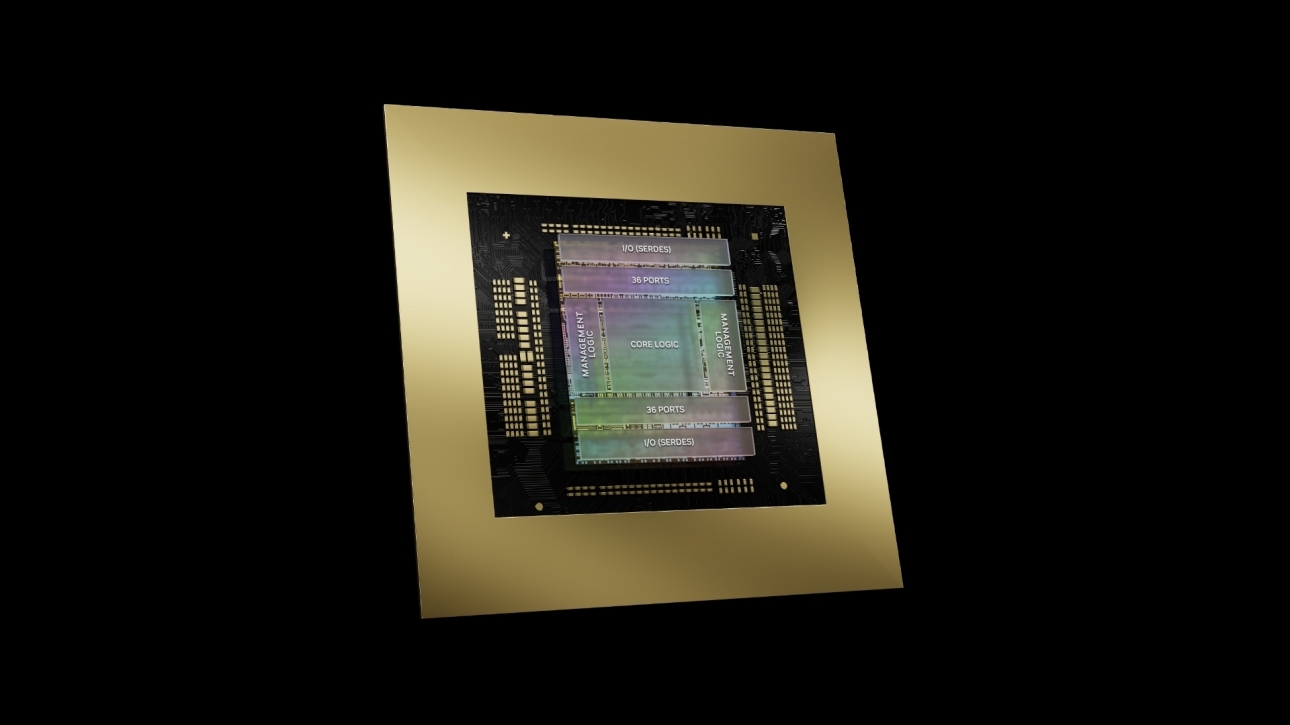

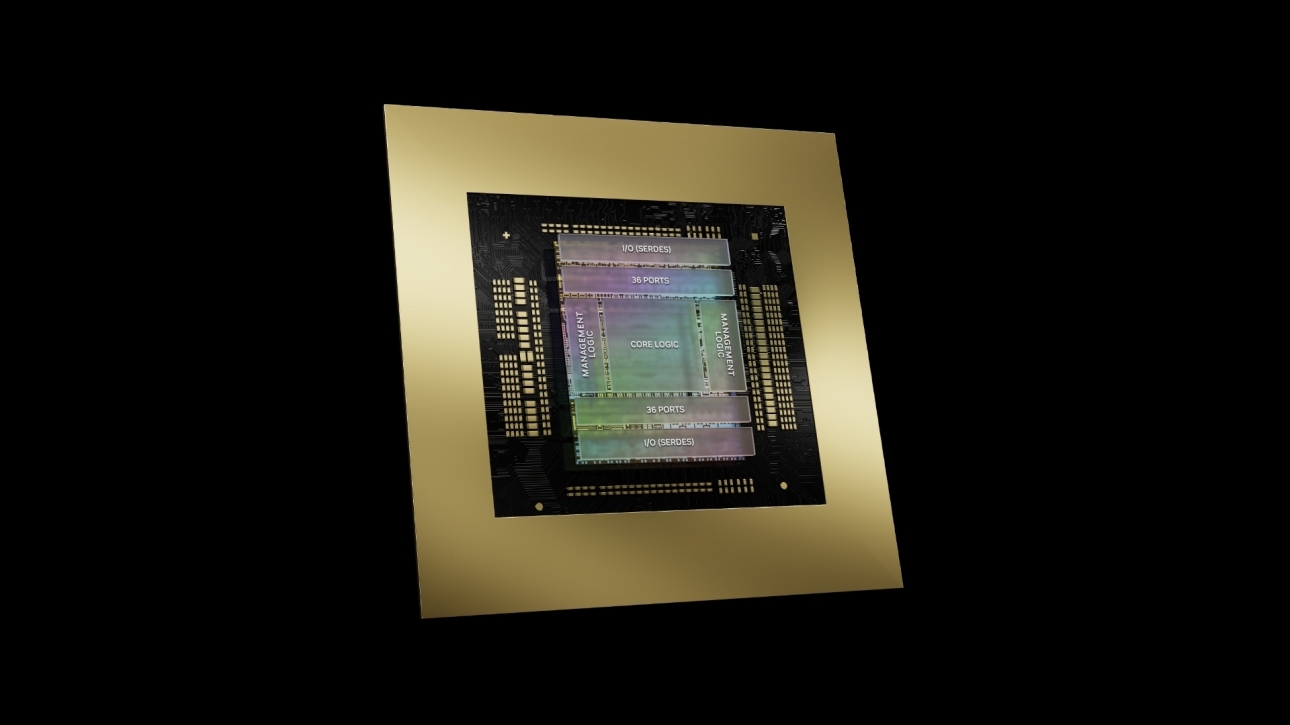

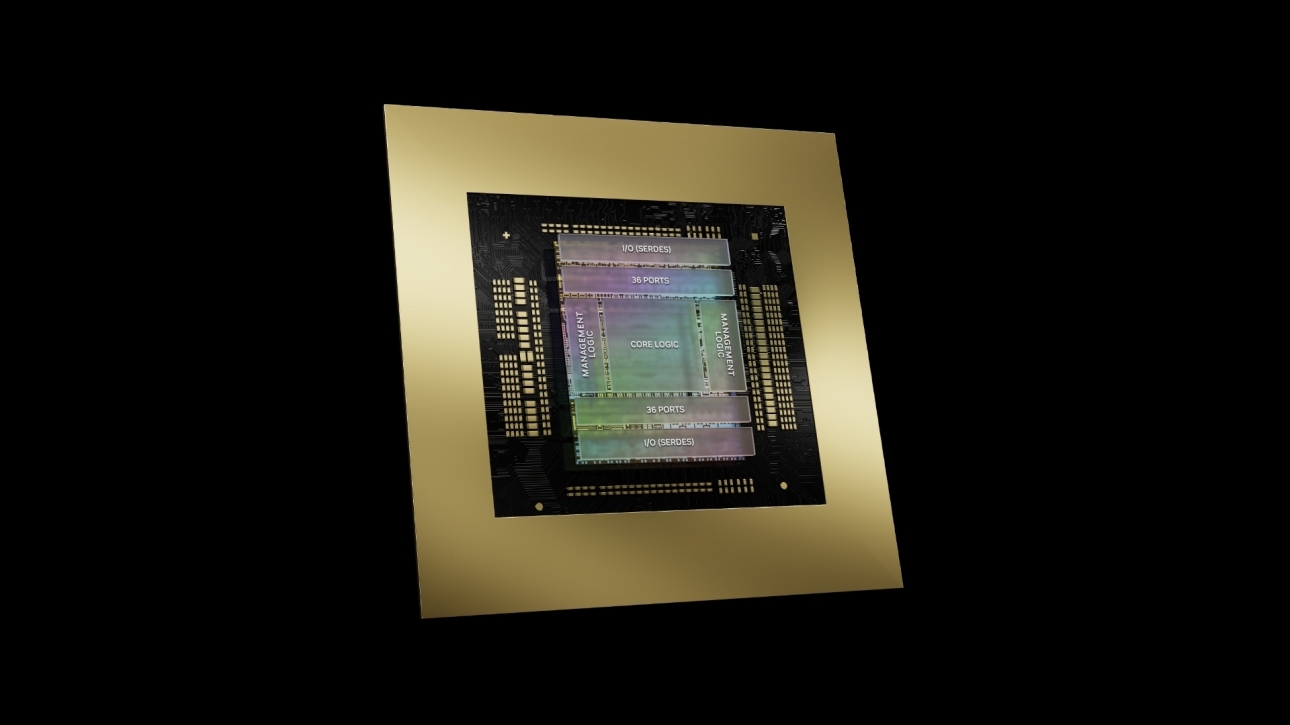

To further enhance multi-GPU communication, Nvidia introduced NVSwitch, a switch chip that enables all GPUs in a system to communicate simultaneously at full NVLink bandwidth. Unlike point-to-point connections, where multiple GPUs must split bandwidth, NVSwitch ensures that each GPU can transfer data at maximum speed without interference from other GPUs.
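The difference between splitting and not splitting bandwidth can be put in rough numbers. The sketch below assumes, purely for illustration, that a point-to-point design divides a GPU's total link bandwidth evenly among its peers, while a switched design lets any pair of GPUs use the full figure. The function names, the eight-GPU system and the 20 GB payload are hypothetical, and the 900 GB/s total is an assumed Hopper-class value.

```python
def per_peer_bandwidth_gbs(total_gbs: float,
                           num_gpus: int,
                           switched: bool) -> float:
    """Bandwidth available between any two GPUs, in GB/s.

    Assumption: without a switch, a GPU's total link bandwidth is
    divided evenly across its (num_gpus - 1) peers; with a switch,
    any pair can use the full bandwidth.
    """
    if num_gpus < 2:
        raise ValueError("need at least two GPUs to communicate")
    if switched:
        return total_gbs
    return total_gbs / (num_gpus - 1)


def transfer_time_ms(payload_gb: float, bandwidth_gbs: float) -> float:
    """Time to move payload_gb between two GPUs, in milliseconds."""
    return payload_gb / bandwidth_gbs * 1000.0


if __name__ == "__main__":
    total = 900.0  # GB/s per GPU, assumed Hopper-class figure
    for switched in (False, True):
        bandwidth = per_peer_bandwidth_gbs(total, 8, switched)
        elapsed = transfer_time_ms(20.0, bandwidth)  # hypothetical 20 GB
        label = "switched" if switched else "point-to-point"
        print(f"{label:>14}: {bandwidth:6.1f} GB/s per pair, "
              f"{elapsed:6.1f} ms")
```

Under these assumptions the gap widens as GPUs are added, because the point-to-point share shrinks while the switched figure stays constant.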

“Blackwell has our fourth generation of NVSwitch,” Salvator said. “This is a technology we’ve been evolving. And this is not the first time we’ve done a switching chip on our platform. The first NVSwitch was in the Volta architecture.” He added that NVSwitch delivers benefits on both the inference and training sides.

“Training is where you invest in AI,” Salvator told me. “And when you go to inference and deploy, an organization starts seeing the return on that investment. And so if you can have performance benefits on both sides, the presence of the NVSwitch and the NVLink fabric is delivering value.”

NVSwitch’s nonblocking architecture enables faster data sharing between GPUs, critical for maintaining high throughput during model inference. This especially benefits models such as Llama 3.1 70B, which has substantial communication demands. Using NVSwitch in these scenarios can lead to up to 1.5 times greater throughput, enhancing the overall efficiency and performance of the system.

The blog post looked at NVLink and NVSwitch’s impact on using the Llama 3.1 70B model. In Nvidia’s test, the results showed that systems equipped with NVSwitch outperformed those using traditional point-to-point connections, particularly when handling larger batch sizes.

According to Nvidia, NVSwitch reduced the time required for GPU-to-GPU communication and improved overall inference throughput. This improvement translates to faster response times in real-world applications, crucial for maintaining a seamless user experience in AI-driven products and services.

Nvidia’s Blackwell architecture introduces the fifth generation of NVLink and new NVSwitch chips. These advancements double the bandwidth to 1,800 GB/s per GPU and improve the efficiency of GPU-to-GPU communication, which Nvidia says will enable the processing of even larger and more complex models in real time. Only time will tell on this, though.
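The bandwidth figures, at least, can be sanity-checked with simple arithmetic. The short Python sketch below uses hypothetical helper names and assumes the aggregate figure for a 72-GPU NVLink domain is simply the per-GPU bandwidth multiplied by the GPU count. It is not a description of how Nvidia derives its numbers.

```python
def aggregate_bandwidth_tbs(per_gpu_gbs: float, num_gpus: int) -> float:
    """Total GPU bandwidth across a domain, in TB/s.

    Assumption: the aggregate is per-GPU bandwidth times GPU count,
    with 1 TB/s taken as 1,000 GB/s.
    """
    return per_gpu_gbs * num_gpus / 1000.0


def generation_ratio(new_gbs: float, old_gbs: float) -> float:
    """How many times faster the newer link is than the older one."""
    return new_gbs / old_gbs


if __name__ == "__main__":
    hopper_gbs = 900.0      # GB/s per GPU
    blackwell_gbs = 1800.0  # GB/s per GPU
    ratio = generation_ratio(blackwell_gbs, hopper_gbs)
    total = aggregate_bandwidth_tbs(blackwell_gbs, 72)
    print(f"Blackwell vs. Hopper: {ratio:.1f}x")
    print(f"72-GPU domain: about {total:.1f} TB/s")
```

The product comes out just under the 130 TB/s that Nvidia quotes for an NVL72 domain, which suggests the published number is a rounded aggregate.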

Nvidia’s NVLink and NVSwitch technologies are critical components in the ongoing development of LLMs. In thinking about these technologies and the rapid pace of development, there are three key points to keep in mind:

These developments are exciting, and it will be interesting to see how the industry and customers respond. Nvidia continues to push the AI envelope, and that has kept it in the lead, but the race is far from over.

Zeus Kerravala is a principal analyst at ZK Research, a division of Kerravala Consulting. He wrote this article for SiliconANGLE.
