INFRA

IBM Corp. today revealed the specifications of its upcoming Telum II Processor, which is set to power the next generation of its iconic mainframe systems and boost their relevance in the artificial intelligence processing industry.

Details of the new chip were unveiled at the Hot Chips 2024 event taking place at Stanford University this week. The company said its enhanced processing capabilities will help to accelerate both traditional AI models and emerging large language models using a new technique known as “ensemble AI.”

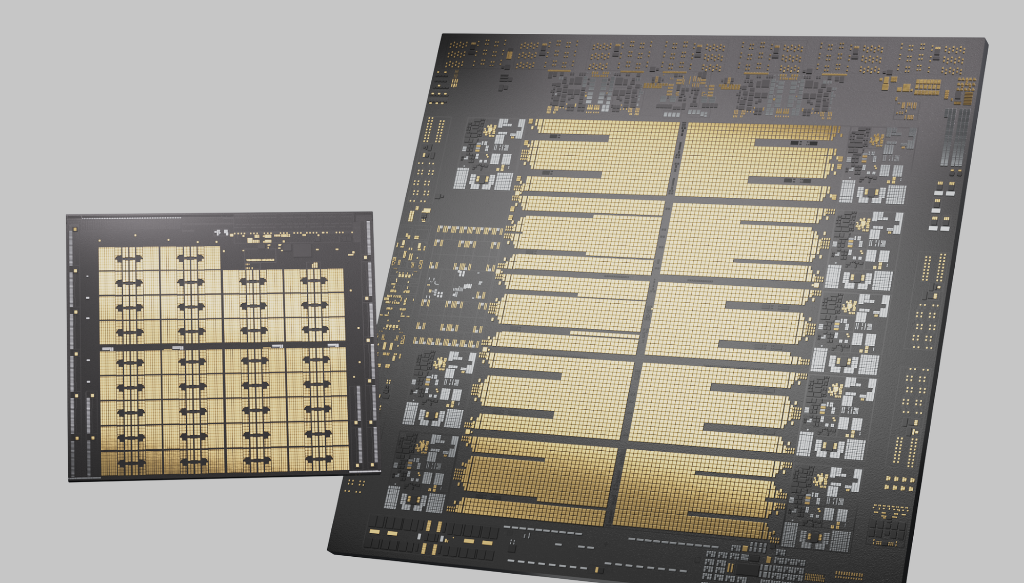

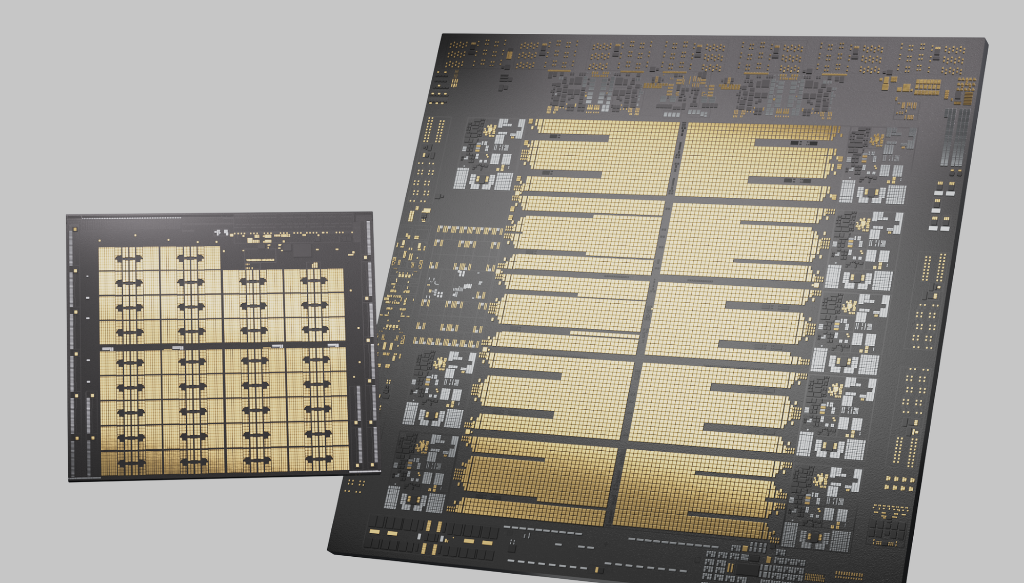

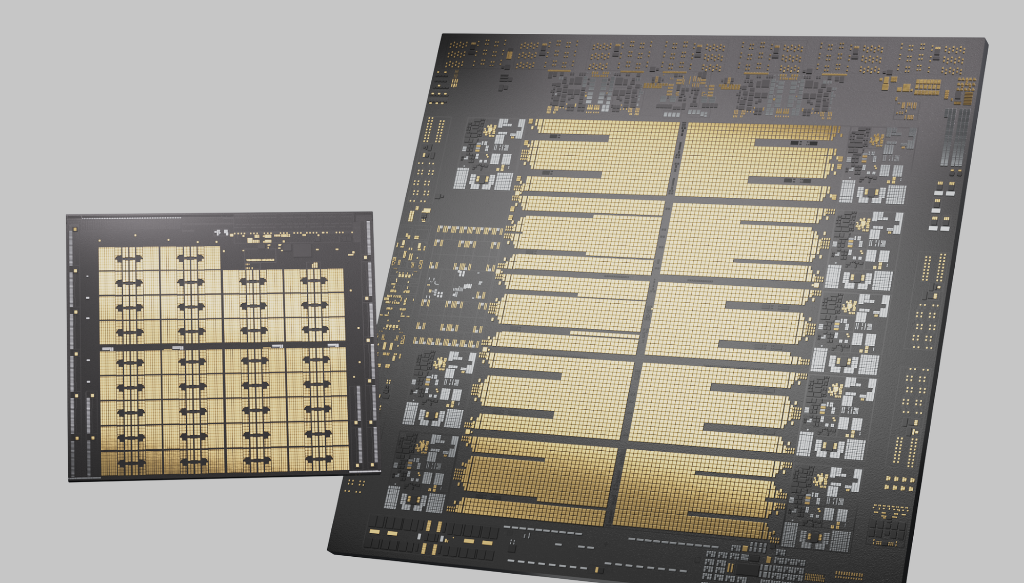

The IBM Telum II Processor (pictured) notably features a completely new data processing unit, which is used to offload certain computing tasks and improve the overall computing efficiency of the chip. According to the company, the new DPU is designed to accelerate complex input/output protocols for networking and storage on the company’s mainframe systems.

In addition to the new chip, the company provided details of its all-new IBM Spyre Accelerator that’s meant to be used in tandem with the Telum chips, providing additional oomph for AI workloads.

IBM is promising quite a boost in overall compute performance when its next-generation mainframe launches later in the year. The new Telum chip, which is built on Samsung Foundry's 5-nanometer process, will sit at the heart of the new IBM Z mainframe, providing increased frequency and memory capacity, which enables it to deliver a 40% improvement in cache and integrated AI accelerator core performance.

Digging deeper, IBM said the new chip, the successor to the original Telum processor that debuted in 2021, features eight high-performance cores running at 5.5 gigahertz, with 36 megabytes of Level 2 cache per core. Total on-chip cache capacity increases by 40%, to 360 megabytes.
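The cache figures quoted above can be cross-checked with simple arithmetic. The Python sketch below uses only the article's numbers (eight cores, 36 MB per core, 360 MB total, 40% growth); it is a back-of-envelope check, not a description of how IBM actually allocates the cache. It shows that the per-core caches account for 288 MB, leaving 72 MB of the 360 MB total that these figures do not attribute to the cores, and that a 40% increase to 360 MB implies a predecessor total of roughly 257 MB.

```python
# Figures quoted in the article for Telum II.
CORES = 8
CACHE_PER_CORE_MB = 36
TOTAL_ON_CHIP_MB = 360
GROWTH = 0.40  # the quoted 40% increase in on-chip cache capacity

# Cache attached to the eight cores themselves.
per_core_total_mb = CORES * CACHE_PER_CORE_MB

# Share of the 360 MB total not covered by the per-core figure.
unattributed_mb = TOTAL_ON_CHIP_MB - per_core_total_mb

# Predecessor capacity implied by "a 40% increase, to 360 MB".
implied_predecessor_mb = TOTAL_ON_CHIP_MB / (1 + GROWTH)

print(f"Per-core caches: {per_core_total_mb} MB")
print(f"Not attributed to cores: {unattributed_mb} MB")
print(f"Implied predecessor total: {implied_predecessor_mb:.1f} MB")
```

The article does not say where the remaining 72 MB sits on the chip, so the sketch reports it only as unattributed.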

In addition, the Telum II chip comes with an enhanced integrated AI accelerator for low-latency, high-throughput in-transaction AI inference, making it better suited to applications such as real-time fraud detection in financial transactions.

Meanwhile, the integrated I/O Acceleration Unit DPU should result in a significant improvement in the chip’s data handling capabilities, with IBM promising a 50% increase in overall I/O density.

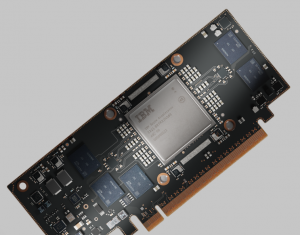

As for the Spyre Accelerator (pictured, right), it’s a purpose-built and enterprise-grade accelerator that’s specifically designed for customers that want to use their mainframe systems for AI processing. It’s designed to ramp up the performance of the most complex AI models, IBM said, including generative AI applications.

As for the Spyre Accelerator (pictured, right), it’s a purpose-built and enterprise-grade accelerator that’s specifically designed for customers that want to use their mainframe systems for AI processing. It’s designed to ramp up the performance of the most complex AI models, IBM said, including generative AI applications.

To do this, it packs 1 terabyte of memory spread across eight cards in a regular I/O drawer. It has 32 compute cores that support the int4, int8, fp8 and fp16 data types, which IBM says helps to reduce latency and improve throughput across a wide range of AI applications.
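One way to see why the narrower data types matter is memory footprint: halving the bits per weight doubles how many weights fit in the same memory. The sketch below is a hypothetical sizing exercise, not an IBM figure. It assumes "1 terabyte" means 1 TiB split evenly across the eight cards, and it counts only model weights, ignoring activations, caches and runtime overhead, so the results are loose upper bounds.

```python
# Hypothetical back-of-envelope sizing; not an IBM figure.
TOTAL_MEMORY_GIB = 1024  # assumption: "1 terabyte" read as 1 TiB
CARDS = 8

# Storage cost per model weight for each data type the article lists.
BYTES_PER_VALUE = {"int4": 0.5, "int8": 1, "fp8": 1, "fp16": 2}

per_card_gib = TOTAL_MEMORY_GIB / CARDS
per_card_bytes = per_card_gib * 2**30


def max_weights_billions(dtype: str) -> float:
    """Upper bound on weights one card could hold, ignoring all non-weight memory use."""
    return per_card_bytes / BYTES_PER_VALUE[dtype] / 1e9


for dtype in BYTES_PER_VALUE:
    print(f"{dtype}: ~{max_weights_billions(dtype):.1f}B weights per card")
```

Under these assumptions each card has 128 GiB, and moving from fp16 to int4 quadruples the number of weights that fit, which is one reason low-precision formats are popular for inference.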

IBM explained that Telum II and Spyre have been designed to work in tandem, providing a scalable architecture for ensemble methods of AI modeling. Ensemble methods involve combining multiple machine learning and deep learning AI models with encoder LLMs. By drawing on the strengths of each model architecture, ensemble models can deliver more accurate results compared to using a single model type alone.
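The article does not say how IBM combines the models in an ensemble. As one common illustration, the sketch below uses soft voting: each member model returns a fraud probability, and the ensemble reports their weighted average. The two member models, their thresholds, the weights and the transaction fields are all hypothetical stand-ins, with a simple tabular model and a keyword check playing the roles of a traditional ML model and an encoder LLM.

```python
from typing import Callable, Sequence

# A member "model" maps a transaction record to a fraud probability in [0, 1].
Model = Callable[[dict], float]


def tabular_score(txn: dict) -> float:
    # Hypothetical stand-in for a traditional ML model on structured fields.
    return 0.9 if txn["amount"] > 10_000 else 0.1


def text_score(txn: dict) -> float:
    # Hypothetical stand-in for an encoder LLM reading a free-text memo.
    return 0.8 if "urgent" in txn["memo"].lower() else 0.2


def ensemble_score(models: Sequence[Model], weights: Sequence[float], txn: dict) -> float:
    """Soft voting: weighted average of the member models' probabilities."""
    if len(models) != len(weights):
        raise ValueError("need exactly one weight per model")
    total = sum(weights)
    return sum(w * m(txn) for m, w in zip(models, weights)) / total


txn = {"amount": 12_500, "memo": "URGENT wire transfer"}
score = ensemble_score([tabular_score, text_score], [0.6, 0.4], txn)
print(f"fraud probability: {score:.2f}")
```

Weighted averaging is only one option; stacking, where a second model learns how to combine the members' outputs, is another widely used ensemble design.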

Tina Tarquinio, IBM’s vice president of product management for IBM Z and LinuxONE, said the new chips enable the company to remain “ahead of the curve” as it strives to cater to the escalating demands of AI. “The Telum II processor and Spyre accelerator are built to deliver high-performance, secured and more power efficient enterprise computing solutions,” she promised.

The company said Telum II is suitable for a range of specialized AI applications that are traditionally powered by its Z mainframe systems. For instance, it said ensemble methods of AI are particularly well suited for enhancing insurance fraud detection. The chips can also support anti-money-laundering systems, powering advanced algorithms that can spot suspicious financial activity in real time and reduce the risk of financial crime.

In addition, IBM said Telum II is an ideal foundation for generative AI assistants, supporting tasks such as knowledge transfer and code explanation.

The company said the Telum II chips will make their debut in the next version of the IBM Z mainframe and IBM LinuxONE systems, which are set to be launched later this year.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.