When your stock has been called “the most important on planet Earth” by Goldman Sachs Group, it’s hard to exceed expectations.

That’s the challenge Nvidia Corp. encountered today when it announced 122% growth in second-quarter revenue. The results beat analyst expectations and the company raised its revenue forecast for the third quarter, yet its shares fell more than 7% after hours. The pullback is perhaps unsurprising given that the company has posted operating earnings surprises of between 10% and 31% in each of the last four quarters.

“This is the problem with being the face of an entire movement,” said Alvin Nguyen, senior analyst at Forrester Research Inc. “We want to be wowed by larger and larger margins every time.”

There was little in the numbers to indicate that Nvidia’s core graphics processing unit business, which is fueling the generative artificial intelligence craze, is under serious competitive pressure. Earnings per share of 68 cents more than doubled from 27 cents a year earlier and beat analysts’ expectations of 64 cents.

Revenue of $30.04 billion, up from $13.51 billion a year earlier, beat the consensus estimate of $28.7 billion. Data center revenue jumped 154%, to $26.3 billion. Net profit more than doubled, to $16.6 billion.

Nvidia raised revenue expectations for the third quarter to $32.5 billion, slightly ahead of the consensus forecast of $31.77 billion.

If there was any weakness in the results, it was in the quarter’s 75.1% gross profit margin, down from 78.4% the previous quarter. Chief Financial Officer Colette Kress blamed the decline on design changes to its next-generation Blackwell GB200 GPUs. Nvidia recently announced a delay in Blackwell’s rollout, although it said it expects to ramp up shipments in the fourth quarter and generate several billion dollars of revenue.

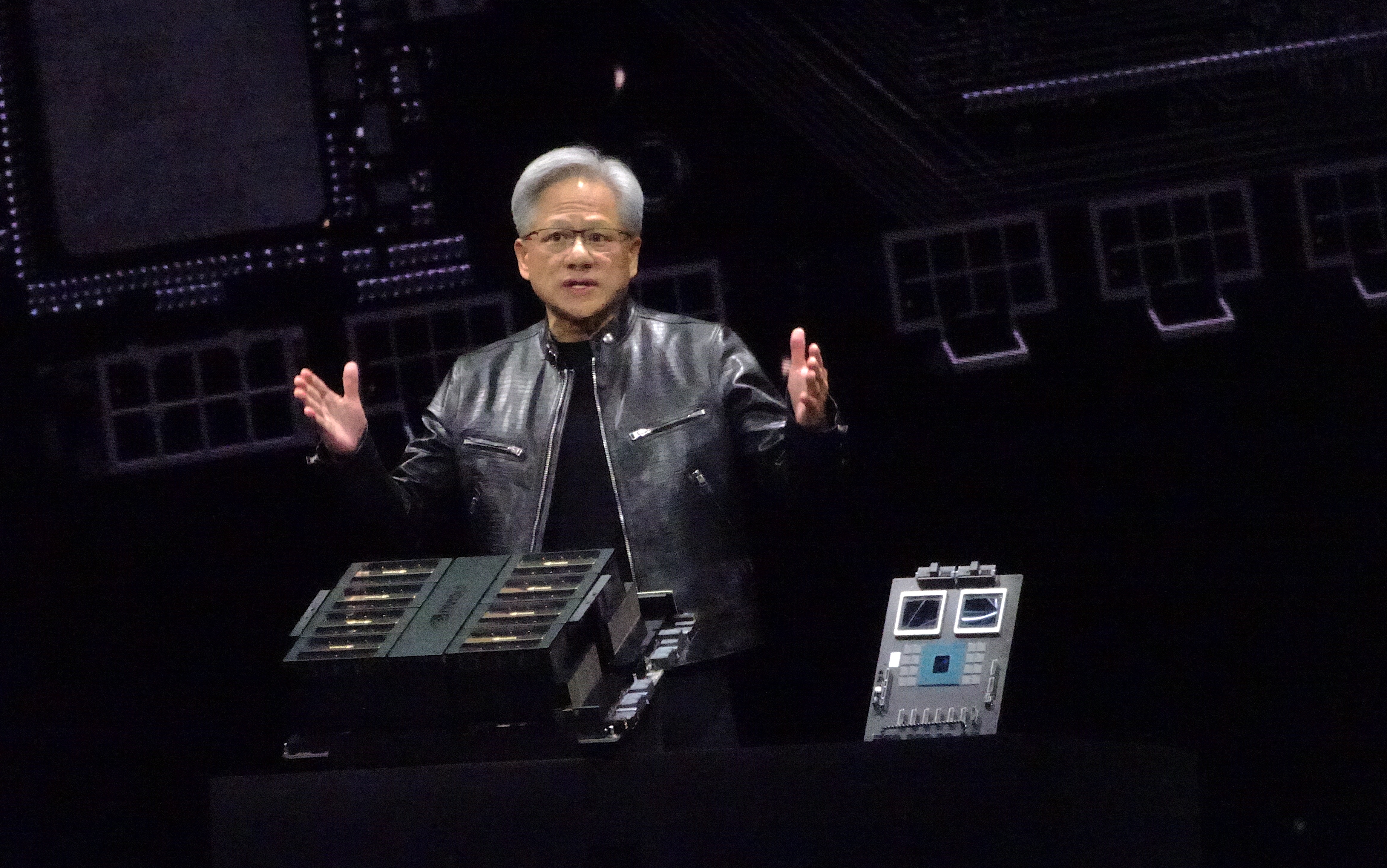

“Blackwell is an AI infrastructure platform that took nearly five years and seven one-of-a-kind chips to realize,” said Chief Executive Jensen Huang (pictured). “Customers want to deploy it as soon as they get their hands on it.”

Huang said Blackwell will fuel the rise of what he called “AI factories,” which are data centers outfitted with high-speed networks and the cabling, power and cooling to support extremely compute-intensive workloads. “Global data centers are in full throttle to modernize the entire computing stack with accelerated computing and generative AI,” he said.

Though the growth in Nvidia’s data center business has been impressive, Forrester’s Nguyen said the company may be putting too many eggs in that basket. “I always worry when too much revenue is being focused on too few markets,” he said, noting, “It’s not their fault. The market wants it.”

Building new data centers and retrofitting existing facilities is costly and not even practical in markets where power and water supplies are constrained, he said. “In some areas you can’t build a data center because it takes away power from 20,000 homes,” he said. “There’s a lot of local government pushback.” As a result, Nvidia’s expectations of nearly limitless growth in data center capacity may be unrealistic.

Nevertheless, hyperscaler cloud providers are figuring out ways around those barriers, and they could account for more than 45% of Nvidia’s data center revenue over the next several years, Lucas Keh, analyst at global research firm Third Bridge Group Ltd., said in remarks distributed to media outlets. “The GPU build out/revenue run rate is set to continue for the next 12 to 18 months,” he said. “Our experts have opined that 60% to 70% of hyperscaler training will be done on Blackwell by the end of 2025.”

Accelerated computing is a concept Nvidia has been promoting to compensate for the high power consumption of generative AI models and the slowing pace of CPU performance improvements. Accelerated computing uses specialized hardware, in particular GPUs, to speed up work dramatically through parallel processing, along with software that offloads work from central processing units, which execute tasks largely in serial fashion.

“CPU scaling has slowed to a crawl and yet demand hasn’t slowed,” Huang said. “The answer is accelerated computing. It allows you to compute at a much larger scale at lower cost and with much lower energy consumed. It’s not unusual to see someone saving 90% of their computing cost.”

Investors have been watching nervously over the past several quarters as rivals such as Advanced Micro Devices Inc. and Intel Corp. have set their sights on the GPU market. There have also been reports that China’s Huawei Technologies Co. Ltd. may be close to producing GPUs that equal the performance of Nvidia’s current H100 line.

That could be a problem in the Chinese market, which “grew sequentially and is a significant contributor to our data center revenue,” despite government export restrictions, Kress said.

For now, Nvidia remains the undisputed leader in AI silicon. MarketWatch columnist Louis Navellier reported that Alphabet Inc. has ordered more than 400,000 GB200 chips at a price of over $10 billion. Meta Platforms Inc. has also placed a $10 billion order to supply OpenAI LLC with 55,000 to 65,000 GB200 GPUs by the first quarter of 2025.

Even so, Nvidia has been working to diversify its revenue stream beyond GPUs. This year it has launched dozens of NIMs, which are packaged microservices that can be used to accelerate the deployment of foundation models on cloud platforms. It has also stepped up its release of CUDA-X libraries, which are building blocks for essential AI development tasks such as data preparation, model customization and training.

The software business is growing, but it exists primarily to move more hardware. “New libraries open new markets for Nvidia,” Huang said.

Networking has also become a major revenue source, contributing $3.7 billion during the quarter. The company said its high-speed Spectrum-X Ethernet networking platform is being broadly adopted by cloud service providers and enterprises. “Our networking footprint is much bigger than ever before,” Huang said.

Nguyen likened the diversified revenue streams to the “castle and moat” strategy in which GPUs are the castle and the moats are partnerships and ancillary businesses that keep competitors at bay.

“They have such a lead and so many people are familiar with their GPUs that they can optimize to a level others can’t,” he said. “All their platforms are going to be supported on day zero and Nvidia in some cases doesn’t have to lift a finger.”

Natalie Hwang, founding managing partner of Apeira Capital Advisors LLC, saw little reason to worry. “The true value of AI is still emerging,” she said. “As a key industry bellwether, Nvidia provides an early indication for those looking to get ahead of market consensus before the broader market fully appreciates AI’s implications.”

During the first half of fiscal 2025, Nvidia returned $15.4 billion to shareholders in the form of share repurchases and cash dividends.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.