Google Search is building on the generative artificial intelligence search experiences introduced with AI Overviews last year, as part of a broader effort that will ultimately see it go beyond simply providing information.

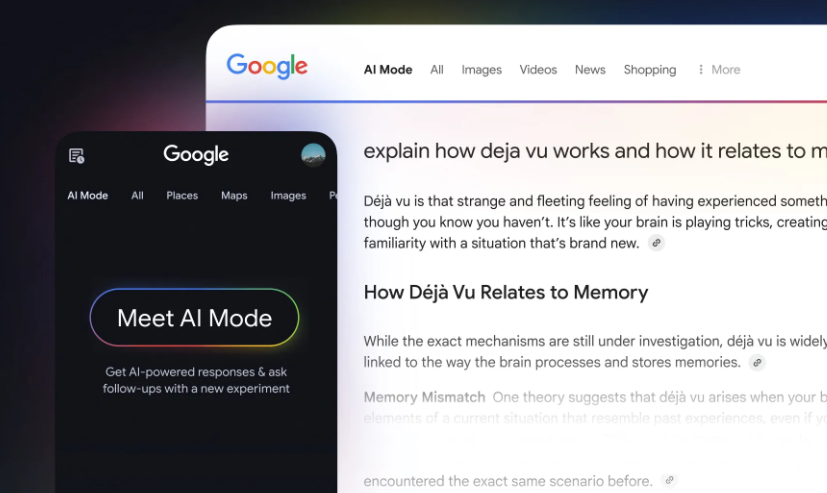

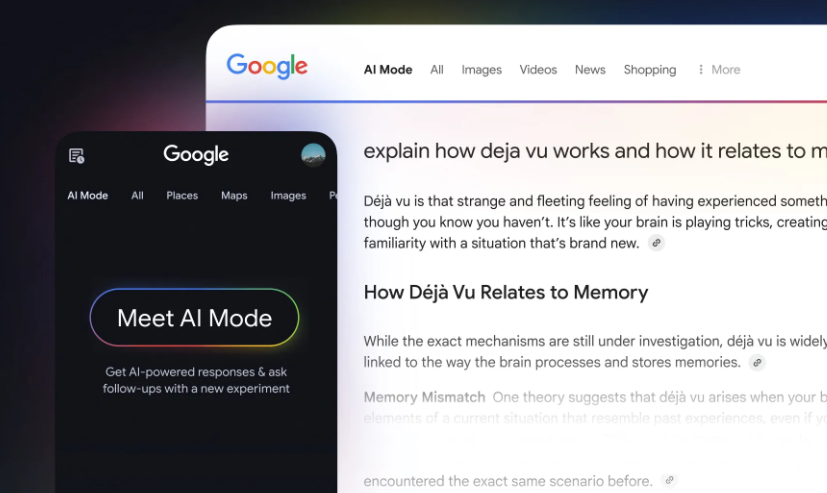

The enhanced search capabilities were announced at Google’s annual developer conference, I/O, held near its Mountain View headquarters. They’re centered on a new “AI Mode” that uses advanced reasoning and multimodality to explore topics in much greater depth than before. It relies on what Google calls a “query fan-out technique,” which breaks a user’s question down into subtopics and issues multiple queries simultaneously on the user’s behalf, enabling a deeper dive into almost any topic.
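Google hasn’t published how query fan-out works internally. As a rough illustration only, the Python sketch below shows the general pattern the description implies: split one question into several sub-queries, then run them concurrently instead of one after another. The `decompose` and `run_subquery` functions are hypothetical placeholders, not Google APIs, and the decomposition is deliberately naive.

```python
import asyncio


async def run_subquery(subquery: str) -> str:
    # Hypothetical stand-in for a real search backend call.
    await asyncio.sleep(0)  # simulate waiting on network I/O
    return f"results for: {subquery}"


def decompose(question: str, subtopics: list[str]) -> list[str]:
    # Naive decomposition (an assumption): pair the question with each subtopic.
    return [f"{question} {topic}" for topic in subtopics]


async def fan_out(question: str, subtopics: list[str]) -> list[str]:
    subqueries = decompose(question, subtopics)
    # Issue every sub-query at once; gather returns results in input order.
    return await asyncio.gather(*(run_subquery(q) for q in subqueries))


if __name__ == "__main__":
    for line in asyncio.run(fan_out("best hiking in Utah", ["trails", "weather", "permits"])):
        print(line)
```

In a real system the interesting work would be in choosing the subtopics and merging the results into one answer; this sketch only shows the concurrent “fan-out” step.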

AI Mode in Search, which was previously available only to a limited number of testers, is now rolling out to all U.S. users with no signup required. Going forward, it will serve as a testing ground for generative AI capabilities in Search, with the best features set to graduate into core capabilities of AI Overviews in Google Search.

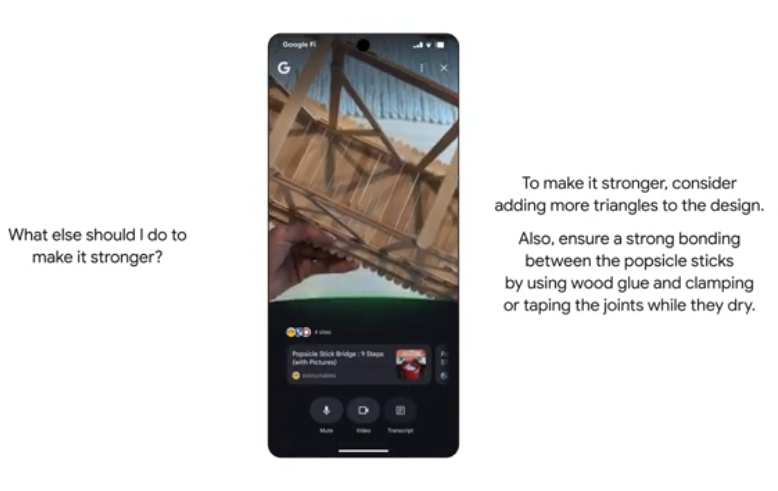

One of the most compelling features in AI Mode is “Search Live,” which will look familiar to anyone who has used Gemini Live, a version of Google’s AI chatbot that lets users share their screen or camera feed to give it more context. Search now gets the same treatment, allowing users to hold a natural back-and-forth conversation with Google Search while using their camera.

For instance, users can point their camera at an object and ask Google what it is, and it will do its best to respond correctly, the company said. They can then ask follow-up questions, or ask about a different object.

In addition, Google Search is going to start taking more actions on people’s behalf, Google said. In AI Mode, if someone enters a query like “find two affordable tickets for this weekend’s Giants game in the lower level,” Google Search will use query fan-out to analyze dozens of websites in real time and find the best ticket prices. It will even handle the tedious form-filling required to purchase the tickets, saving users the time it would take to do it themselves.

Shoppers should also save time with the new AI Mode shopping experience, which combines Gemini’s capabilities with Google’s Shopping Graph to help people browse for products. Someone looking for a dress to wear to a wedding can describe what they have in mind, such as a “flowery yellow dress and a matching hat,” and Google will immediately start surfacing options.

If shoppers see something they like, they can upload a photo of themselves, and Google will use AI image generation to show them how they would look wearing that dress. Once they’ve found the item they want, they can use a new agentic checkout feature that buys it on their behalf. Users can even set it to wait until an item is discounted and then purchase it as soon as the price is right.
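Google didn’t describe how the wait-for-a-discount option is built. A minimal sketch, assuming the agent sees a sequence of observed prices and has some purchase step available: it buys the first time the price falls to or below a target the user sets. `PriceWatch`, `watch_and_buy`, and the `buy` callback are hypothetical names for illustration; a real agent would also need to poll retailers and complete an actual payment flow, which this leaves out.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass
class PriceWatch:
    item: str
    target_price: float  # buy once the price is at or below this value


def watch_and_buy(
    watch: PriceWatch,
    price_feed: Iterable[float],
    buy: Callable[[str, float], None],
) -> Optional[float]:
    # Walk through observed prices in order (hypothetical feed).
    for price in price_feed:
        if price <= watch.target_price:
            # Price is right: trigger the purchase exactly once and stop watching.
            buy(watch.item, price)
            return price
    # The feed ended without the price ever reaching the target.
    return None


if __name__ == "__main__":
    orders = []
    paid = watch_and_buy(
        PriceWatch("flowery yellow dress", 80.0),
        [120.0, 99.0, 79.5, 60.0],
        lambda item, price: orders.append((item, price)),
    )
    print(paid, orders)
```

Passing the purchase step in as a callback keeps the trigger logic separate from any real checkout system, so it can be exercised with made-up prices like the ones above.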

That level of tailoring is where Google is headed with its new personalized suggestions in AI Mode, which draw on the user’s previous searches. The company explained that if someone searches for “things to do in Nashville this weekend with friends, who are big foodies and like music,” AI Mode will generate recommendations tailored to that user. For instance, it might surface restaurants with outdoor seating, taking into account the person’s previous bookings and searches, Google said.

AI Mode can also be linked to other Google apps, such as Gmail and Maps, to bring more context into its results. Google said AI Mode will always make clear when it is using personal context, and users will be able to switch the feature off.

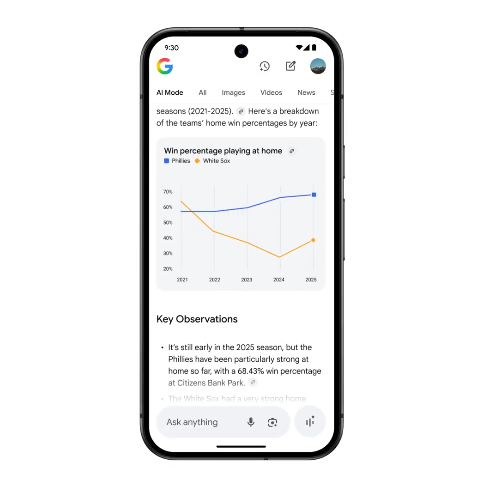

AI Mode is also getting new analytics capabilities to help people crunch numbers and visualize data more easily, Google said. The aim is to bring complex datasets to life through easy-to-digest graphics. For instance, if a baseball fan asks Google to compare the home-field advantage of two teams, the search engine will analyze the data it finds and create an interactive graph that makes it easy to explore the topic in more detail. For now, Google said, this capability is limited to sports and financial queries.

One final search update pertains not to AI Mode but to the original AI Overviews feature it builds on. Google said at I/O that AI Overviews is now available in more than 200 countries and more than 40 languages, meaning many more people around the world can now see its AI-generated answers. AI Overviews will appear in search results when Google deems them helpful, together with prominent links back to the sources behind each response.

The expansion of AI Overviews is hardly a surprise, given Google’s claim that the feature has driven a 10% increase in the number of search queries made by users of its flagship search engine.


Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.