Artificial intelligence has stepped out of the lab and into the center of global business. AI infrastructure, once seen as experimental with uncertain returns, has become the foundation for how, and how well, companies drive results.

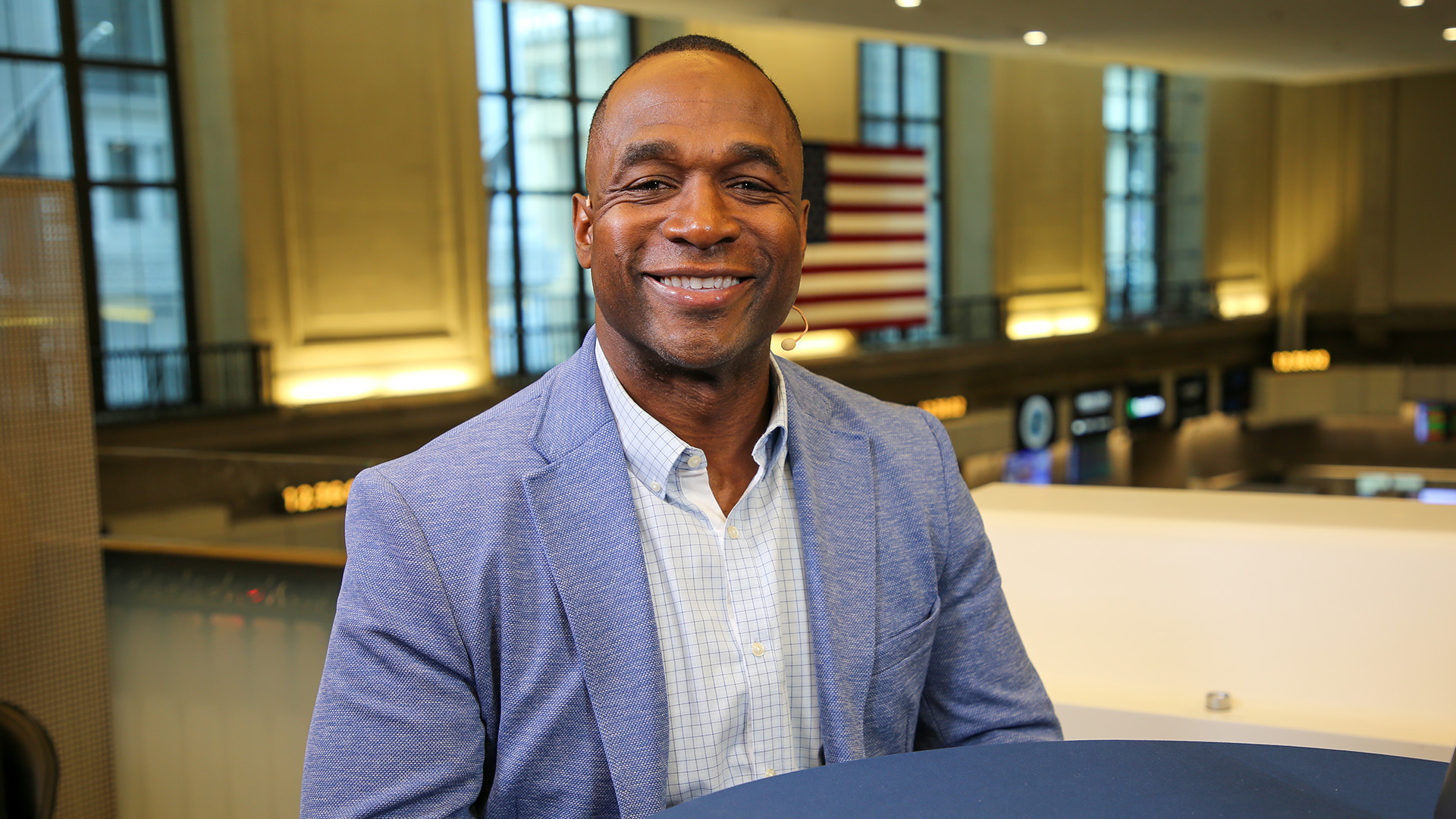

Nvidia’s Dion Harris joins theCUBE to talk about AI infrastructure and the future of data centers.

This marked shift in how AI is perceived has come with huge investments, and for good reason. Once dismissed as resource-eaters, these systems are now emerging as value-generators with global implications, according to Dion Harris (pictured), senior director of HPC, cloud and AI infrastructure solutions at Nvidia Corp.

“These AI factories are revenue centers now,” Harris said. “They’re not just cost centers that are driving efficiency gains and productivity gains. They’re actually driving revenue.”

Harris spoke with theCUBE’s John Furrier at theCUBE + NYSE Wired: AI Factories – Data Centers of the Future event, during an exclusive broadcast on theCUBE, SiliconANGLE Media’s livestreaming studio. They discussed how AI infrastructure is becoming central to enterprise strategy and how data centers are evolving to support both business growth and global-scale challenges.

Viewing AI as a production system is redefining its role in the enterprise. Cue the rise of AI factories: data centers built to produce intelligence rather than just process workloads. Their output is tokens, which are then applied to breakthroughs in medicine, the discovery of new materials and the development of large-scale applications, according to Harris.

“In this case, an AI factory, the output is tokens,” he said. “And these tokens are used to synthesize new drugs, they’re used to discover new materials [and] they’re used to create all sorts of innovative large language models that have different applications.”

But making those outputs useful at scale requires more than raw compute power. Enterprises need tools that simplify deployment, ensure security and make AI accessible within existing operations, Harris explained.

“We recognize that in order to really build these solutions, deliver them to the entire market, Nvidia couldn’t do it alone,” he said. “We really need to make sure that we invest heavily in making sure that the entire ecosystem is ready and prepared for the solutions that we’re building.”

That heavy investment is unlikely to slow anytime soon, as the physical demands of AI infrastructure are reshaping data centers. As workloads expand from training to real-time inference, designs now emphasize efficiency at scale, from liquid cooling to orchestration across compute, networking and storage layers, Harris noted.

“When you get to the inference […] you need to make sure you can understand not just the type of model you’re running, but obviously the profile of it,” he said. “And then you need to do that not for one user, but millions of users.”

The data center's ability to operate at scale is being directed not just at large user bases and even larger returns on investment, but also at global challenges. AI-driven simulations are being applied to weather forecasting, agriculture, logistics and energy management, all in an effort to address crises such as climate change, according to Harris. This intersection of computing and sustainability underscores AI's broader role in tackling the planet's toughest problems.

“Weather is one of those things that is so ubiquitous and wide-reaching in terms of the impact,” he said. “We saw it as a huge problem that we could contribute these technologies to help drive some efficiency.”

Here’s the complete video interview, part of SiliconANGLE’s and theCUBE’s coverage of theCUBE + NYSE Wired: AI Factories – Data Centers of the Future event:
