AI

Artificial intelligence leader Nvidia Corp. on Monday announced the Nemotron-3 family of models, data and tools. The release is further evidence of the company’s commitment to the open ecosystem, with a focus on delivering the highly efficient, accurate and transparent models essential for building sophisticated agentic AI applications.

Nvidia executives, including Chief Executive Jensen Huang, have talked about the important role open source plays in democratizing access to AI models, tools and software, creating a “rising tide” that brings AI to everyone. The announcement underscores Nvidia’s belief that open source is the foundation of AI innovation, driving global collaboration and lowering the barrier to entry for diverse developers.

As large language models achieve reasoning accuracy suitable for enterprise applications, Nvidia used an analyst prebrief to highlight three critical challenges facing businesses today:

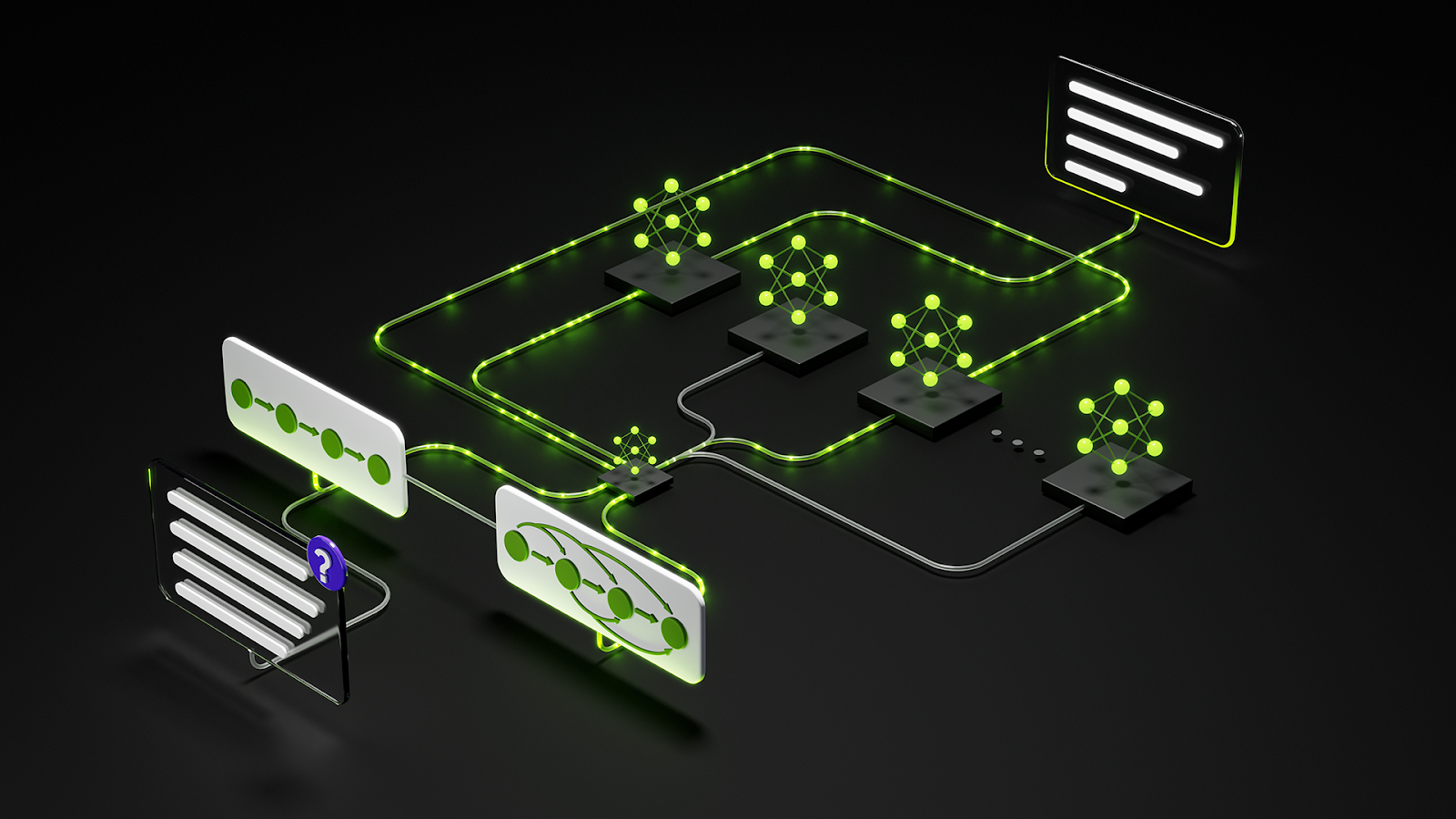

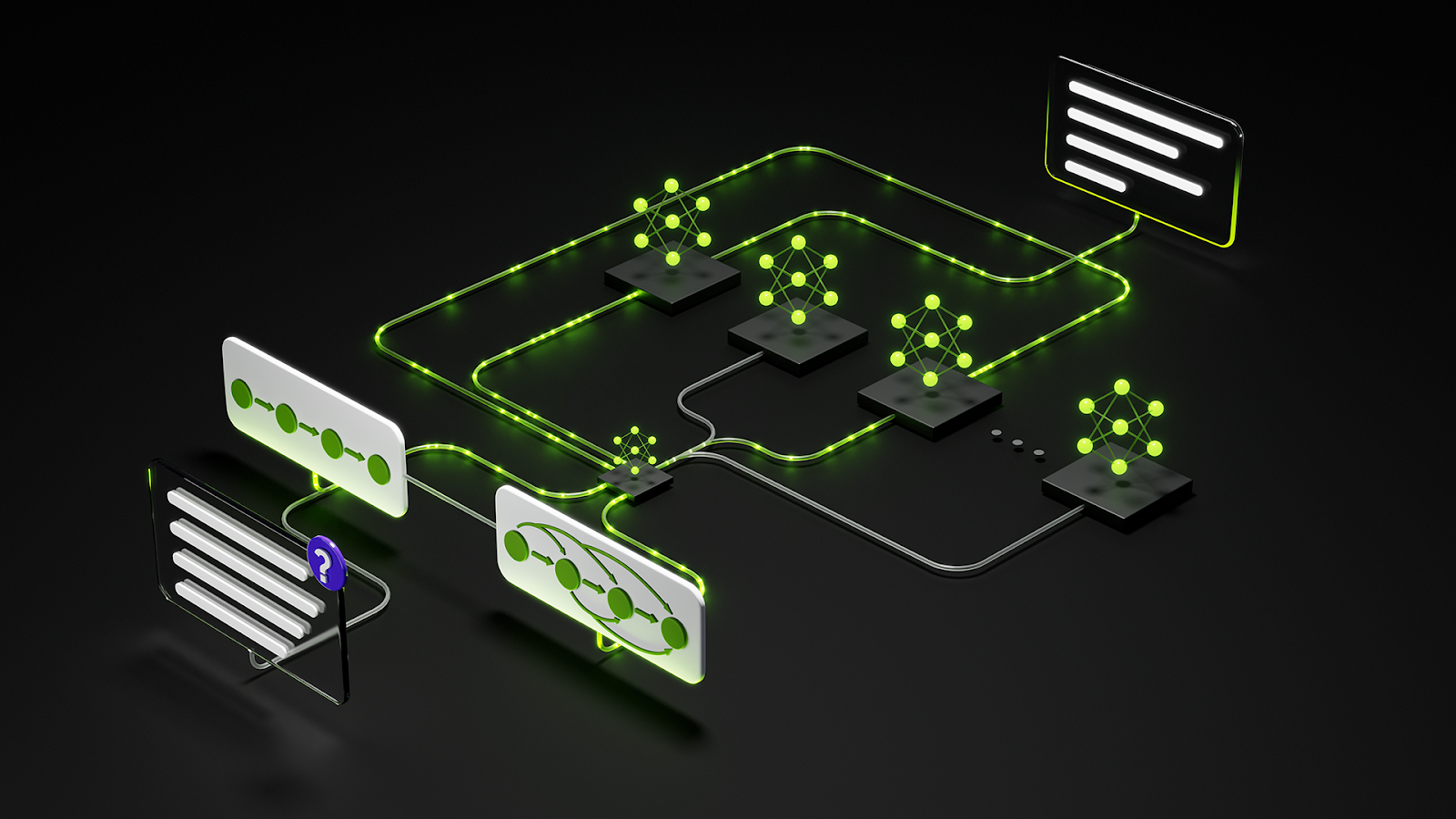

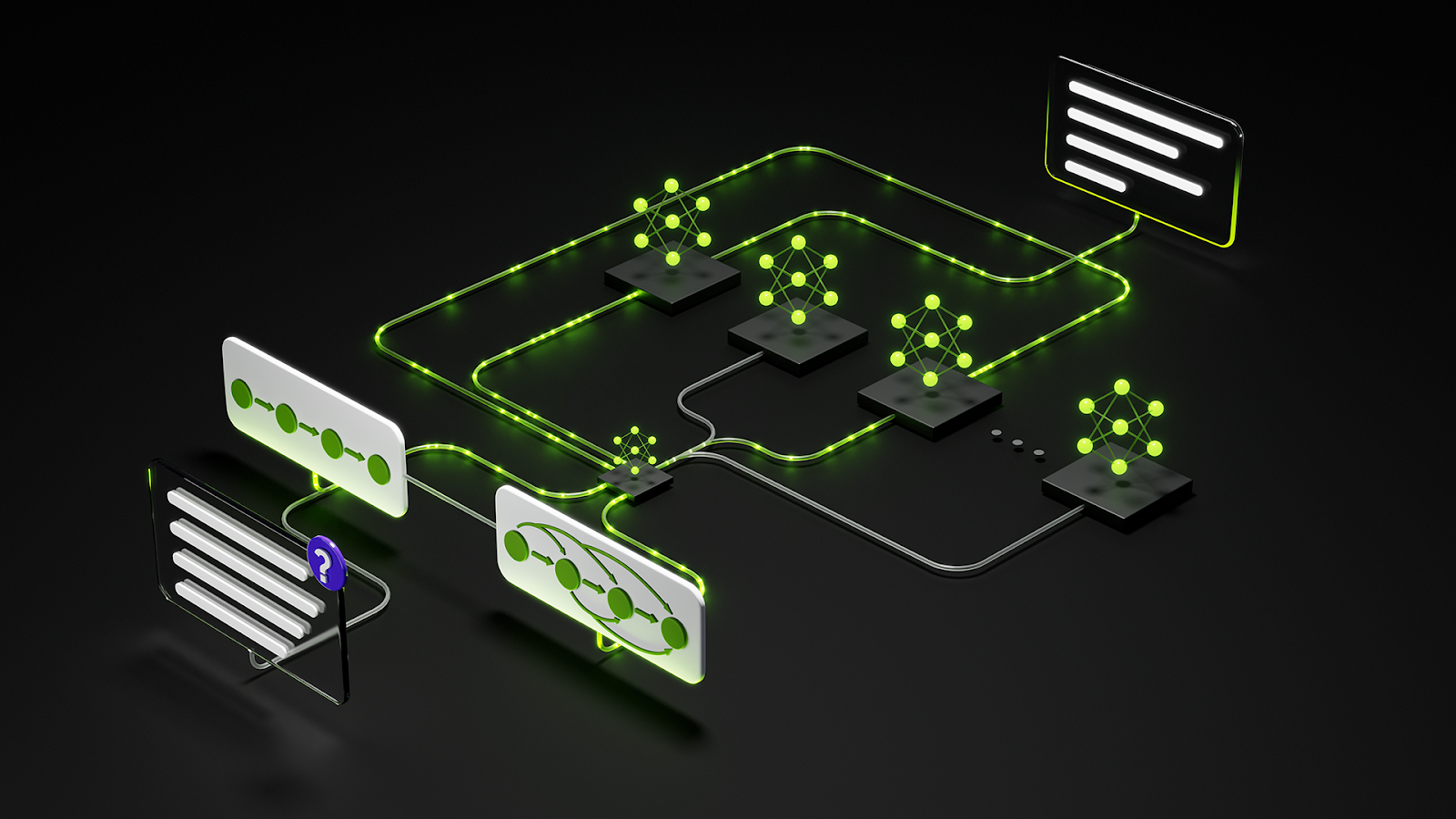

Nvidia’s answer to the above challenges is the Nemotron-3 family, characterized by its focus on being open, accurate and efficient. The new models use a hybrid Mamba-Transformer mixture-of-experts, or MoE, architecture. This design dramatically improves efficiency, as it runs several times faster with reduced memory requirements.
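To give a feel for why a mixture-of-experts design can save compute, here is a minimal sketch of generic top-k expert routing. It is not Nvidia's implementation and says nothing about the Mamba or Transformer layers in Nemotron-3. The function names, the toy experts and the router scores are all hypothetical, chosen only to show that a router picks a few experts per input and the rest are never evaluated.

```python
import math


def softmax(scores):
    """Convert raw router scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are renormalized so the selected experts' weights sum to 1.
    """
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_layer(x, experts, router_scores, k=2):
    """Weighted sum of the selected experts' outputs; unselected experts are skipped."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_scores, k))


# Hypothetical toy experts: each one simply scales its input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]

# Hypothetical router scores for one input; a real router would compute these.
router_scores = [0.1, 2.0, 0.3, 2.0]

selected = route_top_k(router_scores, k=2)
output = moe_layer(1.0, experts, router_scores, k=2)
print(selected, output)
```

In this toy case only two of the four experts run for the input, which is the general reason an MoE model can carry many parameters while activating a fraction of them per token.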

The Nemotron-3 family will be rolled out in three sizes, catering to different compute needs and performance requirements:

Nemotron-3 offers leading accuracy within its class, as evidenced by independent benchmarks from testing firm Artificial Analysis. In one test, Nemotron-3 Nano was shown to be the most open and intelligent model in its tiny, small reasoning class.

Furthermore, the model’s competitive advantage comes from its focus on token efficiency and speed. On the call, Nvidia highlighted Nemotron-3’s tokens-to-intelligence ratio, which is crucial as the demand for tokens from cooperating agents increases. A significant feature of this family is the 1 million-token context length. This massive context window allows the models to perform dense, long-range reasoning at lower cost, enabling them to process full code bases, long technical specifications and multiday conversations within a single pass.

A core component of the Nemotron-3 release is the use of NeMo Gym environments and data sets for reinforcement learning, or RL. This provides the exact tools and infrastructure Nvidia used to train Nemotron-3. The company is the first to release open, state-of-the-art, full reinforcement learning environments alongside the open models, libraries and data, helping developers build more accurate, capable and specialized agents.

The RL framework allows developers to pick up the environment and start generating specialized training data in hours.

The process involves:

This systematic loop enables models to get better at choosing actions that earn higher rewards, like a student improving their skills through repeated, guided practice. Nvidia released 12 Gym environments targeting high-impact tasks like competitive coding, math and practical calendar scheduling.
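The reward-driven loop described above can be illustrated with a very small sketch. This is a generic epsilon-greedy bandit, not the NeMo Gym API and not the method Nvidia used to train Nemotron-3. The `ToyEnvironment` class, the `train` function and the reward probabilities are hypothetical names and numbers invented for illustration.

```python
import random


class ToyEnvironment:
    """Hypothetical stand-in for a task environment that scores an action."""

    def __init__(self, reward_probs, rng):
        self.reward_probs = reward_probs  # chance each action earns a reward
        self.rng = rng

    def step(self, action):
        """Return a reward of 1.0 or 0.0 for the chosen action."""
        return 1.0 if self.rng.random() < self.reward_probs[action] else 0.0


def train(env, n_actions, episodes, epsilon, rng):
    """Act, observe the reward, and update a running value estimate per action."""
    values = [0.0] * n_actions
    counts = [0] * n_actions
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore a random action
        else:
            action = max(range(n_actions), key=lambda a: values[a])  # exploit
        reward = env.step(action)
        counts[action] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        values[action] += (reward - values[action]) / counts[action]
    return values, counts


rng = random.Random(0)
env = ToyEnvironment([0.2, 0.5, 0.9], rng)  # assumed reward probabilities
values, counts = train(env, n_actions=3, episodes=5000, epsilon=0.1, rng=rng)
best_action = max(range(3), key=lambda a: values[a])
print(best_action, counts)
```

Over repeated episodes the learner shifts most of its choices toward the action that pays off most often, which is the same practice-and-feedback idea at a much smaller scale than training a language model in a full environment.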

The Nemotron release is backed by a substantial commitment across three areas:

Nvidia is releasing the actual code used to train Nemotron-3, ensuring full transparency. This includes the Nemotron-3 research paper detailing techniques like synthetic data generation and RL.

Nvidia researchers continue to push the boundaries of AI, with notable research including:

Nvidia is shifting the data narrative from big data to smarter data curation and higher data quality. To accomplish this, the company is releasing several new data sets:

Nvidia is providing reference blueprints to accelerate adoption, integrating Nemotron-3 models and acceleration libraries:

The Nemotron ecosystem is broad, with day-zero support for Nemotron-3 on platforms such as Amazon Bedrock. Key partners such as CrowdStrike Holdings Inc. and ServiceNow Inc. are actively using Nemotron data and tools, with ServiceNow noting that 15% of the pretraining data for their Apriel 1.6 Thinker model came from an Nvidia Nemotron data set.

The industry is winding down the hype phase of AI, and we should start to see more production use cases. The Nemotron-3 family is well-suited for this era, as it provides a performant and efficient open-source foundation for developing the next generation of agentic AI, reinforcing Nvidia’s deep commitment to democratizing AI innovation.

Zeus Kerravala is a principal analyst at ZK Research, a division of Kerravala Consulting. He wrote this article for SiliconANGLE.
