EMERGING TECH

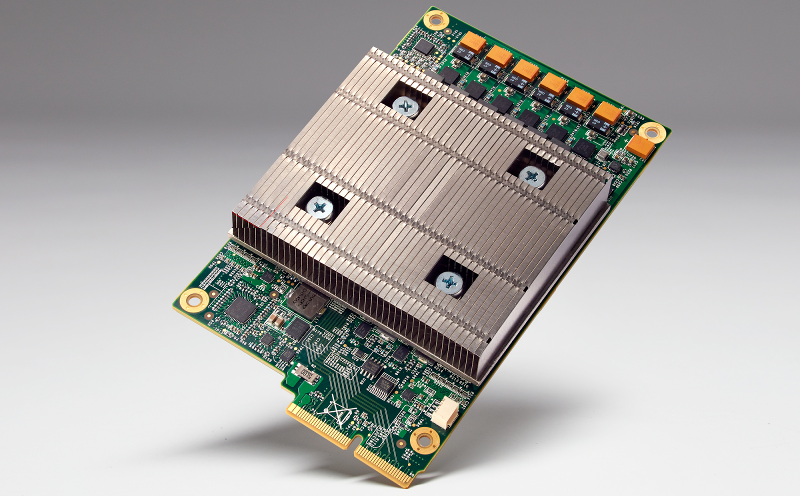

Google Inc. made headlines at its I/O developer event last May after revealing the existence of an internally developed chip (pictured) for running artificial intelligence workloads. Nearly a year later, the search giant is finally opening up about how the processor matches up against commercially available alternatives.

Members of Google’s hardware team released a paper today that claims the chip beats central processing units and graphics processing units in its weight class on several key fronts. One of them is power consumption, which is a major economic factor for a company that operates as much hardware as the search giant does. Its engineers highlight that the Tensor Processing Unit, as the chip is called, can provide 30 to 80 times more horsepower per watt than a comparable Intel Corp. Haswell CPU or Nvidia Inc.’s Tesla K80 GPU.

Google’s TPU leads in overall speed as well. Internal tests have shown that the chip can consistently provide 15 to 30 times better performance than commercial alternatives when handling AI workloads. One of the models that Google used during the trials, which the paper refers to only as CNN1, ran 70 times faster.

The company’s engineers have managed to pack all this horsepower into a chip that is smaller than Nvidia’s K80. It’s housed on a board configured to fit into the hard drive slots on the likewise custom-made server racks that Google employs in its data centers. According to the search giant, more than 100 internal teams are using TPUs to power support for Street View and the voice recognition features of other key services.

Google is one of several web-scale giants that use silicon optimized for their specific requirements. The group also includes Microsoft Corp. and Amazon.com Inc., which employ custom processors commissioned from Intel to power their public clouds. Moreover, the retail giant has a subsidiary called Annapurna Labs that sells specialized chips designed for use in networking equipment.

It’s worth mentioning that Google’s TPUs are built for running AI models that have already been trained, a task known as “inference.” As Patrick Moorhead, president and principal analyst at Moor Insights & Strategy, pointed out, the training of machine learning models is still carried out on vast server farms using CPUs and GPUs.
